The field of information retrieval deals with locating information within documents, searching online databases, and searching the internet. The World Wide Web (WWW) is built on a client-server architecture that allows websites to be accessed. This extremely powerful system gives each server complete autonomy over the information it serves to internet users. The information is presented as hypertext, a large, distributed, non-linear text format for arranging information.

Hence, a web crawler is a vital information retrieval system that traverses the Web and downloads the web documents that best meet the user’s needs. A crawler is a program that retrieves web pages from the Internet and inserts them into a local repository. The purpose of these crawlers is to create a replica of all the pages they have visited. A search engine later processes and indexes the downloaded pages so that they can be accessed more quickly.

What Is A Web Crawler?

Crawlers are software programs or scripts that systematically and automatically browse the World Wide Web. A web page contains hyperlinks that open other web pages, which gives the WWW the structure of a graph: pages are the nodes and hyperlinks are the edges.

To move from page to page, a web crawler takes advantage of this graph structure. Crawlers are also known as robots, spiders, and worms. They are designed to retrieve web pages from the World Wide Web and insert them into local repositories.

A crawler creates a copy of every page it visits. A search engine then processes these copies and indexes the downloaded pages to support quick searches, storing information about each web page it retrieves from the World Wide Web. The crawler itself acts like an automated web browser that follows every link it sees on the Web.

How Do Web Spiders Work?

From a conceptual standpoint, web crawler algorithms are extremely simple and straightforward. Web crawlers identify URLs (hyperlinks), download their associated web pages, extract the URLs (hyperlinks) from those pages, and add the URLs that have never been encountered before to the list of pages to visit. This is how crawlers find your internal links and identify your referring domains (i.e., pages linking to you). That’s why having a good internal link structure and choosing external websites for backlinks wisely is so important. It’ll help you improve your indexability, crawlability, and domain authority. With a high-level scripting language such as Perl, it is possible to implement a simple yet effective web crawler in just a few lines of code.
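The extract-and-filter step described above can be sketched in a few lines. The article mentions Perl; this sketch uses Python's standard library instead. The class name `LinkExtractor`, the helper `extract_links`, and the sample page are hypothetical and exist only for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content, used only for illustration.
page = '<a href="/about">About</a> <a href="https://example.org/">Other</a>'
seen = {"https://example.com/"}   # URLs encountered so far
to_visit = []                     # URLs that still need to be downloaded
for url in extract_links("https://example.com/", page):
    if url not in seen:           # keep only never-before-seen URLs
        seen.add(url)
        to_visit.append(url)
print(to_visit)
```

Resolving every link to an absolute URL before comparing it with `seen` is what lets the crawler recognize the same page reached through different relative paths.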

There is no doubt that the amount of information available on the web has increased thanks to the digital revolution. Global data generation is expected to grow to more than 180 zettabytes by 2025. According to IDC, 80% of the information on the planet will be unstructured by 2025.

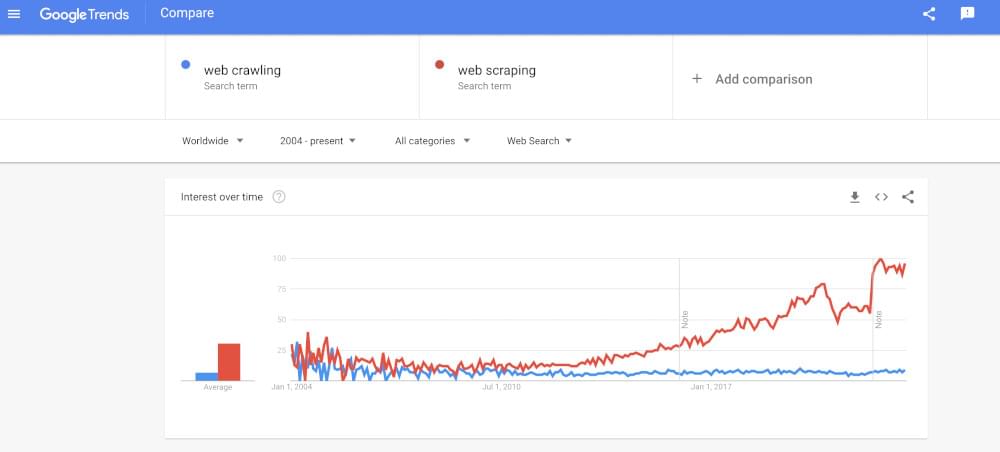

According to Google Trends, interest in web crawlers has decreased significantly since 2004. At the same time, interest in web scraping has outpaced interest in web crawling over the past few years. This can be interpreted in several ways, for example:

- Since the search engine industry is mature and dominated by Google and Baidu, many companies don’t need to build crawlers.

- Companies are investing in scraping because they are gaining an increasing interest in analytics and in making data-driven decisions.

- Search engines have been crawling the Internet since the early 2000s, so their crawling is no longer a topic of growing interest.

How To Set Up A Web Crawler?

As a first step, a web crawler starts with a set of initial URLs, known as seed URLs. The crawler downloads the web pages for the seed URLs and extracts the new links contained in the downloaded pages. The retrieved web pages are stored and indexed in the storage area so that they can be retrieved later as and when required.

Each URL extracted from a downloaded page is checked to confirm whether its document has already been downloaded. URLs that have not been downloaded yet are assigned back to the crawler for downloading.

This process is repeated until there are no more URLs left to download. A crawler may aim to download millions of pages from its target sites every day. A figure that illustrates the processes involved in crawling can be found below.
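As a minimal sketch of this "already downloaded?" check, the snippet below keeps a set of downloaded URLs and filters newly extracted links against it. Dropping the `#fragment` before comparing is an assumption about what should count as the same document, and the function names `normalize` and `filter_new` are hypothetical.

```python
from urllib.parse import urldefrag


def normalize(url):
    """Drop the #fragment so two links to the same document compare equal."""
    return urldefrag(url).url


def filter_new(extracted_urls, downloaded):
    """Return the extracted URLs that are not downloaded yet, without repeats."""
    new_urls = []
    queued = set()
    for url in extracted_urls:
        key = normalize(url)
        if key not in downloaded and key not in queued:
            queued.add(key)
            new_urls.append(key)
    return new_urls


# Hypothetical state: one page already downloaded, three links just extracted.
downloaded = {"https://example.com/"}
extracted = [
    "https://example.com/#top",
    "https://example.com/pricing",
    "https://example.com/pricing#plans",
]
print(filter_new(extracted, downloaded))
```

Only the URLs returned by `filter_new` would be handed back to the crawler for downloading.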

Working Of A Web Crawler

The working of a web crawler can be described as follows:

- Select the seed URL or URLs to use as a starting point.

- Add them to the frontier, the list of URLs waiting to be visited.

- Pick a URL from the frontier.

- Fetch the web page corresponding to that URL.

- Parse the web page to extract new URL links.

- Add all newly discovered URLs to the frontier.

- Repeat from the third step (picking a URL from the frontier) until the frontier is empty.
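The loop described in this list can be sketched as follows. To keep the example runnable without network access, an in-memory dictionary (`FAKE_WEB`) stands in for real HTTP responses, and links are pulled out with a simple regular expression. Both are simplifying assumptions, as is the `max_pages` safety limit; a real crawler would fetch over HTTP and use a proper HTML parser.

```python
import re
from collections import deque
from urllib.parse import urljoin

# Hypothetical three-page site standing in for real HTTP responses.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}

HREF = re.compile(r'href="([^"]+)"')  # simplification; real HTML needs a parser


def crawl(seeds, fetch, max_pages=100):
    frontier = deque(seeds)   # URLs waiting to be fetched
    seen = set(seeds)         # every URL ever added to the frontier
    repository = {}           # url -> downloaded page
    while frontier and len(repository) < max_pages:
        url = frontier.popleft()          # pick a URL from the frontier
        html = fetch(url)                 # fetch the corresponding page
        if html is None:                  # page could not be retrieved
            continue
        repository[url] = html
        for href in HREF.findall(html):   # parse the page for new links
            link = urljoin(url, href)
            if link not in seen:          # add only newly discovered URLs
                seen.add(link)
                frontier.append(link)
    return repository


pages = crawl(["https://example.com/"], FAKE_WEB.get)
print(list(pages))
```

Using a queue for the frontier gives a breadth-first traversal; the `seen` set is what guarantees the loop ends even though the sample pages link back to each other.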

Web Crawler Use Cases

Data insights play a significant role in the industries that use web crawling and scraping. Media and entertainment, eCommerce, and retail companies have all realized the importance of data insights for business growth. Yet, they remain skeptical about how data can be gathered and acquired online.

The use cases we cater to most frequently are compiled below as an introduction to working with structured data.

1. Market Research

The importance of market research for any business cannot be overstated. To gain an edge in the market, market researchers use data scraping to track market trends, support research and development, and analyze prices. Besides providing essential market research information, web scraping software gives details about your competitors and their products.

Using web scrapers to extract accurate, real-time data from such a vast amount of information is much easier than searching manually. Last but not least, web scraping makes data collection easy and cost-effective.

2. Lead Generation

Customer relationships are the lifeblood of any business. To grow, you should strive to add more potential customers to your business. Scraping the Web to generate leads is a necessity in almost every industry. Lead generation via web scraping helps companies find the best and most qualified leads at scale.

3. Competitive Intelligence

Market research also includes competitive intelligence, which relies on collecting and analyzing data. Keeping an eye on the market and your competitors’ activities will help you discover trends and business opportunities.

Businesses can get data from multiple websites quickly and easily with a web scraping tool, which is the easiest way to collect and compile such data. To gather competitive data, users should learn how to scrape websites for information such as real-time prices, product updates, customer information, reviews, and feedback.

4. Pricing Comparison

With increased competition among marketers, businesses need to monitor their competitors’ pricing strategies. Consumers are always looking for the best deal at the lowest price. These factors motivate firms to compare product prices, including sales and discounts.

Using web scraping and data mining to extract data points from multiple websites and online stores plays a crucial role in business and marketing decision-making.

5. Sentiment Analysis

Consumers’ perceptions of services, products, or brands are crucial to businesses. For businesses to thrive, measuring customer sentiment is vital. Customer feedback and reviews help businesses understand what needs to be improved in their products or services.

Reviews are available on many sites that aggregate software reviews, and using web scraping to collect marketplace reviews for sentiment analysis helps businesses understand customer needs and preferences.

Examples Of Web Crawlers

Googlebot is the most well-known crawler, but many other search engines employ their own crawlers. The following are some examples:

- Crawlbase

- Bingbot

- DuckDuckBot

- Baiduspider (Baidu)

- YandexBot (Yandex)

What Is The Primary Purpose Of A Web Crawler?

A web crawler (or web spider) is an automated program that searches the internet in a systematic, logical way. Crawling may be used by a cache to speed up the loading of a recently visited webpage, or by a search engine bot to know what to retrieve when a user searches. Search engines almost always rely on a bot to provide relevant links in response to user searches. Google, Bing, Yahoo, etc., will display a list of web pages based on the query entered by the user.

Using a web spider bot is similar to going through an unorganized library and compiling a card catalog so that others can find relevant information quickly. To categorize the books, the organizer reads each book’s title, summary, and a bit of its content. Web crawlers work similarly but in a more complex way: the bot follows hyperlinks from one page to the next, and then follows the hyperlinks on those pages to further pages.

It is unknown how much of the publicly available web search engines actually crawl. With some 1.2 million pieces of content published daily, some sources estimate that 70 percent of the internet is indexed.

What Is A Web Crawler Used For?

Crawlers, sometimes called spiders or spider bots, are Internet bots that systematically browse the Web and are usually operated by search engines for web indexing. Most search engines and websites use web crawling software to keep their indexes of web content up to date. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently.
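To illustrate why indexing downloaded pages makes searching faster, here is a minimal sketch of an inverted index, a common structure that maps each word to the pages containing it. This is an illustrative assumption, not a description of how any particular search engine works; the functions `build_index` and `search` and the sample pages are hypothetical.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query, sorted."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return []
    results = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(results)


# Hypothetical downloaded pages (URL -> extracted text).
pages = {
    "https://example.com/a": "Web crawlers download pages",
    "https://example.com/b": "Search engines index pages",
}
index = build_index(pages)
print(search(index, "index pages"))
```

Because the index is built once after crawling, answering a query only requires looking up a few words rather than rescanning every downloaded page.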

Concluding Remarks

Crawlers are an essential part of any marketing or SEO campaign on the Web. Content wouldn’t be found quickly without them. Even though they are technically quite complex, modern web crawlers like Crawlbase are so user-friendly that anyone can use them.

Whether you’re an online retailer or a brand distributor, site crawls provide valuable data. Companies use this data to gain insights that help them develop good strategies. The result is better offers, more competitiveness, improved market understanding, and better business decisions. Crawling is a complex process, but the right tool makes it easy.