One of the most powerful web data collection techniques is web crawling, which involves finding all the URLs for one or more domains. Python has several popular libraries and frameworks for this task. We will first introduce different web crawling techniques and use cases, then show simple web crawling with Python using requests, Beautiful Soup, and Scrapy. Finally, we’ll see why it can be better to use a web crawling framework like Crawlbase.

A web crawler, also known as a web spider or web robot, automatically searches the Internet for content. The term is associated with WebCrawler, one of the earliest web search engines, and search engine bots remain the best-known crawlers. Search engines use these bots to index the contents of web pages all over the Internet so that the pages can appear in search engine results.

Web crawlers collect data, including a website’s URL, meta tag information, web page content, page links, and the destinations of those links. They keep a record of previously downloaded URLs to avoid downloading the same page repeatedly. They can also check for errors in HTML code and hyperlinks.

Web crawling searches websites for information and retrieves documents to create a searchable index. The crawl begins on a website page and proceeds through the links towards other sites until all of them have been scanned.
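The crawl loop described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production crawler: the names `LinkExtractor`, `crawl`, and `fetch` are made up for this example, and `fetch` is assumed to be a caller-supplied function that returns a page's HTML (or `None` on failure), so the download strategy stays separate from the crawl logic.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl that stays on the start URL's domain.

    `fetch` (hypothetical, caller-supplied) takes a URL and returns the
    page's HTML as a string, or None if the page could not be downloaded.
    """
    domain = urlparse(start_url).netloc
    seen = {start_url}        # URLs already queued, so no page is fetched twice
    queue = deque([start_url])
    visited = []              # pages fetched successfully, in crawl order

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)

        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop any #fragment.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == domain and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

The `seen` set plays the role of the record of previously downloaded URLs, and the `max_pages` cap keeps the sketch from running unbounded on a large site.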

Crawlers can automate tasks such as:

• Archiving old copies of websites as static HTML files.

• Extracting and displaying content from websites in spreadsheets.

• Identifying broken links and the pages that contain them so that they can be fixed.

• Comparing old and modern versions of websites.

• Extracting information from page meta tags, body content, headlines, and image alt text.

Automated Web Crawling Techniques

Automated web crawling techniques involve using software to automatically gather data from online sources. These highly efficient methods can be scaled up to handle large-scale web scraping tasks.

1: Web Scraping Libraries

Web scraping libraries are software packages that offer ready-made functions and tools for web scraping tasks. These libraries make the process of navigating web pages, parsing HTML data, and locating elements to extract much simpler. Below are some examples of popular web scraping libraries:

- Beautiful Soup: Specifically designed for parsing and extracting data from HTML and XML documents. Beautiful Soup is a useful tool for static websites that do not require JavaScript to load.

- Scrapy: Provides a framework for building web scrapers and crawlers. It is a great choice for complex web scraping tasks that involve logging in or dealing with cookies.

- Puppeteer: A Node.js library that controls a headless Chrome or Chromium browser and can be used to scrape dynamic web pages.

- Cheerio: A Node.js HTML parsing library that is well-suited for scraping static web pages, as it does not execute JavaScript.

- Selenium: Automates web interactions and retrieves data from dynamic sites. Selenium is an ideal browser automation framework for websites that require user interaction, such as clicking buttons, filling out forms, and scrolling the page.

2: Web Scraping Tools

A web scraping tool is a program or software that collects data from various internet sources automatically. Depending on your organization’s specific needs, available resources, and technical proficiency, you have the option to use either an in-house or outsourced web scraper.

In-house web scrapers offer the advantage of customization, allowing users to tailor the web crawler to their specific data collection requirements. However, developing an in-house web scraping tool may need technical expertise and resources, including time and effort for maintenance.

3: Web Scraping APIs

Web scraping APIs let developers retrieve and extract relevant information from websites programmatically. Many popular platforms, including Twitter, Amazon, and Facebook, offer APIs for accessing their data. However, a website may not expose the specific data you need through an API, in which case a web scraping service is needed to gather it. When the desired data is available through an API and the volume needed falls within the API’s limits, using the API can be more economical than web scraping.

4: Headless Browsers

Headless browsers run without a graphical user interface and are typically driven by automation tools such as Puppeteer or Selenium (or, historically, the now-discontinued PhantomJS). This mode of operation makes them well suited to scraping interactive and dynamic websites that build their content with client-side scripting. Using a headless browser, a web crawler can access and extract data that is rendered by JavaScript and is therefore absent from the page’s initial HTML source.

One of the key advantages of using a headless browser is its ability to interact with dynamic page elements like buttons and drop-down menus. This feature allows for a more comprehensive data collection process.

Here are the general steps involved in data extraction with a headless browser:

- Set up the headless browser: Choose the appropriate headless browser for your web scraping project and configure it on your server. Each headless browser has its own specific setup requirements, which may depend on factors such as the target website or the programming language being used. It is important to select a headless browser that supports JavaScript and other client-side scripting languages to scrape dynamic web pages effectively.

- Install the necessary libraries: Install a language runtime, such as Python or Node.js, along with the libraries that let you control the browser and parse and extract the desired data.

- Maintain web scraping tools: Dynamic websites often undergo frequent changes. As a result, it is crucial to regularly update and maintain your web scraping tools to ensure they remain effective. Changes to the underlying HTML code of the website may require adjustments to the scraping process in order to continue extracting accurate and relevant data.

A headless browser is a powerful tool for crawling dynamic and interactive websites. By following the outlined steps and keeping your web scraping tools up to date, you can obtain valuable information that may not be easily accessible through traditional means.

5: HTML Parsing

HTML parsing is a data collection technique commonly used to automatically extract data from HTML code. If you want to collect web data through HTML parsing, follow these steps:

- Inspect the HTML code of the target page: Use the developer tools in your browser to examine the HTML code of the web page you wish to scrape. This will allow you to understand the structure of the HTML code and identify the specific elements you want to extract, such as text, images, or links.

- Select a parser: When choosing a parser, consider factors like the programming language being used and the complexity of the website’s HTML structure. The parser you choose should be compatible with the programming language you are using for web scraping. Here are some popular parsers for different programming languages:

- Beautiful Soup and lxml for Python

- Jsoup for Java

- HtmlAgilityPack for C#

- Parse the HTML: This involves reading and interpreting the HTML code of the target web page to extract the desired data elements.

- Extract the data: Use the selected parser to collect the specific data elements you need.

Following these steps, you can extract data from HTML code using HTML parsing techniques.
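As a rough illustration of the parse and extract steps, the sketch below uses Python's built-in `html.parser` module so that it needs no extra installation; Beautiful Soup or lxml would follow the same overall flow. The class and function names (`PageDataParser`, `extract_page_data`) and the choice of which elements to collect are assumptions made for this example.

```python
from html.parser import HTMLParser


class PageDataParser(HTMLParser):
    """Pulls the title, named meta tags, headlines, and image alt text out of HTML."""

    HEADLINE_TAGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self.headlines = []
        self.alt_texts = []
        self._current = None   # tag whose text is currently being collected
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            self.meta[attrs["name"]] = attrs["content"]
        elif tag == "img" and attrs.get("alt"):
            self.alt_texts.append(attrs["alt"])
        elif tag == "title" or tag in self.HEADLINE_TAGS:
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em>) is collected too.
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = "".join(self._buffer).strip()
            if tag == "title":
                self.title = text
            else:
                self.headlines.append(text)
            self._current = None


def extract_page_data(html):
    """Parses an HTML string and returns the collected fields as a dict."""
    parser = PageDataParser()
    parser.feed(html)
    return {
        "title": parser.title,
        "meta": parser.meta,
        "headlines": parser.headlines,
        "alt_texts": parser.alt_texts,
    }
```

Calling `extract_page_data(html)` on a downloaded page returns a plain dictionary that can be written to a spreadsheet or database.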

6: DOM Parsing

DOM parsing enables the parsing of HTML or XML documents into their respective Document Object Model (DOM) representations. The DOM Parser is a component of the W3C standard and offers various methods for traversing the DOM tree and extracting specific information, like text content or attributes.
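To make the idea concrete, here is a small sketch using `xml.dom.minidom`, the minimal DOM implementation in Python's standard library. It expects well-formed XML (it is not a lenient HTML parser), and the catalog document, tag names, and helper names are invented for this example.

```python
from xml.dom import minidom

# Made-up sample document for illustration.
XML = """<catalog>
  <product sku="A1"><name>Keyboard</name><price currency="USD">49.90</price></product>
  <product sku="B2"><name>Mouse</name><price currency="USD">19.50</price></product>
</catalog>"""


def text_of(node):
    """Concatenates the text nodes directly under `node`."""
    return "".join(
        child.data for child in node.childNodes if child.nodeType == child.TEXT_NODE
    )


def read_products(xml_text):
    """Walks the DOM tree and returns one dict per <product> element."""
    dom = minidom.parseString(xml_text)
    products = []
    for product in dom.getElementsByTagName("product"):
        name = product.getElementsByTagName("name")[0]
        price = product.getElementsByTagName("price")[0]
        products.append({
            "sku": product.getAttribute("sku"),          # attribute access
            "name": text_of(name),                       # text content
            "price": float(text_of(price)),
            "currency": price.getAttribute("currency"),
        })
    return products


print(read_products(XML))
```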

Use Cases for Web Crawling

Monitoring of Competitor Prices

Retailers and other businesses can use advanced web crawling techniques to gain a more comprehensive understanding of how specific consumer groups respond to their pricing tactics and to their competitors’ pricing strategies. By acting on this information, they can better align pricing and promotions with market and customer objectives.

Monitoring the Product Catalogue

Businesses can also use web crawling to collect product catalogues and listings. Brands can address customer issues and fulfil their needs regarding product specifications, accuracy, and design by monitoring and analysing large volumes of product data available on various sites. This can help firms better target their audiences with individualised solutions, resulting in higher customer satisfaction.

Social media and news monitoring

A web crawler can track what’s being said about you and your competitors on news sites, social media sites, forums, and elsewhere, and it can process these mentions far faster than a person could. Your marketing team can use this data to monitor your brand image through sentiment analysis, which helps you understand your customers’ impressions of you and how you compare to your competition.

How to Crawl a Website Using the Python Library Beautiful Soup

Beautiful Soup is a popular Python library that parses HTML or XML documents into a tree structure so that data can be found and extracted through a simple interface.

It provides basic methods and Pythonic idioms for traversing, searching, and modifying the parse tree, and it automatically converts incoming documents to Unicode and outgoing documents to UTF-8.

Installing Beautiful Soup 4

```shell
pip install beautifulsoup4
```

Installing Third-party libraries

```shell
pip install requests
```

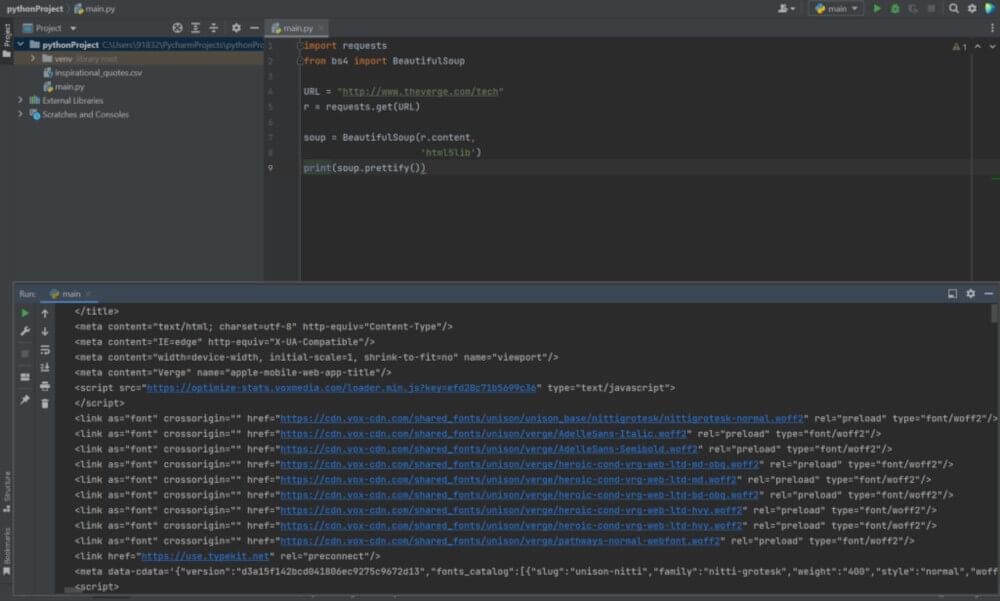

Accessing the HTML content from the webpage

```python
import requests
```
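A minimal sketch of this step might look like the following. The function name `fetch_html`, the `User-Agent` string, and the optional `session` parameter are assumptions made for this example; only `requests` calls that exist in the library (`Session.get`, `raise_for_status`, `.text`) are used.

```python
import requests


def fetch_html(url, session=None, timeout=10):
    """Downloads a page and returns its HTML as text."""
    http = session if session is not None else requests.Session()
    response = http.get(
        url,
        headers={"User-Agent": "example-crawler/0.1"},  # hypothetical identifier
        timeout=timeout,                                 # avoid hanging forever
    )
    # Raise an exception on 4xx/5xx instead of returning an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_html("https://example.com")` returns the page source as a string, which can then be handed to a parser.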

Parsing the HTML content

```python
import requests
```
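One way the parsing step could look is sketched below. To keep the example self-contained, it parses a made-up HTML string rather than a live page; in practice the string would come from the download step. The sample markup and the `product` class name are invented for illustration.

```python
from bs4 import BeautifulSoup

# Stand-in for HTML downloaded in the previous step (made-up content).
html = """
<html>
  <head><title>Sample Store</title></head>
  <body>
    <h1>Featured products</h1>
    <ul>
      <li><a class="product" href="/items/1">Keyboard</a></li>
      <li><a class="product" href="/items/2">Mouse</a></li>
      <li><a href="/about">About us</a></li>
    </ul>
  </body>
</html>
"""

# "html.parser" is Python's built-in parser, so no extra install is needed.
soup = BeautifulSoup(html, "html.parser")

title = soup.title.get_text(strip=True)
heading = soup.find("h1").get_text(strip=True)

# CSS selector: every <a> element with class "product".
products = [
    {"name": a.get_text(strip=True), "url": a["href"]}
    for a in soup.select("a.product")
]

# Every link on the page that has an href attribute.
all_links = [a["href"] for a in soup.find_all("a", href=True)]

print(title, heading, products, all_links)
```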

How to Crawl a Website with Python Using Scrapy

Scrapy is a Python framework for large-scale web crawling. It provides all of the features you need to extract data from websites easily, analyse it as needed, and save it in the structure and format of your choice.

Current Scrapy releases require Python 3 (Python 2 support was dropped in Scrapy 2.0). If you’re using Anaconda, you can install the package from the conda-forge channel, which has up-to-date packages for Linux, Windows, and macOS.

To install Scrapy using conda, run:

```shell
conda install -c conda-forge scrapy
```

If you’re using Linux or macOS, you can also install Scrapy with pip:

```shell
pip install scrapy
```

To try the crawler interactively, start the Scrapy shell by running `scrapy shell` in your terminal, then enter:

```python
fetch("https://www.reddit.com")
```

When you crawl a page, Scrapy produces a “response” object containing the downloaded data. Let’s look at what the crawler retrieved:

```python
view(response)
```

How to Crawl a Website with Python Using Crawlbase

Crawling the web can be difficult and frustrating because some websites block your requests or even ban your IP address. A simple crawler written in Python may not be sufficient without proxies. To crawl relevant data reliably, you can use the Crawlbase Crawling API, which lets you scrape most web pages without dealing with banned requests or CAPTCHAs.

Let’s demonstrate how to use Crawlbase Crawling API to create your crawling tool.

Our basic scraping tool requires a Crawlbase account with its token, Python, and the Crawlbase Python library.

Take note of your Crawlbase token, which will be the authentication key when using the Crawling API. Let’s begin by downloading and installing the library we’ll use for this project. On your console, type the following command:

```shell
pip install crawlbase
```

The next step is to import the Crawlbase API:

```python
from crawlbase import CrawlingAPI
```

Next, initialise the API with your authentication token:

```python
api = CrawlingAPI({'token': 'USER_TOKEN'})
```

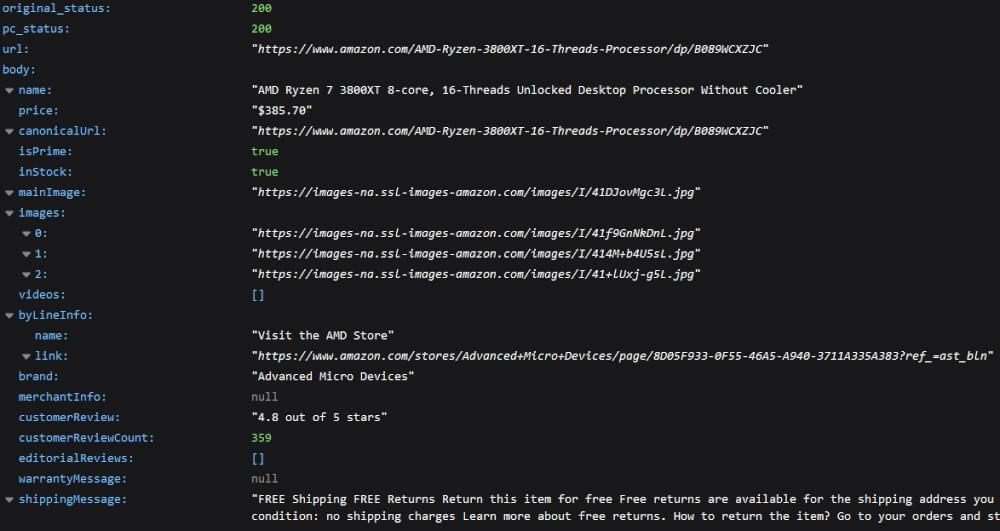

Set the target URL to the page you wish to crawl. We’ll use Amazon as an example in this demonstration.

```python
targetURL = 'https://www.amazon.com/AMD-Ryzen-3800XT-16-Threads-Processor/dp/B089WCXZJC'
```

The following section of our code downloads the URL’s full HTML source and, if successful, shows the result on your console or terminal:

```python
response = api.get(targetURL)
```

We’ve now built a crawler. Crawlbase returns a status for every request it receives. If the status is 200 (successful), our code shows the crawled HTML. Any other status, such as 503 or 404, indicates that the crawl was unsuccessful. The API routes requests through thousands of proxies around the world to reduce the chance of a request being blocked.
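The status check described here could be wrapped in a small helper like the sketch below. It assumes that the client's `get(url, options)` call returns a dictionary with `status_code` and `body` keys, which is how the Crawlbase Python library is understood to behave; treat that shape, and the helper name `crawl_page`, as assumptions to verify against the library's documentation.

```python
def crawl_page(api, url, options=None):
    """Returns the page body on status 200 and raises otherwise.

    `api` is expected to behave like crawlbase.CrawlingAPI: api.get(url, options)
    returns a dict with "status_code" and "body" keys (an assumption here).
    """
    response = api.get(url, options or {})
    status = response["status_code"]
    if status != 200:
        # Any other status (for example 404 or 503) means the crawl failed.
        raise RuntimeError(f"Crawl of {url} failed with status {status}")
    return response["body"]
```

With the real client, this would be called as `crawl_page(api, targetURL)` using the `api` and `targetURL` values defined earlier. Raising an exception on failure makes it easy for a larger crawler to retry or skip the page.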

One of the best features of the Crawling API is that you can use the built-in data scrapers for supported sites, which fortunately include Amazon. To use a data scraper, send it as a parameter in the GET request. Our complete code should now look as follows:

```python
from crawlbase import CrawlingAPI
```

If everything works properly, you will receive a response similar to the one below:

Conclusion

Using a web crawling framework like Crawlbase makes crawling simple at any scale compared to other crawling solutions, and the crawling tool can be complete in just a few lines of code. With the Crawling API, you won’t have to worry about website restrictions or CAPTCHAs, so your scraper stays effective and reliable and you can focus on what matters most to your project or business.