Costco is one of the largest warehouse retailers in the world, with over 800 warehouses globally and millions of customers. Its inventory ranges from groceries to electronics, home goods, and clothing. With such a vast range of products, Costco product data can be valuable to businesses, researchers, and developers.

You can extract data from Costco to get insights into product prices, availability, and customer feedback. With that data, you can make informed decisions and track market trends. In this article, you will learn how to scrape Costco product data with Crawlbase’s Crawling API and Python.

Let’s jump right into the process!

Table of Contents

- Why Scrape Costco for Product Data?

- Key Data Points to Extract from Costco

- Crawlbase Crawling API for Costco Scraping

- Crawlbase Python Library

- Installing Python and Required Libraries

- Choosing an IDE

- Inspecting the HTML for Selectors

- Writing the Costco Search Listings Scraper

- Handling Pagination

- Storing Data in a JSON File

- Complete Code

- Inspecting the HTML for Selectors

- Writing the Costco Product Page Scraper

- Storing Data in a JSON File

- Complete Code

Why Scrape Costco for Product Data?

Costco is known for its variety of quality products at low prices, which makes it popular among millions of shoppers. Costco’s product data can be used for many purposes, including price comparison, market research, inventory management, and product analysis. With this data, businesses can monitor product trends, track pricing strategies, and understand customer preferences.

Whether you’re a developer building an app, a business owner doing market research or just someone curious about product pricing, scraping Costco can be super useful. By extracting product information such as price, availability and product description you can make more informed decisions or have automated systems that keep you updated in real time.

In the next sections, we will cover the key data points to consider and walk through the step-by-step process of setting up a scraper to get Costco’s product data.

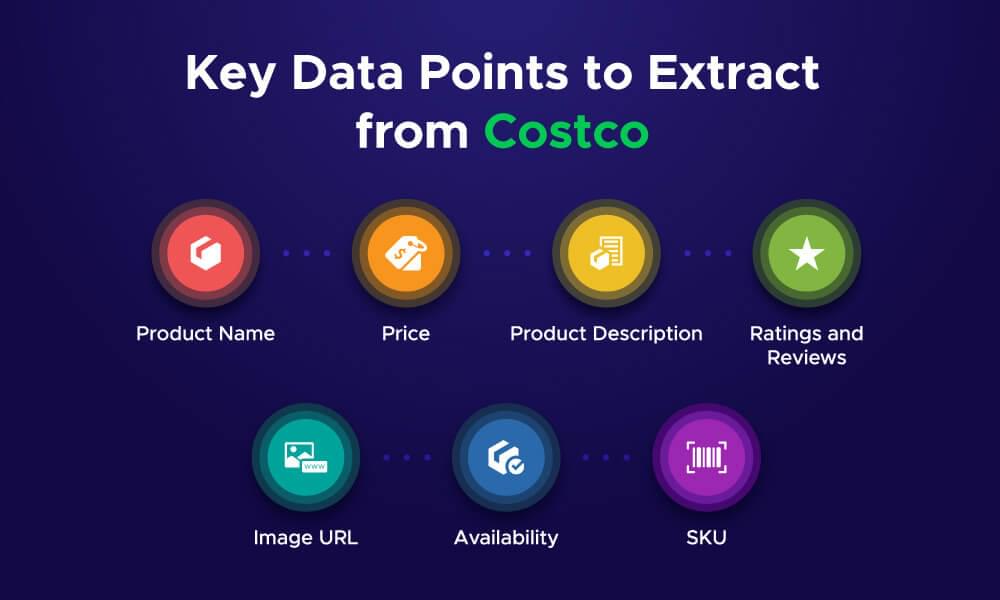

Key Data Points to Extract from Costco

When scraping Costco for product data you want to focus on getting useful information to make informed decisions. Here are the key data points to consider:

- Product Name: The product name is important for identifying and organizing items.

- Price: The price of each product helps with price comparison and tracking price changes over time.

- Product Description: Detailed descriptions give insights into the features and benefits of each item.

- Ratings and Reviews: Collecting customer reviews and star ratings gives valuable feedback on product quality and customer satisfaction.

- Image URL: The product image is useful for visual references and marketing purposes.

- Availability: Stock status shows whether a product can currently be purchased, which supports inventory tracking and restock monitoring.

- SKU (Stock Keeping Unit): Unique product identifiers like SKUs are important for tracking inventory and managing data.

Once you have these data points, you can build a product database to support your business needs such as market research, inventory management and competitive analysis. Next we’ll look at how Crawlbase Crawling API can help with scraping Costco.
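As a rough illustration of how these data points could be held together, here is a minimal sketch of a product record. The class name `CostcoProduct` and its field names are assumptions made for this example, not names used by Costco or Crawlbase, and the values are placeholders.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class CostcoProduct:
    """One scraped product. Field names are illustrative assumptions."""
    name: str
    price: Optional[str] = None         # raw price text as shown on the page
    description: Optional[str] = None
    rating: Optional[str] = None
    review_count: Optional[int] = None
    image_url: Optional[str] = None
    availability: Optional[str] = None
    sku: Optional[str] = None


# Placeholder values; fields that were not found on a page stay None.
example = CostcoProduct(name="Example Item", price="$19.99", sku="0000000")
record = asdict(example)  # plain dict, ready to be written out as JSON
print(record)
```

Keeping every field optional except the name means a partially rendered page still produces a usable record instead of an error.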

Crawlbase Crawling API for Costco Scraping

Crawlbase’s Crawling API makes scraping Costco websites super easy and fast. Costco’s website uses dynamic content, which means some product data is loaded via JavaScript. That makes scraping harder, but Crawlbase Crawling API renders the page like a real browser.

Here’s why Crawlbase Crawling API is a great choice for scraping Costco:

- Handles Dynamic Content: It handles JavaScript heavy pages, so all data is loaded and accessible for scraping.

- IP Rotation: To avoid getting blocked by Costco, Crawlbase does IP rotation for you, so you don’t have to worry about rate limits or bans.

- High Performance: With Crawlbase, you can scrape large volumes of data quickly and efficiently, saving you time and resources.

- Customizable Requests: You can set custom headers, cookies or even control the requests behavior to fit your needs.

With these advantages, Crawlbase Crawling API simplifies the entire process, making it a good fit for extracting product data from Costco. In the next sections, we’ll look at the Crawlbase Python library and set up a Python environment for Costco scraping.

Crawlbase Python Library

Crawlbase has a Python library that makes web scraping a lot easier. This library requires an access token to authenticate. You can get a token after creating an account on Crawlbase.

Here’s an example function demonstrating how to use the Crawlbase Crawling API to send requests:

```python
from crawlbase import CrawlingAPI
```
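A minimal sketch of such a request helper might look like the following. It assumes that the client’s `get()` method returns a dictionary with `headers` and `body` keys and that `pc_status` carries Crawlbase’s status code as a string. The helper names `make_client` and `fetch_html` are hypothetical, so check the library documentation before relying on them.

```python
def make_client(token):
    """Build a Crawlbase client. Imported lazily so fetch_html() below
    can be exercised with a stand-in client when the package is absent."""
    from crawlbase import CrawlingAPI
    return CrawlingAPI({"token": token})


def fetch_html(client, url, options=None):
    """Return the page HTML as text, or None if the request failed."""
    response = client.get(url, options or {})
    # Assumption: pc_status is Crawlbase's own status code, sent as a string.
    status = response["headers"].get("pc_status")
    if status == "200":
        body = response["body"]
        return body.decode("utf-8") if isinstance(body, bytes) else body
    print(f"Request failed, pc_status: {status}")
    return None


# Usage (needs a real token and network access):
# client = make_client("YOUR_CRAWLBASE_TOKEN")
# html = fetch_html(client, "https://www.costco.com/")
```

Passing the client in as an argument keeps the helper easy to test, because any object with a compatible `get()` method can stand in for the real API.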

Note: Crawlbase offers two types of tokens:

- Normal Token for static sites.

- JavaScript (JS) Token for dynamic or browser-based requests.

For scraping dynamic sites like Costco, you’ll need the JS Token. Crawlbase provides 1,000 free requests to get you started, and no credit card is required for this trial. For more details, check out the Crawlbase Crawling API documentation.

Setting Up Your Python Environment

Before you start scraping Costco, you need to set up a proper Python environment. This involves installing Python, the required libraries, and an IDE to write and test your code.

Installing Python and Required Libraries

- Install Python: Download and install Python from the official Python website. Choose the latest stable version for your operating system.

- Install Required Libraries: After installing Python, you’ll need some libraries to work with Crawlbase Crawling API and to handle the scraping process. Open your terminal or command prompt and run the following commands:

```bash
pip install beautifulsoup4
pip install crawlbase
```

- **beautifulsoup4**: BeautifulSoup makes it easier to parse and navigate the HTML structure of web pages.

- **crawlbase**: The official library from Crawlbase that you’ll use to connect to their API.
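To confirm the installation worked, a quick check like the one below can help. This is only a sketch using the standard library; the function name `missing_packages` is made up for this example.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


# beautifulsoup4 is imported as "bs4"; crawlbase keeps its own name.
missing = missing_packages(["bs4", "crawlbase"])
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("All required libraries are importable.")
```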

Choosing an IDE

Choosing the right Integrated Development Environment (IDE) can make coding easier and more efficient. Here are a few popular options:

- VS Code: Simple and lightweight, multi-purpose, free with Python extensions.

- PyCharm: A robust Python IDE with many built-in tools for professional development.

- Jupyter Notebooks: Good for running code interactively, especially for data projects.

Now that you have Python and the required libraries installed, and you’ve chosen an IDE, you can start scraping Costco product data. In the next section we will go step by step on how to scrape Costco search listings.

How to Scrape Costco Search Listings

Now that we’ve set up the Python environment, let’s get into scraping Costco search listings. In this section we’ll cover how to inspect the HTML for selectors, write a scraper using Crawlbase and BeautifulSoup, handle pagination and store the scraped data in a JSON file.

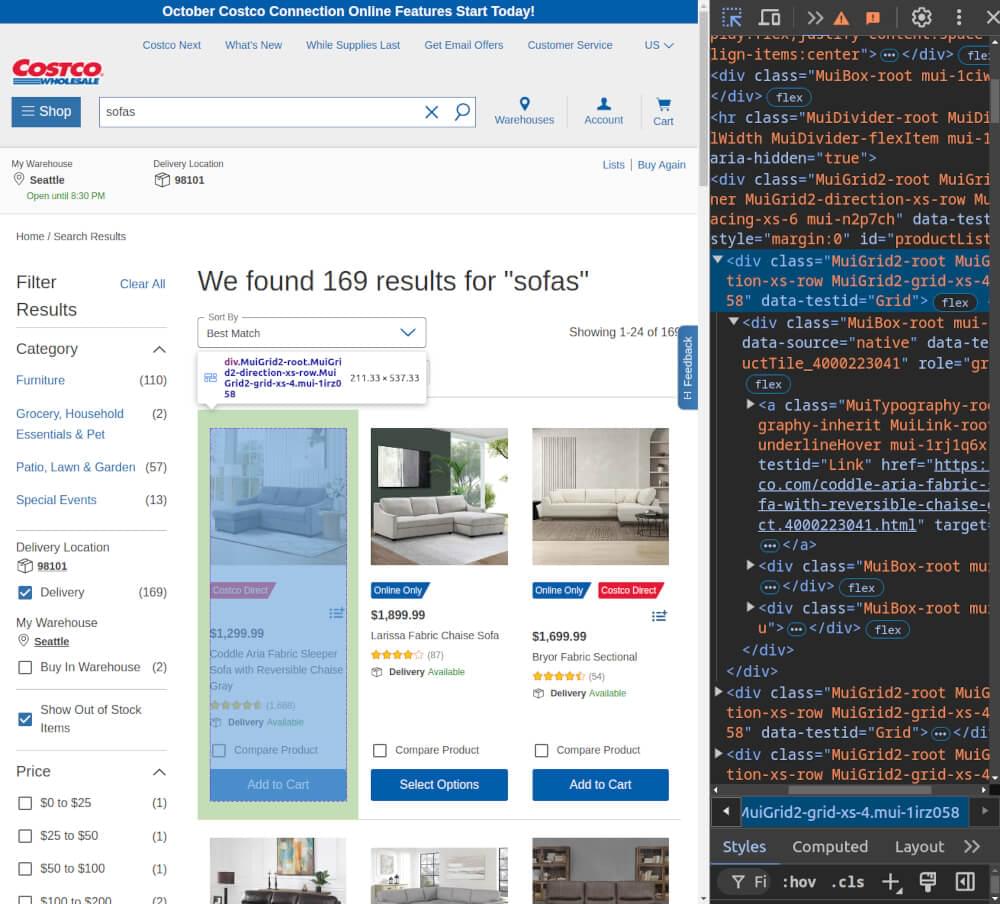

Inspecting the HTML for Selectors

To scrape the Costco product listings efficiently we need to inspect the HTML structure. Here’s what you’ll typically need to find:

- Product Title: Found in a `<div>` with `data-testid` starting with `Text_ProductTile_`.

- Product Price: Located in a `<div>` with `data-testid` starting with `Text_Price_`.

- Product Rating: Found in a `<div>` with `data-testid` starting with `Rating_ProductTile_`.

- Product URL: Embedded in an `<a>` tag with `data-testid="Link"`.

- Image URL: Found in an `<img>` tag under the `src` attribute.

Additionally, product listings are inside `div[id="productList"]`, with items grouped under `div[data-testid="Grid"]`.
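The selectors above could translate into BeautifulSoup roughly as follows. This is a sketch, not a verified scraper: it assumes each `div[data-testid="Grid"]` inside the product list wraps one product, and the sample markup is hypothetical, written only to match the selectors. The function signature is also an assumption.

```python
from bs4 import BeautifulSoup


def text_or_none(node):
    """Return stripped text for a tag, or None when the tag is missing."""
    return node.get_text(strip=True) if node is not None else None


def scrape_costco_search_listings(html):
    """Extract one dict per product tile from search results HTML."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    # Assumption: every Grid div under #productList is a single product tile.
    for tile in soup.select('div[id="productList"] div[data-testid="Grid"]'):
        link = tile.select_one('a[data-testid="Link"]')
        image = tile.select_one("img")
        products.append({
            # "^=" matches attribute values that start with the given prefix.
            "title": text_or_none(tile.select_one('div[data-testid^="Text_ProductTile_"]')),
            "price": text_or_none(tile.select_one('div[data-testid^="Text_Price_"]')),
            "rating": text_or_none(tile.select_one('div[data-testid^="Rating_ProductTile_"]')),
            "product_url": link.get("href") if link is not None else None,
            "image_url": image.get("src") if image is not None else None,
        })
    return products


# Hypothetical markup shaped like the selectors above, for a quick check.
SAMPLE_HTML = """
<div id="productList">
  <div data-testid="Grid">
    <a data-testid="Link" href="/example-item.html">
      <img src="https://example.com/item.jpg">
      <div data-testid="Text_ProductTile_1">Example Item</div>
    </a>
    <div data-testid="Text_Price_1">$19.99</div>
  </div>
</div>
"""
print(scrape_costco_search_listings(SAMPLE_HTML))
```

Missing elements come back as `None` rather than raising, which matters on listing pages where some tiles have no rating yet.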

Writing the Costco Search Listings Scraper

The Crawlbase Crawling API provides multiple parameters that you can pass with each request. Using Crawlbase’s JS Token, you can handle dynamic content loading on Costco. The `ajax_wait` and `page_wait` parameters can be used to give the page time to load.
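As a sketch of how the wait parameters might be passed, the snippet below builds an options dictionary and hands it to the client’s `get()` call. The helper name `build_wait_options`, the 5000 ms default, and the response shape (`headers`, `pc_status`, `body`) are assumptions for illustration. The `client` argument is expected to be a `CrawlingAPI` instance created with a JS Token.

```python
def build_wait_options(page_wait_ms=5000, ajax_wait=True):
    """Build the options dict passed as the second argument to get()."""
    return {
        # Ask the renderer to wait for AJAX requests before capturing HTML.
        "ajax_wait": "true" if ajax_wait else "false",
        # Extra time, in milliseconds, for the rendered page to settle.
        "page_wait": str(page_wait_ms),
    }


def fetch_search_listings(client, url, page_wait_ms=5000):
    """Fetch a search results page with the wait options applied."""
    response = client.get(url, build_wait_options(page_wait_ms))
    if response["headers"].get("pc_status") == "200":
        body = response["body"]
        return body.decode("utf-8") if isinstance(body, bytes) else body
    return None
```

If products come back empty, raising `page_wait_ms` is usually the first thing to try, at the cost of slower requests.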

Let’s write a scraper that collects the product title, price, product URL and image URL from the Costco search results page using Crawlbase Crawling API and BeautifulSoup.

```python
from crawlbase import CrawlingAPI
```

In this code:

- fetch_search_listings(): This function uses the Crawlbase API to fetch the HTML content from the Costco search listings page.

- scrape_costco_search_listings(): This function parses the HTML using BeautifulSoup to extract product details like title, price, product URL, and image URL.

Handling Pagination

Costco search results can span multiple pages. To scrape all products, we need to handle pagination. Costco uses the `&currentPage=` parameter in the URL to load different pages.

Here’s how to handle pagination:

```python
def scrape_all_pages(base_url, total_pages):
```

This code will scrape multiple pages of search results by appending the `&currentPage=` parameter to the base URL.
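One way the pagination loop could be written is sketched below. The extra `scrape_page` and `delay_seconds` parameters are assumptions added for this example so the loop can run with any fetch-and-parse function, and page numbering is assumed to start at 1.

```python
import time


def build_page_url(base_url, page):
    """Append the currentPage parameter to a search URL."""
    # Use "?" when the URL has no query string yet, "&" otherwise.
    separator = "&" if "?" in base_url else "?"
    return f"{base_url}{separator}currentPage={page}"


def scrape_all_pages(base_url, total_pages, scrape_page, delay_seconds=0):
    """Call scrape_page(url) for pages 1..total_pages and merge the results."""
    all_products = []
    for page in range(1, total_pages + 1):
        # Treat a failed page (None) as an empty list and keep going.
        products = scrape_page(build_page_url(base_url, page)) or []
        all_products.extend(products)
        if delay_seconds and page < total_pages:
            time.sleep(delay_seconds)  # optional pause between pages
    return all_products


# Usage with a stand-in page scraper:
# products = scrape_all_pages("https://example.com/s?keyword=sofa", 3,
#                             scrape_page=my_fetch_and_parse, delay_seconds=1)
```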

How to Save Data in a JSON File

Once you’ve scraped the product data, it’s important to store it for later use. Here’s how you can save the product listings into a JSON file:

```python
import json
```

This function will write the scraped product details into a costco_product_listings.json file.
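A minimal version of such a save function, assuming the scraped data is a list of dictionaries, might look like this. The function name `save_to_json` is an assumption; only the default filename comes from the text above.

```python
import json


def save_to_json(data, filename="costco_product_listings.json"):
    """Write scraped records to a UTF-8 encoded JSON file."""
    with open(filename, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-ASCII characters readable in the file.
        json.dump(data, f, indent=4, ensure_ascii=False)
    print(f"Saved {len(data)} records to {filename}")
```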

Complete Code

Here’s the complete code to scrape Costco search listings, handle pagination, and store the data in a JSON file:

```python
from crawlbase import CrawlingAPI
```

Example Output:

```json
[
```

How to Scrape Costco Product Pages

Now that we’ve covered how to scrape Costco search listings, the next step is to extract detailed product information from individual product pages. In this section we’ll cover how to inspect the HTML for selectors, write a scraper for Costco product pages, and store the data in a JSON file.

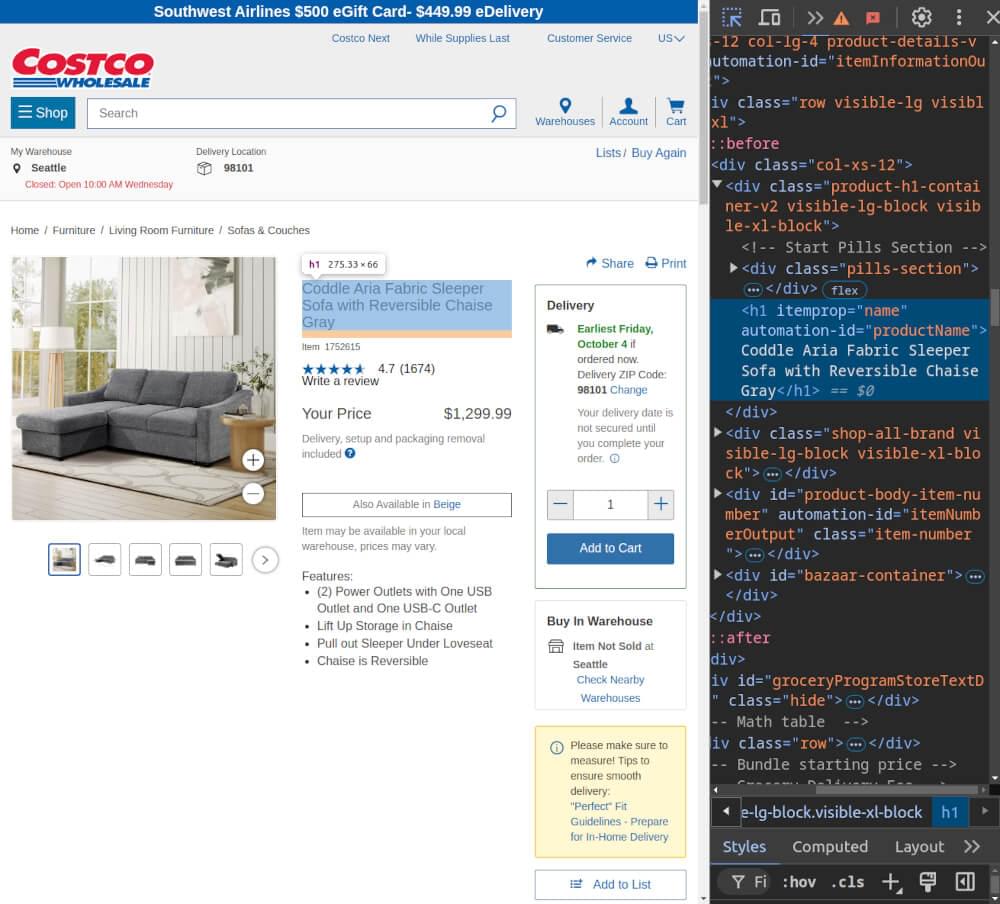

Inspecting the HTML for Selectors

To scrape individual Costco product pages we need to inspect the HTML structure of the page. Here’s what you’ll typically need to find:

- Product Title: The title is found inside an `<h1>` tag with the attribute `automation-id="productName"`.

- Product Price: The price is located within a `<span>` tag with the attribute `automation-id="productPriceOutput"`.

- Product Rating: The rating is found within a `<div>` tag with the attribute `itemprop="ratingValue"`.

- Product Description: Descriptions are located inside a `<div>` tag with the id `product-tab1-espotdetails`.

- Images: The product image URL is extracted from an `<img>` tag with the class `thumbnail-image` by grabbing the `src` attribute.

- Specifications: The specifications are stored in structured HTML, typically as rows of `<div>` tags with classes like `.spec-name`, with the values in sibling `<div>` tags.
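Put together, the product page selectors could be applied along these lines. This is a sketch under the assumption that the page matches the selectors listed above. The sample markup is hypothetical, the function signature is assumed, and the specification lookup assumes each value is the next sibling `<div>` of its `.spec-name` element.

```python
from bs4 import BeautifulSoup


def _text(node):
    """Return whitespace-normalised text for a tag, or None if missing."""
    return node.get_text(" ", strip=True) if node is not None else None


def scrape_costco_product_page(html):
    """Extract product details from a single product page's HTML."""
    soup = BeautifulSoup(html, "html.parser")
    specs = {}
    for name in soup.select(".spec-name"):
        # Assumption: the value sits in the next sibling <div>.
        specs[_text(name)] = _text(name.find_next_sibling("div"))
    return {
        "title": _text(soup.select_one('h1[automation-id="productName"]')),
        "price": _text(soup.select_one('span[automation-id="productPriceOutput"]')),
        "rating": _text(soup.select_one('div[itemprop="ratingValue"]')),
        "description": _text(soup.select_one("div#product-tab1-espotdetails")),
        "images": [img["src"] for img in soup.select("img.thumbnail-image")
                   if img.get("src")],
        "specifications": specs,
    }


# Hypothetical markup shaped like the selectors above, for a quick check.
SAMPLE_PRODUCT_HTML = """
<h1 automation-id="productName">Example Item</h1>
<span automation-id="productPriceOutput">$19.99</span>
<div itemprop="ratingValue">4.5</div>
<div id="product-tab1-espotdetails"><p>A placeholder description.</p></div>
<img class="thumbnail-image" src="https://example.com/a.jpg">
<div class="row"><div class="spec-name">Brand</div><div>Example Brand</div></div>
"""
print(scrape_costco_product_page(SAMPLE_PRODUCT_HTML))
```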

Writing the Costco Product Page Scraper

We’ll now create a scraper that extracts detailed information from individual product pages, including the product title, price, description, and images. The scraper will use the Crawlbase Crawling API with the `ajax_wait` and `page_wait` parameters to fetch the content and BeautifulSoup to parse the HTML.

```python
from crawlbase import CrawlingAPI
```

In this code:

- **fetch_product_page()**: This function uses Crawlbase to fetch the HTML content from a Costco product page.

- **scrape_costco_product_page()**: This function uses BeautifulSoup to parse the HTML and extract relevant details like the product title, price, description, and image URL.

Storing Data in a JSON File

Once we have scraped the product details, we can store them in a JSON file for later use.

```python
import json
```

This code will write the scraped product details into a costco_product_details.json file.

Complete Code

Here’s the complete code that fetches and stores Costco product page details, using Crawlbase and BeautifulSoup:

```python
from crawlbase import CrawlingAPI
```

With this code, you can now scrape individual Costco product pages and store detailed information like product titles, prices, descriptions, and images in a structured format.

Example Output:

```json
{
```

Optimize Costco Scraper with Crawlbase

Scraping product data from Costco can be a powerful tool for tracking prices, product availability and market trends. With Crawlbase Crawling API and BeautifulSoup you can automate the process and store the data in JSON for analysis.

Follow this guide to build a scraper for your needs, whether it’s for competitor analysis, research or inventory tracking. Just make sure to follow the website’s terms of service. If you’re interested in exploring scraping from other e-commerce platforms, feel free to explore the following comprehensive guides.

📜 How to Scrape Amazon

📜 How to scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Flipkart

📜 How to Scrape Etsy

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Good luck with your scraping!

Frequently Asked Questions

Q. Is scraping Costco legal?

Scraping Costco or any website must be done responsibly and within the website’s legal guidelines. Always check the site’s terms of service to make sure you’re allowed to scrape the data. Don’t scrape too aggressively, so you avoid overwhelming their servers. Using tools like Crawlbase, which respects rate limits and manages IP rotation, can help keep your scraping activity within acceptable boundaries.

Q. Why use Crawlbase Crawling API for scraping Costco?

Crawlbase Crawling API is designed to handle complex websites that rely on JavaScript, like Costco. Many websites load content dynamically, which makes it hard for traditional scraping methods to work. Crawlbase gets around that limitation by rendering JavaScript and returning the full HTML of the page, making it easier to scrape the required data. It also manages proxies and rotates IPs, which helps prevent getting blocked while scraping large amounts of data.

Q. What data can I extract from Costco using this scraper?

Using this scraper, you can extract key data points from Costco product pages such as product names, prices, descriptions, ratings and image URLs. You can also capture product page links and handle pagination to scrape through multiple pages of search listings efficiently. This data can be stored in a structured format like JSON for easy access and analysis.