Noon is one of the biggest e-commerce platforms in the Middle East, with millions of customers across the UAE, Saudi Arabia, and Egypt. It has a huge product catalog and handles thousands of transactions every day. Scraping Noon data helps businesses track prices, monitor competitors, and gather market insights.

But scraping Noon is tough. The website has dynamic content, JavaScript-based elements, and anti-bot measures that can block traditional scraping methods. We will use the Crawlbase Crawling API to extract search results and product details while handling these challenges.

This tutorial will show you how to scrape Noon data using Python with step-by-step examples for structured data extraction.

Let’s start!

Table of Contents

- Why Scrape Noon Data?

- Key Data Points to Extract from Noon

- Challenges While Scraping Noon

- Setting Up Your Python Environment

  - Installing Python and Required Libraries

  - Choosing an IDE for Scraping

- Scraping Noon Search Results

  - Inspecting the HTML for CSS Selectors

  - Writing the Noon Search Listings Scraper

  - Handling Pagination

  - Storing Data in a CSV File

  - Complete Code Example

- Scraping Noon Product Pages

  - Inspecting the HTML for CSS Selectors

  - Writing the Noon Product Page Scraper

  - Storing Data in a CSV File

  - Complete Code Example

- Final Thoughts

- Frequently Asked Questions

Why Scrape Noon Data?

Noon is a major player in the region’s e-commerce industry, with a vast product catalog covering electronics, fashion, beauty, groceries, and more.

Here’s why people scrape Noon:

- Price Tracking: Monitor competitor prices and adjust your pricing strategy.

- Product Availability: Keep track of stock levels and demand trends.

- Customer Insights: Analyze reviews, ratings, and product descriptions to understand consumer preferences.

- SEO & Marketing Strategies: Get product metadata and optimize your listings for visibility.

- Sales & Discounts Monitoring: Track ongoing promotions and special deals.

Key Data Points to Extract from Noon

Noon has millions of products across different categories, so to make the most of scraping, focus on the data points that best support your business decisions and give you a competitive edge. The image below shows some of the data points to focus on.

Challenges While Scraping Noon

Scraping Noon can be valuable, but you may run into some challenges. Here are the most common ones and their solutions:

Dynamic Content (JavaScript Rendering): Noon uses JavaScript to load much of its content, which makes it harder to scrape. Without the proper tools, that content may not load at all or may load incorrectly, resulting in incomplete or wrong data.

Solution: Use the Crawlbase Crawling API, which handles JavaScript rendering seamlessly, and you get the complete page content, including dynamically loaded elements like product details and prices.

Anti-Bot Measures: Websites like Noon implement anti-bot technologies like CAPTCHAs and rate-limiting to prevent automated scraping.

Solution: The Crawlbase Crawling API bypasses these protections by rotating IP addresses, solving CAPTCHAs and mimicking human-like browsing behavior so you don’t get blocked while scraping.

Complex Pagination: Navigating through search results and product pages involves multiple pages of data. Handling pagination correctly is important so you don’t miss anything.

Solution: Crawlbase Crawling API provides different parameters to handle pagination so you can scrape all pages of search results or product listings without having to manually navigate through them.

Legal & Ethical Concerns: Scraping any website, including Noon, must be done according to legal and ethical guidelines. You must respect the site’s robots.txt file, limit scraping frequency, and avoid scraping sensitive information.

Solution: Always follow best practices for responsible scraping, like using proper delay intervals and anonymizing your requests.
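As a minimal sketch of those habits, the Python below checks a URL against robots.txt rules you have already downloaded (using the standard library's `urllib.robotparser`) and sleeps for a randomized interval between requests. The function names `is_allowed` and `polite_pause` and the 2 to 5 second default range are illustrative choices, not Crawlbase settings or documented Noon requirements.

```python
import random
import time
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if robots.txt rules (already downloaded as text) permit fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def polite_pause(min_seconds=2.0, max_seconds=5.0):
    """Sleep for a random interval so requests are spaced out; returns the delay used."""
    # Illustrative default range; tune it to the load you are comfortable putting on the site.
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay
```

In practice you would fetch `https://www.noon.com/robots.txt` once per run, pass its text to `is_allowed` before queueing each URL, and call `polite_pause()` between requests.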

By using the right tools like Crawlbase and following ethical scraping practices, you can overcome these challenges and scrape Noon efficiently.

Setting Up Your Python Environment

Before you start scraping Noon data, you need to set up your environment. This includes installing Python and the required libraries and choosing an IDE to code in.

Installing Python and Required Libraries

If you don’t have Python installed, download the latest version from python.org and follow the installation instructions for your OS.

Next, install the required libraries by running:

```bash
pip install crawlbase beautifulsoup4 pandas
```

- Crawlbase – Python client for the Crawlbase Crawling API, which bypasses anti-bot protections and renders JavaScript-heavy pages.

- BeautifulSoup – Extracts structured data from HTML.

- Pandas – Handles and stores data in CSV format.

Choosing an IDE for Scraping

Choosing the right Integrated Development Environment (IDE) makes scraping easier. Here are some good options:

- VS Code – Lightweight and feature-rich with great Python support.

- PyCharm – Powerful debugging and automation features.

- Jupyter Notebook – Ideal for interactive scraping and quick data analysis.

With Python installed, the libraries set up, and your IDE ready, you can start scraping Noon data.

Scraping Noon Search Results

Scraping search results from Noon will give you the product names, prices, ratings, and URLs. This data is useful for competitive analysis, price monitoring, and market research. In this section, we will guide you through the process of scraping search results from Noon, handling pagination, and storing the data in a CSV file.

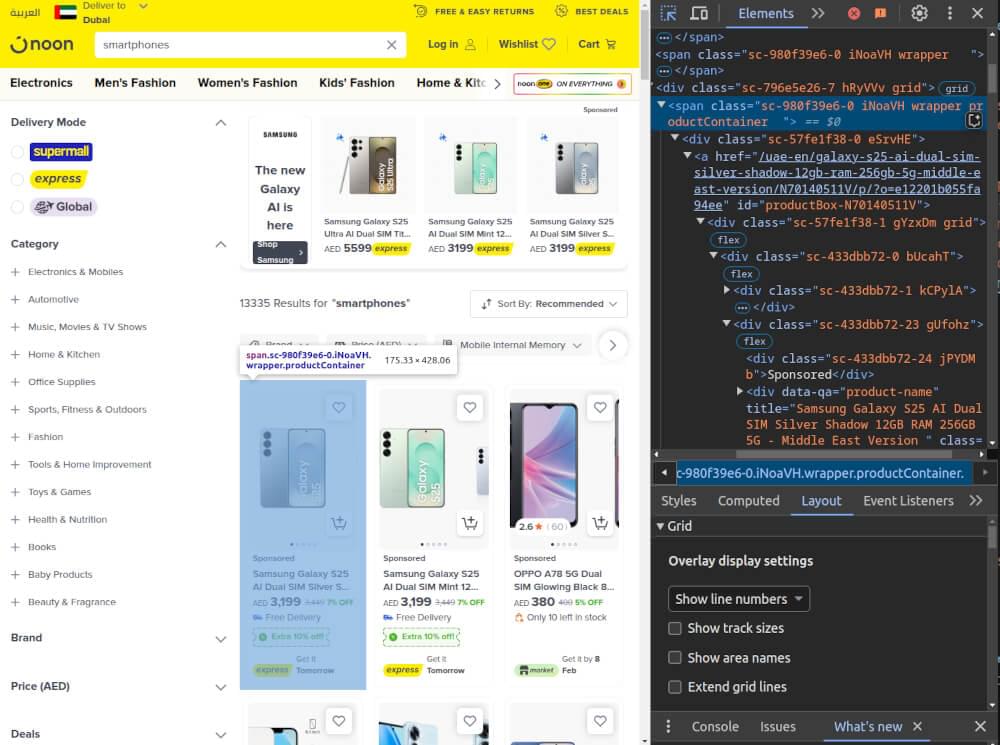

Inspecting the HTML for CSS Selectors

Before we start writing the scraper, we need to inspect the HTML structure of Noon’s search results page. This lets us find the CSS selectors needed to extract the product details.

- Go to Noon.com and search for a product (e.g., “smartphones”).

- Right-click on any product and choose Inspect or Inspect Element in Chrome Developer Tools.

- Identify the following key HTML elements:

  - Product Title: Found in the `<div data-qa="product-name">` tag.

  - Price: Found in the `<strong class="amount">` tag.

  - Currency: Found in the `<span class="currency">` tag.

  - Ratings: Found in the `<div class="dGLdNc">` tag.

  - Product URL: Found in the `href` attribute of the `<a>` tag.

Once you identify the relevant elements and their CSS classes or IDs, you can proceed to write the scraper.

Writing the Noon Search Listings Scraper

Now that we’ve inspected the HTML structure, we can write a Python script to scrape the product data from Noon. We’ll use the Crawlbase Crawling API to bypass anti-bot measures and BeautifulSoup to parse the HTML.

```python
from crawlbase import CrawlingAPI
```

We first initialize the CrawlingAPI class with a token for authentication. The scrape_noon_search function fetches the HTML of a search results page from Noon based on a query and page number, handling AJAX content loading. The extract_product_data function parses the HTML using BeautifulSoup, extracting details such as product titles, prices, ratings, and URLs. It then returns this data in a structured list of dictionaries.
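The functions below are a minimal sketch of that flow, not the original listing. They assume `api` is a `CrawlingAPI` instance created with your Crawlbase JavaScript token, that search pages follow the URL pattern `https://www.noon.com/uae-en/search/?q=...&page=N`, and that each result card is wrapped in an `<a>` link. The `ajax_wait`/`page_wait` values and the helper names `build_search_url` and `_text` are illustrative. Class names such as `dGLdNc` look auto-generated, so re-check them in DevTools before relying on them.

```python
from urllib.parse import quote_plus, urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.noon.com"


def build_search_url(query, page=1, locale="uae-en"):
    # Assumed URL pattern for Noon search pages; confirm it in your browser first.
    return f"{BASE_URL}/{locale}/search/?q={quote_plus(query)}&page={page}"


def scrape_noon_search(api, query, page=1):
    """Fetch one search results page through a CrawlingAPI client; returns HTML or None."""
    url = build_search_url(query, page)
    # ajax_wait/page_wait ask the Crawling API to wait for JavaScript-loaded content.
    response = api.get(url, {"ajax_wait": "true", "page_wait": "5000"})
    if response["status_code"] != 200:
        return None
    return response["body"].decode("utf-8")


def _text(parent, selector):
    """Return the stripped text of the first match, or None if it is missing."""
    element = parent.select_one(selector)
    return element.get_text(strip=True) if element else None


def extract_product_data(html):
    """Parse search result cards with the selectors found while inspecting the page."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for name in soup.select('div[data-qa="product-name"]'):
        card = name.find_parent("a")  # assumes each result card is wrapped in a link
        if card is None:
            continue
        products.append({
            "title": name.get_text(strip=True),
            "price": _text(card, "strong.amount"),
            "currency": _text(card, "span.currency"),
            "rating": _text(card, "div.dGLdNc"),
            "url": urljoin(BASE_URL, card.get("href", "")),
        })
    return products
```

For example, create the client with `api = CrawlingAPI({'token': 'YOUR_JS_TOKEN'})`, call `html = scrape_noon_search(api, 'smartphones')`, and pass `html` to `extract_product_data` when it is not `None`. Passing the client in as a parameter keeps the parsing logic testable against saved HTML.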

Handling Pagination

Noon’s search results span across multiple pages. To scrape all the data, we need to handle pagination and loop through each page. Here’s how we can do it:

```python
def scrape_all_pages(query, max_pages):
    ...
```

This function loops through the specified number of pages, fetching and extracting product data until all pages are processed.
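A hedged sketch of such a loop follows. Unlike the `scrape_all_pages(query, max_pages)` signature above, this hypothetical version takes the fetch and extract functions as parameters (for instance `lambda q, p: scrape_noon_search(api, q, p)` and `extract_product_data`), stops at the first page that fails or returns no products, and pauses between requests. The 2-second default delay is an assumption.

```python
import time


def scrape_all_pages(fetch_page, extract, query, max_pages, delay=2.0):
    """Collect products from pages 1..max_pages.

    fetch_page(query, page) -> HTML string or None
    extract(html) -> list of product dicts
    """
    all_products = []
    for page in range(1, max_pages + 1):
        html = fetch_page(query, page)
        if html is None:
            print(f"Page {page} could not be fetched; stopping.")
            break
        products = extract(html)
        if not products:
            break  # assume we have run past the last page of results
        all_products.extend(products)
        if page < max_pages:
            time.sleep(delay)  # space out requests between pages
    return all_products
```

Stopping on an empty page avoids spending requests on pages beyond the real end of the results when `max_pages` is set generously.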

Storing Data in a CSV File

Once we’ve extracted the product details, we need to store the data in a structured format. The most common and easy-to-handle format is CSV. Below is the code to save the scraped data:

```python
import csv
```

This function takes the list of products and saves it as a CSV file, making it easy to analyze or import into other tools.
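Here is one way such a function could look, using only the standard library's `csv.DictWriter`. The name `save_to_csv` and its return value (the number of rows written) are assumptions. Writing with `encoding="utf-8"` keeps Arabic product titles intact.

```python
import csv


def save_to_csv(products, filename):
    """Write a list of product dicts to CSV, one row per product; returns the row count."""
    if not products:
        return 0
    fieldnames = list(products[0].keys())  # column order follows the first product
    with open(filename, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(products)
    return len(products)
```

If you plan to open the file in Excel, `encoding="utf-8-sig"` (which adds a byte-order mark) may display non-Latin text more reliably.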

Complete Code Example

Here is the complete Python script to scrape Noon search results, handle pagination, and store the data in a CSV file:

```python
from crawlbase import CrawlingAPI
```

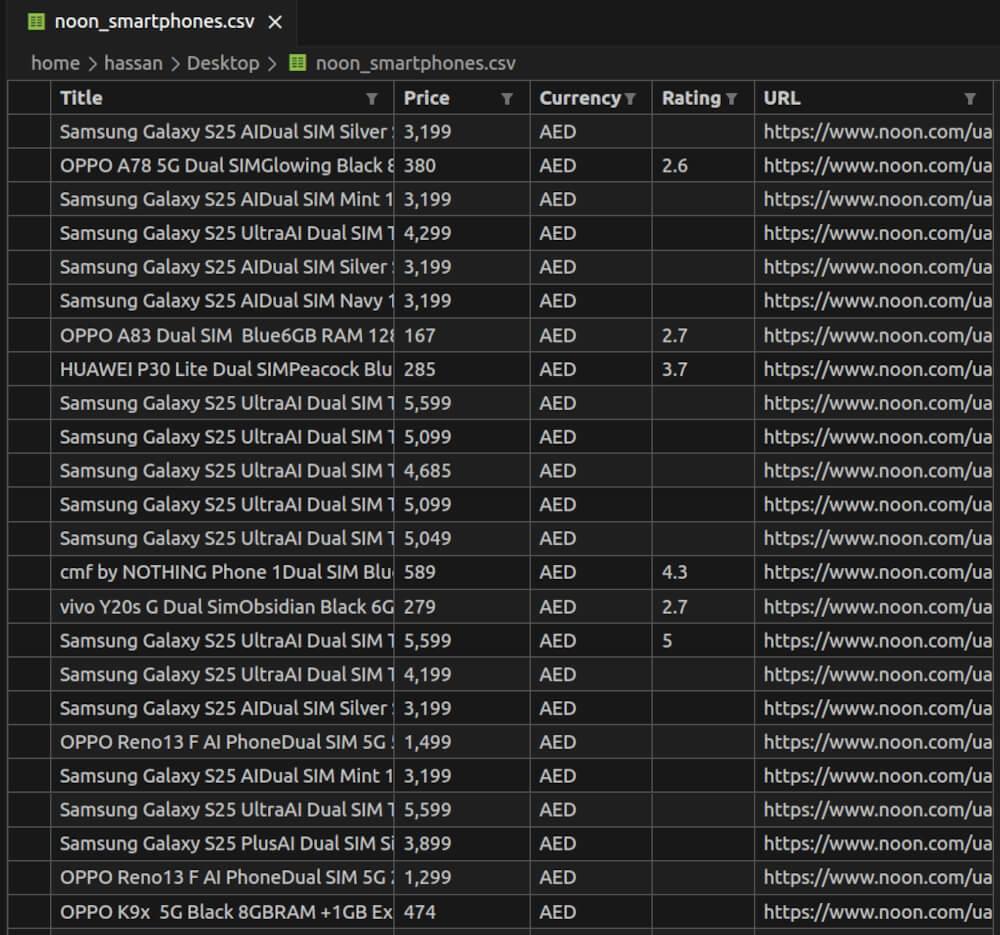

noon_smartphones.csv Snapshot:

Scraping Noon Product Pages

Scraping product pages on Noon gives you full product details, including descriptions, specifications, and customer reviews. This data helps businesses optimize their product listings and understand customer behavior. In this section, we will go through the process of inspecting the HTML structure of a product page, writing the scraper, and saving the data into a CSV file.

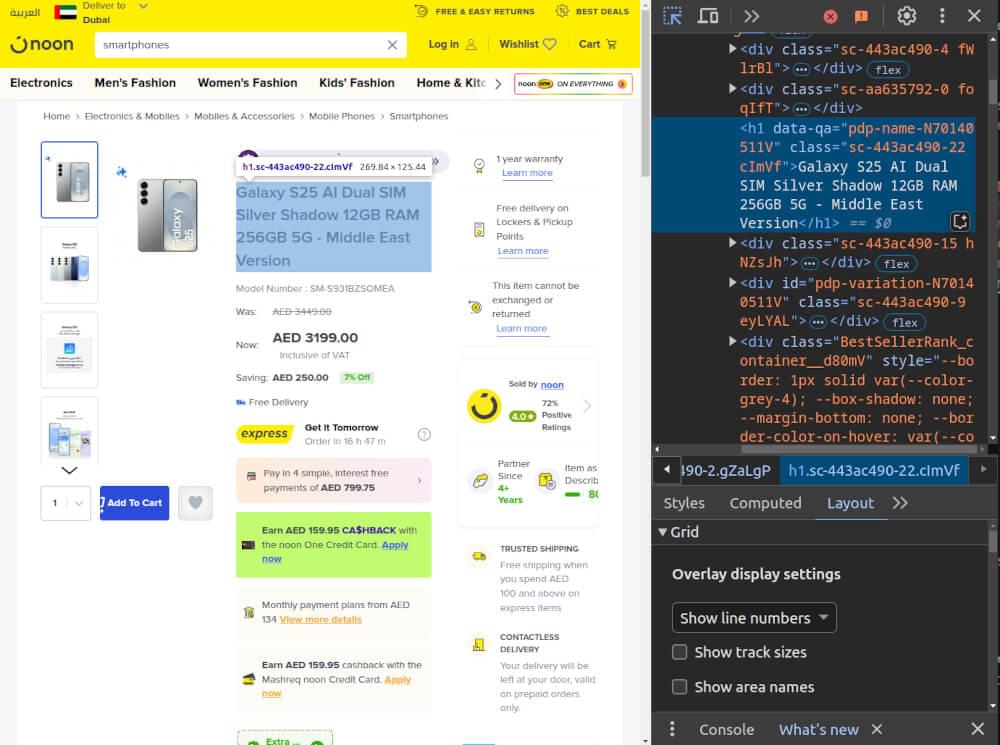

Inspecting the HTML for CSS Selectors

Before we write the scraper, we need to inspect the HTML structure of the product page to identify the correct CSS selectors for the elements we want to scrape. Here’s how to do it:

- Open a product page on Noon (e.g., a smartphone page).

- Right-click on a product detail (e.g., product name, price, description) and click on Inspect in Chrome Developer Tools.

- Look for key elements, such as:

  - Product Name: Found in the `<h1>` tag whose `data-qa` attribute starts with `pdp-name-` (CSS selector `h1[data-qa^="pdp-name-"]`).

  - Price: Found in the `<div data-qa="div-price-now">` tag.

  - Product Highlights: Found in the `<div class="oPZpQ">` tag, specifically within an unordered list (`<ul>`).

  - Product Specifications: Found in the `<div class="dROUvm">` tag, within a table’s `<tr>` tags containing `<td>` elements.

Once you identify the relevant elements and their CSS classes or IDs, you can proceed to write the scraper.

Writing the Noon Product Page Scraper

Now, let’s write a Python script to scrape the product details from Noon product pages using Crawlbase Crawling API and BeautifulSoup.

```python
from crawlbase import CrawlingAPI
```
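As a minimal sketch (not the original listing), the parser below applies the product-page selectors listed above to a page’s HTML. It assumes the specifications table has two cells per row (label, value), and the function name `extract_product_details` is hypothetical. Fetching the HTML works the same way as for search pages, through `CrawlingAPI.get`.

```python
from bs4 import BeautifulSoup


def _text(soup, selector):
    """Return the stripped text of the first match, or None if it is missing."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element else None


def extract_product_details(html):
    """Parse a Noon product page into name, price, highlights, and specifications."""
    soup = BeautifulSoup(html, "html.parser")
    highlights = [li.get_text(strip=True) for li in soup.select("div.oPZpQ ul li")]
    specifications = {}
    for row in soup.select("div.dROUvm tr"):
        cells = row.find_all("td")
        if len(cells) >= 2:  # assumes a two-column label/value table
            specifications[cells[0].get_text(strip=True)] = cells[1].get_text(strip=True)
    return {
        "name": _text(soup, 'h1[data-qa^="pdp-name-"]'),
        "price": _text(soup, 'div[data-qa="div-price-now"]'),
        "highlights": highlights,
        "specifications": specifications,
    }
```

Missing elements come back as `None` or empty collections rather than raising, which makes layout changes easy to spot in the output.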

Storing Data in a CSV File

Once we’ve extracted the product details, we need to store this information in a structured format like CSV for easy analysis. Here’s a simple function to save the scraped data:

```python
import csv
```
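Product pages add nested fields (a list of highlights and a dictionary of specifications), which a single CSV cell cannot hold directly. One possible approach, sketched below, joins highlights with ` | ` and gives each specification its own `spec: <label>` column, taking the union of columns across all products. The names `flatten_product` and `save_products_to_csv` are hypothetical.

```python
import csv


def flatten_product(product):
    """Turn list/dict fields into flat CSV columns."""
    row = {
        "name": product.get("name"),
        "price": product.get("price"),
        "highlights": " | ".join(product.get("highlights", [])),
    }
    for label, value in product.get("specifications", {}).items():
        row[f"spec: {label}"] = value
    return row


def save_products_to_csv(products, filename):
    """Write flattened products to CSV; returns the column names used."""
    rows = [flatten_product(p) for p in products]
    fieldnames = []
    for row in rows:  # union of columns, keeping first-seen order
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    with open(filename, "w", newline="", encoding="utf-8") as f:
        # DictWriter fills columns a product lacks with "" (its default restval).
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return fieldnames
```

Because products in different categories expose different specification labels, collecting the column union keeps every value without forcing a fixed schema.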

Complete Code Example

Now, let’s combine everything into a complete script. The main() function will scrape data for multiple product pages and store the results in a CSV file.

```python
from crawlbase import CrawlingAPI
```

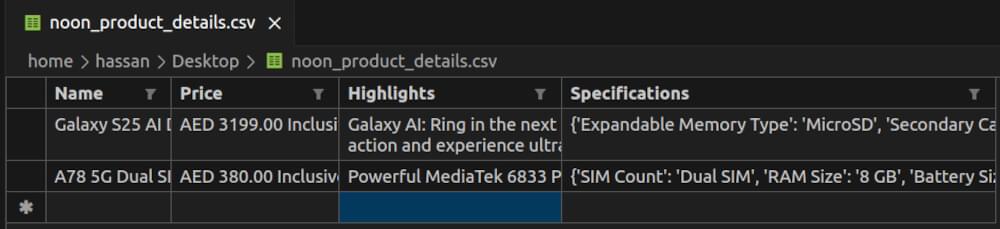

noon_product_details.csv Snapshot:
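The `main()` described above loops over several product URLs, so it helps if one bad page does not stop the run. The helper below is a hypothetical sketch of that loop: `fetch_html(url)` is assumed to return HTML or `None` (for example, a thin wrapper around `CrawlingAPI.get`), `extract` would be a product-page parser, and the catch-all `except` is a deliberate choice for resilience rather than a requirement.

```python
import time


def scrape_product_pages(urls, fetch_html, extract, delay=2.0):
    """Scrape each product URL in turn, skipping pages that fail to load or parse."""
    results = []
    for index, url in enumerate(urls):
        try:
            html = fetch_html(url)
            if html is None:
                print(f"Skipping {url}: request failed")
                continue
            details = extract(html)
            details["url"] = url  # keep the source URL alongside the parsed fields
            results.append(details)
        except Exception as exc:  # one unusual page should not abort the whole run
            print(f"Skipping {url}: {exc}")
        finally:
            if index < len(urls) - 1:
                time.sleep(delay)  # pause between requests, even after a failure
    return results
```

The returned list can then be written with a CSV helper such as the one in the previous section.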

Final Thoughts

Scraping Noon data helps businesses track prices, analyze competitors, and improve product listings. The Crawlbase Crawling API makes this process easier by handling JavaScript rendering and CAPTCHA protections, so you get complete and accurate data without getting blocked.

With Python and BeautifulSoup, scraping data from Noon search results and product pages is easy. Follow ethical practices and set up the right environment, and you’ll have the insights to stay ahead in the competitive e-commerce game.

If you want to scrape other e-commerce platforms, check out these guides:

📜 How to Scrape Amazon

📜 How to Scrape Walmart

📜 How to Scrape AliExpress

📜 How to Scrape Zalando

📜 Easy Steps to Extract Data from Zoro

Contact our support if you have any questions. Happy scraping!

Frequently Asked Questions

Q. Is web scraping legal?

Scraping publicly available data is generally legal, as long as you do it responsibly and follow ethical guidelines. Make sure to respect the website’s robots.txt file, don’t overload the servers with requests, and don’t scrape sensitive data. Always make sure your scraping practices comply with the website’s terms of service and local laws.

Q. What is Crawlbase Crawling API and how does it help with scraping Noon?

Crawlbase Crawling API is a tool that helps bypass common obstacles like JavaScript rendering and CAPTCHA when scraping websites. It makes sure you can scrape dynamic content from Noon without getting blocked. Whether you’re scraping product pages or search results, Crawlbase handles the technical stuff so you can get the data easily.

Q. Can I scrape product prices and availability from Noon using this method?

Yes, you can scrape product prices, availability, ratings, and other important data from Noon. Inspect the HTML structure to find the CSS selectors, use BeautifulSoup to parse the HTML, and use the Crawlbase Crawling API to handle JavaScript rendering and CAPTCHAs.