Healthline.com is one of the top health and wellness websites with detailed articles, tips and insights from experts. From article lists to in-depth guides, it has content for many use cases. Whether you’re researching, building a health database or analyzing wellness trends, scraping data from Healthline can be super useful.

But scraping a dynamic website like healthline.com is not easy. The site uses JavaScript to render its pages, so a plain HTTP request won’t return the full content and traditional web scraping methods fall short. That’s where the Crawlbase Crawling API comes in: it handles JavaScript-rendered content seamlessly and makes the whole scraping process easy.

In this blog, we will cover why you might want to scrape Healthline.com, the key data points to target, how to scrape it using the Crawlbase Crawling API with Python, and how to store the scraped data in a CSV file. Let’s get started!

Table of Contents

- Why Scrape Healthline.com?
- Key Data Points to Extract from Healthline.com
- Crawlbase Crawling API for Healthline.com Scraping
  - Why Use Crawlbase Crawling API
  - Crawlbase Python Library
- Setting Up Your Python Environment
  - Installing Python and Required Libraries
  - Choosing an IDE
- Scraping Healthline.com Article Listings
  - Inspecting the HTML Structure
  - Writing the Healthline.com Listing Scraper
  - Storing Data in a CSV File
  - Complete Code
- Scraping Healthline.com Article Page
  - Inspecting the HTML Structure
  - Writing the Healthline.com Article Scraper
  - Storing Data in a CSV File
  - Complete Code
- Final Thoughts
- Frequently Asked Questions

Why Scrape Healthline.com?

Healthline.com is a trusted health, wellness and medical information site with millions of visitors every month. Its content is well researched, user-friendly and covers a wide range of topics, from nutrition and fitness to disease management and mental health. That makes it a valuable source if you need to gather health-related data.

Here’s why you might scrape Healthline:

- Building Health Databases: If you’re building a health and wellness site, you can extract structured data from Healthline, such as article titles, summaries and key points.

- Trend Analysis: Scrape Healthline articles to see what’s trending, what’s new, and what users are interested in.

- Research Projects: Students, data analysts, and researchers can use Healthline data for studies or projects on health, fitness, or medical breakthroughs.

- Content Curation: Health bloggers or wellness app developers can get inspiration or references for their content.

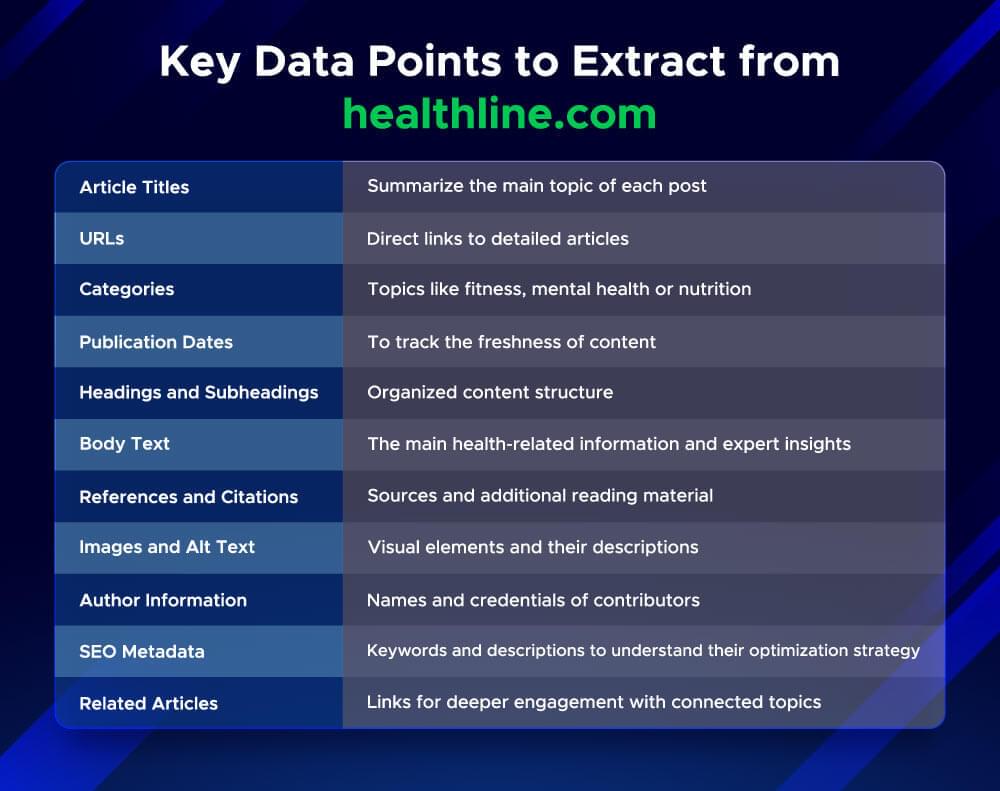

Key Data Points to Extract from healthline.com

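When scraping healthline.com, focus on the following valuable data points, which are the ones the scrapers in this guide extract:

- Article Titles: The headline of each article.

- Article Links: The URL of each article, used to visit the individual pages.

- Descriptions: The short summary shown for each article on listing pages.

- Bylines: The byline block at the top of each article page.

- Body Content: The main text of each article.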

Gathering all this information gives you a full picture of the website’s content, which is super useful for research or health projects. The next section explains how the Crawlbase Crawling API makes scraping this data easy.

Crawlbase Crawling API for healthline.com Scraping

Scraping healthline.com requires handling JavaScript-rendered content, which can be tricky. The Crawlbase Crawling API takes care of JavaScript rendering, proxies and other technicalities for you.

Why Use Crawlbase Crawling API?

The Crawlbase Crawling API is perfect for scraping healthline.com because:

- Handles JavaScript Rendering: Renders dynamic websites like healthline.com before returning the HTML.

- Automatic Proxy Rotation: Prevents IP blocks by rotating proxies.

- Error Handling: Handles CAPTCHAs and website restrictions.

- Easy Integration with Python: The Crawlbase Python library makes it simple to call the API from your scripts.

- Free Trial: Offers 1,000 free requests for an easy start.

Crawlbase Python Library

Crawlbase also has a Python library that makes it easy to integrate the API into your projects. You’ll need an access token, which is available after signing up. Follow this example to send a request to the Crawlbase Crawling API:

```python
from crawlbase import CrawlingAPI
```
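The example above stops after its import line. Below is a minimal sketch of a complete request, assuming the crawlbase package’s `CrawlingAPI({"token": ...})` constructor and its `get(url, options)` method, which returns a dict with `status_code` and `body` keys. `YOUR_JS_TOKEN` is a placeholder and `fetch_html` is a hypothetical helper name of our own. The client is passed in as a parameter so the helper can be tried without network access.

```python
from typing import Any, Optional


def fetch_html(api: Any, url: str, options: Optional[dict] = None) -> str:
    """Fetch a page through a Crawlbase CrawlingAPI client and return its HTML.

    `api` is expected to be a crawlbase.CrawlingAPI instance, or any object
    with a compatible get(url, options) method (handy for offline testing).
    """
    response = api.get(url, options or {})
    if response["status_code"] != 200:
        raise RuntimeError(
            f"Request for {url} failed with status {response['status_code']}"
        )
    body = response["body"]
    # The library typically returns the body as bytes; decode it before parsing.
    if isinstance(body, bytes):
        return body.decode("utf-8", errors="replace")
    return body


# Example usage (needs `pip install crawlbase` and your own JS token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_JS_TOKEN"})
#   html = fetch_html(api, "https://www.healthline.com/")
```

Raising on a non-200 status keeps failed requests from silently producing empty data further down the pipeline.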

Key Notes:

- Use a JavaScript (JS) Token for scraping dynamic content.

- Crawlbase supports static and dynamic content scraping with dedicated tokens.

- With the Python library, you can scrape and extract data without worrying about JavaScript rendering or proxies.

Now, let’s get started with setting up your Python environment to scrape healthline.com.

Setting Up Your Python Environment

Before you start scraping healthline.com, you need to set up your Python environment. This step ensures that you have all the tools and libraries you need to run your scraper.

1. Install Python and Required Libraries

Download and install Python from the official Python website. Once Python is installed, you can use pip, Python’s package manager, to install the libraries:

```bash
pip install crawlbase beautifulsoup4 pandas
```

- Crawlbase: Handles the interaction with the Crawlbase Crawling API.

- BeautifulSoup: For parsing HTML and extracting required data.

- Pandas: Helps structure and store scraped data in CSV files or other formats.

2. Choose an IDE

An Integrated Development Environment (IDE) makes coding more efficient. Popular options include:

- PyCharm: A powerful tool with debugging and project management features.

- Visual Studio Code: Lightweight and beginner-friendly with plenty of extensions.

- Jupyter Notebook: Ideal for testing and running small scripts interactively.

3. Create a New Project

Set up a project folder and create a Python file where you will write your scraper script. For example:

```bash
mkdir healthline_scraper
```

Once your environment is ready, you’re all set to start building your scraper for healthline.com. In the next sections, we’ll go step by step through writing the scraper for listing pages and article pages.

Scraping Healthline.com Article Listings

To scrape article listings from healthline.com, we’ll use the Crawlbase Crawling API for dynamic JavaScript rendering. Let’s break this down step by step, with professional yet easy-to-understand code examples.

1. Inspecting the HTML Structure

Before writing code, open healthline.com and navigate to an article listing page. Use the browser’s developer tools (usually accessible by pressing F12) to inspect the HTML structure.

Example of an article link structure:

```html
<div class="css-1hm2gwy">
```

Identify elements such as:

- Article titles: Found in an `a` tag with class `css-17zb9f8`.

- Links: Found in the `href` attribute of the `a` tag.

- Description: Found in a `div` element with class `css-1evntxy`.

2. Writing the Healthline.com Listing Scraper

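We’ll use the Crawlbase Crawling API to fetch the page content and BeautifulSoup to parse it. To handle the JavaScript-rendered content, we’ll pass the Crawling API’s ajax_wait and page_wait parameters: ajax_wait tells the browser to wait for AJAX requests to finish, and page_wait sets how many milliseconds it waits before capturing the HTML. Both parameters are described in the Crawlbase Crawling API documentation.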

```python
from crawlbase import CrawlingAPI
```
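The listing example above is cut off after its import line. Here is a minimal sketch of the listing scraper, assuming the selectors identified during inspection (`div.css-1hm2gwy` as the card container, `a.css-17zb9f8` for the title link, `div.css-1evntxy` for the description). These are auto-generated class names that may change whenever Healthline updates its front end. The 5000 ms `page_wait`, the base URL used to resolve relative links, and the function names `parse_listing` and `scrape_listing` are our own assumptions. Parsing is kept separate from fetching so it can be checked against saved HTML.

```python
from typing import Any, Dict, List
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Assumed Crawlbase options: wait for AJAX requests to finish, then give the
# page 5000 ms to render before the HTML is captured (JS token required).
LISTING_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}


def parse_listing(html: str, base_url: str = "https://www.healthline.com") -> List[Dict[str, str]]:
    """Extract title, link and description from each article card."""
    soup = BeautifulSoup(html, "html.parser")
    articles = []
    for card in soup.select("div.css-1hm2gwy"):
        link_tag = card.select_one("a.css-17zb9f8")
        if link_tag is None:
            continue  # skip containers that hold no article link
        desc_tag = card.select_one("div.css-1evntxy")
        articles.append({
            "title": link_tag.get_text(strip=True),
            # urljoin leaves absolute URLs unchanged and resolves relative ones.
            "link": urljoin(base_url, link_tag.get("href", "")),
            "description": desc_tag.get_text(strip=True) if desc_tag else "",
        })
    return articles


def scrape_listing(api: Any, url: str) -> List[Dict[str, str]]:
    """Fetch a listing page through a Crawlbase CrawlingAPI client and parse it."""
    response = api.get(url, LISTING_OPTIONS)
    if response["status_code"] != 200:
        raise RuntimeError(f"Request for {url} failed with status {response['status_code']}")
    return parse_listing(response["body"].decode("utf-8", errors="replace"))


# Example usage (needs crawlbase and a JS token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_JS_TOKEN"})
#   articles = scrape_listing(api, LISTING_URL)  # the listing page you inspected
```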

3. Storing Data in a CSV File

You can use the pandas library to save the scraped data into a CSV file for easy access.

```python
import pandas as pd
```
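The pandas snippet above stops after its import. A minimal sketch of the storage step follows, assuming each scraped row is a dict with `title`, `link` and `description` keys (our own naming) and a hypothetical helper called `save_to_csv`. It also drops rows with a duplicate link, a choice we added because the same article may show up more than once when you scrape several listing pages.

```python
from typing import Dict, List

import pandas as pd


def save_to_csv(rows: List[Dict[str, str]], path: str = "healthline_articles.csv") -> pd.DataFrame:
    """Write scraped listing rows to a CSV file and return the saved DataFrame."""
    # Fixing the columns keeps a consistent header even if rows is empty.
    df = pd.DataFrame(rows, columns=["title", "link", "description"])
    df = df.drop_duplicates(subset="link")
    df.to_csv(path, index=False, encoding="utf-8")
    return df
```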

4. Complete Code

Combining everything, here’s the full scraper:

```python
from crawlbase import CrawlingAPI
```

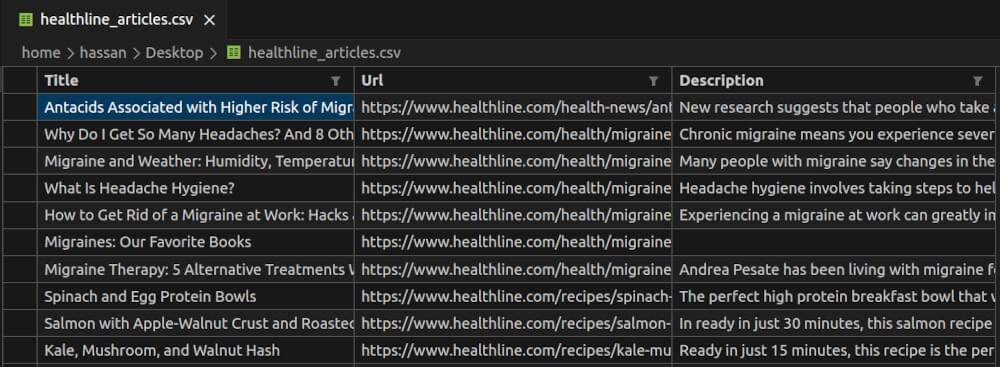

healthline_articles.csv Snapshot:

Scraping Healthline.com Article Page

After collecting the listing of articles, the next step is to scrape details from individual article pages. Each article page typically contains detailed content, such as the title, publication date, and main body text. Here’s how to extract this data efficiently using the Crawlbase Crawling API and Python.

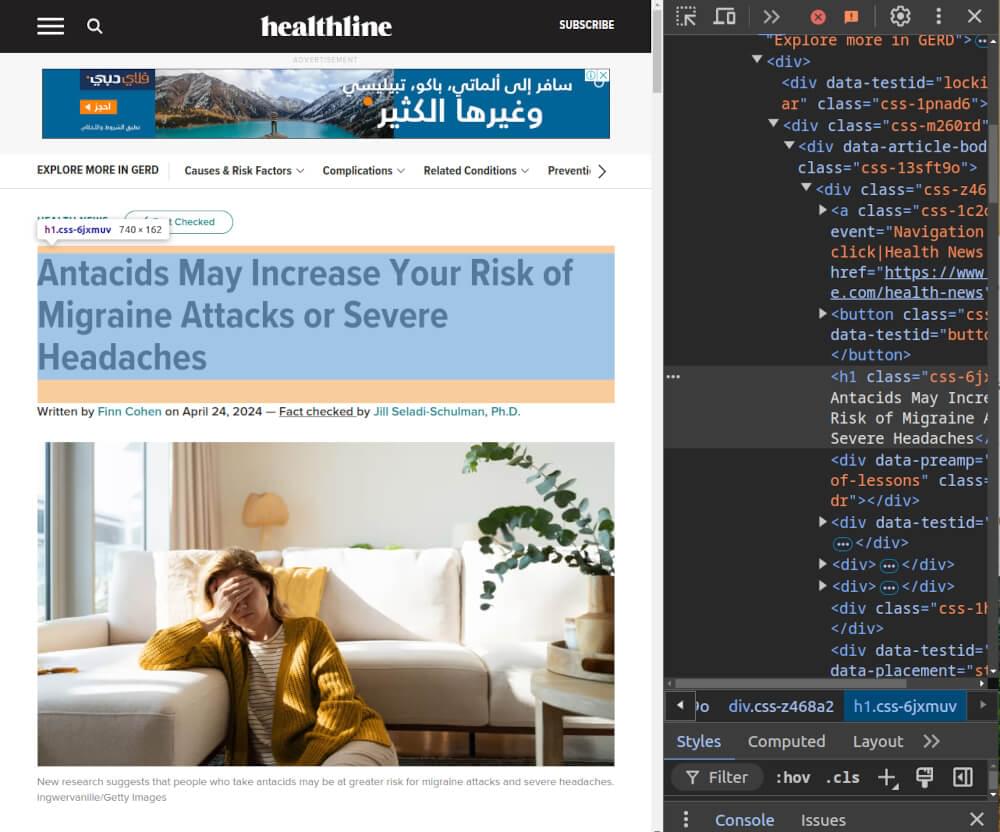

1. Inspecting the HTML Structure

Open an article page from healthline.com in your browser and inspect the page source using developer tools (F12).

Look for:

- Title: Found in the `<h1>` tag with class `css-6jxmuv`.

- Byline: Found in a `div` with attribute `data-testid="byline"`.

- Body Content: Found in the `article` tag with class `article-body`.

2. Writing the Healthline.com Article Scraper

We’ll fetch the article’s HTML using the Crawlbase Crawling API and extract the desired information using BeautifulSoup.

```python
from crawlbase import CrawlingAPI
```
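The article example above is truncated after its import line. A minimal sketch of the article scraper follows, assuming the selectors found during inspection (`h1.css-6jxmuv`, `div[data-testid="byline"]`, `article.article-body`). As with the listing page, these generated class names are a snapshot and may change. The `ajax_wait`/`page_wait` values, the field names, and the function names `parse_article` and `scrape_article` are our own assumptions. Missing elements yield empty strings instead of exceptions, so one odd page doesn’t stop a batch.

```python
from typing import Any, Dict

from bs4 import BeautifulSoup

# Assumed Crawlbase options so the fully rendered article is returned.
ARTICLE_OPTIONS = {"ajax_wait": "true", "page_wait": 5000}


def _text(soup: BeautifulSoup, selector: str, sep: str = " ") -> str:
    """Return the stripped text of the first match, or '' if nothing matches."""
    tag = soup.select_one(selector)
    return tag.get_text(separator=sep, strip=True) if tag else ""


def parse_article(html: str, url: str = "") -> Dict[str, str]:
    """Extract title, byline and body text from a Healthline article page."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "url": url,
        "title": _text(soup, "h1.css-6jxmuv"),
        "byline": _text(soup, 'div[data-testid="byline"]'),
        # Join text blocks with newlines so paragraphs stay separated.
        "content": _text(soup, "article.article-body", sep="\n"),
    }


def scrape_article(api: Any, url: str) -> Dict[str, str]:
    """Fetch one article through a Crawlbase CrawlingAPI client and parse it."""
    response = api.get(url, ARTICLE_OPTIONS)
    if response["status_code"] != 200:
        raise RuntimeError(f"Request for {url} failed with status {response['status_code']}")
    return parse_article(response["body"].decode("utf-8", errors="replace"), url)
```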

3. Storing Data in a CSV File

After scraping multiple article pages, save the extracted data into a CSV file using pandas.
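The original post gives no code for this step. Here is a minimal sketch, assuming each scraped article is a dict with `url`, `title`, `byline` and `content` keys (our naming) and that `scrape_one` is whatever function you use to scrape a single article page. `scrape_many` and `save_details` are hypothetical helper names. The one-second pause between requests is an arbitrary default meant to keep the load on the server low, and failed pages are skipped with a printed message rather than aborting the run.

```python
import time
from typing import Callable, Dict, Iterable, List

import pandas as pd


def scrape_many(
    urls: Iterable[str],
    scrape_one: Callable[[str], Dict[str, str]],
    delay_seconds: float = 1.0,
) -> List[Dict[str, str]]:
    """Scrape each URL in turn, pausing between requests and skipping failures."""
    results = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay_seconds)  # space out requests to avoid overloading the site
        try:
            results.append(scrape_one(url))
        except Exception as exc:  # one broken page shouldn't stop the batch
            print(f"Skipping {url}: {exc}")
    return results


def save_details(rows: List[Dict[str, str]], path: str = "healthline_articles_details.csv") -> pd.DataFrame:
    """Write article details to a CSV file and return the saved DataFrame."""
    df = pd.DataFrame(rows, columns=["url", "title", "byline", "content"])
    df.to_csv(path, index=False, encoding="utf-8")
    return df


# Example usage:
#   rows = scrape_many(article_urls, my_scrape_function)
#   save_details(rows)
```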

4. Complete Code

Here’s the combined code for scraping multiple articles and saving them to a CSV file:

```python
from crawlbase import CrawlingAPI
```

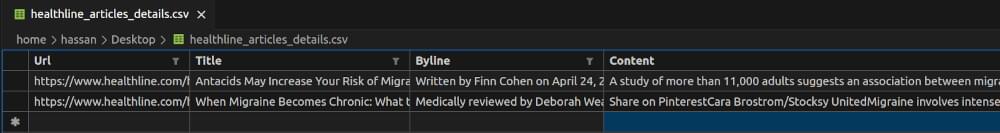

healthline_articles_details.csv Snapshot:

Final Thoughts

Scraping healthline.com can unlock valuable insights by extracting health-related content for research, analysis, or application development. Using tools like the Crawlbase Crawling API makes this process easier, even for websites with JavaScript rendering. With the step-by-step guidance provided in this blog, you can confidently scrape article listings and detailed pages while handling complexities like pagination and structured data storage.

Always remember to use the data responsibly and ensure your scraping activities comply with legal and ethical guidelines, including the website’s terms of service. If you want to do more web scraping, check out our guides on scraping other key websites.

📜 How to Scrape Monster.com

📜 How to Scrape Groupon

📜 How to Scrape TechCrunch

📜 How to Scrape X.com Tweet Pages

📜 How to Scrape Clutch.co

If you have questions or feedback, our support team can help with web scraping. Happy scraping!

Frequently Asked Questions

Q. Is it legal to scrape data from healthline.com?

Scraping data from any website, including healthline.com, depends on the website’s terms of service and applicable laws in your region. Always review the site’s terms and conditions before scraping. For ethical scraping, ensure that your activities do not overload the server, and avoid using the data for purposes that violate legal or ethical guidelines.

Q. What challenges might I face when scraping healthline.com?

Healthline.com uses JavaScript to render content dynamically, which means the content might not be immediately available in the HTML source. Additionally, you might encounter rate-limiting or anti-scraping mechanisms. Tools like Crawlbase Crawling API help you overcome these challenges with features like JavaScript rendering and proxy rotation.

Q. Can I scrape other similar websites using the same method?

Yes, the techniques and tools outlined in this blog can be adapted to scrape other JavaScript-heavy websites. By using Crawlbase Crawling API and inspecting the HTML structure of the target website, you can customize your scraper to collect data from similar platforms efficiently.