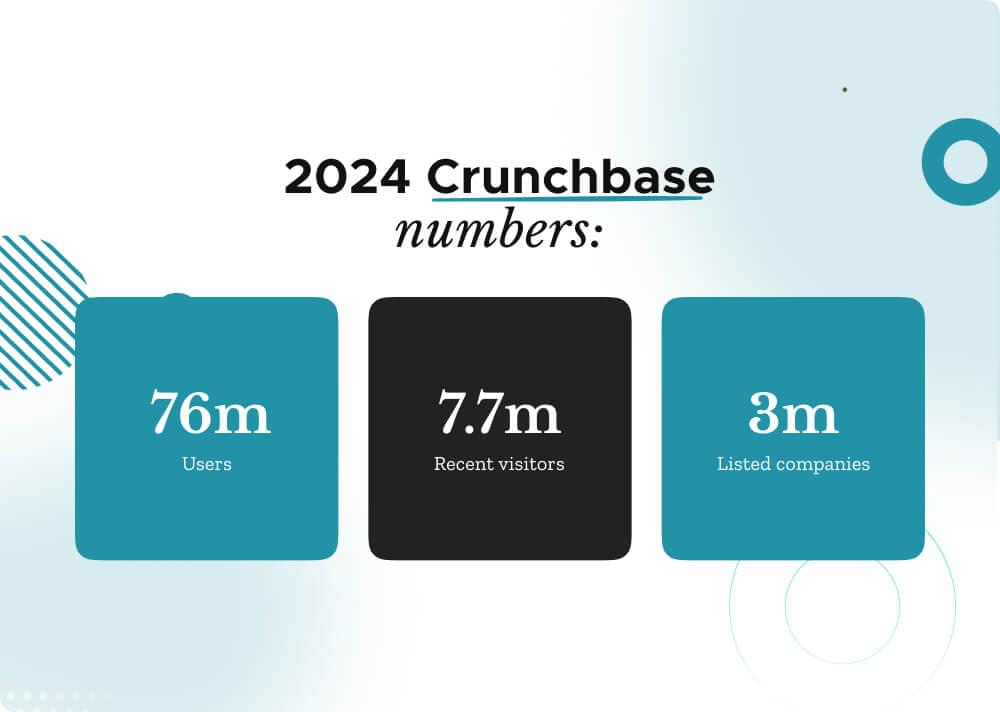

This blog is a step-by-step tutorial on how to scrape Crunchbase data. Crunchbase is one of the main providers of private-company prospecting and research solutions, with a track record of serving over 75 million individuals worldwide. In February 2024 alone, Crunchbase attracted 7.7 million visitors seeking insights into startups and business ventures.

Crunchbase is also the go-to platform for entrepreneurs, investors, sales professionals, and market researchers alike, boasting a repository of data including 3 million listed companies.

However, accessing and extracting this data for analysis or research purposes can be challenging. In this guide, we’ll walk through the steps for scraping Crunchbase.

We’ll explore how to create a Crunchbase scraper using Python, BeautifulSoup, and other relevant libraries. We’ll also integrate the Crawling API from Crawlbase, a tool designed to get past obstacles such as captchas and blocks. Let’s get into the project details.

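1. Prerequisites to Scrape Crunchbase data

2. Installing Dependencies

3. Crawling Crunchbase webpage

4. Scraping Company Title

5. Scraping Company Description

6. Scraping the Company Location

7. Scraping Company Employees

8. Scraping Company Website URL

9. Scraping Company Rank

10. Scraping Company Founders

11. Complete the Crunchbase Scraper code

12. Frequently Asked Questions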

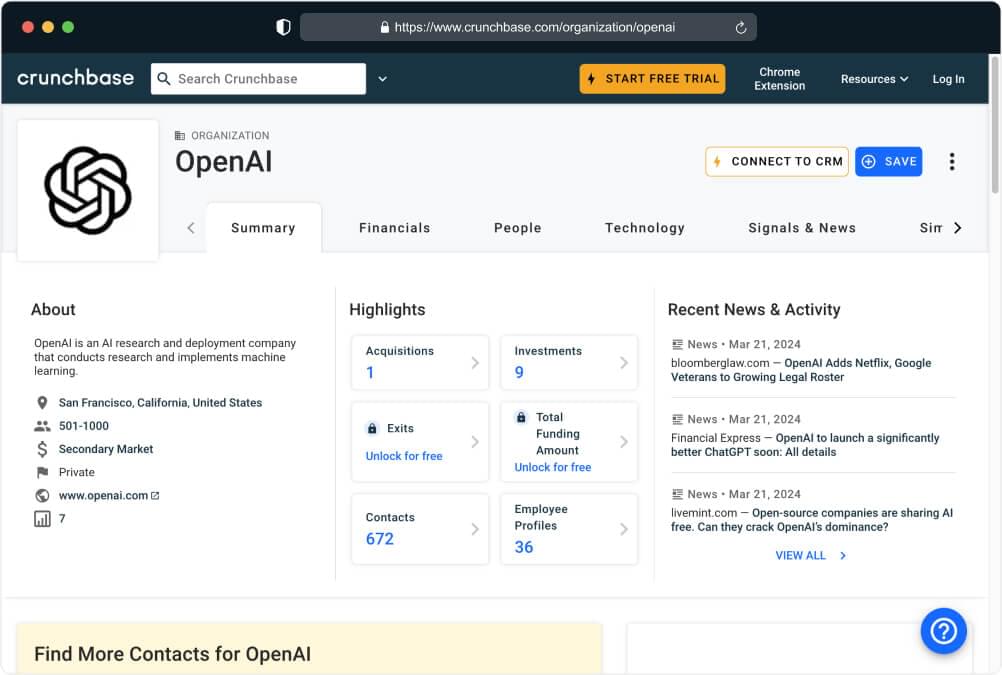

This project aims to help you build a Crunchbase scraper using Python and BeautifulSoup to scrape company profile data. It uses Crawlbase’s Crawling API to bypass captchas and potential blocks and fetch page content. Our goal is to extract various details about companies listed on Crunchbase for market research, investment analysis, competitor analysis, partnership identification, recruitment, and more.

For this purpose we will be targeting this particular Crunchbase URL as our example.

Here’s the list of data points we will be extracting from Crunchbase company pages:

- Title: The name of the company, for example, “OpenAI”.

- Description: A brief overview or summary of the company’s activities, such as “A research organization specializing in artificial intelligence”.

- Location: The geographical location where the company is based, like “San Francisco, California, USA”.

- Employees: The approximate number of people employed by the company, for instance, “Over 100 employees”.

- Company URL: The web address of the company’s official website, for example, “https://www.openai.com/”.

- Rank: The position or rank of the company within Crunchbase’s database, such as “Ranked 5th in Crunchbase”.

- Founded Date: The date when the company was established, like “Founded in 2015”.

- Founders: The names of the individuals who founded the company, for instance, “Elon Musk and Sam Altman”.

By extracting Crunchbase data, the script provides a structured view of each company profile listed on Crunchbase. This data can help stakeholders understand market dynamics, competitive landscapes, and industry trends, and so support strategic decision-making.

With this understanding of the project scope and data extraction process, we can now move forward with setting up the necessary prerequisites and preparing your coding environment.

1. Prerequisites to Scrape Crunchbase data

Before we proceed to scraping Crunchbase with Python and utilizing the power of Crawlbase API, it’s important to ensure that you have the necessary prerequisites in place. These foundational elements will equip you with the knowledge, tools, and access needed to kickstart your web scraping projects effectively.

Let’s discuss the key prerequisites required to begin your web scraping project, specifically focusing on extracting data from Crunchbase:

Basic Python Knowledge

Familiarity with the Python programming language is essential. This includes understanding how to write and execute Python code.

Here’s a recommendation: If you’re new to Python, there are plenty of resources available to help you learn. Websites like Codecademy, Coursera, and Udemy offer introductory Python courses suitable for beginners. Additionally, reading Python documentation and practicing coding exercises on platforms like LeetCode or HackerRank can solidify your understanding.

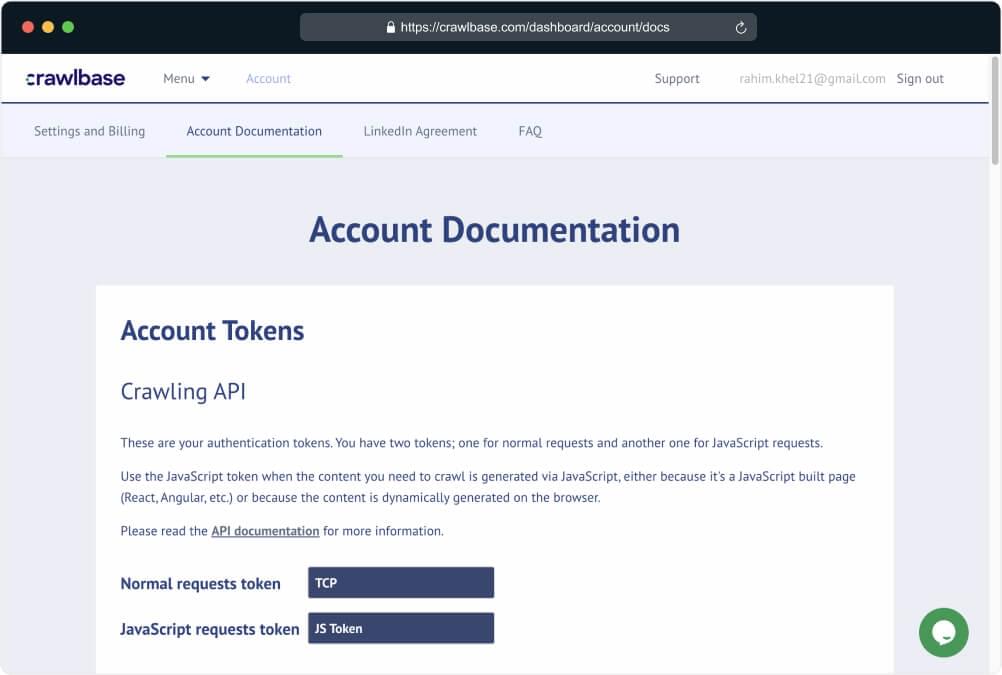

Crawlbase API Account

How to Create an Account: Visit the Crawlbase website and navigate to the sign-up page. Fill out the required information to create your account. Once registered, you’ll get your API credentials from the Account Documentation page.

Importance of API Credentials: Treat your API credentials as sensitive information. They’re essential for accessing Crawlbase’s services and performing web scraping tasks. Keep them secure and avoid sharing them with unauthorized individuals.
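One common way to keep the token out of your source code is to read it from an environment variable. The sketch below assumes a variable named CRAWLBASE_TOKEN; that name is hypothetical and chosen for this example, not a Crawlbase convention.

```python
import os


def load_api_token(env_var="CRAWLBASE_TOKEN"):
    """Return the API token stored in an environment variable.

    CRAWLBASE_TOKEN is a hypothetical variable name used for this sketch.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Crawlbase token"
        )
    return token
```

With this in place, the token never has to appear in a file you might commit or share.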

Choosing an Integrated Development Environment (IDE)

Recommendation: For Python development, popular IDEs include PyCharm, VSCode (Visual Studio Code), and Jupyter Notebook. Each IDE has its advantages, so you may want to explore them to find the one that best suits your needs.

IDE Installation: Simply download and install your chosen IDE from their respective websites. Follow the installation instructions provided, and you’ll be ready to start coding in Python.

2. Installing Dependencies

First, make sure to install Python. Visit the official Python website and download the latest version compatible with your operating system. Alternatively, you can use package managers like Anaconda, which provide a comprehensive Python distribution along with popular packages and development environments.

Follow the installation instructions provided on the Python website or through the Anaconda installer. Ensure that Python is properly installed and configured on your system.

Packages Installation

To set up your Python environment for web scraping, open Command Prompt or Terminal after installing Python. Then, create a directory on your system to house your Python scraper code, either with the mkdir command or through your file explorer.

Next, install the required packages using pip, Python’s package manager, by running the following commands in your command prompt or terminal.

pip install crawlbase
pip install beautifulsoup4

These commands will download and install the required libraries:

- Crawlbase Python library: The Crawlbase library provides a convenient Python class designed as a wrapper for the Crawlbase API (Crawling API and more). This lightweight and dependency-free class enables seamless integration of Crawlbase’s web scraping functionalities into Python applications.

- BeautifulSoup: As one of the most widely used Python libraries for web scraping, Beautiful Soup simplifies HTML parsing with its intuitive features, making it easier to extract data from web pages.

3. Crawling Crunchbase webpage

Now that we have the important dependencies installed, let’s begin the coding process.

Extracting Crunchbase data involves fetching the HTML content of the desired pages using the Crawling API. Below are the steps to crawl a Crunchbase webpage using Python and the provided code snippet:

Import the CrawlingAPI Class:

- Import the CrawlingAPI class from the crawlbase library. This class enables interaction with the Crawling API.

Define the crawl Function:

- Create a function named crawl that takes two parameters: page_url (the URL of the Crunchbase webpage to crawl) and api_token (your Crawlbase API token).

Initialize the CrawlingAPI Object:

- Inside the crawl function, initialize the CrawlingAPI object by passing a dictionary with your API token as the value for the 'token' key.

Fetch the Page Content:

- Use the get() method of the CrawlingAPI object to fetch the HTML content of the specified page_url. Store the response in the response variable.

Check the Response Status:

- Check if the response status code (accessed using response['status_code']) equals 200, indicating a successful request.

Extract Data or Handle Errors:

- If the request is successful, extract and print the HTML content of the page (accessed using response["body"]). Otherwise, print an error message indicating the cause of the failure.

Specify the Page URL and API Token:

- In the __main__ block of your script, specify the Crunchbase page URL to crawl (page_url) and your Crawlbase API JavaScript token (api_token).

Call the crawl Function:

- Finally, call the crawl function with the specified page_url and api_token as arguments to initiate the crawling process.

Here’s the code implementing these steps. You can copy and paste the code and save it as a Python file in your directory, for example scraper.py.

from crawlbase import CrawlingAPI
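The steps above can be sketched roughly as follows. This is a minimal sketch rather than the exact listing: the optional api argument and the import inside the function are additions made here so the function can be tried with a stand-in client, and the sketch returns the HTML instead of printing it.

```python
def crawl(page_url, api_token, api=None):
    """Fetch a page through the Crawling API and return its HTML, or None on failure.

    The optional `api` argument is an addition for this sketch: it lets a
    stand-in client be passed in so the function can be tried offline.
    """
    if api is None:
        # Imported here so the sketch loads even before the package is installed.
        from crawlbase import CrawlingAPI
        api = CrawlingAPI({'token': api_token})

    response = api.get(page_url)

    if response['status_code'] == 200:
        body = response['body']
        # The body may arrive as bytes; decode it so callers always get text.
        if isinstance(body, bytes):
            return body.decode('utf-8', errors='replace')
        return body

    print(f"Request failed with status code {response['status_code']}")
    return None


# Example usage (placeholders, not real values):
# html = crawl('<Crunchbase company URL>', '<your Crawlbase JavaScript token>')
# print(html)
```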

Let’s execute the above code snippet. To run it, use the command below:

python scraper.py

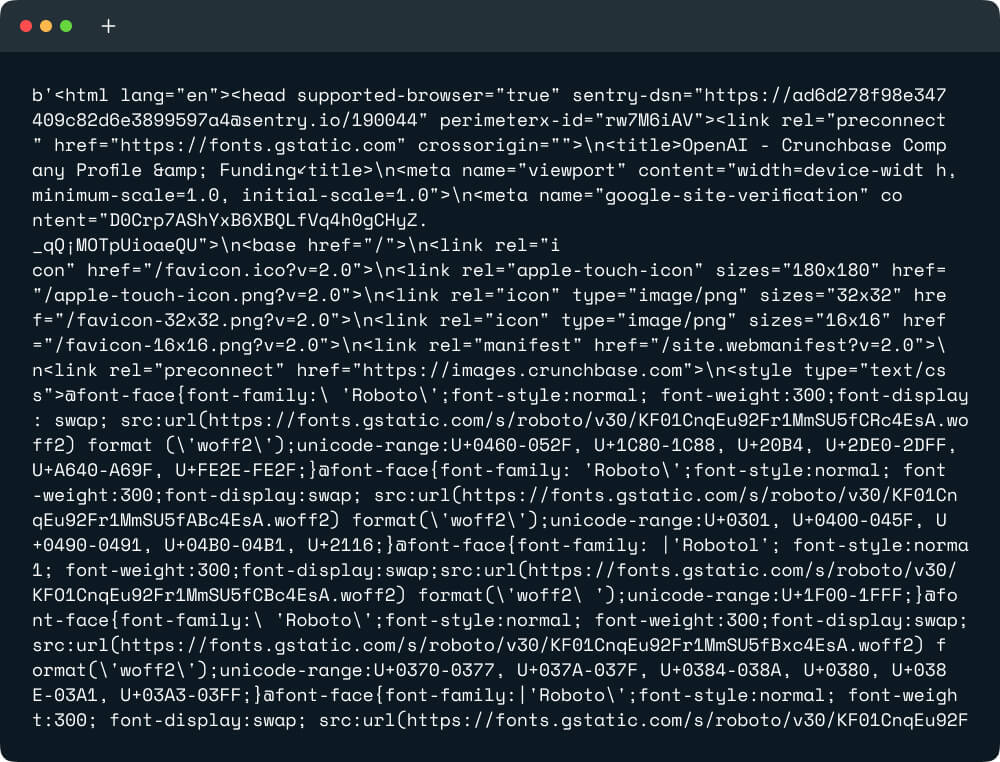

Output:

We have successfully crawled the HTML data of the Crunchbase webpage. Next, we will proceed to scraping Crunchbase data using the BeautifulSoup4 package in Python.

4. Scrape Crunchbase Company Title

Now, let’s focus on extracting the title of the company from the crawled HTML of the Crunchbase webpage. We’ll use the BeautifulSoup4 package for parsing the HTML content and locating the relevant information.

Import BeautifulSoup at the beginning of your Python script or function.

from bs4 import BeautifulSoup

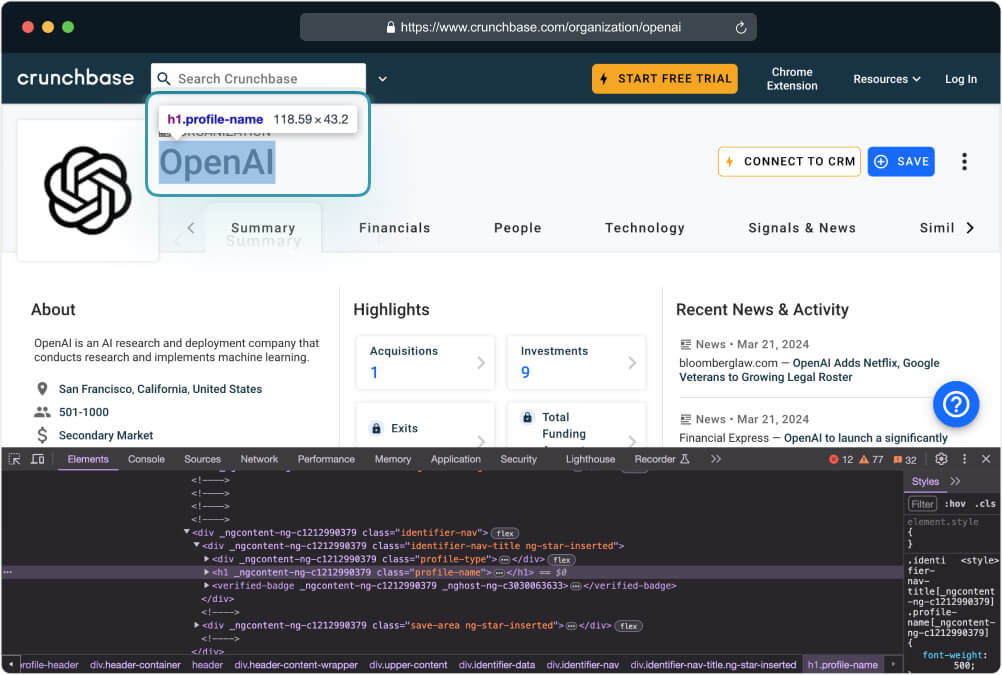

Identify the HTML element containing the company title. Inspect the Crunchbase webpage or view the HTML source to determine the appropriate selector.

Utilize BeautifulSoup selectors to target the title element within the webpage. This involves specifying the appropriate class that matches the desired element.

def scrape_data(response):

This code snippet finds the first <h1> element with the class “profile-name” in the parsed HTML stored in the variable soup. It then extracts the text inside that element and strips surrounding whitespace and newlines using the get_text(strip=True) method. Finally, it assigns the cleaned text to the variable title and returns it.
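As a rough illustration of that lookup, here is a minimal sketch. The function name scrape_title and the sample markup are made up for this example; only the h1 tag, the profile-name class, and get_text(strip=True) come from the description above.

```python
from bs4 import BeautifulSoup


def scrape_title(html):
    """Return the text of the first <h1 class="profile-name">, or None if absent."""
    soup = BeautifulSoup(html, 'html.parser')
    heading = soup.find('h1', class_='profile-name')
    return heading.get_text(strip=True) if heading else None


# Hand-written stand-in for a crawled page, not real Crunchbase markup.
sample_html = '<html><body><h1 class="profile-name">\n  OpenAI\n</h1></body></html>'
print(scrape_title(sample_html))
```

Returning None when the heading is missing keeps the scraper from crashing if the page layout changes.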

5. Scrape Crunchbase Company Description

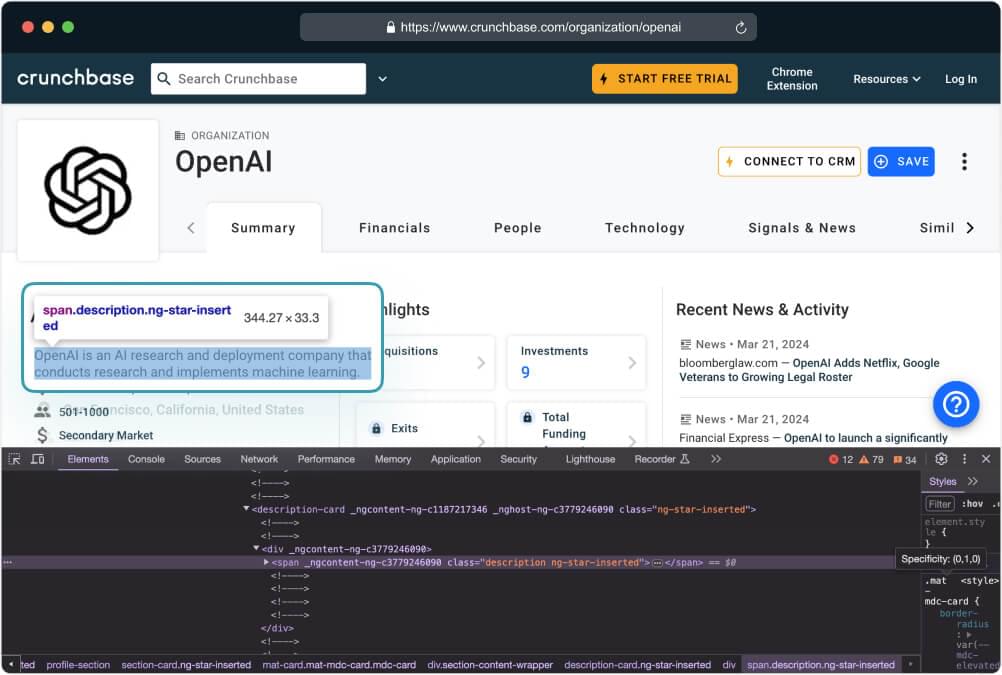

In this section, we’ll focus on extracting the description of the company from the crawled HTML of the Crunchbase webpage.

def scrape_data(response):

This code extracts the description of a Crunchbase page by searching for a specific <span> tag with a class attribute of ‘description’ in the parsed HTML content. It then returns this description text in a dictionary format.
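A minimal sketch of that step might look like this. The function name scrape_description and the sample markup are assumptions for illustration; the span tag and description class are the ones named above.

```python
from bs4 import BeautifulSoup


def scrape_description(html):
    """Return {'description': text} from the first <span class="description">."""
    soup = BeautifulSoup(html, 'html.parser')
    span = soup.find('span', class_='description')
    # Fall back to None rather than raising when the element is missing.
    return {'description': span.get_text(strip=True) if span else None}


# Hand-written stand-in markup, not a real Crunchbase page.
sample_html = (
    '<span class="description"> A research organization specializing in '
    'artificial intelligence </span>'
)
print(scrape_description(sample_html))
```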

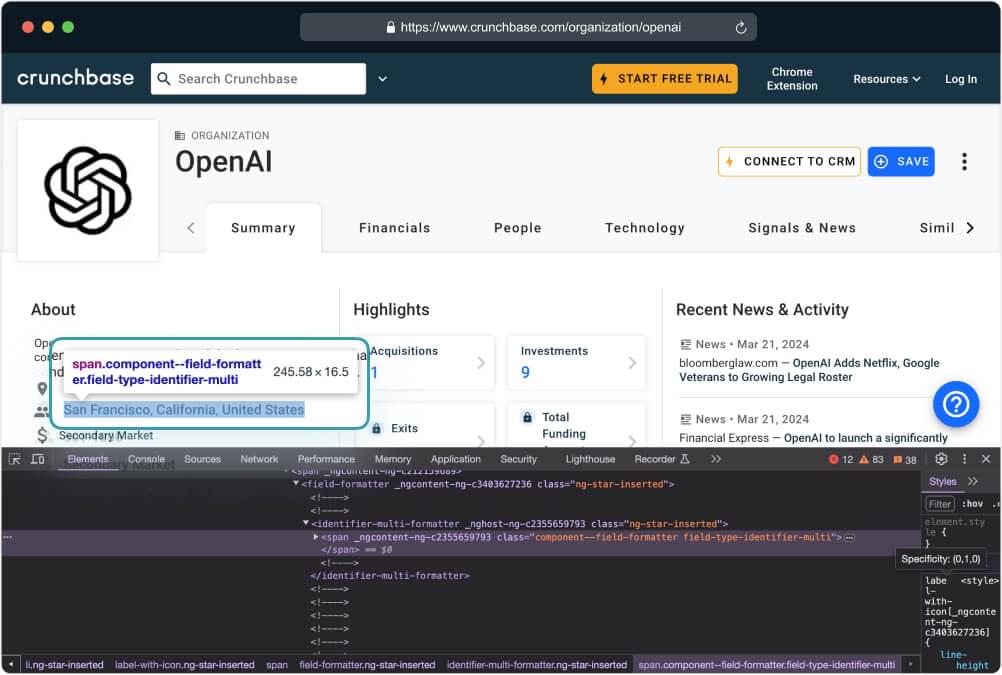

6. Scrape Crunchbase’s Company Location

The company location provides valuable information about where the company is based, which can be crucial for various analyses and business purposes.

When scraping the company location, we’ll continue to leverage the BeautifulSoup4 package for parsing the HTML content and locating the relevant information. The location of the company is typically found within the webpage’s content, often in a specific HTML element as shown below:

def scrape_data(response):

This code snippet extracts the location information from a specific element on the page, particularly from a <li> element within the .section-content-wrapper. It then returns this information as part of a dictionary containing other extracted data. Additionally, it handles exceptions by printing an error message if any occur during the scraping process.
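The try-except pattern described here can be sketched as below. The exact selector is an assumption: we take the first li.ng-star-inserted inside .section-content-wrapper, which may not match the live page. The function name and sample markup are also made up for this example.

```python
from bs4 import BeautifulSoup

# Assumed selector: the first matching <li> inside .section-content-wrapper.
LOCATION_SELECTOR = '.section-content-wrapper li.ng-star-inserted'


def scrape_location(html):
    """Return {'location': text}, or {} if anything goes wrong while scraping."""
    try:
        soup = BeautifulSoup(html, 'html.parser')
        # select_one returns None when nothing matches, so get_text raises
        # AttributeError and the except branch below takes over.
        location = soup.select_one(LOCATION_SELECTOR).get_text(strip=True)
        return {'location': location}
    except Exception as error:
        print(f"An error occurred while scraping: {error}")
        return {}


# Hand-written stand-in markup, not a real Crunchbase page.
sample_html = """
<div class="section-content-wrapper">
  <ul>
    <li class="ng-star-inserted">San Francisco, California, USA</li>
  </ul>
</div>
"""
print(scrape_location(sample_html))
```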

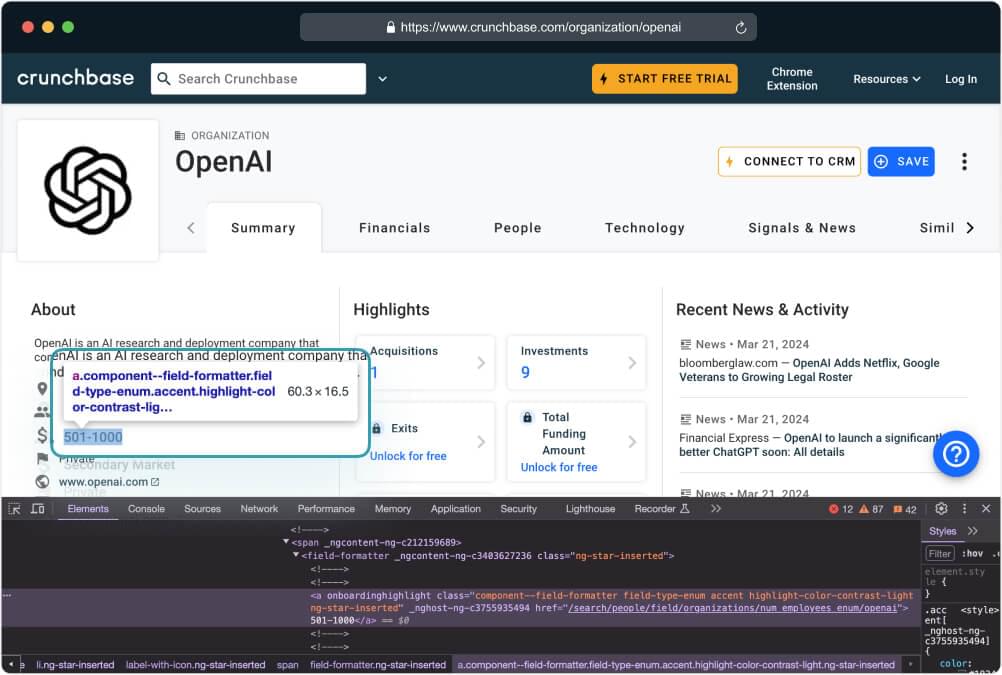

7. Scrape Crunchbase’s Company employees

The number of employees is a crucial metric that provides insights into the size and scale of the company. Similar to previous sections, we’ll utilize the BeautifulSoup4 package for parsing the HTML content and locating the relevant information. The number of employees is typically found below the location section as shown below:

def scrape_data(response):

The provided code defines a function named scrape_data that extracts data from a web page’s HTML content. It uses BeautifulSoup to parse the HTML and targets the element holding the number of employees on a Crunchbase company page. Within a try-except block, the code attempts to extract the text content of this element using the CSS selector '.section-content-wrapper li.ng-star-inserted:nth-of-type(2)'.

If successful, the extracted number of employees is returned as part of a dictionary. In case of any exceptions during the scraping process, the code handles them by printing an error message and returning an empty dictionary, indicating the absence of extracted data.
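To make the positional part of that selector concrete, here is a small sketch. The helper nth_item_text is hypothetical and introduced only for this example; it builds the same selector with the position as a parameter and checks for a missing element instead of using try-except.

```python
from bs4 import BeautifulSoup


def nth_item_text(html, position):
    """Return the text of the <li> at the given 1-based position, or None."""
    selector = f'.section-content-wrapper li.ng-star-inserted:nth-of-type({position})'
    element = BeautifulSoup(html, 'html.parser').select_one(selector)
    return element.get_text(strip=True) if element else None


def scrape_employees(html):
    """Return {'employees': text}, or {} when the second list item is missing."""
    text = nth_item_text(html, 2)
    return {'employees': text} if text is not None else {}


# Hand-written stand-in markup, not a real Crunchbase page.
sample_html = """
<div class="section-content-wrapper">
  <ul>
    <li class="ng-star-inserted">San Francisco, California, USA</li>
    <li class="ng-star-inserted">101-250</li>
  </ul>
</div>
"""
print(scrape_employees(sample_html))
```

Because :nth-of-type depends on the order of the list items, a selector like this needs rechecking whenever the page layout changes.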

8. Scrape Crunchbase’s Company Website URL

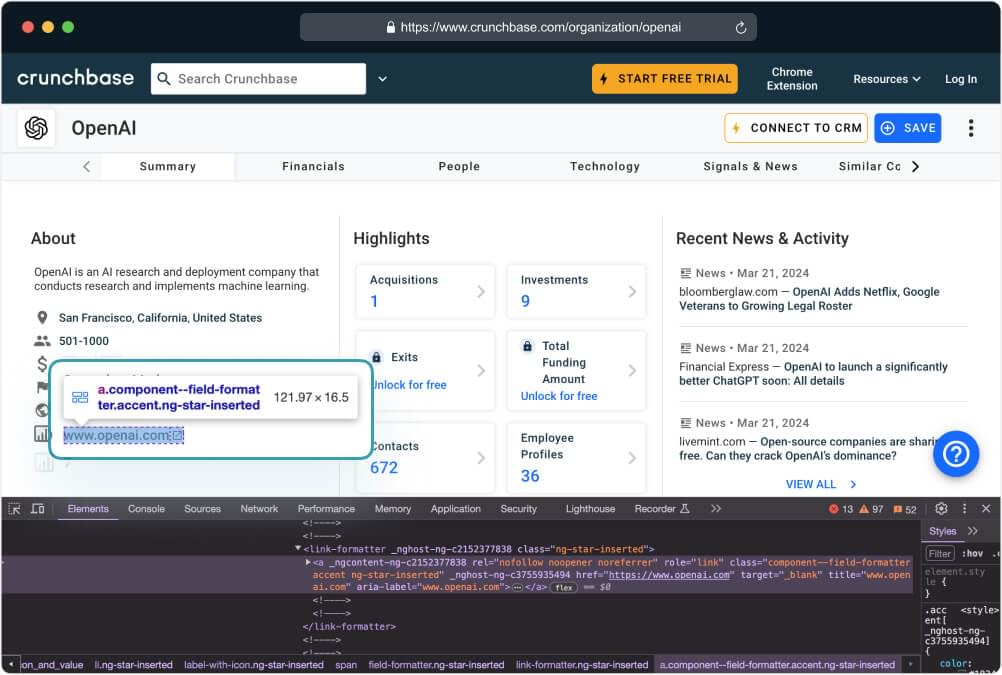

The company website URL is a fundamental piece of information that offers direct access to the company’s online presence. Typically, the company website URL is located within the “About” section as you can see below:

Much like previous sections, we’ll use the BeautifulSoup4 package to navigate through the HTML content of the Crunchbase webpage and pinpoint the data using a CSS selector.

def scrape_data(response):

This code defines a function named scrape_data that attempts to extract the company website URL from the HTML content of a Crunchbase webpage. It parses the HTML content and uses the CSS selector .section-content-wrapper li.ng-star-inserted:nth-of-type(5) a[role="link"] to locate the <a> (anchor) element representing the company URL.
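A minimal sketch of this lookup follows. Reading the address from the anchor’s href attribute is an assumption made here, as are the function name and the sample markup; the selector is the one quoted above.

```python
from bs4 import BeautifulSoup

URL_SELECTOR = (
    '.section-content-wrapper li.ng-star-inserted:nth-of-type(5) a[role="link"]'
)


def scrape_company_url(html):
    """Return {'company_url': href} for the matched anchor, or None if absent."""
    soup = BeautifulSoup(html, 'html.parser')
    link = soup.select_one(URL_SELECTOR)
    # Assumption: the address lives in the href attribute of the anchor.
    return {'company_url': link.get('href') if link else None}


# Hand-written stand-in markup with placeholder items, not a real Crunchbase page.
sample_html = """
<div class="section-content-wrapper">
  <ul>
    <li class="ng-star-inserted">item 1</li>
    <li class="ng-star-inserted">item 2</li>
    <li class="ng-star-inserted">item 3</li>
    <li class="ng-star-inserted">item 4</li>
    <li class="ng-star-inserted">
      <a role="link" href="https://www.openai.com/">www.openai.com</a>
    </li>
  </ul>
</div>
"""
print(scrape_company_url(sample_html))
```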

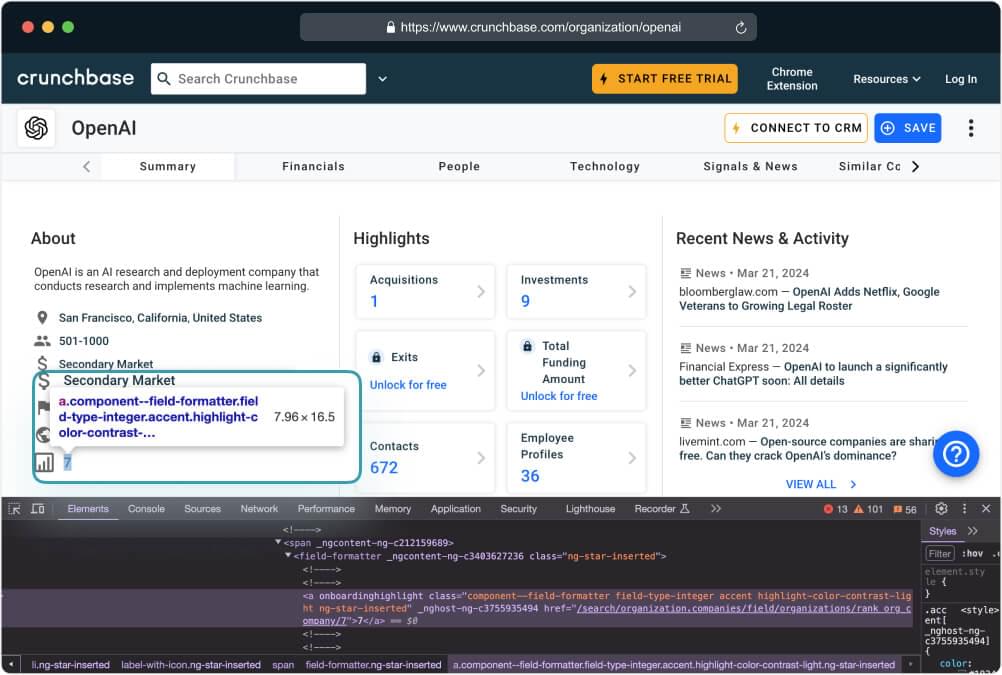

9. Scrape Crunchbase’s Company Rank

The company rank provides insights into the standing or position of the company within its industry or sector. The company rank is found within the About section often labeled or identified as “Rank” or similar.

def scrape_data(response):

This code snippet attempts to extract the rank of the company from the HTML content. It utilizes BeautifulSoup’s select_one() method along with a CSS selector .section-content-wrapper li.ng-star-inserted:nth-of-type(6) span to locate the specific HTML element representing the rank.

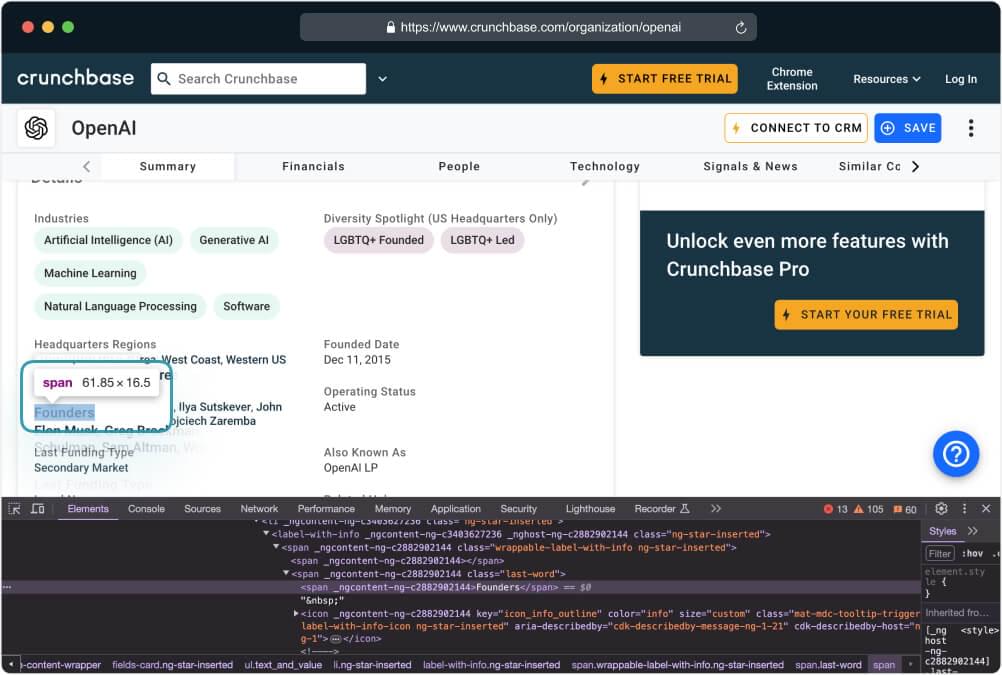

10. Scrape Crunchbase’s Company Founders

Typically, information about company founders is listed within the About section of the company webpage, often labeled or identified as “Founders” or similar.

Below is the code snippet demonstrating how to extract the company founders’ information using BeautifulSoup:

def scrape_data(response):

This code snippet extracts the founders’ information from the HTML content. It utilizes BeautifulSoup’s select_one() method along with a CSS selector .mat-mdc-card.mdc-card .text_and_value li:nth-of-type(5) field-formatter to locate the specific HTML element showing the founders’ information.
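Here is a minimal sketch of that extraction using the selector quoted above. Splitting the text on commas to get a list of names is an extra step added for this example, and the function name and sample markup are made up.

```python
from bs4 import BeautifulSoup

FOUNDERS_SELECTOR = (
    '.mat-mdc-card.mdc-card .text_and_value li:nth-of-type(5) field-formatter'
)


def scrape_founders(html):
    """Return {'founders': [names]}; the list is empty when nothing matches."""
    soup = BeautifulSoup(html, 'html.parser')
    element = soup.select_one(FOUNDERS_SELECTOR)
    if element is None:
        return {'founders': []}
    text = element.get_text(strip=True)
    # Assumption for this sketch: founder names are separated by commas.
    return {'founders': [name.strip() for name in text.split(',') if name.strip()]}


# Hand-written stand-in markup with placeholder items, not a real Crunchbase page.
sample_html = """
<div class="mat-mdc-card mdc-card">
  <ul class="text_and_value">
    <li>item 1</li>
    <li>item 2</li>
    <li>item 3</li>
    <li>item 4</li>
    <li><field-formatter>Elon Musk, Sam Altman</field-formatter></li>
  </ul>
</div>
"""
print(scrape_founders(sample_html))
```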

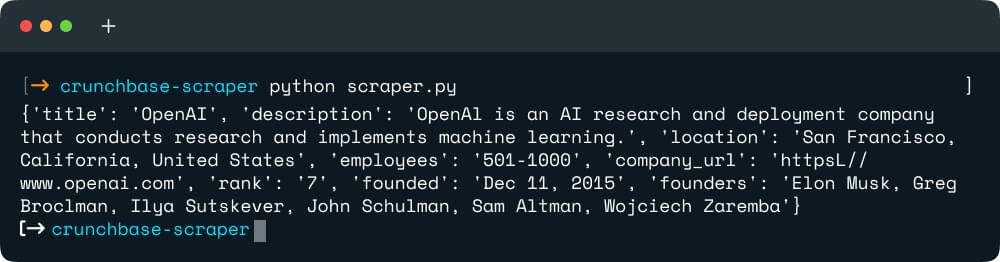

11. Complete the Crunchbase Scraper code

Now that we’ve explored each component of the scraping process and developed individual functions to extract specific data points from a Crunchbase webpage, it’s time to bring it all together into a cohesive script.

The complete code below integrates functions for crawling and scraping data, making it easy to gather various information about a company from its Crunchbase profile.

from crawlbase import CrawlingAPI
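One way to assemble the pieces is sketched below. It is not the exact listing: here scrape_data takes the HTML text rather than the response object, the location selector is an assumption, and the import of CrawlingAPI sits inside crawl so the parsing code can be tried on its own. The other selectors are the ones quoted in the earlier sections.

```python
from bs4 import BeautifulSoup

ITEM = '.section-content-wrapper li.ng-star-inserted'

# Selectors quoted in this tutorial, except 'location', which is an assumption.
TEXT_SELECTORS = {
    'location': ITEM,  # assumed: the first list item
    'employees': f'{ITEM}:nth-of-type(2)',
    'rank': f'{ITEM}:nth-of-type(6) span',
    'founders': '.mat-mdc-card.mdc-card .text_and_value li:nth-of-type(5) field-formatter',
}
URL_SELECTOR = f'{ITEM}:nth-of-type(5) a[role="link"]'


def text_or_none(element):
    """Return the stripped text of an element, or None if it was not found."""
    return element.get_text(strip=True) if element else None


def crawl(page_url, api_token):
    """Fetch the page through the Crawling API; return HTML text or None."""
    from crawlbase import CrawlingAPI  # needs `pip install crawlbase`
    api = CrawlingAPI({'token': api_token})
    response = api.get(page_url)
    if response['status_code'] != 200:
        print(f"Request failed with status code {response['status_code']}")
        return None
    body = response['body']
    return body.decode('utf-8', errors='replace') if isinstance(body, bytes) else body


def scrape_data(html):
    """Collect every field into one dictionary; missing fields become None."""
    soup = BeautifulSoup(html, 'html.parser')
    data = {
        'title': text_or_none(soup.find('h1', class_='profile-name')),
        'description': text_or_none(soup.find('span', class_='description')),
    }
    for field, selector in TEXT_SELECTORS.items():
        data[field] = text_or_none(soup.select_one(selector))
    link = soup.select_one(URL_SELECTOR)
    data['company_url'] = link.get('href') if link else None
    return data


# Example usage (placeholders, not real values):
# html = crawl('<Crunchbase company URL>', '<your Crawlbase JavaScript token>')
# if html:
#     print(scrape_data(html))
```

Keeping the selectors in one dictionary means a layout change on the page only requires editing that table, not the scraping logic.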

Running the code with the command python scraper.py should print the extracted details for the target company.

This tutorial serves as a comprehensive guide for creating a Crunchbase scraper using Python and the Crawlbase API. It shows step-by-step instructions to easily extract important information from Crunchbase company profiles.

This tutorial is freely available, and we encourage you to use it in your projects. It is a solid starting point whether you’re new to web scraping or a seasoned developer aiming to improve your skills.

Feel free to adapt and customize the code provided to suit your specific requirements. You can use it not only for scraping Crunchbase data but also as a guide for scraping other websites of interest. You’ll be ready to handle various web scraping tasks with confidence by using the concepts and techniques taught in this tutorial.

Additionally, if you are interested in exploring more projects similar to this one, you can browse through the following tutorials for additional inspiration:

How to Build Wayfair Price Tracker

Scrape Wikipedia in Python - Ultimate Tutorial

For more customization options and access to advanced features, please check the Crawlbase Crawling API documentation. Should you have any inquiries or feedback, feel free to reach out to our support team.

Frequently Asked Questions

Q. Should I use the Crunchbase API or the Crawling API?

If you would like to access Crunchbase data officially and in a structured manner, it’s recommended to utilize the official Crunchbase API. However, please note that the data accessible through the Crunchbase API may have limitations.

On the other hand, if you require greater freedom and flexibility, or if your data needs to extend beyond Crunchbase, you might find the Crawling API provided by Crawlbase to be a more suitable option.

Q. How do I pull data from Crunchbase?

- Sign up for Crawlbase and get your JavaScript token

- Choose a target website or URL.

- Send an HTTP/HTTPS request to the API

- Integrate with Python and BS4 to scrape specific data

- Export the data to JSON, CSV, or Excel
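For the last step, saving scraped records as JSON or CSV can be done with Python’s standard library. This is a minimal sketch; the function names and file paths are placeholders chosen for this example.

```python
import csv
import json


def save_json(record, path):
    """Write one scraped record (a dictionary) to a JSON file."""
    with open(path, 'w', encoding='utf-8') as handle:
        json.dump(record, handle, indent=2, ensure_ascii=False)


def save_csv(records, path):
    """Write a non-empty list of records to CSV, one row per company."""
    fieldnames = list(records[0].keys())
    with open(path, 'w', newline='', encoding='utf-8') as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)


# Example usage with a hand-written record:
# record = {'title': 'OpenAI', 'location': 'San Francisco, California, USA'}
# save_json(record, 'company.json')
# save_csv([record], 'companies.csv')
```

A CSV file written this way opens directly in Excel.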

Q. Can Crunchbase be scraped?

Scraping thousands of data points on Crunchbase can be done in just a few minutes using the Crawlbase Crawling API.

With a Crawlbase account, you can utilize the JavaScript token to crawl the entire HTML code of the page. Then, to extract specific content, you can develop a scraper using Python BeautifulSoup or any other third-party parser of your choice.

Q. What is Crunchbase used for?

Crunchbase is a platform used worldwide to find business information. It’s popular among entrepreneurs, investors, salespeople, and researchers. It’s a great place to learn about companies, startups, investors, funding, acquisitions, and industry trends.

Q. What does Crunchbase do?

Crunchbase offers a business prospecting platform that utilizes real-time company data. Designed to help salespeople, CEOs, and individuals alike, the platform makes it easy to find, track, and keep an eye on companies. This enables more efficient deal discovery and acquisition.

Q. How to extract financial data from Crunchbase automatically?

Financial data is hidden behind a login session on Crunchbase. To extract the data automatically, you will have to do the following:

- Log in to the website manually in a real browser

- Extract the session cookies from the browser

- Send the cookies to the Crawling API using the cookies parameter.

Once logged in, you can apply the same scraping techniques demonstrated in this tutorial using Python to retrieve the financial data.
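As a rough sketch of the last step, the session cookies can be joined into a single string and sent with the request. The helper names here are hypothetical, and the sketch assumes the Python client’s get() method accepts an options dictionary as its second argument and that the cookies parameter takes name=value pairs separated by semicolons; please check the Crawling API documentation before relying on either.

```python
def build_cookie_string(cookies):
    """Join a {name: value} mapping into 'name1=value1; name2=value2'."""
    return '; '.join(f'{name}={value}' for name, value in cookies.items())


def crawl_with_cookies(page_url, api_token, cookies, api=None):
    """Request a page and pass the browser session cookies along with it.

    The optional `api` argument is an addition for this sketch so that a
    stand-in client can be used when trying the function offline.
    """
    if api is None:
        from crawlbase import CrawlingAPI  # needs `pip install crawlbase`
        api = CrawlingAPI({'token': api_token})
    # Assumption: the options dictionary is forwarded as request parameters.
    return api.get(page_url, {'cookies': build_cookie_string(cookies)})


# Example usage (placeholders, not real values):
# cookies = {'<cookie name>': '<cookie value copied from your browser>'}
# response = crawl_with_cookies('<Crunchbase URL>', '<your token>', cookies)
```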