Automating product research with APIs used to be something only developers could pull off. But things have changed. Today, some tools enable anyone, even without a technical background, to design and run their own automation workflows.

In this walkthrough, we’ll show you how to use n8n, an open-source automation platform, together with the Crawlbase Crawling API to automatically collect product details from eCommerce websites like Amazon and send them directly into Google Sheets.

No coding skills needed. You don’t need to learn Python or any other programming language.

Just a simple workflow that keeps running quietly in the background.

By the end, you’ll have a compact but powerful setup for Amazon data scraping that updates itself whenever you add new ASINs or schedule an automated run.

This kind of setup works perfectly for:

- eCommerce researchers collecting large sets of data

- Competitor analysts tracking price changes

- Amazon sellers tracking variations

- Data analysts studying market trends over time

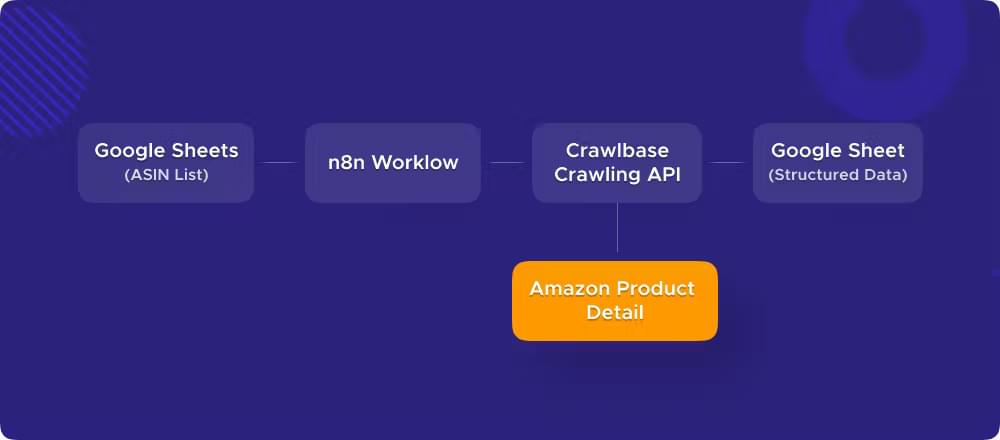

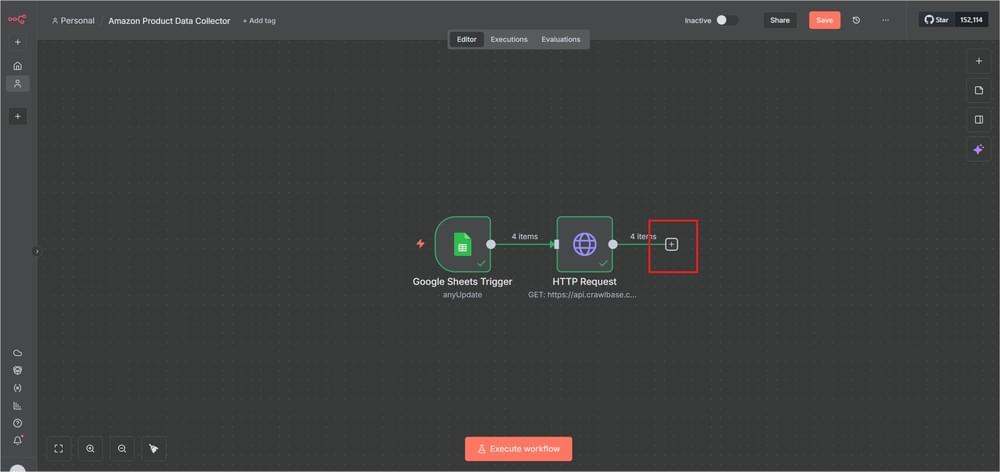

Here’s what the entire process looks like from start to finish:

It’s a simple setup: you start with a list of ASINs in Google Sheets, n8n runs the workflow, Crawlbase fetches the data from Amazon, and the results get written back automatically.

Simple Checklist for Automating Amazon Product Research

Before setting up the workflow, gather a few basics. You won’t be coding anything here, just connecting a few easy tools.

n8n

Use this to build and run the automation. Host it locally or sign up for n8n Cloud. It’s a drag-and-drop workspace that links steps together, so no programming is needed.

Crawlbase account

Crawlbase does the crawling. It loads Amazon pages and returns clean, structured data, even for JavaScript-heavy sites. Create an account at Crawlbase and copy your API token from the dashboard. We’ll use it in n8n soon.

Google account with Sheets API on

This lets n8n pull ASINs from a spreadsheet and write product details back in. You’ll connect your Google account the first time you add a Google Sheets node.

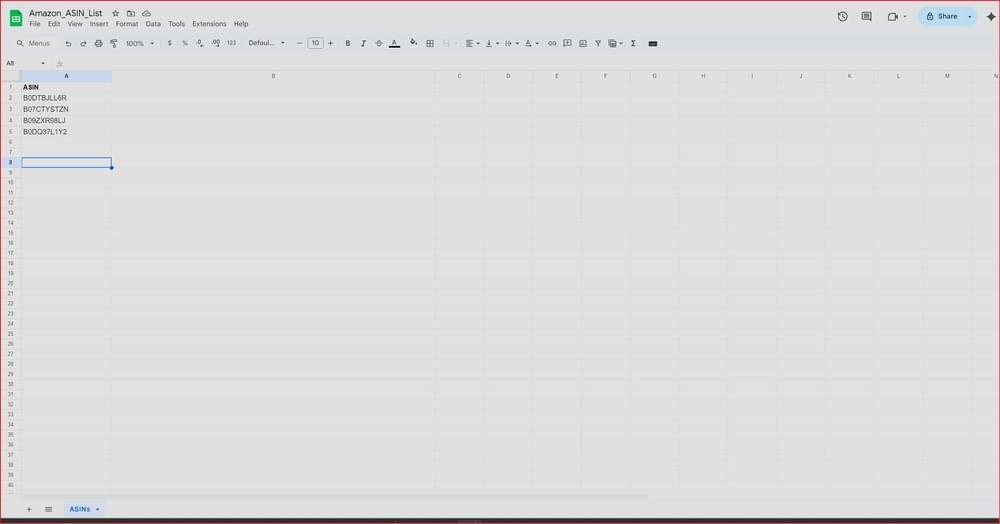

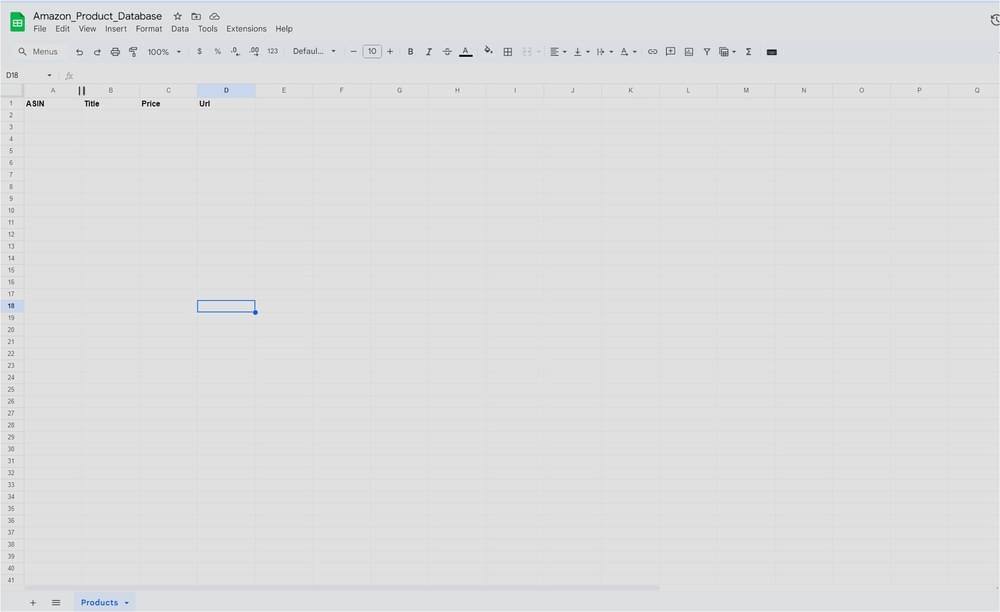

A Google Sheet with your ASIN list

Open a blank sheet and add one column with ASINs. Call it “ASIN List” or anything you like. Later, n8n will read from here and update rows automatically after each crawl.
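The workflow itself needs no code, but if you ever prepare the ASIN column from an export or a pasted list, a small cleanup pass can save failed crawls later. The sketch below is optional and rests on one assumption: that an ASIN is a 10-character string of uppercase letters and digits. The function name `clean_asins` and the sample values are made up for illustration.

```python
import re

# Assumption: an ASIN is exactly 10 uppercase letters or digits.
ASIN_PATTERN = re.compile(r"[A-Z0-9]{10}")


def clean_asins(raw_values):
    """Trim, uppercase, validate, and de-duplicate ASINs, keeping first-seen order."""
    seen = set()
    cleaned = []
    for value in raw_values:
        asin = str(value).strip().upper()
        # fullmatch rejects anything longer, shorter, or containing other characters
        if ASIN_PATTERN.fullmatch(asin) and asin not in seen:
            seen.add(asin)
            cleaned.append(asin)
    return cleaned


if __name__ == "__main__":
    # Hypothetical column contents, including a duplicate and an invalid entry
    column = [" b08n5wrwnw", "B08N5WRWNW", "not-an-asin", "B07XJ8C8F5 "]
    print(clean_asins(column))
```

Paste the cleaned values back into your ASIN column and the rest of the workflow stays the same.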

How to Build Your n8n Workflow for Amazon Product Research Automation

It’s time to put the pieces together. This is where we’ll connect your data sources and automate the entire eCommerce research process from end to end.

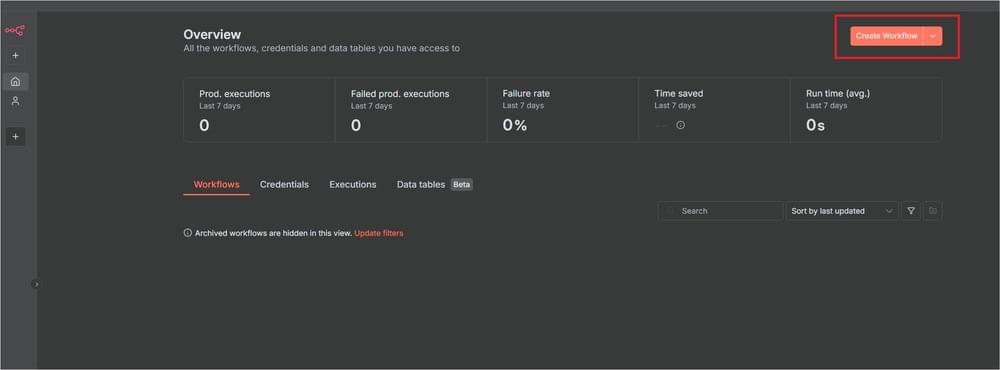

Step 1: Create a New Workflow in n8n

Log in to your n8n dashboard and click the Create Workflow button to start a fresh one.

Give it a clear name like “Amazon Product Data Collector”.

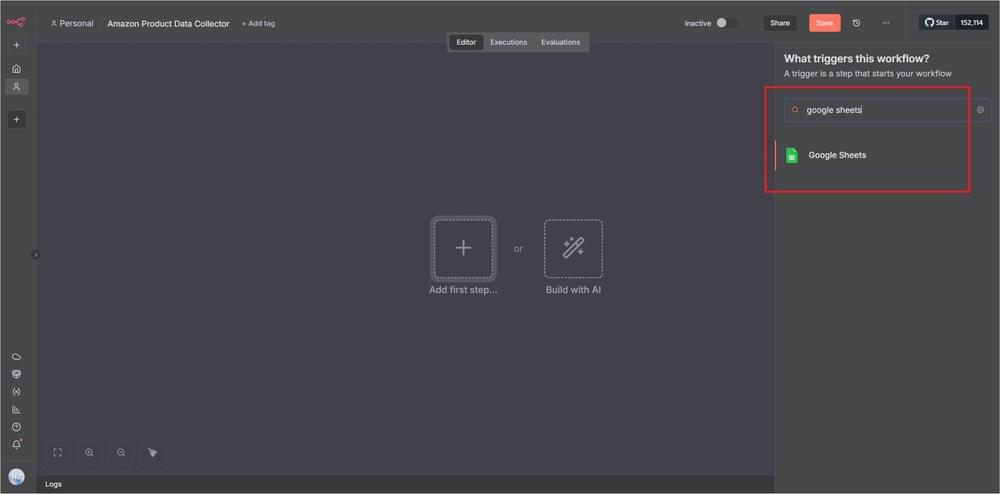

Step 2: Connect Google Sheets Node (ASIN List)

Let’s set up the trigger that starts the workflow whenever a new ASIN is added to your Google Sheet.

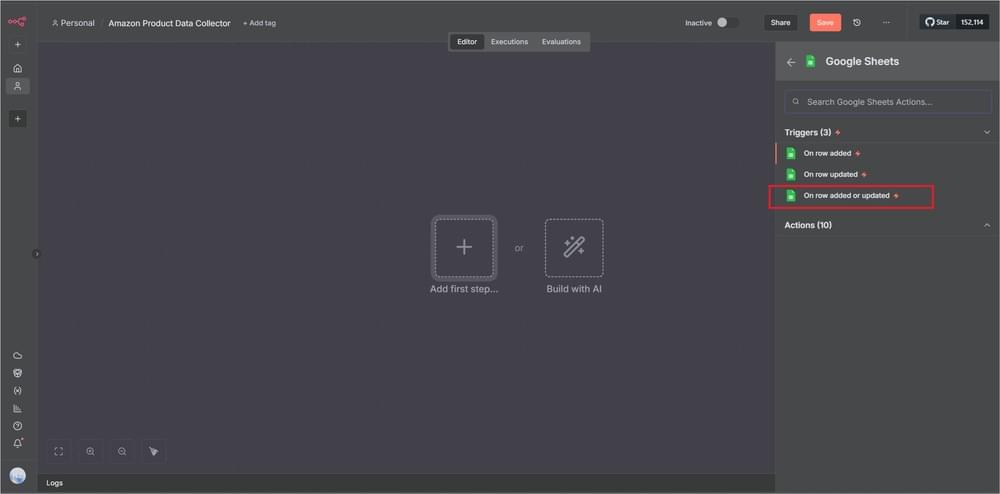

Search for Google Sheets in the panel and drag it into your workflow.

Choose the On row added or updated trigger so the workflow runs automatically when new data appears.

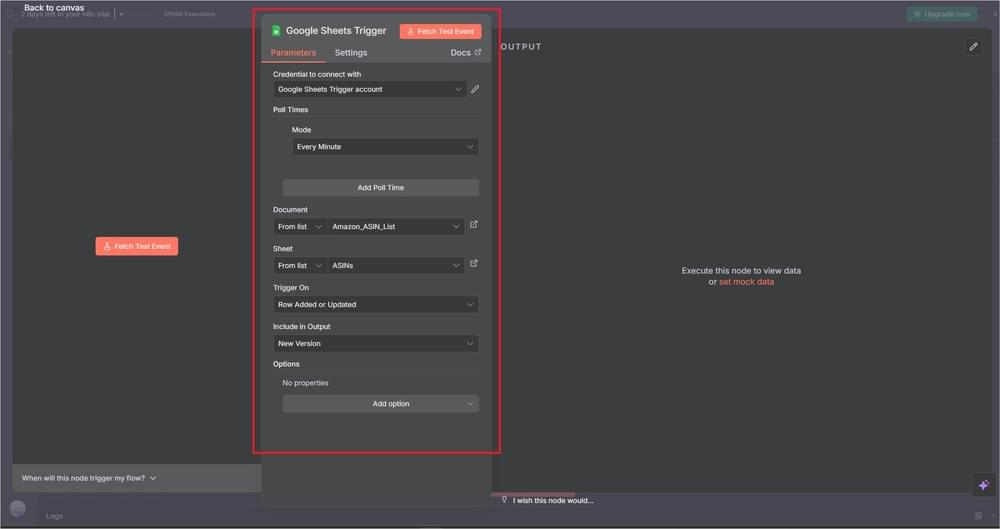

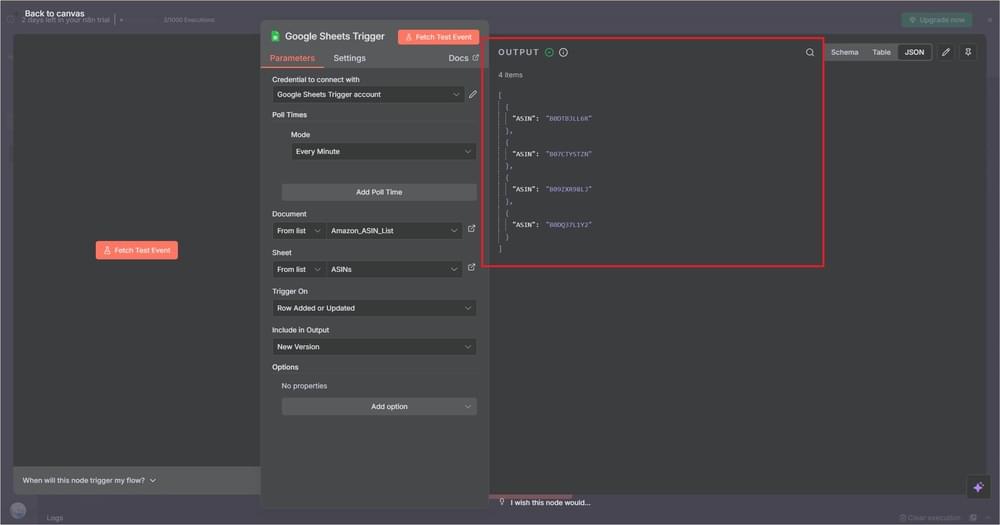

Authenticate with your Google account, then select your spreadsheet and worksheet.

Click Fetch Test Event to make sure the connection works correctly.

Now your workflow will know exactly where to pull ASINs from.

Step 3: Add the Crawlbase Crawling API Node

This part is important as it will allow our workflow to fetch live data from an eCommerce website like Amazon.

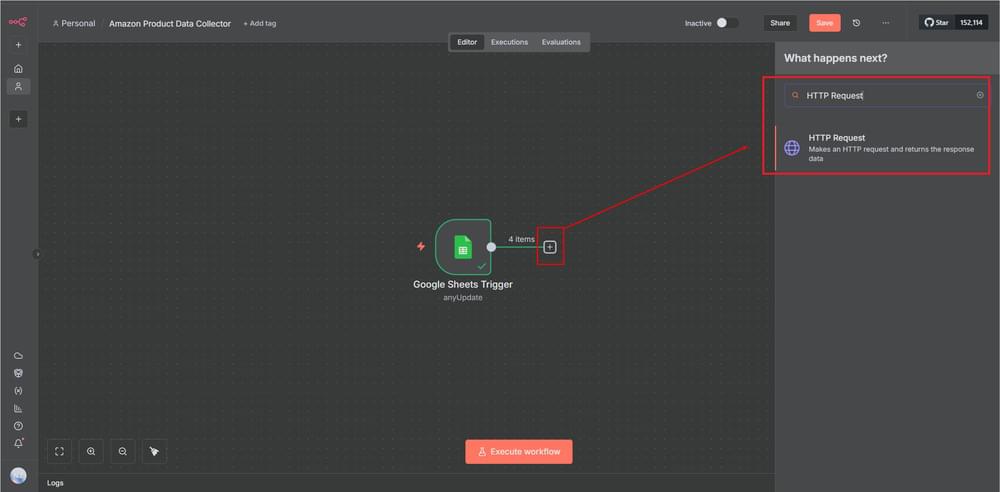

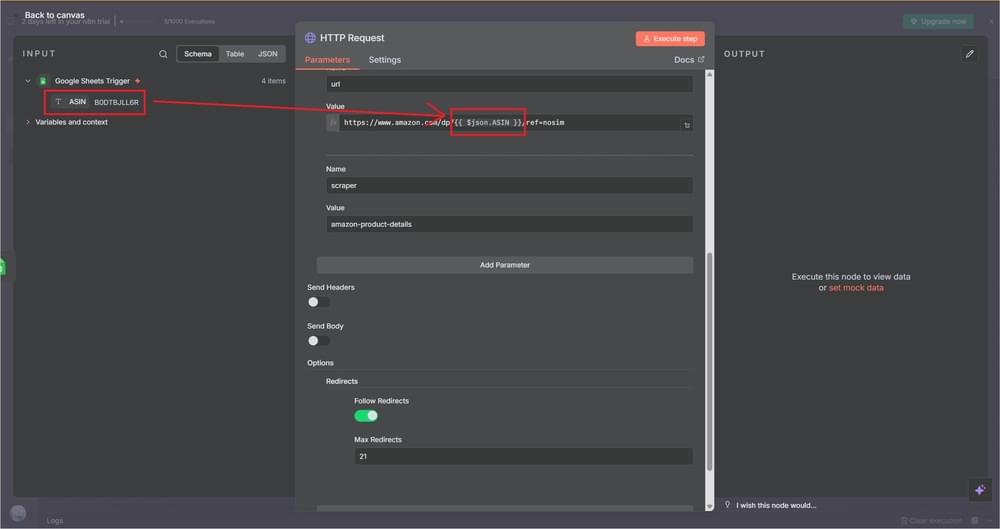

Since n8n doesn’t yet have a built-in Crawlbase node, we’ll use the HTTP Request node instead.

Add a new node and search for HTTP Request in the nodes panel.

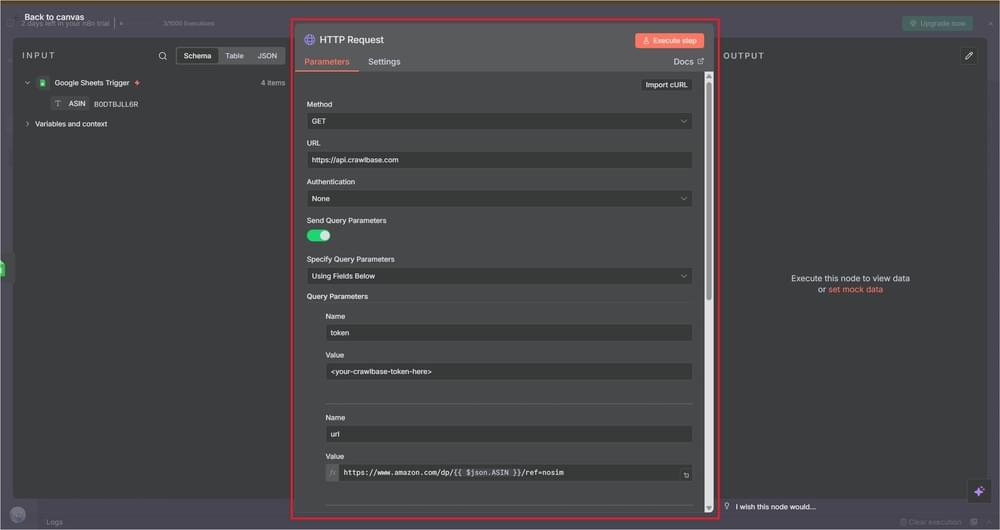

Open the HTTP Request node configuration panel, then fill out the following details:

Under Parameters

- Method: GET

- URL: https://api.crawlbase.com

- Authentication: None

- Send Query Parameters: Toggle ON

Next, under the Query Parameters section, add these fields exactly as shown below:

Query Parameters

| Name | Value | Description |

|---|---|---|

| token | <your-crawlbase-token-here> | Your Crawlbase API key. You can find this in your Crawlbase account dashboard. |

| url | https://www.amazon.com/dp/{{ $json.ASIN }}/ref=nosim | This dynamically builds the Amazon product URL using the ASIN from your Google Sheet. |

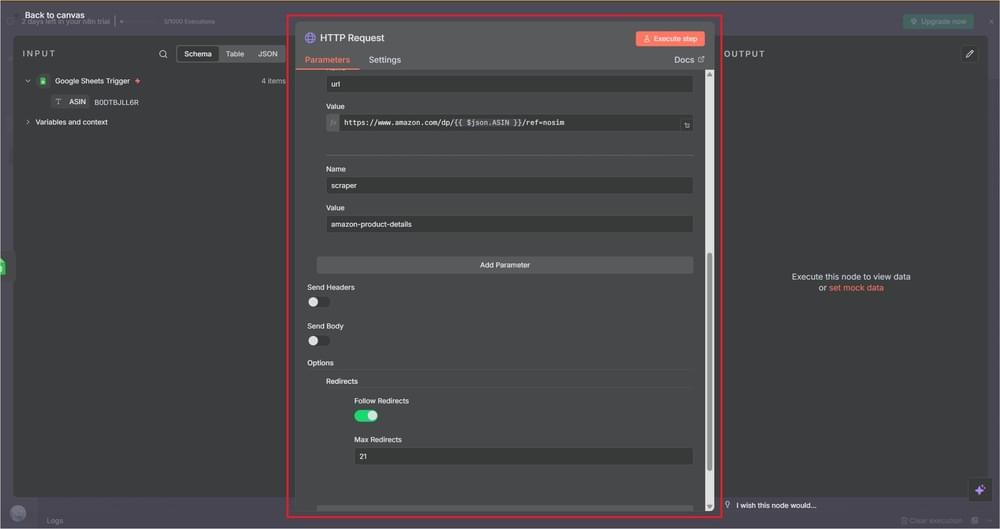

| scraper | amazon-product-details | This tells Crawlbase to use the built-in Amazon Product Details scraper. |

Alternatively, you can drag the ASIN from the previous Google Sheets node to dynamically build the URL.
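If you’re curious what the HTTP Request node ends up sending, here is a rough Python equivalent of the settings above. It only builds the request URL and sends nothing. The endpoint, the parameter names, and the Amazon URL pattern come from the table. The function name `build_crawlbase_url` and the sample token and ASIN are placeholders for illustration.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Endpoint taken from the URL field of the HTTP Request node
CRAWLBASE_ENDPOINT = "https://api.crawlbase.com"


def build_crawlbase_url(token, asin, scraper="amazon-product-details"):
    """Build the GET URL that mirrors the three query parameters in the table."""
    # Same pattern as the n8n expression https://www.amazon.com/dp/{{ $json.ASIN }}/ref=nosim
    product_url = f"https://www.amazon.com/dp/{asin}/ref=nosim"
    # urlencode percent-encodes the nested product URL so it survives as one parameter
    query = urlencode({"token": token, "url": product_url, "scraper": scraper})
    return f"{CRAWLBASE_ENDPOINT}/?{query}"


if __name__ == "__main__":
    # Placeholder token and ASIN, for illustration only
    request_url = build_crawlbase_url("YOUR_TOKEN", "B08N5WRWNW")
    print(request_url)
    # Decode the query string again to confirm each parameter round-trips
    print(parse_qs(urlparse(request_url).query))
```

n8n handles this encoding for you when Send Query Parameters is on, which is why the table values can be entered as plain text.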

Your setup should now look something like this:

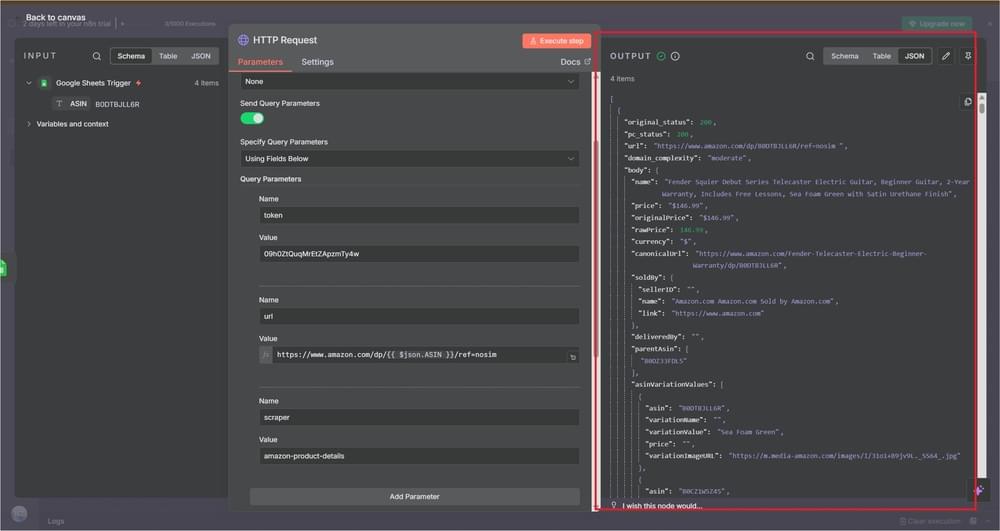

Once everything is filled out, click Execute Node on the top right.

You’ll see a JSON response in the Output panel on the right if it’s working properly. It’ll include fields like title, price, rating, reviews_count, and more.
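To make the response easier to picture, the sketch below turns a response like the one described above into a flat row. The field names `title`, `price`, `rating`, and `reviews_count` are the ones mentioned in this step. Where they sit in the JSON is an assumption: the code checks for a nested `body` object first and falls back to the top level. The sample payload is made up, so compare it against your own Output panel.

```python
import json


def extract_product_row(asin, response_text):
    """Pick the fields named in this step out of a JSON response string."""
    payload = json.loads(response_text)
    # Assumption: scraped fields may be nested under "body" or sit at the top level
    body = payload.get("body")
    data = body if isinstance(body, dict) else payload
    return {
        "ASIN": asin,
        "Title": data.get("title"),
        "Price": data.get("price"),
        "Rating": data.get("rating"),
        "Reviews": data.get("reviews_count"),
    }


if __name__ == "__main__":
    # Made-up sample response, not real Crawlbase output
    sample = json.dumps({
        "body": {
            "title": "Example product",
            "price": "$19.99",
            "rating": 4.5,
            "reviews_count": 1200,
        }
    })
    print(extract_product_row("B08N5WRWNW", sample))
```

Missing fields come back as `None` instead of raising an error, which matches how an empty cell would appear in your sheet.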

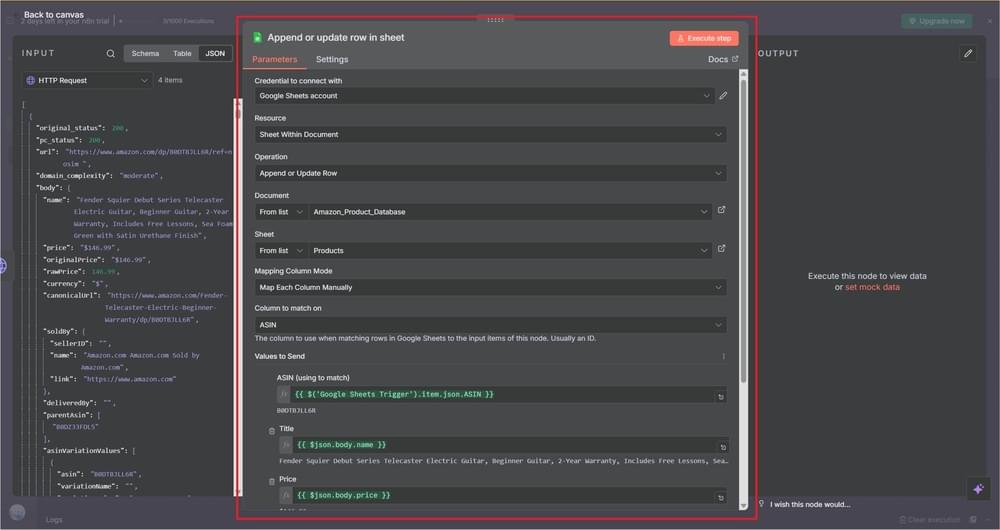

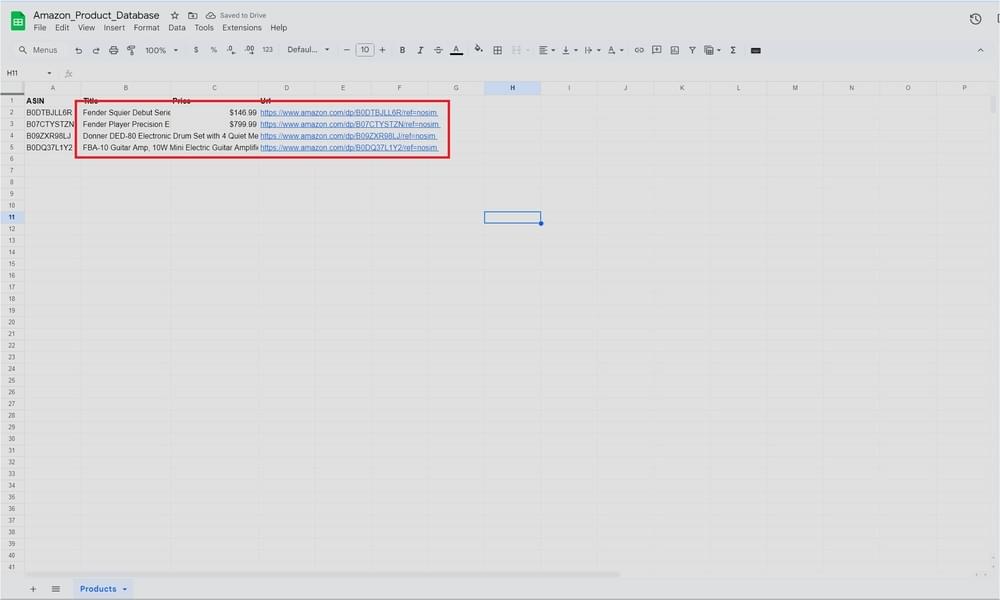

Step 4: Store Results in Google Sheets

Now that Crawlbase is returning clean product data, the last step is to store it in a Google Sheet.

Open your Google Sheet or create a new one, then add a few columns to store the product information you want to capture (e.g., ASIN, Title, Price, URL).

You can always add more fields later, depending on the data you’re collecting from Crawlbase.

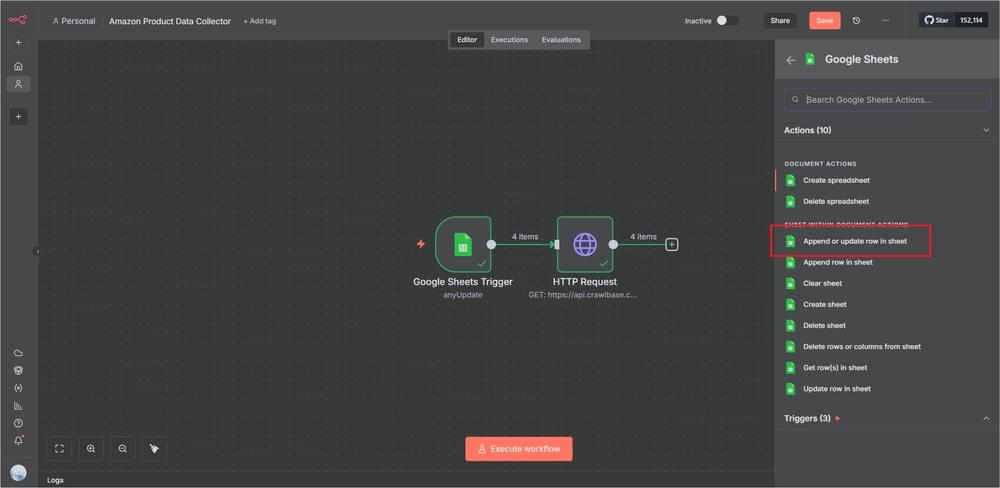

Once that’s done, go back to your n8n workflow and add another step right after the HTTP Request node.

Search for Google Sheets in the node panel, then choose Append or update row in sheet under Actions.

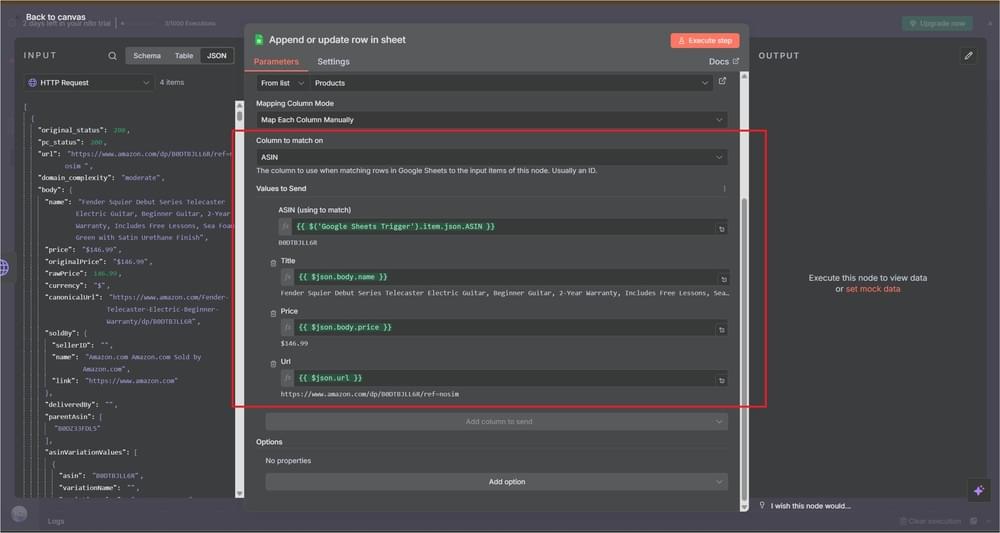

Then, set up your parameters just like the screenshot below:

Make sure the Column to match on section is set to ASIN to avoid errors, and populate the fields with data from the Crawlbase API response, exactly like this:

ASIN (using to match)

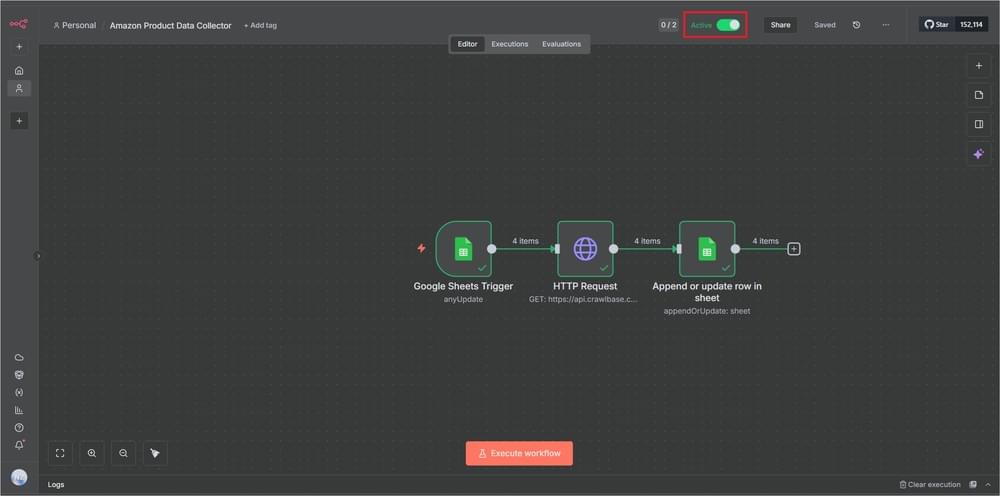
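Matching on ASIN is what keeps the sheet from filling up with duplicates. Conceptually, Append or update row behaves like the upsert below: if a row with the same ASIN exists, it is updated, otherwise a new row is added. This is a simplified sketch of the idea, not n8n’s actual implementation, and the `upsert_row` helper and sample rows are invented for illustration.

```python
def upsert_row(rows, new_row, key="ASIN"):
    """Update the row whose key matches new_row, or append new_row if none does."""
    for index, row in enumerate(rows):
        if row.get(key) == new_row.get(key):
            # Keep existing columns and overwrite only the ones present in new_row
            rows[index] = {**row, **new_row}
            return rows
    # No match found: append a copy so later changes to new_row do not leak in
    rows.append(dict(new_row))
    return rows


if __name__ == "__main__":
    # Made-up sheet contents
    sheet = [{"ASIN": "B08N5WRWNW", "Title": "", "Price": ""}]
    upsert_row(sheet, {"ASIN": "B08N5WRWNW", "Title": "Example product", "Price": "$19.99"})
    upsert_row(sheet, {"ASIN": "B07XJ8C8F5", "Title": "Another product", "Price": "$5.00"})
    for row in sheet:
        print(row)
```

If the match column were left unset, every run would behave like the append branch and the same product could appear many times.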

Step 5: Activating Your Workflow

In the top-right corner of your n8n workspace, switch Active to ON. This enables your workflow so it can start grabbing data automatically.

Go ahead and test it by running the workflow once. Within a few seconds, you should see your Google Sheet start filling in with the product details pulled from Amazon, such as titles, prices, and links, all neatly organized.

That’s it. The workflow will automatically start collecting fresh data whenever you add new ASINs and keep your sheet up to date, significantly reducing your manual work.

Improve and Scale Your eCommerce Research Automation

Once your workflow is running, you already have the basics in place. From here, it’s easy to build on what you started and make it more useful. You don’t have to rebuild anything. Just add a few small upgrades over time.

Schedule automatic runs

Instead of running it by hand, add a Schedule Trigger node in n8n. You can set it to run once a day or every few hours, whatever fits your routine. Your Google Sheet will stay updated with fresh Amazon data while you focus on other work.

Capture more details

Right now, you might only be collecting titles and prices. That’s fine for testing, but Crawlbase can do more. You can include ratings, review counts, or seller names. The extra information gives better insight into how products perform and how they change over time.

Save your crawled data

If you want a backup of everything you collect, use Crawlbase Cloud Storage. Add store=true to your API request, and Crawlbase will automatically save each crawl in your account. It’s useful if you ever need to go back, review old pages, or compare changes without scraping again.
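In n8n, this means adding one more row under Query Parameters with the name store and the value true. The sketch below shows the same idea as a query string, assuming the parameter sits alongside token, url, and scraper from Step 3. The `build_query` helper and the sample values are placeholders.

```python
from urllib.parse import parse_qs, urlencode


def build_query(token, product_url, scraper, store=False):
    """Build the query string, optionally adding store=true for Cloud Storage."""
    params = {"token": token, "url": product_url, "scraper": scraper}
    if store:
        # Same flag described above: store=true
        params["store"] = "true"
    return urlencode(params)


if __name__ == "__main__":
    # Placeholder token and ASIN, for illustration only
    query = build_query(
        "YOUR_TOKEN",
        "https://www.amazon.com/dp/B08N5WRWNW/ref=nosim",
        "amazon-product-details",
        store=True,
    )
    print(query)
    # Decode again to confirm the extra parameter is present
    print(parse_qs(query))
```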

Explore other Crawlbase scrapers

Crawlbase offers scrapers for many other sites, and you don’t need to change your whole approach. Just swap out the scraper value in your request when you want different information.

That simple setup you’ve got now can do so much more with a few tweaks. Before you know it, you’ll have a solid research tool that stays fresh with new data, keeps track of everything you’ve gathered, and helps you monitor products with spot-on information every time you use it.

Final Thoughts

Congratulations! You’ve just built a complete, automated system for gathering Amazon product data without writing a single line of code.

You now have a setup that saves hours of manual work and keeps your research consistently up to date, thanks to n8n handling the workflow and Crawlbase doing the scraping.

If you haven’t already, sign up for a Crawlbase account and start experimenting with the Crawling API.

You can use the same approach for other eCommerce platforms, or even expand it to build full-scale data dashboards.

Set it up once and let your data take care of itself.