Crawlbase’s Crawler is a versatile web crawler that lets you extract online data at scale. The Crawler simplifies the crawling process, allowing you to collect large volumes of data conveniently and reliably. It’s what you need to take control of web scraping and get the data you need for your business requirements.

The Crawler effortlessly takes care of JavaScript browsers, queues, proxies, data pipelines, and other web scraping difficulties. This enables you to make the most of data extraction and retrieve online information without any hassles.

By the end of this tutorial, you’ll have learned how to use the Crawler to retrieve data from online sources.

What we’ll cover

- What is the Crawler?

- How the Crawler works

- How to create a local webhook

- How to expose the local server

- How to create a crawler

- How to send a Crawler request

What you’ll need

- Node.js development environment

- Crawlbase’s Crawler

- Express

- Ngrok

Ready?

Let’s get going…

What is the Crawler?

Built on top of the Crawling API, the Crawler is a push system that lets you scrape data seamlessly and fast. It works asynchronously: you submit the URLs you want crawled, and the scraped data is pushed to your server when it is ready.

The Crawler is suitable for large-scale projects that require scraping enormous amounts of data. Once you set up a webhook endpoint on your server, the harvested data will be delivered there, allowing you to edit and customize it to meet your specific needs.

The Crawler provides the following benefits:

- Easily add scraped data to your applications without the complexities of managing queues, blocks, proxies, captchas, retries, and other web crawling bottlenecks.

- Get crawled data delivered to your desired webhook endpoint, allowing you to conveniently integrate the data into your specific use case.

- Perform advanced web scraping on any type of website, including complex ones that rely on JavaScript rendering.

- Anonymously scrape data without worrying about disclosing your identity.

- Scrape online data using a simple pricing model that does not require long-term contracts. You only pay for successful requests.

How the Crawler works

As mentioned earlier, the Crawler works on top of the Crawling API. This allows you to augment the capabilities of the Crawling API and extract data easily and smoothly.

This is how to make a request with the Crawling API:

https://api.crawlbase.com/?token=add_token&url=add_url

As you can see above, apart from the base part of the URL, the Crawling API requires the following two mandatory query string parameters:

- A unique authorization token that Crawlbase provides to authorize you to use the API. You can use either the normal token for making generic web requests or the JavaScript token for making advanced, real browser requests.

- URL of the website that you want to scrape. It should begin with http:// or https://. It should also be URL-encoded so that it is transmitted correctly over the Internet.
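As a minimal sketch of how such a request URL could be assembled in Node.js, the helper below checks the scheme and encodes both parameters. The name buildCrawlingApiUrl and the sample target URL are illustrative assumptions, not part of Crawlbase’s API; only the built-in encodeURIComponent function is used.

```javascript
// Hypothetical helper: builds a Crawling API request URL from the two
// mandatory query string parameters described above.
function buildCrawlingApiUrl(token, targetUrl) {
  // The target must be an absolute http:// or https:// URL.
  if (!/^https?:\/\//i.test(targetUrl)) {
    throw new Error('Target URL must begin with http:// or https://');
  }
  // encodeURIComponent escapes characters such as ":", "/", "?" and "&",
  // so the target's own query string is not mistaken for API parameters.
  return 'https://api.crawlbase.com/?token=' + encodeURIComponent(token) +
    '&url=' + encodeURIComponent(targetUrl);
}

// Sample values only; replace add_token with your own token.
console.log(buildCrawlingApiUrl('add_token', 'https://example.com/search?q=shoes&page=2'));
```

Without the encoding step, the &page=2 part of the sample target would be read as a separate parameter of the API request rather than as part of the page address.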

When working with the Crawler, apart from the above two query string parameters, you need to add the following two mandatory parameters:

- Callback parameter: &callback=true

- Name of your crawler (we’ll see how to create a crawler later): &crawler=add_crawler_name

So, your Crawler request will look like this:

https://api.crawlbase.com/?token=add_token&callback=true&crawler=add_crawler_name&url=add_url
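One way to put the four parameters together in Node.js is with the built-in URL class, which percent-encodes each value automatically. This is only a sketch: buildCrawlerRequestUrl is a hypothetical helper, and the token, crawler name, and target URL are placeholders.

```javascript
// Hypothetical helper: builds a Crawler request URL with the four
// query string parameters listed above.
function buildCrawlerRequestUrl(token, crawlerName, targetUrl) {
  const requestUrl = new URL('https://api.crawlbase.com/');
  requestUrl.searchParams.set('token', token);
  requestUrl.searchParams.set('callback', 'true');
  requestUrl.searchParams.set('crawler', crawlerName);
  // searchParams percent-encodes the value, so the target URL can be passed as-is.
  requestUrl.searchParams.set('url', targetUrl);
  return requestUrl.toString();
}

// Placeholder values only.
const crawlerUrl = buildCrawlerRequestUrl('add_token', 'add_crawler_name', 'https://example.com/');
console.log(crawlerUrl);
```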

Since the Crawler works with callbacks, you need to create a webhook endpoint on your server to receive the scraped data.

The webhook should fulfill the following conditions:

- It should be publicly accessible by Crawlbase servers.

- It should be configured to receive HTTP POST requests and respond within 200 milliseconds with a status code of 200, 201, or 204.

After you make a request, the crawler engine will harvest the specified data and send it to your callback endpoint using the HTTP POST method with GZIP compression.

By default, the data is structured using the HTML format; that is, as &format=html. You can also specify to receive the data in JSON format using the &format=json query string parameter.
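To make the delivery format concrete, the sketch below simulates a GZIP-compressed callback body and decodes it with Node’s built-in zlib module. It assumes the compression is signalled through the Content-Encoding header; the helper name decodeCallbackBody and both sample payloads are invented for illustration and do not reflect the actual fields Crawlbase sends.

```javascript
const zlib = require('zlib');

// Hypothetical helper: turns a raw callback body into usable data.
// contentEncoding is the value of the request's Content-Encoding header;
// format is 'html' (the default) or 'json'.
function decodeCallbackBody(rawBody, contentEncoding, format = 'html') {
  // Decompress only when the sender says the body is gzipped.
  const bytes = contentEncoding === 'gzip' ? zlib.gunzipSync(rawBody) : rawBody;
  const text = bytes.toString('utf8');
  // HTML stays a string; JSON is parsed into an object.
  return format === 'json' ? JSON.parse(text) : text;
}

// Simulated deliveries with invented sample payloads.
const htmlDelivery = zlib.gzipSync(Buffer.from('<html><body>Hello</body></html>'));
const jsonDelivery = zlib.gzipSync(Buffer.from(JSON.stringify({ sample: 'payload' })));

console.log(decodeCallbackBody(htmlDelivery, 'gzip'));
console.log(decodeCallbackBody(jsonDelivery, 'gzip', 'json'));
```

A web framework’s body parser can normally handle the decompression step for you, so a helper like this is mainly useful for understanding what arrives on the wire.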

How to create a local webhook

Let’s demonstrate how you can create a webhook endpoint on your server to receive the scraped data.

For this tutorial, we’ll use the Express Node.js web application framework to create a local webhook server on our machine. Then, we’ll use ngrok to make our local server securely accessible over the Internet. This will ensure our webhook is publicly accessible by Crawlbase servers.

To get started, create a new directory in your development environment, navigate to it in a terminal window, and run the following command to initialize a new Node.js project:

npm init

Next, install the Express framework:

npm install express

Next, create a file called server.js and add the following code:

// import express

The code above may look familiar if you’ve worked with Express before. We started by initializing Express and defining a port.

Next, we used the built-in express.text() middleware function to parse the incoming raw body data and return it as a plain string. We used it because Crawlbase uses the content-type of text/plain in the API headers.

We passed the limit option to the function to cap the size of the body we accept and avoid overwhelming our server. The middleware function also automatically decompresses crawled data encoded with GZIP.

We also used the built-in express.urlencoded() middleware function to parse incoming requests with URL-encoded payloads and convert them into a format we can use. We passed the extended option to specify that parsing should use the qs query string library.

Notice that we told Express to use the middleware before defining the webhook’s route. Middleware registered this way runs before the route handler, ensuring the route can access the parsed data in the HTTP POST body.

Next, we set up a simple webhook route for handling the incoming call. We created it at http://localhost:3000/ to handle the request.

We used the body property of the req object to log the crawled data on the console. We also accessed all the API request header information using the headers property.

It’s also vital to respond fast with an HTTP 2xx status code. The Crawlbase Crawler requires this response within 200 milliseconds.
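Putting the pieces described above together, a server.js along these lines would match the walkthrough. Treat it as a sketch rather than the exact listing: the 5mb limit, the function names, and the choice to reply before logging are assumptions, and Express must already be installed in the project.

```javascript
// server.js (sketch). The 5mb limit and the function names are assumptions.
const PORT = 3000;

// Webhook route handler: acknowledge first, then log what was delivered.
function handleCrawlerCallback(req, res) {
  // Reply immediately so the 2xx response is sent well within 200 milliseconds.
  res.sendStatus(200);
  console.log('Headers:', req.headers);
  console.log('Body:', req.body);
}

// Wires up the middleware and the route, then starts listening.
function startWebhookServer(port) {
  // Express is loaded here so the handler above can be tried without it.
  const express = require('express');
  const app = express();

  // Parse text/plain bodies into a string; GZIP bodies are inflated automatically.
  app.use(express.text({ limit: '5mb' }));
  // Parse URL-encoded bodies using the qs library.
  app.use(express.urlencoded({ extended: true }));

  // The middleware is registered above, so req.body is already parsed here.
  app.post('/', handleCrawlerCallback);

  return app.listen(port, () => {
    console.log(`Webhook listening at http://localhost:${port}/`);
  });
}

// To start the server, add this call as the last line: startWebhookServer(PORT);
```

The call to startWebhookServer is left as a comment so the handler can be exercised in isolation; add it as the last line before launching the file. Sending the status code before logging keeps the acknowledgement fast even when the delivered page is large.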

Next, to launch the simple Express webhook application, run the following command:

node server.js

Remember to keep the local server running; don’t close it.

How to expose the local server

The next task is to use ngrok to expose the locally running server to the world. There are several ways of setting up ngrok. For this tutorial, we’ll download ngrok, unzip the ngrok.exe file, and store it in a folder on our local machine.

To fire up ngrok, launch another terminal, navigate to the directory where the executable file is stored, and run the following command:

ngrok http 3000

Notice that we exposed the server on port 3000, which is the same one the local webhook application is listening on.

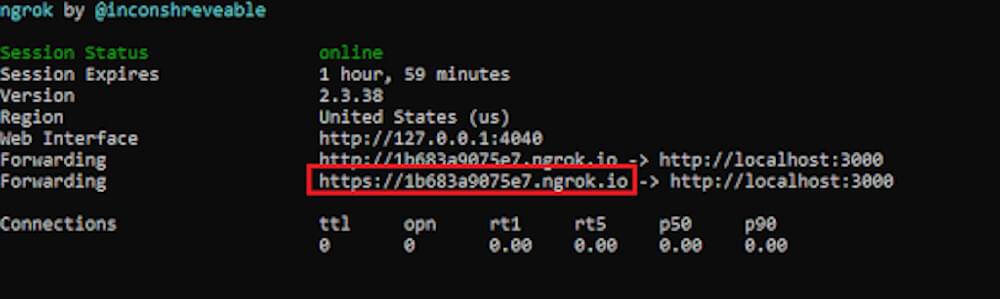

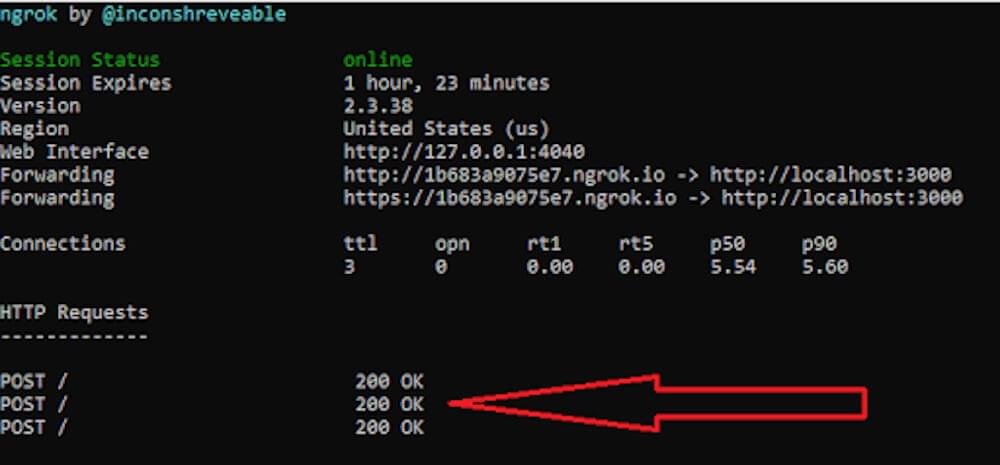

After launching, ngrok displays a UI with a public URL that forwards to the local server. This is the URL we’ll use for creating a crawler on Crawlbase’s dashboard. The UI also displays other status and metrics information.

How to create a crawler

As mentioned previously, the Crawler requires you to append the name of a crawler as one of its query string parameters.

To do that, navigate to Crawlbase’s dashboard and create a crawler there.

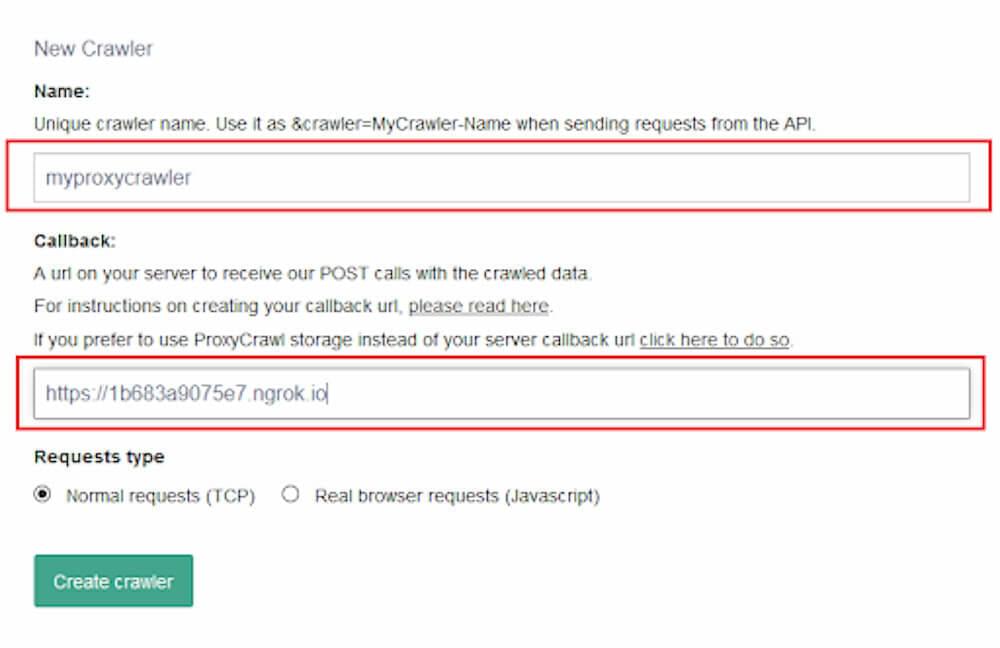

You’ll need to provide a unique name that identifies your crawler. In the callback URL field, enter the public ngrok URL we created previously. This is where the crawled data will be delivered.

You’ll also specify whether you need to make normal requests or real browser requests.

When done, click the Create crawler button.

You’ll then see your crawler on the Crawlers page.

How to send a Crawler request

With everything set up, it’s now time to send the Crawler request and retrieve some online data. This is where the real action begins. For this tutorial, we’ll retrieve the contents of this page.

There are many ways of making HTTP requests in Node.js. For this tutorial, we’ll use the lightweight Crawlbase Node.js library.

Open another terminal window and install it:

npm install crawlbase

Next, create a file called request.js and add the following code:

const { CrawlingAPI } = require('crawlbase');
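A fuller request.js might look like the sketch below. It assumes the crawlbase package exposes a CrawlingAPI client whose get(url, options) method returns a promise, and that the callback and crawler options map to the query string parameters shown earlier; verify both against the library’s README. The CRAWLBASE_TOKEN environment variable, the target URL, and the crawler name are placeholders chosen for this example.

```javascript
// request.js (sketch). Token, crawler name, and target URL are placeholders.

// Pushes one URL to the Crawler through a Crawlbase API client.
async function pushToCrawler(api, targetUrl, crawlerName) {
  // These options correspond to &callback=true&crawler=add_crawler_name.
  const response = await api.get(targetUrl, { callback: true, crawler: crawlerName });
  return response.body;
}

async function main() {
  // Assumed usage of the crawlbase package; check its README for the exact API.
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });
  const pushResponse = await pushToCrawler(api, 'https://example.com/', 'add_crawler_name');
  console.log(pushResponse);
}

// Only send a request when a token is available in the environment.
if (process.env.CRAWLBASE_TOKEN) {
  main().catch((error) => console.error('Request failed:', error));
} else {
  console.log('Set the CRAWLBASE_TOKEN environment variable, then run: node request.js');
}
```

Reading the token from the environment keeps it out of source control; hardcoding it in the file works too if you prefer to follow the tutorial’s command exactly.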

To make the request, run the following command:

node request.js

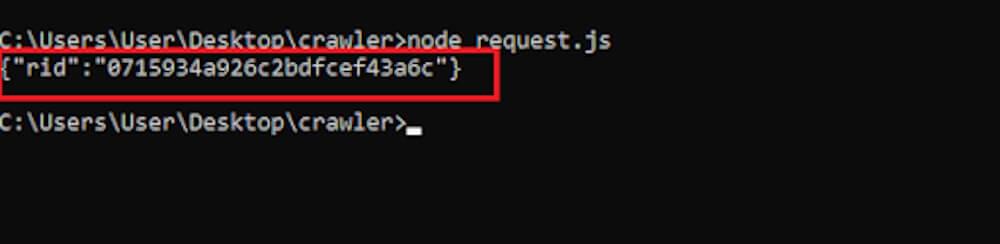

If the request is successful, the Crawler sends back a JSON representation with a unique identifier RID. You can use the RID to identify the request in the future.

This is the push response we got:

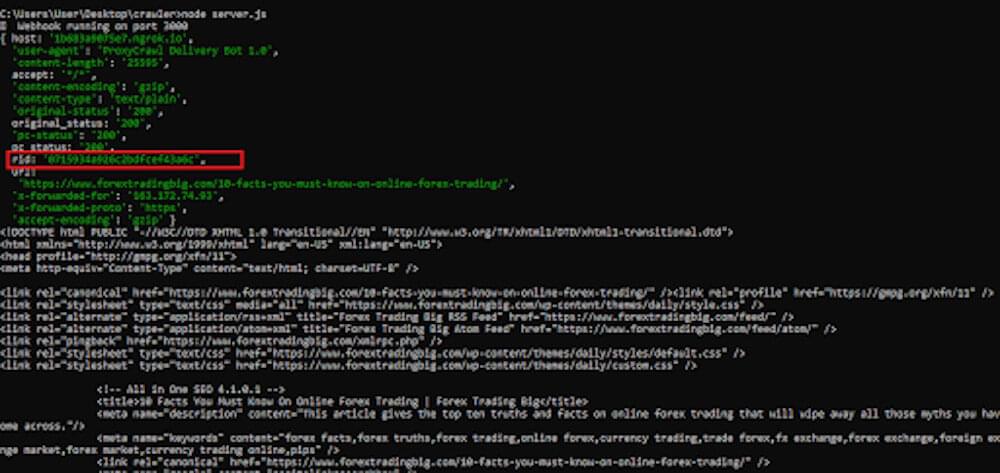

If we check our webhook server, we can see that the target page’s HTML content was crawled successfully.

Notice that the same RID was received on the webhook server, just like in the push response.
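Because the RID appears in both places, it can be used to tie each delivery back to the request that triggered it. The sketch below shows one possible bookkeeping approach with an in-memory Map. The rid field name in the push response, the rid header name on the webhook request, and the helper names are assumptions made for illustration; check the values your own webhook logs.

```javascript
// Hypothetical bookkeeping: remember each pushed URL by RID, then match deliveries.
const pendingRequests = new Map();

// Call with the parsed push response, e.g. { rid: '...' } (field name assumed).
function recordPush(pushResponse, targetUrl) {
  pendingRequests.set(pushResponse.rid, targetUrl);
}

// Call from the webhook route with req.headers (header name "rid" assumed).
function matchDelivery(headers) {
  const rid = headers.rid;
  if (!pendingRequests.has(rid)) {
    return null; // unknown RID, or one that was already handled
  }
  const targetUrl = pendingRequests.get(rid);
  pendingRequests.delete(rid); // each delivery is matched once
  return { rid, targetUrl };
}

// Sample values only.
recordPush({ rid: 'sample-rid-123' }, 'https://example.com/');
console.log(matchDelivery({ rid: 'sample-rid-123' }));
console.log(matchDelivery({ rid: 'sample-rid-123' }));
```

An in-memory Map is lost when the process restarts, so a larger project would likely keep this mapping in a database instead.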

The ngrok UI also shows that the POST request was successful.

That’s it!

Conclusion

In this tutorial, we have demonstrated how you can use Crawlbase’s versatile Crawler to scrape data from online sources with an asynchronous callback.

With the Crawler, you can make online data extraction fast and smooth. You won’t have to worry about revealing your identity or being blocked when retrieving online information. It’s what you need to take your web crawling efforts to the next level.

If you want to learn more about how to use the API, check its documentation.

Create a free Crawlbase account to start using the Crawler.