When scaling your web scraping processes, you need to consider using a reliable, efficient, and manageable solution, regardless of the volume of data you are scraping.

Many developers struggle with large-scale scraping because of the sheer volume of data involved. Code that worked perfectly for small projects suddenly starts breaking, getting blocked, or becoming impossible to maintain.

That’s where Crawlbase comes in. Our solution is designed to make the move from small-scale to large-scale scraping smooth. You won’t need to rewrite everything from scratch or completely change your workflow. How? We’ll show you in detail.


Why Scaling Matters in Web Scraping

On a small scale, the web scraping process is simple. You write the script, send requests to a handful of web pages, and your scraper processes them in sequence. However, once you try to scale your project to thousands or even millions of pages, things start to fall apart.

Scaling isn’t just about doing more of the same. It’s about doing it smartly. You have to keep in mind common scaling problems like:

- Rate limiting

- Concurrency issues

- Retry challenges

- Code efficiency problems

- Storage limitations

If you ignore these issues, your scraper might work today, but it’ll crash tomorrow when your data needs grow or when the target site changes.

One of the first decisions you’ll need to make is whether to use synchronous or asynchronous scraping. This choice alone can address most of the scaling problems above and largely determines how fast and efficiently you can scale.

How to Choose between Sync and Async Web Scraping

When building a scalable scraper, how you send and handle requests matters. In small projects, scraping synchronously might be fine. However, as you scale, choosing the right approach can be the difference between a fast and efficient scraper and one that gets bogged down or blocked.

Synchronous Web Scraping

Synchronous scraping is a very straightforward process. You send a request, wait for the response, process the data, and then move on to the next one. Everything happens one step at a time, like standing in line and waiting your turn.

This approach is easy to implement and great for small-scale jobs or testing because:

- The code is simple to read and debug

- It’s easier to manage errors since requests happen in order

- You don’t have to worry much about concurrency or task coordination

Crawlbase’s Crawling API is a strong example of a synchronous crawler that gets the job done. But as you scale up, synchronous crawling can become a bottleneck. Your scraper spends a lot of time just waiting for servers to respond, waiting for timeouts, waiting for retries. And all that waiting adds up.
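To see why all that waiting adds up, here is a minimal sketch in Python. It uses a hypothetical fake_fetch function that sleeps for LATENCY seconds instead of making a real HTTP request, so the only cost being measured is idle waiting. With ten pages at 0.05 seconds each, the sequential loop takes roughly half a second.

```python
import time

# Hypothetical stand-in for network latency, in seconds.
LATENCY = 0.05


def fake_fetch(url: str) -> str:
    """Simulate an HTTP request: the scraper sits idle while it 'waits'."""
    time.sleep(LATENCY)
    return f"<html>{url}</html>"


def scrape_sync(urls: list[str]) -> list[str]:
    """Fetch URLs one at a time; total time grows linearly with len(urls)."""
    pages = []
    for url in urls:
        # Nothing else happens until this request returns.
        pages.append(fake_fetch(url))
    return pages


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    start = time.perf_counter()
    scrape_sync(urls)
    print(f"sync: {time.perf_counter() - start:.2f}s for {len(urls)} pages")
```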

Asynchronous Web Scraping

Asynchronous scraping means sending multiple requests without waiting for each one to finish before sending the next, so many requests are in flight at the same time. This approach is essential for scaling because it removes the idle time spent waiting on network responses.

In practical terms, this means higher throughput, better resource utilization, and the ability to scrape large volumes of data faster.
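For comparison, here is the same simulated workload written with Python’s asyncio. Again, fake_fetch and LATENCY are hypothetical stand-ins for real network calls. The semaphore caps how many requests are in flight at once, which mirrors the concurrency limits a real scraper needs; with ten requests and a cap of ten, the whole run takes about as long as a single request.

```python
import asyncio
import time

# Hypothetical stand-in for network latency, in seconds.
LATENCY = 0.05


async def fake_fetch(url: str) -> str:
    """Simulate an HTTP request; awaiting the sleep lets other requests run."""
    await asyncio.sleep(LATENCY)
    return f"<html>{url}</html>"


async def scrape_async(urls: list[str], max_concurrency: int = 10) -> list[str]:
    """Fetch URLs concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> str:
        async with semaphore:  # cap in-flight requests
            return await fake_fetch(url)

    # gather() runs all tasks concurrently and returns results in input order.
    return list(await asyncio.gather(*(bounded_fetch(u) for u in urls)))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    start = time.perf_counter()
    asyncio.run(scrape_async(urls))
    print(f"async: {time.perf_counter() - start:.2f}s for {len(urls)} pages")
```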

Crawlbase provides a purpose-built, asynchronous crawling and scraping system known simply as The Crawler. It’s designed to handle high-volume scraping by letting you push multiple URLs to be crawled concurrently without needing to manage complex infrastructure.

Here’s how it works:

- The Crawler is a push system based on callbacks.

- You push URLs to be scraped to the Crawler using the Crawling API.

- Each request is assigned a RID (Request ID) to help you track it throughout the process.

- Any failed requests will be automatically retried until a valid response is received.

- The Crawler will POST the results back to a webhook URL on your server.
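As a rough sketch of the push step, the helper below builds a Crawling API URL that hands a target URL to a named Crawler, then reads the RID from the response. It assumes the callback=true and crawler=<name> parameters and a JSON response of the form {"rid": "..."}, based on our reading of the Crawling API docs; the token and crawler name are placeholders, so double-check the parameters against the current documentation.

```python
import json
from urllib.parse import urlencode

# Crawling API endpoint; verify against the current Crawlbase docs.
API_BASE = "https://api.crawlbase.com/"


def build_push_url(token: str, target_url: str, crawler_name: str) -> str:
    """Build a Crawling API URL that queues target_url on an async Crawler.

    Assumption: callback=true plus crawler=<name> routes the job to the named
    Crawler, which later POSTs the result to its configured webhook.
    """
    params = {
        "token": token,
        "url": target_url,  # urlencode percent-encodes the target URL
        "callback": "true",
        "crawler": crawler_name,
    }
    return API_BASE + "?" + urlencode(params)


def extract_rid(response_body: str) -> str:
    """Read the request ID, assuming a JSON body like {"rid": "..."}."""
    return json.loads(response_body)["rid"]


if __name__ == "__main__":
    print(build_push_url("YOUR_TOKEN", "https://example.com/a?b=1", "my-crawler"))
    print(extract_rid('{"rid": "abc123"}'))
```

In practice you would send the URL with something like requests.get(build_push_url(TOKEN, url, "my-crawler"), timeout=30) and pass response.text to extract_rid.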

The Crawler gives you a powerful way to implement asynchronous scraping: it processes multiple pages in parallel and retries failing requests automatically. This means it addresses rate-limiting, concurrency, and retry issues at the same time.

What Should You Do to Scale Web Scraping?

Crawlbase’s Crawling API is a great tool that handles millions of requests reliably. It’s designed for large-scale scraping and works well if you need a direct, immediate response for each request. It’s simple to implement and ideal for small to medium-sized jobs, quick scripts, and integrations where real-time results are crucial.

However, when you’re dealing with enterprise-level scraping, millions of URLs, tight concurrency demands, and the need for robust queuing, using the Crawler makes much more sense.

The Crawler is designed for high-volume data scraping. It’s scalable by design, supports concurrent processing and automatic retries, and can grow with your needs.

So, here’s how you should scale:

Build a scalable scraper using the Crawling API when you:

- Need real-time results

- Are running smaller jobs

- Prefer a simpler request-response model

Then, transition to the Crawler when you:

- Need to process thousands or millions of URLs

- Want to handle multiple requests simultaneously

- Want to offload the retry process from your end

- Are building a scalable, production-grade data pipeline

In short, if your goal is true scalability, moving from synchronous to asynchronous scraping with the Crawler is the best choice.

How to Set Up Your Scalable Crawler

In this section, we’ll provide a step-by-step guide, including best practices, on how to build a scalable web scraper. We chose to demonstrate this using Python with Flask and Waitress, as this combination is lightweight and easy to implement.

Let’s begin.

Python Requirements

- Set up a basic Python environment. Install Python 3 on your system.

- Install the required dependencies. You can download this file and execute the command below in your terminal:

```shell
python -m pip install requests flask waitress
```

- For our Webhook, install and configure ngrok. This is required to make the webhook publicly accessible to Crawlbase.

Webhook Integration

Step 1: Create a file and name it webhook_http_server.py, then copy and paste the code below:

```python
from flask import Flask
```

Looking at the code above, here are some good practices we’re following for a webhook:

- We only accept HTTP POST requests, which is the standard for webhooks.

- We check for important headers like rid, Original-Status, and PC-Status from the Crawlbase response to make sure the request has the right info.

- We ignore dummy requests from Crawlbase. These are just “heartbeat” messages sent to check whether your webhook is up and running.

- We also look for a custom header My-Id with a value of the constant REQUEST_SECURITY_ID. This value is just a string; you can make up anything you want for extra security. Using this header is a best practice for protecting your webhook, because it verifies that incoming responses are genuine and intended for you.

- Lastly, actual jobs are handled in a separate thread, allowing us to reply quickly, within 200ms. This setup should be able to handle approximately 200 requests per second without issue.
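The checks above don’t depend on Flask, so they can be sketched in plain Python. In this sketch, classify_request and on_webhook are hypothetical names, and treating rid == "dummy" as the heartbeat marker is an assumption; confirm what your heartbeat requests actually look like before relying on it. Valid jobs go onto a queue.Queue and are processed by a background thread, so the handler can return a status code immediately.

```python
import queue
import threading

# Hypothetical shared secret; it must match the My-Id value sent with each push.
REQUEST_SECURITY_ID = "change-me"
REQUIRED_HEADERS = ("rid", "original-status", "pc-status")

jobs: queue.Queue = queue.Queue()
processed: list[str] = []


def normalize_headers(headers: dict[str, str]) -> dict[str, str]:
    # HTTP header names are case-insensitive, so compare them in lower case.
    return {name.lower(): value for name, value in headers.items()}


def classify_request(headers: dict[str, str]) -> str:
    """Return 'heartbeat', 'reject', or 'accept' for a POSTed webhook call."""
    # Assumption: heartbeat (dummy) requests carry rid == "dummy".
    if headers.get("rid") == "dummy":
        return "heartbeat"
    if any(name not in headers for name in REQUIRED_HEADERS):
        return "reject"
    if headers.get("my-id") != REQUEST_SECURITY_ID:
        return "reject"
    return "accept"


def worker() -> None:
    """Process accepted jobs off the request path."""
    while True:
        headers, body = jobs.get()
        try:
            processed.append(headers["rid"])  # stand-in for the real, slow work
        finally:
            jobs.task_done()


threading.Thread(target=worker, daemon=True).start()


def on_webhook(method: str, headers: dict[str, str], body: bytes) -> int:
    """Return the HTTP status to send back; heavy work happens on the worker."""
    if method != "POST":
        return 405  # webhooks are POST-only
    normalized = normalize_headers(headers)
    verdict = classify_request(normalized)
    if verdict == "reject":
        return 403
    if verdict == "accept":
        jobs.put((normalized, body))  # hand off and reply immediately
    return 200  # heartbeats are simply acknowledged
```

In a Flask route you could call on_webhook(request.method, dict(request.headers), request.get_data()) and return an empty body with the resulting status code.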

Step 2: Add the rest of the code below. This is where the actual data from Crawlbase is processed and saved. For simplicity, we use the filesystem to track crawled requests. Alternatively, you can use a database or Redis.

```python
def handle_webhook_request(request_content):
```

This part of the code neatly unpacks a completed crawl job from Crawlbase, organizes the files in their own folder, saves both the notes and the actual website data, and notifies you if anything goes wrong.
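Here is a minimal sketch of that saving step, following the data/<rid>/<rid> and <rid>.meta.json layout described later in this guide. The function name save_crawl_result and the metadata fields are our own choices, and decompressing the body when a Content-Encoding: gzip header is present is an assumption about the payload; adjust it to what your webhook actually receives.

```python
import gzip
import json
import logging
import tempfile
from pathlib import Path

log = logging.getLogger("webhook")


def save_crawl_result(data_dir: Path, headers: dict[str, str], body: bytes) -> Path:
    """Save body to data_dir/<rid>/<rid> and metadata to <rid>.meta.json."""
    h = {name.lower(): value for name, value in headers.items()}
    rid = h["rid"]
    # Refuse anything that is not a plain token, so a header can't escape data_dir.
    if not rid.isalnum():
        raise ValueError(f"unexpected rid: {rid!r}")
    try:
        # Assumption: the body may arrive gzip-compressed; only inflate if flagged.
        if h.get("content-encoding") == "gzip":
            body = gzip.decompress(body)
        job_dir = data_dir / rid
        job_dir.mkdir(parents=True, exist_ok=True)
        (job_dir / rid).write_bytes(body)
        meta = {
            "rid": rid,
            "original_status": h.get("original-status"),
            "pc_status": h.get("pc-status"),
            "headers": h,
        }
        (job_dir / f"{rid}.meta.json").write_text(json.dumps(meta, indent=2), encoding="utf-8")
    except (OSError, EOFError) as exc:
        # Report the failure, then let the caller decide what to do with it.
        log.error("failed to save result for rid %s: %s", rid, exc)
        raise
    return job_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        out = save_crawl_result(Path(tmp), {"rid": "abc123", "PC-Status": "200"}, b"<html></html>")
        print(sorted(p.name for p in out.iterdir()))
```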

Step 3: Complete the code by configuring the waitress package to run the server. Here, we use port 5768 to listen for incoming requests, but you can change this to any port you prefer.

```python
if __name__ == "__main__":
```

Here’s what the complete script for our webhook_http_server.py looks like:

```python
from flask import Flask
```

Step 4: Use the command below to run our temporary public server.

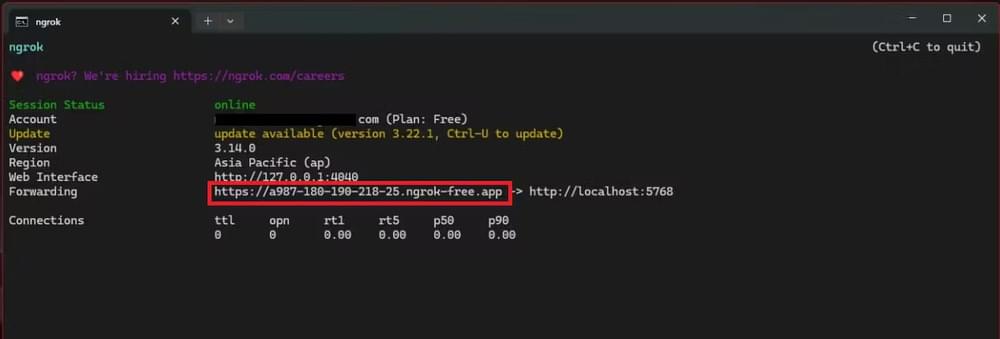

```shell
ngrok http 5768
```

ngrok will give you a link or a “forwarding URL” you can share with Crawlbase so it knows where to send the results.

Tip: When you want to use this in production (not just for testing), it’s better to run your webhook on a public server and use a tool like nginx for security and reliability.

Step 5: Run the Webhook HTTP server.

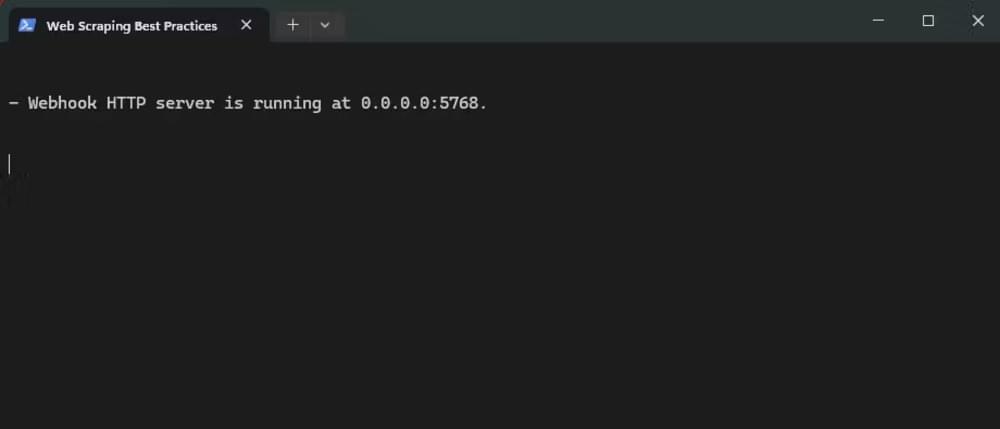

```shell
python webhook_http_server.py
```

This will now initiate our Webhook HTTP server, ready to receive data from Crawlbase.

Step 6: Configure Your Crawlbase Account.

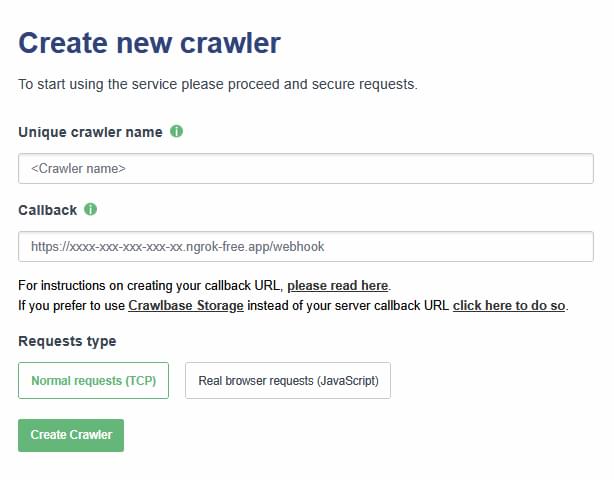

- Sign up for a Crawlbase account and add your billing details to activate the Crawler.

- Create a new Crawler here. Copy the forwarding URL provided by ngrok earlier in Step 4 and paste it into the Callback URL field.

- Select Normal requests (TCP) for this guide’s purpose.

How to Handle Large-Scale Data Processing

Now that our webhook is online, we are ready to scrape the web at scale. We’ll write a script to let you quickly send a list of websites to Crawlbase. It will also retry requests automatically if there’s a temporary issue.

Step 1: Basic Crawl Request

Create a new Python file and name it crawl.py.

Copy and paste this code:

```python
from pathlib import Path
```

What’s happening in this part of the script?

After each crawl request is sent to Crawlbase, the script creates a dedicated folder named after the returned rid. This approach lets you keep track of your crawl requests, making it easy to match the results with their original URLs later on.

Additionally, when submitting the request, we add a custom header called My-Id with a value of REQUEST_SECURITY_ID.
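The two ideas in this step can be sketched as follows. callback_headers_value formats custom headers such as My-Id for the Crawling API’s callback_headers parameter, which we assume takes NAME:VALUE pairs separated by |; record_pushed_request creates the per-RID folder and stores the original URL in a request.json file. Both function names and the request.json file are hypothetical, so check the Crawling API docs for the exact header format.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical secret; the webhook compares the incoming My-Id header against it.
REQUEST_SECURITY_ID = "change-me"


def callback_headers_value(headers: dict[str, str]) -> str:
    """Format headers as NAME:VALUE pairs joined by '|'.

    Assumption: this is the format the Crawling API's callback_headers
    parameter expects; urlencode() the final query string as usual.
    """
    return "|".join(f"{name}:{value}" for name, value in headers.items())


def record_pushed_request(data_dir: Path, rid: str, target_url: str) -> Path:
    """Create data_dir/<rid>/ and remember which URL this RID belongs to."""
    job_dir = data_dir / rid
    job_dir.mkdir(parents=True, exist_ok=True)
    # request.json is a hypothetical file name for the original request info.
    (job_dir / "request.json").write_text(
        json.dumps({"rid": rid, "url": target_url}), encoding="utf-8"
    )
    return job_dir


if __name__ == "__main__":
    print(callback_headers_value({"My-Id": REQUEST_SECURITY_ID}))
    with tempfile.TemporaryDirectory() as tmp:
        folder = record_pushed_request(Path(tmp), "abc123", "https://example.com")
        print((folder / "request.json").read_text(encoding="utf-8"))
```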

Step 2: Error Handling and Retry Logic

When writing a scalable scraper, always ensure it can handle errors and include some sort of logic to retry any failing requests. If you don’t handle these problems, your whole process could stop just because of one minor glitch.

Here’s an example:

```python
import time
```

Wrap your web request with the retry_operation function to ensure it automatically retries up to max_retries times in case of errors.

```python
def perform_request():
```
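The full retry_operation from this guide is not reproduced here, but a common way to write such a helper is retry with exponential backoff and jitter, sketched below. In this version, max_retries counts retries after the first attempt, and base_delay and retry_on are hypothetical parameters added for illustration.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_operation(
    operation: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (Exception,),
) -> T:
    """Call operation(), retrying up to max_retries times on the listed errors.

    The wait before retry n (0-based) is base_delay * 2**n plus random jitter,
    so many clients don't retry in lockstep. The last error is re-raised.
    """
    attempt = 0
    while True:
        try:
            return operation()
        except retry_on:
            if attempt >= max_retries:
                raise  # out of retries: surface the error to the caller
            time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
            attempt += 1
```

Because requests.get does not raise on HTTP error codes by default, call response.raise_for_status() inside the wrapped function if you also want 5xx responses to trigger a retry.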

Step 3: Batch Processing Technique

When sending thousands of URLs, it’s a good idea to split them into smaller batches and send only a set number of requests at once. We control this with the BATCH_SIZE value, the number of requests sent per second.

```python
def batch_crawl(urls):
```

In this section, multiple requests in a batch are processed simultaneously to expedite the process. Once a batch is finished, the script waits a short moment (DELAY_SECONDS) before starting with the next batch. This is an efficient method for handling web scraping at scale.
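A minimal batching sketch, assuming a push function that sends one URL and returns its RID. BATCH_SIZE and DELAY_SECONDS follow the names used in this guide, but the values and this implementation of batch_crawl are illustrative; keep the effective rate under the Crawler’s default push rate limit of 30 requests per second.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Illustrative values: keep BATCH_SIZE / DELAY_SECONDS under the push rate limit.
BATCH_SIZE = 20
DELAY_SECONDS = 1.0


def batch_crawl(
    urls: list[str],
    push: Callable[[str], str],
    batch_size: int = BATCH_SIZE,
    delay_seconds: float = DELAY_SECONDS,
) -> list[str]:
    """Push URLs in batches; each batch runs concurrently, then we pause."""
    rids: list[str] = []
    with ThreadPoolExecutor(max_workers=batch_size) as pool:
        for start in range(0, len(urls), batch_size):
            batch = urls[start:start + batch_size]
            # map() runs the batch concurrently; extend() waits for every result
            # and re-raises the first failing item's exception, in input order.
            rids.extend(pool.map(push, batch))
            if start + batch_size < len(urls):
                time.sleep(delay_seconds)  # throttle before the next batch
    return rids
```

Because each batch finishes before the pause starts, the effective rate is at most batch_size requests per (batch duration + DELAY_SECONDS), which keeps you safely below the limit even when the target responds quickly.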

Here is the complete code. Copy this and overwrite the code in your crawl.py file.

```python
from pathlib import Path
```

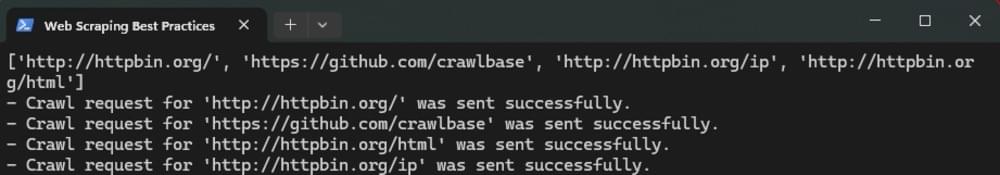

Step 4: Run your Web Crawler.

```shell
python crawl.py
```

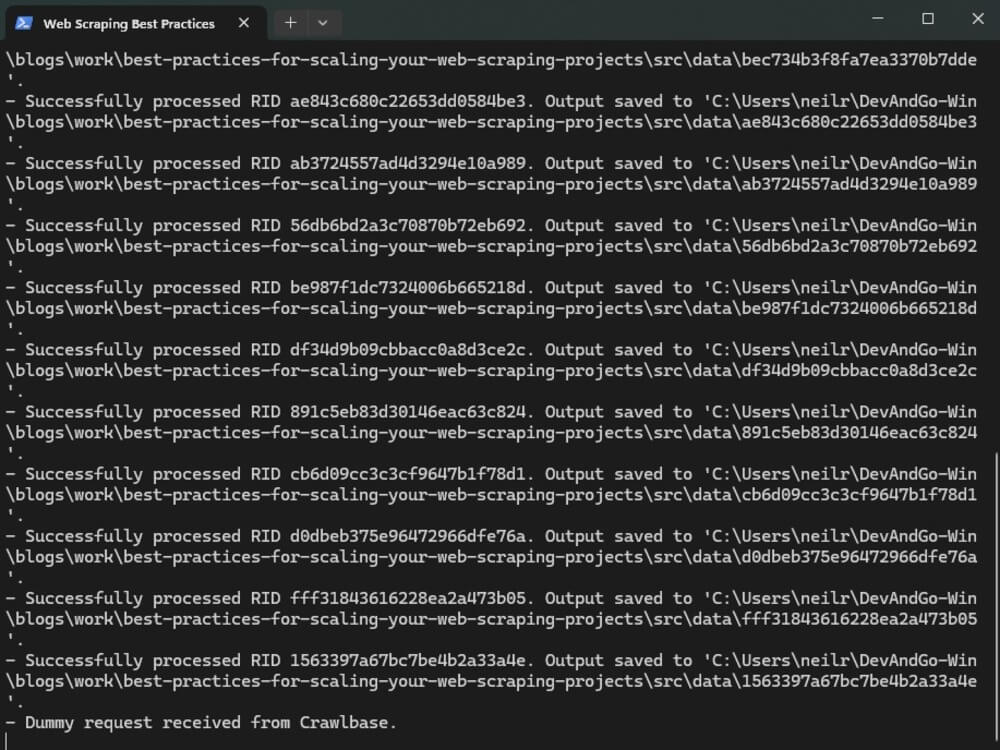

Example output:

Go to the Webhook HTTP server’s terminal console, and you should see output similar to the following:

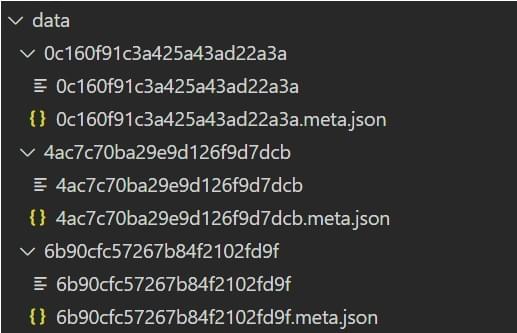

This process generates a data directory, which includes a subdirectory named <rid> for each crawl request:

- The <rid> file contains the scraped data.

- The <rid>.meta.json file contains the associated metadata.

Example:

```json
{
```

Get the complete code on GitHub.

Crawler Maintenance and Monitoring

Once your scaled-up web scraper is up and running, proper monitoring and maintenance are required to keep things scalable and efficient. Here are some things to consider:

Manage Crawler Traffic

Crawlbase offers a complete set of APIs that let you do the following:

- Pause or unpause a crawler

- Purge or delete jobs

- Check active jobs and queue size

For more details, you can explore the Crawler API Docs.

Additionally, if your crawler seems to be lagging or stalls unexpectedly, you can monitor its latency, that is, the time elapsed since the oldest job in the queue was added, from the Crawlers dashboard. If needed, you can also restart your crawler directly from that page.

Monitoring Tools

Use these tools to keep track of your Crawler activity and detect issues before they affect your scaling.

- Crawler Dashboard - View the current cost, success, and fail counts of your TCP or JavaScript Crawler.

- Live Monitor - See real-time activity, including successful crawls, failures, queue size, and pending retries.

- Retry Monitor - View a detailed description of the requests being retried.

Note: The failed attempts shown on the dashboard reflect the Crawler’s internal retry logic. You don’t need to handle them yourself, as the system automatically queues failed jobs for retry.

Crawler Limits

Here are the default values to keep in mind when scaling:

- Push Rate Limit: 30 requests/second

- Concurrency: 10 simultaneous jobs

- Retry Count: 110 attempts per request

- The combined queue limit for all your Crawlers is 1 million pages. If this limit is reached, the system temporarily pauses push requests and resumes automatically when the queue clears.
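These limits make it easy to estimate how long a large job will take just to submit. At the default 30 requests per second, pushing 1,000,000 URLs takes at least 1,000,000 / 30 ≈ 33,333 seconds, a little over 9 hours, before any crawling time. The small helpers below do that arithmetic; queue_waves_needed is a hypothetical name and assumes the queue fully clears between waves of 1 million pages, which is a simplification.

```python
import math

PUSH_RATE_LIMIT = 30       # requests per second (default)
QUEUE_LIMIT = 1_000_000    # combined queue limit across all Crawlers (default)


def min_push_seconds(n_urls: int, rate: float = PUSH_RATE_LIMIT) -> float:
    """Lower bound on the time needed just to push n_urls at `rate` per second."""
    return n_urls / rate


def queue_waves_needed(n_urls: int, queue_limit: int = QUEUE_LIMIT) -> int:
    """Simplified count of queue-sized waves, assuming an empty queue per wave."""
    return math.ceil(n_urls / queue_limit)


if __name__ == "__main__":
    n = 1_000_000
    print(f"{min_push_seconds(n) / 3600:.2f} hours to push {n:,} URLs")
    print(f"{queue_waves_needed(2_500_000)} waves for 2.5M URLs")
```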

Keep in mind that these limits can be adjusted to meet your specific requirements. Just reach out to Crawlbase Customer Support to request an upgrade.

Scale your Web Scraping with Crawlbase

Web scraping projects have evolved beyond writing robust scripts and managing proxies. You need an enterprise-grade infrastructure that is both legally compliant and adaptable to the evolving needs of the modern business world.

Crawlbase is designed for performance, reliability, and scalability. Businesses and developers like you have used its solutions to extract actionable insights for growth.

Frequently Asked Questions

Q: Can I create my own Crawler webhook?

Yes, to scale web scraping, it’s always a good practice to create a webhook for your Crawler. We recommend checking our complete guide How to Use Crawlbase Crawler to learn how.

Q: Can I test the Crawler for free?

Currently, you will need to add your billing details first to use the Crawler. Crawlbase does not provide free credits by default when you sign up for the Crawler, but you can contact customer support to request a free trial.

Q: What are the best practices for handling dynamic content in web scraping?

Looking to tackle dynamic content in web scraping? Here are some top-notch practices to keep in mind:

Leverage APIs: Take a peek at the network activity to see if the data is being pulled from an internal API. Requesting that API directly is usually quicker and more reliable than scraping the rendered page.

Wait Strategically: Instead of relying on hardcoded timeouts, use smart waiting strategies like waitForSelector or waitForNetworkIdle to make sure all elements are fully loaded before you proceed.

Use a Scraping API: Tools like Crawlbase can make your life easier by handling dynamic content for you, managing rendering, JavaScript execution, and even anti-bot measures.

Q: What are the best practices for rotating proxies in web scraping?

Now, if you’re curious about the best practices for rotating proxies in web scraping, here’s what you should consider:

Use Residential or Datacenter Proxies Wisely: Pick the right type based on the website you’re targeting. Residential proxies are tougher to detect but come at a higher cost.

Automate Rotation: Set up automatic IP rotation after a few requests or after a certain number of seconds to keep things fresh.

Avoid Overloading a Single IP: Spread your requests evenly across different proxies to dodge patterns that might alert anti-bot systems.

Monitor Proxy Health: Keep an eye on response times, status codes, and success rates to spot and replace any failing proxies.

Use a Managed Proxy Solution: Services like Crawlbase provide built-in proxy management and rotation, so you won’t have to deal with manual setups.
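If you do manage your own proxy list, rotation and health tracking can be combined in a small helper. ProxyRotator below is a hypothetical class, not part of any library: it moves to the next proxy in round-robin order every rotate_every requests and stops using a proxy after max_failures consecutive failures. The proxy addresses in the usage are placeholders.

```python
class ProxyRotator:
    """Round-robin proxy picker with simple failure-based health checks."""

    def __init__(self, proxies: list[str], rotate_every: int = 5, max_failures: int = 3):
        if not proxies:
            raise ValueError("need at least one proxy")
        self.proxies = list(proxies)
        self.rotate_every = rotate_every
        self.max_failures = max_failures
        self.failures = {p: 0 for p in self.proxies}
        self._index = 0
        self._uses = 0

    def healthy(self) -> list[str]:
        """Proxies that have not hit max_failures consecutive failures."""
        return [p for p in self.proxies if self.failures[p] < self.max_failures]

    def next_proxy(self) -> str:
        pool = self.healthy()
        if not pool:
            raise RuntimeError("no healthy proxies left")
        # Rotate after rotate_every uses so no single IP carries a long streak.
        if self._uses >= self.rotate_every:
            self._index += 1
            self._uses = 0
        self._uses += 1
        return pool[self._index % len(pool)]

    def report(self, proxy: str, ok: bool) -> None:
        # Reset on success so one transient error doesn't retire a proxy forever.
        self.failures[proxy] = 0 if ok else self.failures[proxy] + 1


if __name__ == "__main__":
    rotator = ProxyRotator(["http://proxy-a:8080", "http://proxy-b:8080"], rotate_every=2)
    print([rotator.next_proxy() for _ in range(4)])
```

Pass the chosen proxy to your HTTP client, for example requests.get(url, proxies={"http": p, "https": p}), then call report(p, ok=...) based on the response status.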

Q: What are the best practices for handling large datasets from web scraping?

When it comes to handling large datasets from web scraping, there are several best practices that can make a significant difference. Here are a few tips to keep in mind:

Use Pagination and Batching: Instead of scraping everything at once, break your tasks into smaller chunks using page parameters or date ranges. This helps prevent overwhelming servers or running into memory issues.

Store Data Incrementally: Stream your scraped data directly into databases or cloud storage as you go. This way, you can avoid memory overload and keep everything organized.

Normalize and Clean Data Early: Take the time to clean, deduplicate, and structure your data while you’re scraping. This will lighten the load for any processing you need to do later on.

Implement Retry and Logging Systems: Monitor any URLs that fail to scrape and establish a system to retry them at a later time. Logging your scraping stats can also help you track your progress and spot any issues.

Use Scalable Infrastructure: Think about using asynchronous scraping, job queues, or serverless functions to handle larger tasks. Tools like Crawlbase can help you scale your data extraction without the hassle of managing backend resources.
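Incremental storage, early cleaning, and deduplication can be combined in a few lines. This sketch writes each record to a JSON Lines file as soon as it is produced, trims whitespace in string fields, and skips records whose url was already written in the same run. The function names are hypothetical, and in production you would likely swap the local file for a database or cloud storage.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Iterable


def clean_record(record: dict[str, Any]) -> dict[str, Any]:
    """Example cleaning step: trim whitespace in string fields."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def store_incrementally(records: Iterable[dict[str, Any]], out_path: Path, key: str = "url") -> int:
    """Append cleaned, de-duplicated records to a JSON Lines file as they arrive.

    Only the set of seen keys is kept in memory; duplicates are detected
    within a single call. Returns the number of records written.
    """
    seen: set[Any] = set()
    written = 0
    with out_path.open("a", encoding="utf-8") as f:
        for record in records:
            row = clean_record(record)
            if row.get(key) in seen:
                continue  # skip duplicates now instead of cleaning them up later
            seen.add(row.get(key))
            f.write(json.dumps(row, ensure_ascii=False) + "\n")
            written += 1
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        rows = ({"url": u, "title": " Example "} for u in ["a", "b", "a"])
        out = Path(tmp) / "items.jsonl"
        print(store_incrementally(rows, out), "records written")
```

Because records is consumed lazily, you can feed it a generator that yields pages as your scraper produces them, so memory use stays flat no matter how large the crawl gets.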