This blog focuses on AliExpress Search Page Scraping using JavaScript. AliExpress is a massive marketplace with useful product information critical for online businesses. It serves as a veritable goldmine for those seeking data for purposes such as product analysis and market research. However, AliExpress has some strong defenses. If you try to extract data without caution, you’re likely to run into blocks, bot detection, and possibly CAPTCHAs - those puzzles that stop automated data collection in its tracks.

But don’t worry! We’ll guide you through every step, showing you how to grab data effectively and sidestep those blocking obstacles without spending too much time and money. It’s like having a friendly tutor by your side, explaining each part of the process.

By the end of this blog, you’ll better understand how to use crawling tools to get the data you need from AliExpress, helping your business make smart decisions in the world of online shopping.

If you prefer learning by video, we have also created a video tutorial for this blog.

Oh and Happy Scraping this Halloween!

Table of Contents

I. How to Search using Keywords in AliExpress

II. Project Scope and Structure

III. Setting Up Your Environment

IV. AliExpress Search Page Scraping

V. Accepting Keywords through Postman

VI. Saving the Data to JSON

VII. Conclusion

VIII. Frequently Asked Questions

I. How to Search using Keywords in AliExpress

Searching the AliExpress Search Engine Results Page (SERP) with keywords is a straightforward process. Here are the steps to search for products on AliExpress using keywords:

- Visit AliExpress: Open your web browser and go to the AliExpress website (www.aliexpress.com).

- Enter Keywords: On the AliExpress homepage, you’ll find a search bar at the top. Enter your desired keywords in this search bar. These keywords should describe the product you’re looking for. For example, if you’re searching for “red sneakers,” simply type “red sneakers” in the search box.

- Click “Search”: After entering your keywords, click on the “Search” button or hit “Enter” on your keyboard. AliExpress will then process your search query.

- Browse Search Results: The AliExpress SERP will display a list of products that match your keywords. You can scroll through the search results to explore different products. The results will include images, product titles, prices, seller ratings, and other relevant information.

Browsing through individual products on AliExpress is a walk in the park. Yet, when you’re faced with the daunting task of sifting through thousands of keywords and extracting data from the search results, things can take a turn toward the tedious. How do you tackle this challenge? How do you make it possible to extract product information from AliExpress in the shortest possible time? The solution lies just a scroll away, so keep reading to uncover the secrets.

II. Project Scope and Structure

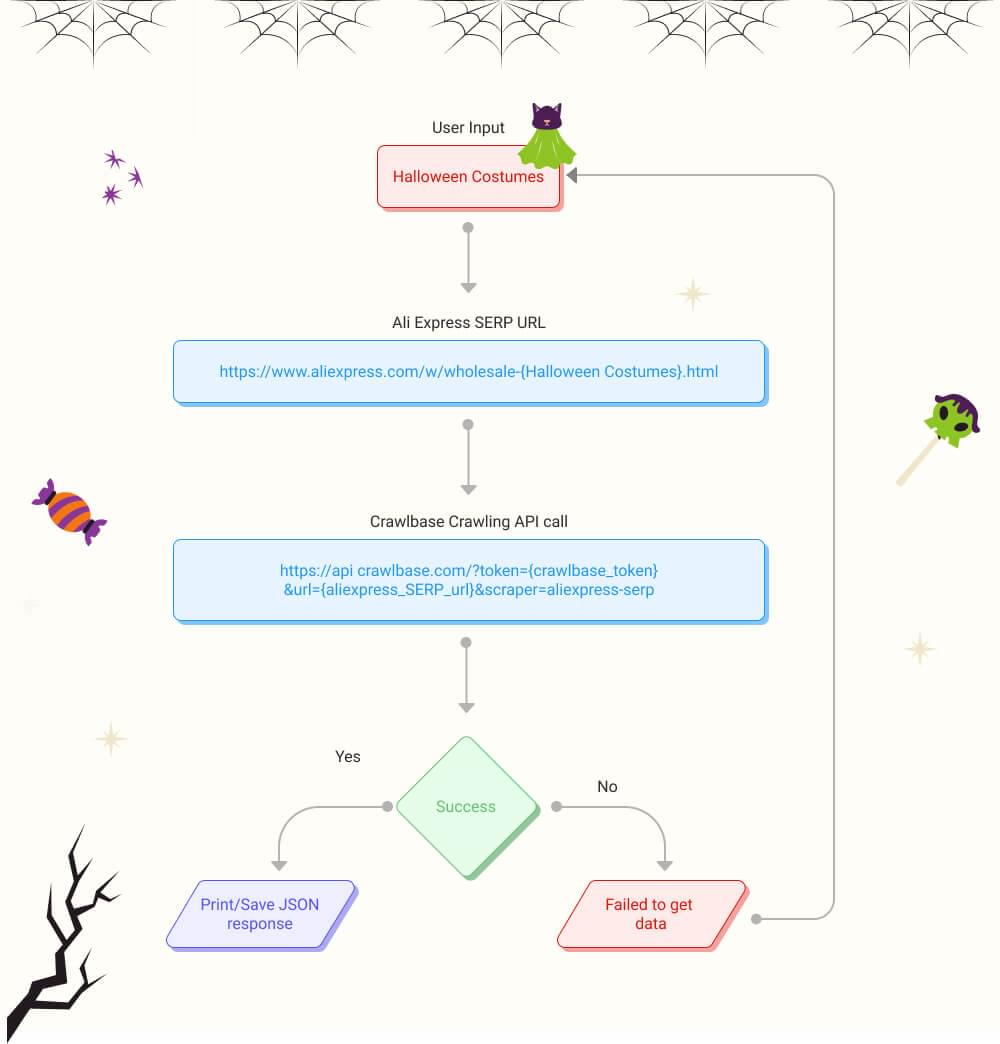

Our aim is to simplify and scale the process of searching for products on AliExpress, scraping the results, and storing them for later use, whether you need the data for analytics, market research, or pricing strategies. The project lets you input keywords, which are transformed into valid AliExpress Search Engine Results Page (SERP) URLs. These URLs are then forwarded to the Crawlbase Crawling API for efficient web scraping.
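To make the keyword-to-URL step concrete, here is a minimal sketch. The helper name `buildSearchUrl` is hypothetical, and the `/w/wholesale-<keywords>.html` path is an assumption about how AliExpress structures its search URLs, so verify it against a real search in your browser before relying on it.

```javascript
// Hypothetical helper: turn free-text keywords into an AliExpress search URL.
// The "/w/wholesale-<keywords>.html" pattern is an assumption, not a documented API.
function buildSearchUrl(keywords) {
  if (typeof keywords !== 'string' || keywords.trim() === '') {
    throw new Error('keywords must be a non-empty string');
  }
  const slug = keywords
    .trim()
    .split(/\s+/)            // split on any run of whitespace
    .map(encodeURIComponent) // escape characters that are unsafe in a URL path
    .join('-');              // join the words with hyphens
  return `https://www.aliexpress.com/w/wholesale-${slug}.html`;
}

console.log(buildSearchUrl('red sneakers'));
// prints "https://www.aliexpress.com/w/wholesale-red-sneakers.html"
```

Keeping this logic in one small function makes it easy to adjust if the URL pattern turns out to be different for your region or changes over time.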

To achieve this, we will use Postman to accept user input, JavaScript running on Bun (a JavaScript runtime), the Express package to build the server, and the Crawlbase Crawling API to crawl and scrape AliExpress. This approach ensures seamless data retrieval while minimizing the risk of being blocked during the scraping process.

Below, you’ll find a simplified representation of the project’s structure.

III. Setting Up Your Environment

So, you’ve got your keywords prepped, and you’re all set to dive headfirst into the realm of AliExpress data. But before we proceed on our web scraping adventure, there’s a bit of housekeeping to attend to: setting up our environment. It is essential prep work to ensure a smooth journey ahead.

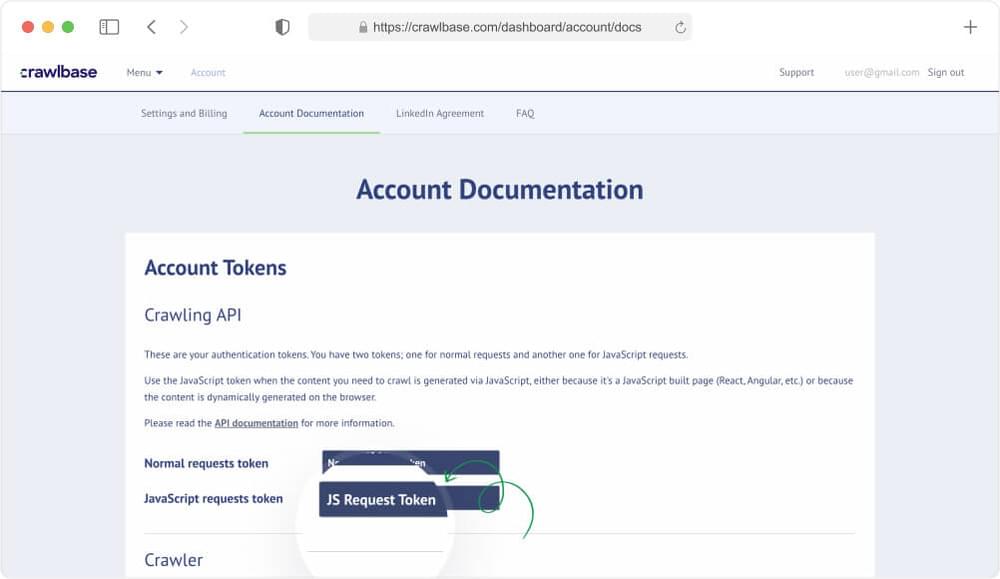

1. Acquiring Your Crawlbase JavaScript Token

To get started, we’ll need to create a free Crawlbase account and acquire a JavaScript token. This token is essential for enabling our efficient data scraping from AliExpress pages, using a headless browser infrastructure and a specialized Crawling API data scraper designed specifically for AliExpress SERPs.
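One common way to keep the token out of your source code is to read it from an environment variable. The sketch below assumes a variable named `CRAWLBASE_JS_TOKEN`; that name, and the helper `getCrawlbaseToken`, are choices made for this example and not something Crawlbase requires.

```javascript
// Hypothetical helper: read the Crawlbase JavaScript token from the environment.
// CRAWLBASE_JS_TOKEN is a name chosen for this example only.
function getCrawlbaseToken(env = process.env) {
  const token = (env.CRAWLBASE_JS_TOKEN || '').trim();
  if (!token) {
    throw new Error('Set CRAWLBASE_JS_TOKEN to your Crawlbase JavaScript token');
  }
  return token;
}

// Pass an explicit object here so the snippet runs without a real token.
console.log(getCrawlbaseToken({ CRAWLBASE_JS_TOKEN: 'YOUR_JS_TOKEN' }));
// prints "YOUR_JS_TOKEN"
```

In a real run you would set the variable in your shell before starting the server and call `getCrawlbaseToken()` with no arguments.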

2. Establishing Your JavaScript Environment

Now that you’ve got that JavaScript token securely in your grip, it’s time to set the stage for our coding journey. Begin by creating a new project directory for your scraping application. In this example, we’re creating a folder named “Crawlbase”:

    mkdir Crawlbase

3. Utilizing the Power of Bun

In this project, we’ll be leveraging the power of Bun, so it’s crucial to ensure that you have Bun properly installed. Bun is a versatile, all-in-one toolkit tailored for JavaScript and TypeScript applications.

At the heart of Bun lies the Bun runtime, a high-performance JavaScript runtime meticulously engineered to replace Node.js. What sets it apart is its implementation in the Zig programming language and its utilization of JavaScriptCore under the hood. These factors work in harmony to significantly reduce startup times and memory consumption, making it a game-changer for your development and web scraping needs.

Execute the line below:

    cd Crawlbase && bun init

This command is used to initialize a new project with Bun. When you run bun init in your command line or terminal, it sets up the basic structure and configuration for your web scraping project. This can include creating directories and files that are necessary for your project to function correctly.

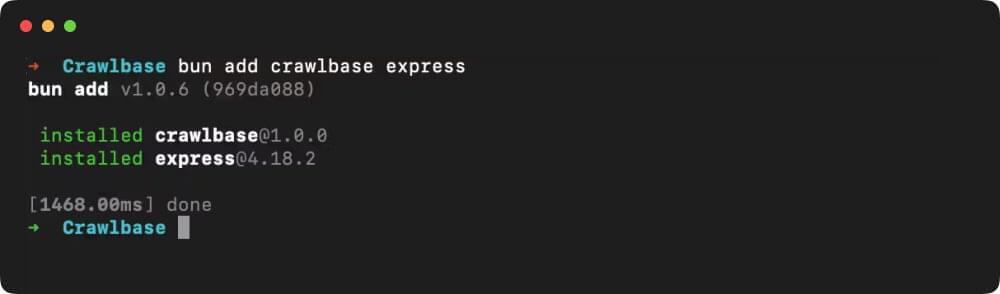

4. Crawlbase JavaScript Library and Express

We’re going to introduce two vital libraries: Crawlbase and Express. The Crawlbase JavaScript library lets us integrate the Crawling API into our JavaScript project with very little code. Express is a popular web application framework that we’ll use to create our scraping server.

To get these essential libraries added to your project, simply run the following command in your terminal:

    bun add crawlbase express

With the Crawlbase library and Express now in the mix, you’re on your way to unlocking the full potential of the Crawling API and creating a robust scraping application. We’re making strides, so stay tuned as we proceed further in this exciting project.

IV. AliExpress Search Page Scraping

Now that the development environment is all set, let’s dive into the core function of our code. You can copy and paste the following code block and understand it by reading the explanation below.

    const express = require('express'); // Import the 'express' module

- We start by importing the necessary modules: `express`, `CrawlingAPI` from Crawlbase, and `fs` for file system operations.

- We initialize the Crawlbase Crawling API with your Crawlbase JavaScript token. This token grants access to the Crawlbase services.

- An Express app is created, and we specify the port number for the server. It defaults to port 3000 if not defined in the environment variables.

- We define a route, “/scrape-products,” using `app.get`. This route listens for GET requests and is responsible for the web scraping process.

- Within this route, we use `api.get` to request the HTML content from an AliExpress URL that is dynamically generated based on the user’s search keywords. We replace spaces in the keywords with hyphens to create the appropriate URL structure.

- We specify the “aliexpress-serp” scraper to instruct Crawlbase to use the AliExpress SERP scraper for this specific URL.

- If the response from the API has a status code of 200 (indicating success), we extract the scraped product data and log it in the console. The scraped data is then sent back as a JSON response to the client.

- If the API response has a different status code, an error is thrown with a message indicating the failure status.

- In the case of any errors or exceptions, we handle them by logging an error message and sending a 500 Internal Server Error response with a message indicating that the data wasn’t saved.

- Finally, we start the Express app, and it begins listening on the specified port. A message is displayed in the console to confirm that the server is up and running.
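The route logic described in the list above can be sketched as follows. This is a reconstruction from the explanation, not the original listing. The Crawling API client is passed in as a parameter so the handler can be tried without a network call. The response fields `statusCode` and `json.body`, the search URL pattern, and the helper name `createScrapeHandler` are all assumptions made for this example.

```javascript
// Sketch of the "/scrape-products" route logic with the API client injected.
// Assumptions: response.statusCode and response.json.body, and the search URL pattern.
function createScrapeHandler(api) {
  return async function scrapeProducts(req, res) {
    try {
      const keywords = String(req.query.keywords || '').trim();
      // Replace runs of whitespace with hyphens to build the search URL.
      const url = `https://www.aliexpress.com/w/wholesale-${keywords.replace(/\s+/g, '-')}.html`;
      const response = await api.get(url, { scraper: 'aliexpress-serp' });
      if (response.statusCode !== 200) {
        throw new Error(`API request failed with status: ${response.statusCode}`);
      }
      const products = response.json.body;
      console.log('Scraped products:', products);
      res.json(products); // send the scraped data back to the client
    } catch (error) {
      console.error('Scraping failed:', error.message);
      res.status(500).json({ error: 'Data was not saved' });
    }
  };
}

// Stand-ins for the real client and the Express response object, for a dry run.
const fakeApi = {
  get: async (url) => ({ statusCode: 200, json: { body: { url, products: [] } } }),
};
const fakeRes = {
  statusCode: 200,
  status(code) { this.statusCode = code; return this; },
  json(payload) { this.payload = payload; console.log(this.statusCode, payload); return this; },
};

createScrapeHandler(fakeApi)({ query: { keywords: 'Halloween costumes' } }, fakeRes);
```

In the real server you would register it with something like `app.get('/scrape-products', createScrapeHandler(api))`, where `api` is the `CrawlingAPI` instance created with your token.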

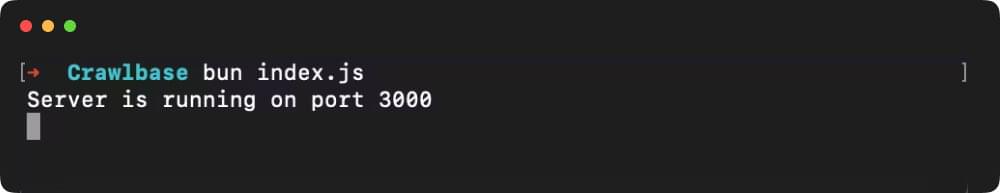

To execute the app, simply run the command below:

    bun index.js

The server is up and running:

This code sets up a functional web server that can scrape product data from AliExpress search results based on user-defined keywords. It uses the Crawlbase library and Express to provide a simple API endpoint for web scraping, making your project all the more dynamic and interactive.

Now, how exactly will a user enter the keywords? Let’s find out in the next section of the blog.

V. Accepting Keywords through Postman

After we’ve set up our web scraping server to extract data from AliExpress search results, it’s time to test it out using Postman, a popular and intuitive API testing tool.

In this section, we’ll show you how to use Postman to send keyword queries to our /scrape-products route and receive the scraped data. Keep in mind that you can use any keywords you like for this test. For our example, we’ll be searching for “Halloween costumes” on AliExpress.

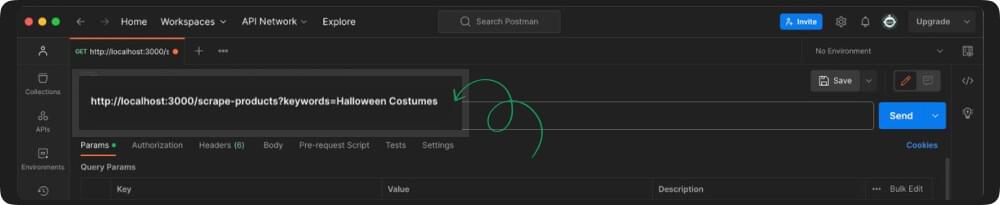

- Open Postman: If you haven’t already, download and install Postman, and fire it up.

- Select Request Type: In Postman, choose the type of HTTP request you want to make. In our case, we’ll select “GET” since we’re fetching data.

- Enter the URL: In the URL field, enter the endpoint for your scraping route. Assuming your server is running locally on port 3000, it would be something like `http://localhost:3000/scrape-products`. Make sure to adjust the URL according to your setup.

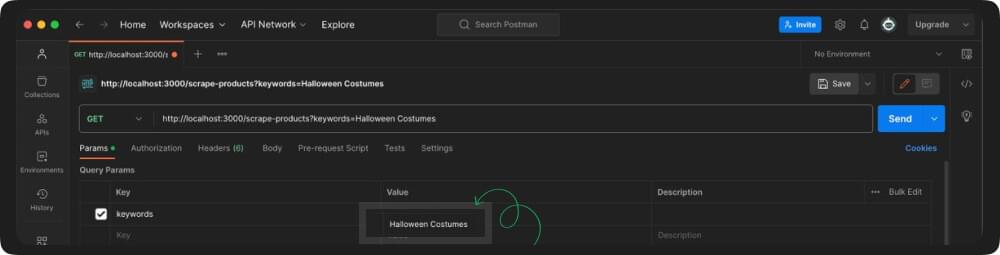

- Add Keywords as a Query Parameter: To provide keywords for your search, you’ll include them as query parameters. In Postman, you can add these parameters to the request URL. For our example, we’ll add `keywords` as a parameter with the value “Halloween costumes.” In the URL, it will look something like this: `http://localhost:3000/scrape-products?keywords=Halloween%20costumes`.

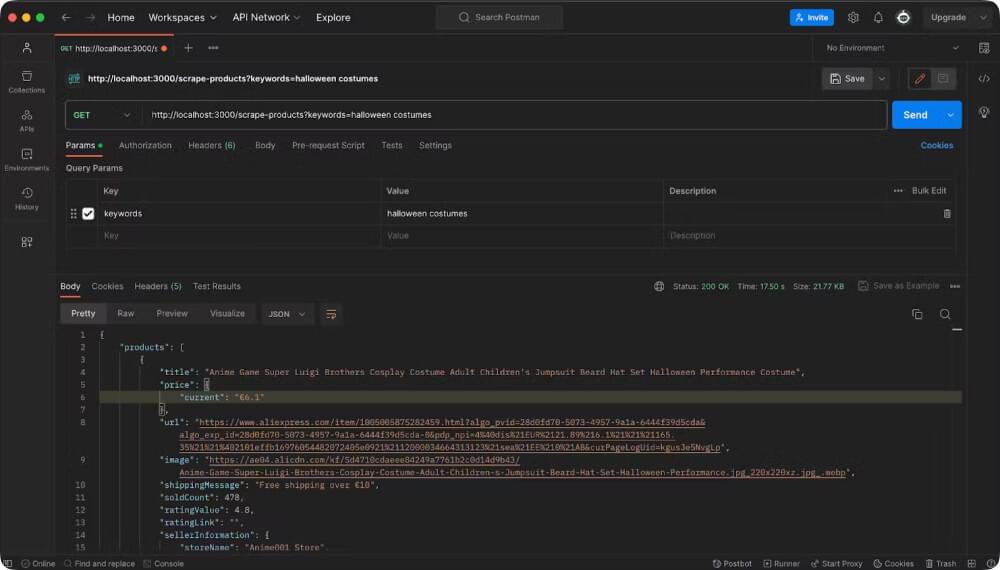

- Send the Request: Click the “Send” button in Postman to fire off your request. Your server will respond by sending back the scraped data.

- View the Response: Postman will display the response in the bottom panel. You should see the scraped data from AliExpress, which can be in JSON format or another format depending on how your server is configured.

1 | { |

That’s it! You’ve successfully used Postman to send keywords to your web scraping server and received the scraped data in response. Remember, you can replace “Halloween costumes” with any keywords you want to search for on AliExpress. This process demonstrates the dynamic nature of your web scraping application, making it adaptable to various search queries.
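If you would rather script the request than click through Postman, the same URL can be built in code. This is a small sketch; `buildRequestUrl` is a hypothetical helper, and the base URL assumes the server is running locally on port 3000 as in the steps above.

```javascript
// Hypothetical helper: build the same request URL that Postman sends.
function buildRequestUrl(baseUrl, keywords) {
  // encodeURIComponent turns the space into %20, matching the Postman example.
  return `${baseUrl}/scrape-products?keywords=${encodeURIComponent(keywords)}`;
}

const requestUrl = buildRequestUrl('http://localhost:3000', 'Halloween costumes');
console.log(requestUrl);
// prints "http://localhost:3000/scrape-products?keywords=Halloween%20costumes"

// With the scraping server running, the request could then be sent like this:
// fetch(requestUrl).then((res) => res.json()).then(console.log);
```

The `fetch` call is left commented out so the snippet runs even when the server is not started.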

VI. Saving the Data to JSON

In our journey of scraping AliExpress search results so far, we’ve successfully set up our web scraping server and tested it with various keywords using Postman. Now, it’s time to enhance our project further by adding a feature to save the scraped data to a JSON file. This step is incredibly valuable as it allows you to store and later analyze the data you’ve extracted.

We’ve introduced a crucial addition to the /scrape-products route in our code. This addition ensures that the data we scrape isn’t just sent in response but also saved in a structured JSON file. Let’s evaluate the code.

    // Saving the scraped products in JSON file

- We use the `fs` module, which we imported earlier, to write data to a file. In this case, we’re creating a new file named “AliExpressProducts.json” in the current working directory.

- `JSON.stringify({ scrapeProducts }, null, 2)` converts our scraped data (in the `scrapeProducts` variable) into a JSON-formatted string. The `null, 2` arguments are for pretty printing, which adds indentation for readability.

- The `fs.writeFileSync` method then writes this JSON string to the specified file.
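The saving step described above can be tried in isolation with a few lines. In this sketch the helper name `saveProducts` and the sample product are made up for illustration, and the file goes to the system temp directory so the example does not clutter your project folder.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper mirroring the step above: wrap the data in an object
// and write it to disk as pretty-printed JSON (2-space indentation).
function saveProducts(scrapeProducts, filePath) {
  fs.writeFileSync(filePath, JSON.stringify({ scrapeProducts }, null, 2));
  return filePath;
}

// Made-up sample data standing in for real scraped results.
const sample = [{ title: 'Sample product', price: 9.99 }];
const outFile = saveProducts(sample, path.join(os.tmpdir(), 'AliExpressProducts.json'));

// Read the file back to confirm the data round-trips.
const saved = JSON.parse(fs.readFileSync(outFile, 'utf8'));
console.log(saved.scrapeProducts.length);
// prints 1
```

Note that `fs.writeFileSync` overwrites the file on every call, so each new search replaces the previous results unless you vary the file name.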

Below is the complete code of our project:

    const express = require('express'); // Import the 'express' module

With this addition, the scraped data will not only be available in real-time responses but also stored in a structured JSON file for future use. It’s a crucial step to ensure you can analyze, process, and visualize the data you collect from AliExpress efficiently.

VII. Conclusion

One of the remarkable things about the code we’ve walked through is that it’s not just for demonstration purposes – it’s designed for you to copy, paste, and adapt for your own web scraping projects. Whether you’re scraping AliExpress or exploring other web scraping endeavors, this code can serve as a solid foundation.

Here are a few key points to consider:

Accessibility: The code is readily accessible, and you can copy it without any restrictions.

Adaptability: Feel free to modify the code to suit your specific use case. Want to scrape data from a different website? You can change the URL and adjust the scraping logic accordingly.

Keyword Flexibility: While we’ve used “Halloween costumes” as an example, you can replace it with any search keywords that align with your needs. This flexibility empowers you to target any product or content you’re interested in.

Data Storage: The code includes functionality to store scraped data to a JSON file. You can customize the file name, format, or storage location to match your preferences.

Integration: This code can be integrated into your existing projects or used as a standalone web scraping application. It’s versatile and adaptable to your requirements.

Learning and Exploration: Even if you’re new to web scraping, this code serves as an educational tool. By examining and experimenting with it, you can gain valuable insights into web scraping techniques.

If you want to learn how to scrape AliExpress using Python, here is a comprehensive guide for you:

📜 Scraping AliExpress with Python

And before you leave, here are a few more guides to help you excel at scraping data.

📜 How to Scrape Amazon Search Pages

📜 How to Scrape Walmart Search Pages

Additionally, for other e-commerce scraping guides, check out our tutorials on scraping product data from Walmart, eBay, and Amazon.

So, go ahead, copy the code, experiment with it, and mold it to your unique needs. It’s your gateway to the world of web scraping, and the possibilities are only limited by your imagination. Whether you’re pursuing e-commerce data, research, or any other data-driven project, this code can be your trusty starting point.

Enjoy scraping this Halloween!

VIII. Frequently Asked Questions

Q. Why choose AliExpress as a data source for web scraping?

AliExpress is a prime candidate for web scraping because it is one of the world’s largest e-commerce platforms, offering a vast and diverse range of products from numerous sellers. Here are several compelling reasons to choose AliExpress:

1. Wide Product Variety: AliExpress hosts a staggering array of products, from electronics to fashion, home goods, and more. This diversity makes it an ideal source for market research and product analysis.

2. Competitive Insights: By scraping AliExpress, businesses can gain valuable insights into market trends, popular products, pricing strategies, and competition, enabling informed decision-making.

3. Pricing Data: AliExpress often offers competitive prices, and scraping this data can help businesses in pricing strategies and staying competitive in the market.

4. Supplier Information: Businesses can use scraped data to identify potential suppliers and assess their reliability, product quality, and pricing.

5. User Reviews and Ratings: AliExpress contains a wealth of user-generated reviews and ratings. Scraping this information provides insights into product quality and customer satisfaction.

6. Product Images: Scraping product images can be beneficial for e-commerce businesses in building product catalogs and marketing materials.

In summary, AliExpress offers an abundance of data that can be invaluable for e-commerce businesses, making it a prime choice for web scraping to gain a competitive edge and make informed business decisions.

Q. How can I ensure data privacy and security when web scraping AliExpress with the Crawlbase API?

Crawlbase’s feature-rich framework takes care of data privacy and security while web scraping AliExpress. It protects your anonymity with rotating proxies, user-agent customization, and session management. Advanced algorithms handle CAPTCHAs, optimize scraping rates to prevent server overloads, and adapt to evolving security measures, maintaining a high level of privacy and security. With Crawlbase, your scraping on AliExpress is both secure and private, allowing you to focus on your objectives while staying anonymous and compliant with ethical scraping practices.

Q. What are some real-world applications of web scraping on AliExpress?

Web scraping from AliExpress has a wide range of practical applications in the real world. Here are some examples of how businesses can utilize the data obtained from AliExpress:

Market Research: Web scraping allows businesses to gather information on trending products, pricing strategies, and customer preferences. This data is vital for conducting market research and making informed decisions about product offerings and pricing.

Competitor Analysis: Scraping data from AliExpress enables businesses to monitor competitors’ pricing, product listings, and customer reviews. This competitive intelligence helps companies adjust their strategies to gain an edge in the market.

Price Comparison: Businesses can use scraped data to compare prices of products on AliExpress with their own offerings. This helps in adjusting pricing strategies to remain competitive.

SEO and Keywords: Extracting keywords and popular search terms from AliExpress can aid in optimizing SEO strategies, ensuring that products are easily discoverable on search engines.

Trend Identification: Web scraping can be used to identify emerging trends and popular product categories, enabling businesses to align their offerings with market demand.

Marketing Campaigns: Data from AliExpress can inform the development of marketing campaigns, targeting products that are currently in demand and aligning promotions with seasonal trends.

Product Development: Analyzing customer feedback and preferences can guide the development of new products or improvements to existing ones.

These are just a few real-world applications of web scraping on AliExpress, and businesses across various industries can use this data to improve decision-making, enhance their competitiveness, and streamline operations.

Q. Where can I find additional resources or support for web scraping and using the Crawlbase API?

Crawlbase offers a plethora of additional resources to support your web scraping endeavors and help you make the most of the Crawlbase API. For more examples, use cases, and in-depth information, we recommend browsing through Crawlbase’s Knowledge Hub page. There, you’ll discover a collection of content and guides to enhance your web scraping skills, expand your knowledge, and ensure you’re well-equipped for successful web scraping projects.