Smart Proxy

Get smart proxies for your web crawler now and stop worrying about proxy lists.

No credit card required. Get the first 1000 requests for free.

Intelligent. Secure. Proxies.

Advanced Smart Proxy Features

Start with 5,000 credits free of charge upon signing up

Unlimited Bandwidth

You are free to consume as much data as needed. No bandwidth restrictions, guaranteed.

Custom Geolocalization

Get the ability to maintain proxy sessions with custom geolocation to boost your success rate.

Artificial Intelligence

Developed and validated using AI and machine learning techniques to guarantee fast and accurate results.

If you need a custom solution or expert advice on Smart Proxy, our team of engineers is ready to handle every challenge.

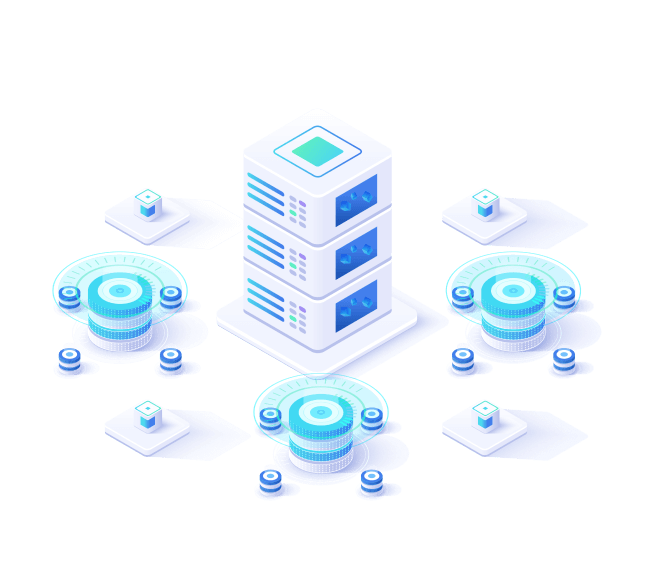

Crawlbase Smart Proxy

Forwards your connection requests to a randomly rotating IP address in a pool of proxies before reaching the target website.

Unlike other proxies, the Smart Proxy is more effective at avoiding blocked requests and bans because it uses a combination of artificial intelligence and machine learning to bypass CAPTCHAs and blocks. It also allows you to use a single node to connect to a proxy network countless times. The biggest benefit of this type of proxy pool is that you remain anonymous and can make significantly more requests without getting banned than you could with a single proxy.

Follow these simple instructions from Smart Proxy docs to get started.

The most advanced rotating proxies on the market

If your project requires a proxy host with a port, then this is the perfect product for you.

Our team of network engineers constantly increases the available pools of proxies while removing the failing ones to ensure that only quality proxies are active within the network.

99% Success Rate

100% Network Uptime

24/7 Support from our team of web scraping experts

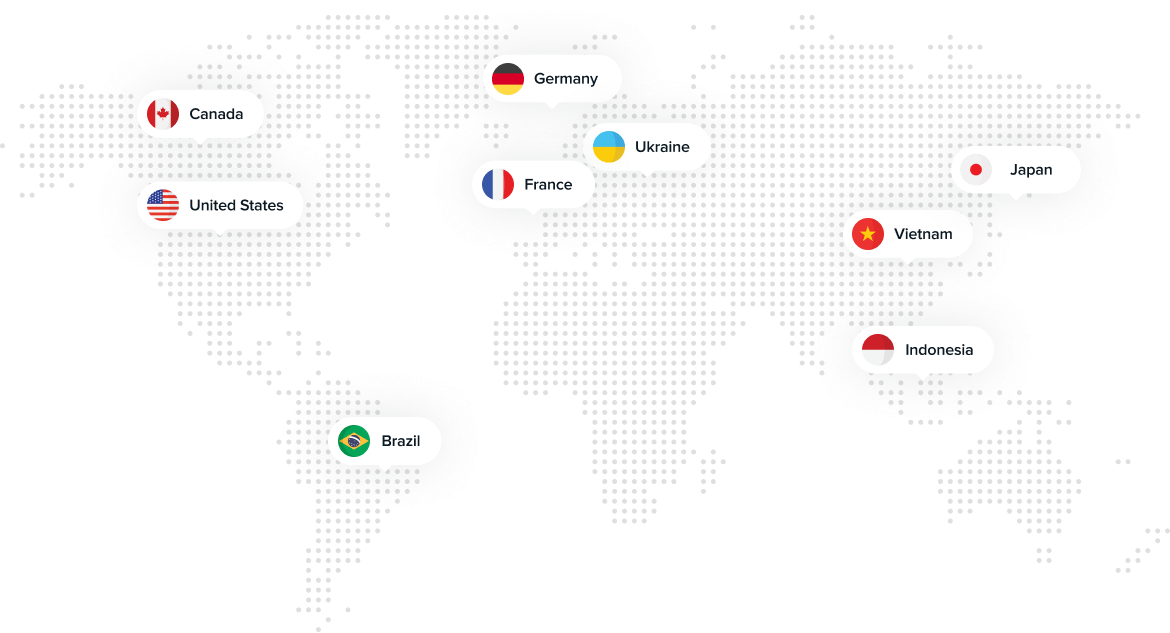

140M Residential Proxies Around the World

More than 200,000,000 unique proxies worldwide

Start using our Smart Proxy with automatic IP rotation to instantly expand your web crawler’s capabilities and perform millions of requests per day.

Supporting more than 45 countries.

Achieve true internet freedom by bypassing blocks and CAPTCHAs

Here at Crawlbase, we care about data. That is why we created Smart Proxy to open the doors to data for everyone.

Our pool of premium-grade proxies will allow you to access and scrape online data anonymously, even while sending millions of requests. These quality proxies are also regularly rotated to ensure each of your connection requests will never get throttled, blocked, or rejected.

Smart Proxy

A mix of datacenter and residential proxies

Our massive worldwide pool of datacenter and residential proxies is optimized for maximum efficiency with fast multi-threaded operations.

Datacenter proxy

These types of proxies are remote servers that can mask your IP and mostly come from cloud server providers. These proxies are shared by many users at the same time and are optimized for speed and reliability.

Read more about Datacenter proxies

Create a free account!

Residential proxy

These are real devices with IP addresses provided by Internet Service Providers (ISPs) around the world. This private proxy type is perfect if you want your web scraper to stay anonymous while crawling millions of websites.

Read more about Residential proxies

Create a free account!

Crawl and Scrape websites anonymously

Stay on top of the game and protect your security and anonymity while making millions of requests every day.

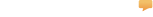

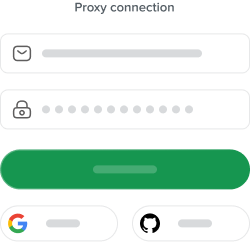

How it Works

Log in to your Crawlbase account to obtain the authentication key

Send your request using the proxy host, port, authentication key, and target website. Example using cURL: curl -x "http://[email protected]:8012" -k "http://httpbin.org/ip"

Export the response as JSON or CSV
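The steps above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not an official client: the helper names `proxy_auth_header`, `build_request`, and `make_opener` are hypothetical, the authentication key is assumed to be sent as the proxy username with an empty password (which is how cURL treats a `key@host` proxy URL), and the proxy host is left as a parameter because it is not legible in the cURL example. Only port 8012 is taken from that example.

```python
import base64
import urllib.request


def proxy_auth_header(token: str) -> str:
    """Basic credentials: token as username, empty password (assumption)."""
    raw = f"{token}:".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_request(target_url: str, token: str) -> urllib.request.Request:
    """Attach the proxy credentials to a request for the target website."""
    request = urllib.request.Request(target_url)
    request.add_header("Proxy-Authorization", proxy_auth_header(token))
    return request


def make_opener(host: str, port: int = 8012) -> urllib.request.OpenerDirector:
    """Route both HTTP and HTTPS traffic through the given proxy host and port."""
    proxy = f"http://{host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

To send a request, pass the result of `build_request(target_url, token)` to `make_opener(host).open(...)`, using the host and authentication key shown in your own account. Note that the cURL example passes `-k`, which turns off TLS certificate verification; this sketch leaves verification at Python's default.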

Smart Proxy monitoring

We provide essential tools to automatically monitor your usage, which can save valuable time and effort for you and your team. Your dashboard is constantly updated so that the data stays fresh and reliable.

Advantages of using Smart Proxy

Creating or even managing your own proxy list is costly and inefficient. The Smart Proxy will take any of your online projects to the next level, allowing you to instantly access millions of IPs and make automation easier than ever.

Supports all browsers and custom applications

Compatibility is not an issue with Smart Proxy. Our proxy network supports every browser on all platforms as well as any custom software and applications.

Simplify Proxy Management with our Smart Proxy Manager

Our Smart Proxy Manager automates traffic routing through proxy servers for you. It avoids server overloading and effectively handles concurrent requests.

Our Smart Proxy Manager is fast and makes sure there is no delay in requests. With its automated IP rotation, you can easily bypass restrictions, avoid IP bans, and increase your web scraping speed and accuracy. Our Smart Proxy Manager balances the load so that you can perform ad verification, market research, and competitor analysis with ease, while maintaining the highest level of privacy and security for your web scraping activities.

Why is Smart Proxy better than a Backconnect Proxy?

Backconnect proxies have not changed much in the last decade. That is why, at Crawlbase, we decided to innovate and create what we believe is the future of backconnect proxies. This is what we call “Smart Proxy”.

Our Smart Proxy takes the backconnect proxy to a new level. We have significantly enhanced our Proxy Backconnect product in every way, making it smarter than ever.

Benefit from Crawlbase-trained AI to avoid CAPTCHAs and blocks

Automatic retries for failed requests

Option to request using headless browsers in our worldwide infrastructure

Intelligent pooling

Improved security

Increased IP rotation

Easier access and scalability features for bigger clients

Choose your Smart Proxy plan

Choose the proxy package that best suits your needs

If you need more requests, please contact us here


Why choose Crawlbase’s Smart Proxy?

Thousands of individuals and companies around the world love Crawlbase. Our products have been tested by many users and have continued to earn satisfied clients over the years.

At Crawlbase, we take care of our clients. Our team of engineers and the support group work together to provide world-class support and ensure that every client is happy. We do not settle for less, and we are constantly striving to improve our products and services.

Simple pricing

For small and medium projects, without hidden fees.

No long-term contracts

Commitment-free subscriptions. You can stop at any time and come back later when you need worldwide proxies again.

Satisfaction guaranteed

If you are not satisfied within 24 hours after payment is completed, requesting a refund is easy, with no questions asked.

FAQs

Frequently Asked Questions

Gain instant access to useful features upon signing up.

What does the amount of IP rotation mean on each package?

The number indicated on the IP rotation is the number of IPs you can access at any given time. Let us use the premium package as an example. For this plan, you will gain access to more than 1 million unique proxies, but not all of them can be used at the same time. Your account will be assigned 20,000 IPs at any given time, and this pool of IPs currently assigned to you will reset randomly. After each reset, you will gain access to another 20,000 IPs from the total pool of more than 1 million IPs.
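The arithmetic in this answer can be illustrated with a short Python sketch. It is only an illustration: the source does not say how the assigned IPs are chosen or when a reset happens, so the uniform random draw below is an assumption, and `assign_ips` and `share_in_use` are hypothetical names. The pool size uses 1,000,000 as a lower bound for "more than 1 million".

```python
import random

TOTAL_POOL = 1_000_000     # lower bound for "more than 1 million" proxies
ASSIGNED_AT_ONCE = 20_000  # IPs assigned to the account at any given time


def assign_ips(rng: random.Random, total: int = TOTAL_POOL,
               assigned: int = ASSIGNED_AT_ONCE) -> set:
    """Draw the IDs of the proxies usable until the next reset (assumed uniform)."""
    return set(rng.sample(range(total), assigned))


def share_in_use(total: int = TOTAL_POOL, assigned: int = ASSIGNED_AT_ONCE) -> float:
    """Fraction of the whole pool that is usable at one moment."""
    return assigned / total
```

With these figures, at most 2% of the total pool is usable at any one moment, and each call to `assign_ips` stands in for one reset.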

What are these “threads” indicated on your Smart Proxy plans?

In a technical sense, the threads specified on the Smart Proxy packages refer to independent paths of execution through program code. In practice, threads allow you to use multiple proxy connections at once.

As an example, you can think of a thread as an individual taxicab. In the case of the premium package, you are allowed to use up to 80 taxicabs, and each taxicab can only carry one passenger at a time. At the start of the day, they can pick up 80 passengers at the same time and take them to their desired destinations. To pick up passenger number 81, one of your taxicabs must first reach its destination and drop off its passenger.
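The taxicab analogy maps onto a bounded worker pool. The Python sketch below is one possible way to keep a crawler within a thread allowance, not a description of how the service enforces it: `run_with_thread_limit`, `ConcurrencyCounter`, and `fake_fetch` are hypothetical names, and `fake_fetch` only sleeps instead of sending a real request. The limit of 80 comes from the premium-package example.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_THREADS = 80  # thread allowance in the premium-package example


def run_with_thread_limit(jobs, worker, max_threads=MAX_THREADS):
    """Run worker(job) for every job, using at most max_threads at once."""
    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        return list(pool.map(worker, jobs))


class ConcurrencyCounter:
    """Records the highest number of workers that were active together."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __enter__(self):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        return self

    def __exit__(self, *exc):
        with self._lock:
            self.active -= 1
        return False


counter = ConcurrencyCounter()


def fake_fetch(passenger):
    """Stand-in for one proxied request (no network access)."""
    with counter:
        time.sleep(0.01)  # the "ride": the taxicab is busy during this time
    return passenger
```

Calling `run_with_thread_limit(range(200), fake_fetch)` processes all 200 jobs, but `counter.peak` never exceeds 80: job 81 waits until one of the first 80 workers is free, just like the passenger waiting for a taxicab.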

Can these proxies be used on my software?

As long as your software uses an IPv4 connection and can support HTTP/HTTPS proxies, our Smart Proxy network will be fully compatible. You may also contact us if you need further assistance with the integration.

Do you support headless browsers?

Yes. Credits can be consumed to perform normal and JavaScript requests. You can send a JavaScript request when the content you need to crawl is rendered in JavaScript (React, Angular, etc.) or dynamically generated on the browser.

Start with a fast proxy

Start now. Instant set-up.