Many web scraping enthusiasts and beginners find extracting data from websites daunting because of the roadblocks they run into along the way. These challenges quickly become frustrating, especially if you are not using the right scraping tools.

An API proxy might be the answer to extracting essential information from other websites efficiently. It serves as a powerful intermediary that helps you bypass blocks, access restricted content, and scrape websites with far less effort.

For context, an API (application programming interface) is a set of definitions and protocols that lets different software components interact with each other. A proxy is an intermediary that sits between users and the web, forwarding requests on their behalf.

This blog explores the basics of API proxies and examines how they help users overcome common web scraping challenges.

What Are API Proxies?

An API proxy is an intermediary between a client and an API. It acts as a centralized access point that enhances the API with additional features, such as security, caching, or rate limiting, without requiring any changes to the API itself.

API proxies are also flexible: they can, for example, route requests from different users or URL paths to different backend services, each suited to a particular need.

Their main functions are routing, security, request modification, authentication, and monitoring.
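To make these functions concrete, here is a minimal, in-process sketch of an API proxy that covers three of them: routing by path prefix, caching responses for a time-to-live, and per-client rate limiting. It is purely illustrative; the `MiniApiProxy` class, its parameters, and the idea of backends as plain Python functions are assumptions for this example, not how any particular product is built. Security, authentication, and monitoring are left out to keep it short.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical: a "backend service" is modeled as a function from path to response body.
Backend = Callable[[str], str]


class MiniApiProxy:
    """Illustrative API proxy sitting in front of backends: routing, caching, rate limiting."""

    def __init__(self, routes: Dict[str, Backend], cache_ttl: float = 30.0,
                 max_requests_per_minute: int = 60) -> None:
        self.routes = routes                    # path prefix -> backend service
        self.cache_ttl = cache_ttl              # seconds a cached response stays valid
        self.limit = max_requests_per_minute    # per-client budget per 60-second window
        self._cache: Dict[str, Tuple[float, str]] = {}
        self._windows: Dict[str, Tuple[float, int]] = {}  # client -> (window start, count)

    def _allow(self, client: str) -> bool:
        # Fixed-window rate limiting: the counter resets every 60 seconds.
        now = time.monotonic()
        start, count = self._windows.get(client, (now, 0))
        if now - start >= 60:
            start, count = now, 0
        if count >= self.limit:
            return False
        self._windows[client] = (start, count + 1)
        return True

    def handle(self, client: str, path: str) -> Tuple[int, str]:
        if not self._allow(client):
            return 429, "Too Many Requests"
        cached = self._cache.get(path)
        if cached and time.monotonic() - cached[0] < self.cache_ttl:
            return 200, cached[1]               # served from cache; backend is not called
        # Routing: choose the backend with the longest matching path prefix.
        matches = [prefix for prefix in self.routes if path.startswith(prefix)]
        if not matches:
            return 404, "Not Found"
        body = self.routes[max(matches, key=len)](path)
        self._cache[path] = (time.monotonic(), body)
        return 200, body
```

Note that the backends never change: every added behavior lives in the proxy layer, which mirrors the point that an API proxy enhances an API without modifying it.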

How Does API Proxy Work for Web Scraping?

API proxies act as a disguise that lets your web scraper work without being blocked. They hide your real IP address and send your requests out through other IPs, so your traffic looks like it comes from many separate users instead of a single machine, which helps you get past blocks and reach restricted content. Because web scraping is essentially a cycle of sending requests and receiving responses, an API proxy can sit in the middle of that cycle and make your requests resemble ordinary human browsing. For instance, you can pair Smart AI Proxy with your Crawler to scale your scraping performance.
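At the code level, "sending requests through a proxy" usually just means configuring your HTTP client with a proxy address. The sketch below uses Python's standard `urllib.request` module; the gateway host, port, and credentials are placeholders (assumptions), so substitute whatever your proxy provider actually issues.

```python
import urllib.request

# Placeholder gateway URL and credentials (hypothetical); use your provider's real values.
PROXY_URL = "http://USERNAME:PASSWORD@proxy.example.com:8000"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS traffic through the given proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = PROXY_URL, timeout: float = 30.0) -> str:
    """Fetch a page via the proxy; the target site sees the proxy's IP instead of yours."""
    opener = build_proxied_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

With a rotating gateway, the same `fetch("https://example.com")` call can leave through a different exit IP each time, with no change to your scraper's code.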

Benefits of API Proxies for Web Scraping

- Anonymity & IP Protection: Many websites protect their servers by limiting the number of requests sent from a single IP address, a practice known as rate limiting. This is often why a scraper suddenly gets blocked partway through a job. API proxies address this by providing a pool of IP addresses that rotate automatically, spreading requests so that no single IP triggers these limits.

- Access to Geo-Restricted Content: Some websites restrict or vary their content based on the visitor's location, so a scraper running from one country may see incomplete data or nothing at all. API proxies can route your requests through IPs in different regions, making them appear to originate from those locations so you can reach the data you need.

- Request/Response Management: Websites use various techniques to detect and block scraper traffic. API proxies can modify request headers to resemble those of real users, store and manage cookies across multiple requests, and automatically retry requests that fail during scraping.

- Bypass Anti-Scraping Measures: Many websites deploy anti-scraping measures designed to block automated requests. Through anonymity and IP rotation, API proxies help you get past these measures for a smoother scraping experience.

- Improved Performance and Scalability: API proxies manage requests, cache responses, and optimize routing, which keeps scraping fast and reliable as your workload grows.
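Several of the benefits above (IP rotation, header management, and automatic retries) can be sketched together in a few lines. This is a simplified client-side illustration under stated assumptions: the `RotatingScraper` class, the `fetch` callable it accepts, and the header values are all hypothetical, and a managed API proxy would normally do this work for you behind a single endpoint. Cookie handling is omitted for brevity.

```python
import itertools
import time
from typing import Callable, Iterable, Optional

# Hypothetical fetch signature: (url, proxy_url, headers) -> body; raises OSError on failure.
Fetch = Callable[[str, str, dict], str]

# Illustrative browser-like headers; real values and their effectiveness will vary.
DEFAULT_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept-Language": "en-US,en;q=0.9",
}


class RotatingScraper:
    """Round-robin proxy rotation with retries and exponential backoff."""

    def __init__(self, proxies: Iterable[str], fetch: Fetch, max_retries: int = 3,
                 base_delay: float = 0.5) -> None:
        pool = list(proxies)
        if not pool:
            raise ValueError("proxy pool must not be empty")
        self._pool = itertools.cycle(pool)
        self._fetch = fetch
        self.max_retries = max_retries
        self.base_delay = base_delay

    def get(self, url: str, headers: Optional[dict] = None) -> str:
        merged = {**DEFAULT_HEADERS, **(headers or {})}  # caller's headers take precedence
        last_error: Optional[Exception] = None
        for attempt in range(self.max_retries + 1):
            proxy = next(self._pool)  # each attempt leaves through the next IP in the pool
            try:
                return self._fetch(url, proxy, merged)
            except OSError as exc:  # covers ConnectionError, TimeoutError, and similar
                last_error = exc
                if attempt < self.max_retries:
                    time.sleep(self.base_delay * (2 ** attempt))  # back off before retrying
        raise RuntimeError(f"all {self.max_retries + 1} attempts failed for {url}") from last_error
```

Injecting `fetch` keeps the rotation and retry logic independent of any HTTP library; in practice it could wrap a proxied `urllib` opener or another client of your choice.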

Use Cases for API Proxies in Web Scraping

A growing number of organizations rely on web scrapers backed by API proxies to collect important data from across the internet. Here are some of the most popular use cases:

- Price Comparison: eCommerce businesses use proxies to scrape competitors' pricing data, product reviews, and other notable trends. In a fast-moving space like eCommerce, customers do a lot of research before deciding what to buy and where. Merchants therefore need real-time price data from their competitors to keep up with the market, especially during peak periods like Black Friday and Cyber Monday. Companies in other sectors, such as SaaS and FinTech, also track competitors' prices and offerings to compete effectively.

- Social Media: Marketers need to monitor performance and audience feedback across many platforms, which is daunting to do manually. Because API proxies make scraping traffic resemble real user activity, it is less likely to be detected and blocked. Done properly, this lets marketers track social media trends and run sentiment analysis across different audiences.

- Lead Generation: Business-to-business (B2B) organizations use API proxies to scrape data matching their ideal customer profiles from prospects' websites. For instance, a B2B SaaS company can extract the contact information of potential customers for marketing purposes.

- Research: Collecting data from many different sources is challenging without a capable web scraper backed by API proxies. Organizations recognize this and increasingly use API proxies to gather data for trend research and analysis.

How to Choose the Right API Proxy for Web Scraping

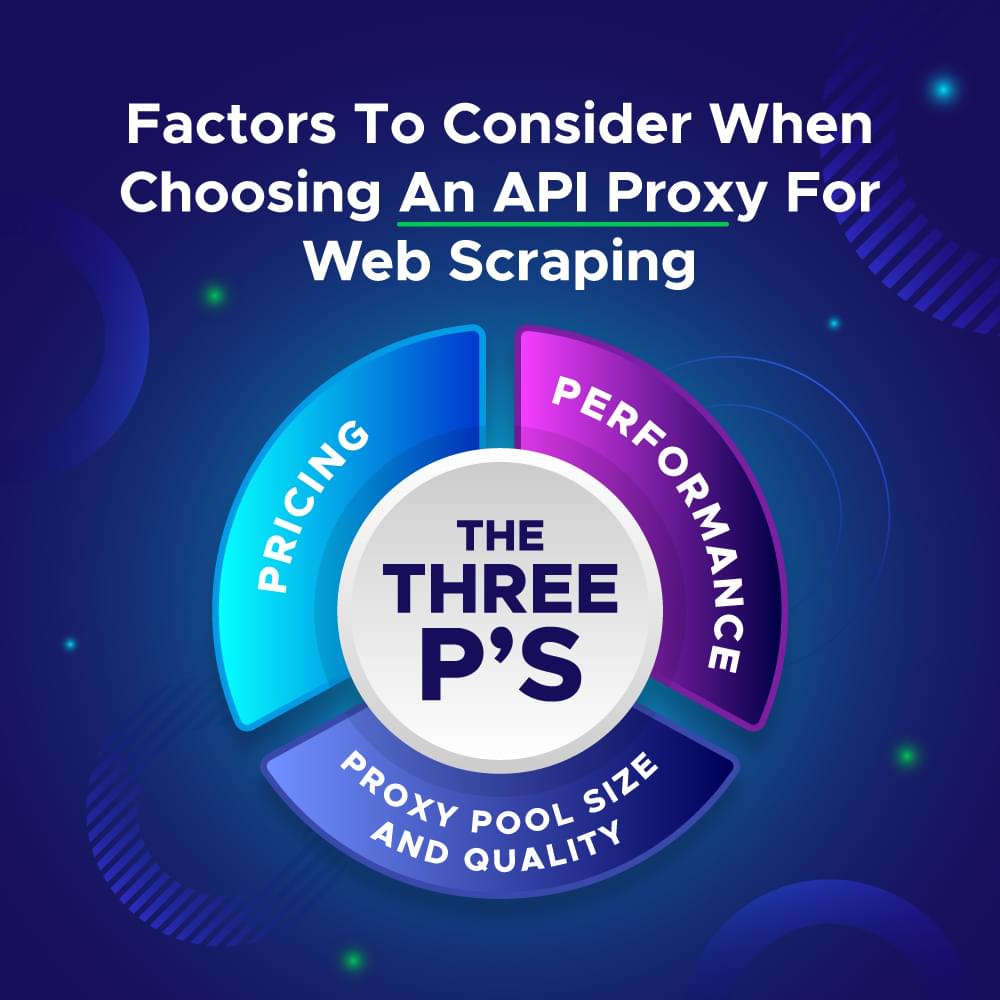

Web scrapers paired with an API proxy are an effective answer to anti-scraping measures and can improve your overall performance and scalability. However, there are key considerations to keep in mind when choosing an API proxy for web scraping.

- Pricing: Cost is one of the most important parts of any project. There are many web scraping tools with API proxies on the market, so start by setting your budget. This lets you plan for continuity before you even make your first purchase. We recommend choosing tools whose pricing scales with your proxy usage, so you pay only for the scraping you actually do.

- Performance: API proxies generally improve speed, but performance still varies between providers. Look for high-performance proxies with low latency so your scraping runs fast and you get value for the money you spend.

- Proxy Pool Size and Quality: Because API proxies rely on IP rotation to get past website blocks and other restrictions, choose one with a large and diverse pool of IP addresses. Smart AI Proxy, for example, provides millions of residential and data center proxies through a rotating gateway.
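If you are comparing providers or endpoints on performance, a quick latency check can help. The sketch below times a few probe requests through each candidate proxy and reports the median round-trip time. The `probe` callable is a hypothetical stand-in for "send one request through this proxy"; the sample count and the rule that any failure disqualifies a proxy are arbitrary choices for illustration, not a benchmarking methodology.

```python
import statistics
import time
from typing import Callable, Dict, Iterable

# Hypothetical probe: sends one request through the given proxy and raises OSError on failure.
Probe = Callable[[str], None]


def measure_latency(proxies: Iterable[str], probe: Probe, samples: int = 5) -> Dict[str, float]:
    """Return the median round-trip time in seconds for each proxy that answered every probe."""
    results: Dict[str, float] = {}
    for proxy in proxies:
        timings = []
        try:
            for _ in range(samples):
                start = time.perf_counter()
                probe(proxy)
                timings.append(time.perf_counter() - start)
        except OSError:
            continue  # in this quick comparison, any failure disqualifies the proxy
        results[proxy] = statistics.median(timings)
    return results


def fastest(latencies: Dict[str, float]) -> str:
    """Pick the proxy with the lowest median latency."""
    return min(latencies, key=latencies.get)
```

The median is used instead of the mean so that one unusually slow request does not dominate the comparison.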

Choose a Smart AI Proxy Solution for Web Scraping

Taken together, these factors show that API proxies offer better scalability and performance than manual web scraping. They help you unlock key data from websites regardless of the sites' size or location.

Crawlbase’s Smart AI Proxy offers millions of residential and data center proxies, helping you stay anonymous throughout the crawling process. Our team also continually expands our proxy pools to maintain scraping quality across our network.