Web scraping is a practical way to collect data from websites for research, business, and machine learning. If you’re working with HTML content, Python has many tools, and Parsel is one of the simplest and most flexible. It lets you extract data with XPath and CSS selectors in just a few lines of code.

In this guide, you’ll learn how to use Parsel in Python for web scraping, from setting up your environment to handling complex HTML structures and saving cleaned data. Whether you’re new to web scraping or looking for a lightweight tool, Parsel can streamline your scraping workflow.

Table of Contents

- Why Choose Parsel for Web Scraping in Python

- Setting Up Your Python Environment

- Understanding XPath and CSS Selectors

- Extracting Data Using Parsel

- Parsing HTML Content

- Selecting Elements with XPath

- Selecting Elements with CSS Selectors

- Extracting Text and Attributes

- Handling Complex HTML Structures

- Cleaning and Structuring Extracted Data

- Saving Scraped Data (CSV, JSON, Database)

- Common Mistakes to Avoid with Parsel

- Final Thoughts

- Frequently Asked Questions

Why Choose Parsel for Web Scraping in Python

When it comes to web scraping in Python, the usual options include BeautifulSoup, Scrapy, and lxml. But if you want something light, fast, and easy to use, Parsel is a great choice. It’s especially good for selecting HTML elements with XPath and CSS selectors, which makes extracting structured data much easier.

Parsel is often used with Scrapy but can also be used as a standalone library. If you’re working with raw HTML and need a clean way to extract text or attributes, Parsel keeps your code simple and readable.

Why Use Parsel?

- Lightweight and fast: Setup is a single pip install, with no project scaffolding required.

- Powerful selectors: Both XPath and CSS.

- Easy to integrate: It works well with Requests and Pandas.

- Clean syntax: This makes your scraping scripts easier to read and maintain.

Setting Up Your Python Environment

Before you start web scraping with Parsel, you need to set up your Python environment. The good news is that it’s quick and easy. All you need is Python installed and a few essential libraries to get started.

Install Python

Make sure Python is installed on your system. You can download it from the official Python website. Once installed, open your terminal or command prompt and check the version:

```bash
python --version
```

Create a Virtual Environment

It’s a good practice to create a virtual environment so your dependencies stay organized:

```bash
python -m venv parsel_env
source parsel_env/bin/activate  # on Windows: parsel_env\Scripts\activate
```

Install Parsel and Requests

Parsel is used to extract data, and Requests helps you fetch HTML content from web pages.

```bash
pip install parsel requests
```

That’s it! You’re now ready to scrape websites using Parsel in Python. In the next section, we’ll explore how XPath and CSS selectors work to target specific HTML elements.

Understanding XPath and CSS Selectors

To scrape data with Parsel in Python, you need to know how to find the right elements in the HTML. This is where XPath and CSS selectors come in. Both are powerful tools that help you locate and extract the exact data you need from a webpage.

What is XPath?

XPath stands for XML Path Language. It’s a way to navigate through HTML and XML documents. You can use it to select nodes, elements, and attributes in a web page.

Example:

```python
selector.xpath('//h1/text()').get()
```

This XPath expression matches the text of every <h1> tag on the page, and .get() returns the first match.

What is a CSS Selector?

CSS selectors are used in web design to style elements. In web scraping, they help target elements using class names, tags, or IDs.

Example:

```python
selector.css('div.product-name::text').get()
```

This gets the text inside the first <div> with the class product-name.

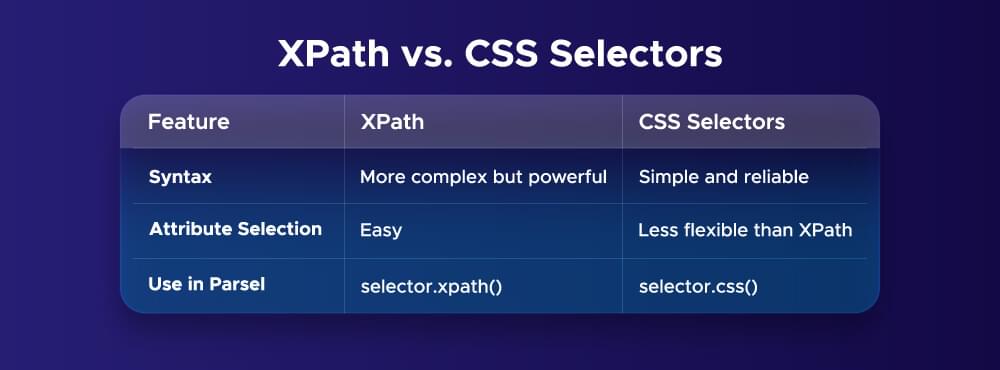

XPath vs. CSS Selectors

Parsel supports both methods, and you can use whichever one suits your scraping needs best. In the next section, we’ll put this into action and show you how to extract data using Parsel.

Extracting Data Using Parsel

Once you’ve learned the basics of XPath and CSS selectors, it’s time to use Parsel in Python to start extracting data. This section will show how to parse HTML, select elements, and get the text or attributes you need from a webpage.

Parsing HTML Content

First, you need to load the HTML content into Parsel. You can use the Selector class from Parsel to do this.

```python
from parsel import Selector
```

Now the HTML is ready for data extraction.

Selecting Elements with XPath

You can use XPath to find specific elements. For example, if you want to get the text inside the <h1> tag:

```python
title = selector.xpath('//h1/text()').get()
```

XPath is very flexible and allows you to target almost any element in the HTML structure.

Selecting Elements with CSS Selectors

Parsel also supports CSS selectors. This method is shorter and easier to read, especially if you’re already familiar with CSS.

```python
info = selector.css('p.info::text').get()
```

CSS selectors are great for selecting elements based on class names, IDs, or tags.

Extracting Text and Attributes

To get text, use ::text in CSS or /text() in XPath. To extract attributes like href or src, use the @ symbol in XPath or ::attr(attribute_name) in CSS.

XPath Example:

```python
link = selector.xpath('//a/@href').get()
```

CSS Example:

```python
link = selector.css('a::attr(href)').get()
```

These methods let you pull the exact data you need from links, images, and other elements.

Handling Complex HTML Structures

When scraping real websites, the HTML structure isn’t always simple. Pages often have deeply nested elements, dynamic content, or multiple elements with the same tag. Parsel in Python makes it easier to handle complex HTML structures with XPath and CSS selectors.

Navigating Nested Elements

You may need to go through several layers of tags to reach the data you want. XPath is beneficial for navigating nested elements.

```python
html = """
```

This is helpful when the data is buried deep inside multiple <div> tags.

Handling Lists of Data

If the page contains a list of similar items, like products or articles, you can use .xpath() or .css() with .getall() to extract all items.

```python
html = """
```

Using getall() is great when you want to scrape multiple elements at once.

Conditional Selection

Sometimes, you only want data that matches specific conditions, like a certain class or attribute.

```python
html = """
```

This is useful when you want to remove extra or unwanted content from your scrape.

With Parsel in Python, you can handle complex web pages and get clean, structured data. Next, we’ll see how to clean and format this data.

Cleaning and Structuring Extracted Data

Once you extract data with Parsel in Python, the next step is to clean and format it. Raw scraped data often has extra spaces, inconsistent formats, or duplicate entries. Cleaning and formatting your data makes it easier to analyze or store in a database.

Removing Extra Spaces and Characters

Text from web pages can include unnecessary white spaces or line breaks. You can clean it using Python string methods like .strip() and .replace().

```python
raw_text = "\n Product Name: Smartphone \t"
```
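A short sketch of the cleaning step, using only built-in string methods. The second input string is a made-up example showing how to collapse internal whitespace.

```python
raw_text = "\n Product Name: Smartphone \t"

# Remove leading and trailing whitespace, including newlines and tabs
stripped = raw_text.strip()

# Drop the label, then strip again to remove the space it leaves behind
name = stripped.replace("Product Name:", "").strip()

# Collapse runs of internal whitespace into single spaces
collapsed = " ".join("Smart   phone\n  case".split())

print(stripped)
print(name)
print(collapsed)
```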

Standardizing Data Formats

It’s important to keep dates, prices, and other data in the same format. For example, if you’re extracting prices:

```python
price_text = "$499"
```
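A minimal sketch of the conversion, assuming US-style prices with a leading dollar sign and optional thousands separators. The helper name `parse_price` is hypothetical.

```python
def parse_price(price_text):
    """Convert a string like "$1,299.50" to a float (assumes US-style formatting)."""
    cleaned = price_text.strip().replace("$", "").replace(",", "")
    return float(cleaned)


price = parse_price("$499")
large_price = parse_price(" $1,299.50 ")

print(price, large_price)
```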

This helps when performing calculations or storing values in databases.

Removing Duplicates

Sometimes, the same data appears multiple times on a page. You can use Python’s set() to remove duplicates, or dict.fromkeys() when the original order matters:

```python
items = ['Parsel', 'Python', 'Parsel']
```
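Both approaches can be sketched in a few lines. Note that a set does not preserve the order in which items appeared, while `dict.fromkeys()` does.

```python
items = ['Parsel', 'Python', 'Parsel']

# set() removes duplicates but does not guarantee order
unique_unordered = set(items)

# dict.fromkeys() removes duplicates and keeps first-seen order
unique_ordered = list(dict.fromkeys(items))

print(unique_ordered)
```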

Creating a Structured Format (List of Dictionaries)

Once cleaned, it’s best to structure your data for easy saving. A common approach is using a list of dictionaries.

```python
data = [
```
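As an illustrative sketch, the list could be built from parallel lists of scraped values. The product names and prices here are made-up sample data, and the field names `name` and `price` are assumptions.

```python
# Hypothetical scraped values, e.g. results of two .getall() calls
names = [" Smartphone ", "Laptop"]
prices = ["$499", "$1,299"]

# One dictionary per item, with cleaned text and numeric prices
data = [
    {
        "name": name.strip(),
        "price": float(price.replace("$", "").replace(",", "")),
    }
    for name, price in zip(names, prices)
]

print(data)
```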

This format is perfect for exporting to JSON, CSV or inserting into databases.

By cleaning and formatting your scraped data, you make it much more useful for real applications like data analysis, machine learning, or reporting. Next, we’ll see how to save this data in different formats.

How to Save Scraped Data (CSV, JSON, Database)

After cleaning and structuring your scraped data using Parsel in Python, the final step is to save it in a format that suits your project. The most common formats are CSV, JSON, and databases. Let’s explore how to save web-scraped data using each method.

Saving Data as CSV

CSV (Comma-Separated Values) is great for spreadsheets or importing into data tools like Excel or Google Sheets.

```python
import csv
```
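A minimal sketch of writing a list of dictionaries with `csv.DictWriter`. The sample rows and the file name `products.csv` are assumptions for illustration.

```python
import csv

# Hypothetical cleaned data
data = [
    {"name": "Smartphone", "price": 499.0},
    {"name": "Laptop", "price": 1299.0},
]

# newline="" prevents blank lines between rows on Windows
with open("products.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.DictWriter(f, fieldnames=["name", "price"])
    writer.writeheader()    # first row: column names
    writer.writerows(data)  # one row per dictionary
```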

Saving Data as JSON

JSON is commonly used when you want to work with structured data in web or API projects.

```python
import json
```
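The same kind of data can be written with `json.dump`, as in this sketch. The sample rows and the file name `products.json` are assumptions for illustration.

```python
import json

# Hypothetical cleaned data
data = [
    {"name": "Smartphone", "price": 499.0},
    {"name": "Laptop", "price": 1299.0},
]

# indent=2 makes the file readable; ensure_ascii=False keeps non-ASCII text as-is
with open("products.json", "w", encoding="utf-8") as f:
    json.dump(data, f, indent=2, ensure_ascii=False)
```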

Saving Data to a Database

Databases are ideal for handling large amounts of data and running queries. Here’s how to insert scraped data into a SQLite database:

```python
import sqlite3
```
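A minimal sketch using the standard library's `sqlite3` module. The database file `products.db`, the table name `products`, and its two columns are assumptions for illustration.

```python
import sqlite3

# Hypothetical cleaned data
data = [
    {"name": "Smartphone", "price": 499.0},
    {"name": "Laptop", "price": 1299.0},
]

conn = sqlite3.connect("products.db")

# Create the table on first run only
conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")

# Named placeholders are filled from each dictionary's keys
conn.executemany(
    "INSERT INTO products (name, price) VALUES (:name, :price)",
    data,
)
conn.commit()

# Read the rows back to confirm the insert
rows = conn.execute("SELECT name, price FROM products").fetchall()
conn.close()

print(rows)
```

Using placeholders rather than string formatting lets SQLite handle quoting, which matters when scraped text contains apostrophes or other special characters.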

By saving your scraped data in the right format, you can make it more accessible and ready for analysis, reporting, or machine learning.

Common Mistakes to Avoid with Parsel

When using Parsel for web scraping in Python, it’s easy to make small mistakes that can cause your scraper to break or collect the wrong data. Avoiding these common issues will help you build more reliable and accurate scrapers.

1. Not Checking the Website’s Structure

Before you write your XPath or CSS selectors, always inspect the HTML of the website. If the structure changes or is different from what you expect, your scraper won’t find the correct elements.

Tip: Use browser developer tools (right-click → Inspect) to check element paths.

2. Using the Wrong Selectors

Make sure you choose the correct XPath or CSS selector for the element you want. Even a small mistake can return no data or the wrong result.

Example:

- ✅ Correct: selector.css('div.product-name::text')

- ❌ Incorrect: selector.css('div.product-title::text') (if that class doesn’t exist on the page)

3. Not Handling Empty or Missing Data

Sometimes, a page might not have the element you’re looking for. In that case .get() returns None, and calling a string method on the result raises an AttributeError.

Fix:

```python
name = selector.css('div.name::text').get(default='No Name')
```

4. Forgetting to Strip or Clean Data

Web content often includes extra spaces or newline characters. If you don’t clean the text, your final data might look messy.

Fix:

```python
# default='' avoids calling .strip() on None when the element is missing
price = selector.css('span.price::text').get(default='').strip()
```

5. Not Using a Delay Between Requests

Sending too many requests quickly can get your scraper blocked. Always add delays to act more like a human.

Fix:

```python
import time
```
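One way to add a pause between requests is sketched below. The helper `fetch_all`, its `fetch` callable, and the two-second default are assumptions for illustration; a suitable delay depends on the site being scraped.

```python
import time


def fetch_all(urls, fetch, delay_seconds=2.0):
    """Call fetch(url) for each URL, sleeping between consecutive calls."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # pause before every request after the first
        pages.append(fetch(url))
    return pages
```

With Requests installed, it could be called as `fetch_all(urls, lambda url: requests.get(url, timeout=10).text)`, and each returned page can then be passed to `Selector(text=...)`.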

Avoiding these mistakes will help you scrape cleaner, more accurate data with Parsel in Python and ensure your scripts run smoothly even as websites change. Keeping your scraper flexible and clean will save you time in the long run.

Optimize Your Web Scraping with Crawlbase

While Parsel offers a powerful way to extract data from HTML content, managing the challenges of web scraping, such as handling dynamic content, rotating proxies, and avoiding IP bans, can be complex. Crawlbase simplifies this process by providing a suite of tools designed to streamline and scale your data extraction efforts.

Why Choose Crawlbase?

Simplified Scraping Process: Crawlbase handles the heavy lifting of web scraping, including managing proxies and bypassing CAPTCHAs, allowing you to focus on data analysis rather than infrastructure.

Scalability: Whether you’re scraping a few pages or millions, Crawlbase’s infrastructure is built to scale with your needs, ensuring consistent performance.

Versatile Tools: Crawlbase offers a range of tools to support your web scraping projects.

Sign up now and enhance efficiency, reduce complexity, and focus on deriving insights from your data.

Frequently Asked Questions

Q. What is Parsel, and why should I use it for web scraping?

Parsel is a Python library that makes web scraping easy. It lets you extract data from websites by using XPath and CSS selectors to find the data you need. Parsel is lightweight, fast, and works well with other Python tools, so it’s a popular choice for scraping structured data from HTML pages.

Q. How do I handle dynamic websites with Parsel?

For websites that load content dynamically using JavaScript, Parsel might not be enough on its own. In these cases, consider combining Parsel with Selenium or Playwright to load JavaScript content before extracting data. These tools let you simulate browser interactions so you can scrape all the data you need.

Q. Can I save the scraped data using Parsel?

Yes. Parsel itself only handles extraction, but you can save the data it extracts in formats like CSV, JSON, or directly into a database. After parsing and structuring the data, you can use Python’s built-in csv, json, and sqlite3 modules, or a third-party library such as Pandas, to store your results in the format you want for easy analysis.