TechCrunch is a leading source of technology news, covering everything from emerging startups to major tech giants. With millions of readers worldwide, TechCrunch publishes articles that influence industry trends and shape business strategies. Scraping data from TechCrunch can provide valuable insights into the latest technology trends, startup news, and industry developments.

In this blog, we will walk you through scraping TechCrunch with Python. We’ll cover everything from understanding the website structure to writing a web scraper that collects data from TechCrunch articles. We’ll also explore how to use the Crawlbase Crawling API to get past anti-scraping measures. Let’s start!


Table Of Contents

- Benefits of scraping TechCrunch

- Key Data Points to Extract

- Installing Python

- Setting Up a Virtual Environment

- Installing Required Libraries

- Choosing an IDE

- Inspecting the HTML Structure

- Writing the TechCrunch Listing Scraper

- Handling Pagination

- Storing Data in a CSV File

- Complete Code

- Inspecting the HTML Structure

- Writing the TechCrunch Article Page Scraper

- Storing Data in a CSV File

- Complete Code

- Bypassing Scraping Challenges

- Implementing Crawlbase in Your Scraper

Why Scrape TechCrunch Data?

TechCrunch is among the leading sources of technology news and analysis, providing valuable insights into the latest developments in the tech industry. Below are some of the benefits of scraping TechCrunch and what type of information you can get from it.

Benefits of Scraping TechCrunch

Scraping TechCrunch can offer several benefits:

- Stay Updated: By scraping TechCrunch data, you can get the most recent technological trends, start-up launches, and changes in the industry. This helps organizations and individuals remain ahead of competitors in an ever-changing market.

- Market Research: Scraped TechCrunch data supports thorough market research. Analyzing articles and news releases makes it easier to identify new trends, customer preferences, and competitors’ strategies.

- Trends and Voices: Studying TechCrunch articles helps you identify which subjects are gaining popularity and who the influential voices in technology are. This helps you spot potential partners, competitors, or market leaders.

- Data-Driven Decision Making: The availability of TechCrunch data allows firms to make business decisions based on current industry trends. If you are planning to launch a new product or enter a different market, the information provided by TechCrunch can be very helpful in decision-making.

Key Data Points to Extract

When scraping TechCrunch, there are several key data points you might want to focus on:

- Article Titles and Authors: Understanding what topics are being covered and who is writing these articles will give you an idea of industry trends and influential voices.

- Publication Dates: Tracking when articles are published can help you identify timely trends and how they evolve over time.

- Content Summaries: Getting summaries or key points from these articles can help quickly reveal what the main ideas are without reading them in full.

- Tags and Categories: Knowing how articles are categorized shows which topics TechCrunch covers most often and how they fit into broader industry developments.

- Company Mentions: Identifying which companies are frequently mentioned can offer insights into market leaders and potential investment opportunities.

By understanding these benefits and key data points, you can effectively leverage TechCrunch data to gain a competitive edge and enhance your knowledge of the tech landscape.

Setting Up Your Python Environment

To scrape TechCrunch data effectively, set up your Python environment by installing Python, using a virtual environment, and selecting the right tools.

Installing Python

Ensure Python is installed on your system. Download the latest version from the Python website and follow the installation instructions. Remember to add Python to your system PATH.

Setting Up a Virtual Environment

A virtual environment lets you manage a project’s Python dependencies without affecting other projects. It creates an isolated environment where you can install and track only the packages this scraping project needs. Here’s how to get started.

Install Virtualenv: If you don’t have virtualenv installed, you can install it via pip:

    pip install virtualenv

Create a Virtual Environment: Navigate to your project directory and create a virtual environment:

    virtualenv techcrunch_venv

Activate the Virtual Environment:

On Windows:

    techcrunch_venv\Scripts\activate

On macOS and Linux:

    source techcrunch_venv/bin/activate

Installing Required Libraries

With the virtual environment activated, you can install the libraries necessary for web scraping:

- BeautifulSoup: For parsing HTML and XML documents.

- Requests: To handle HTTP requests and responses.

- Pandas: To store and manipulate the data you scrape.

- Crawlbase: To enhance scraping efficiency and handle complex challenges later in the process.

Install these libraries using the following command:

    pip install beautifulsoup4 requests pandas crawlbase

Choosing an IDE

Picking the right Integrated Development Environment (IDE) can make you more productive and comfortable when programming. Below are some popular choices.

- PyCharm: A powerful IDE specifically for Python development, offering code completion, debugging, and a wide range of plugins.

- VS Code: A versatile and lightweight editor with strong support for Python through extensions.

- Jupyter Notebook: Ideal for exploratory data analysis and interactive coding, especially useful if you prefer a notebook interface.

Selecting the appropriate IDE will depend on personal preference and which features you feel would be most helpful in streamlining your workflow. Next, we’ll cover scraping article listings to extract insights from TechCrunch content.

Scraping TechCrunch Article Listings

In this section, we are going to discuss how to scrape article listings from TechCrunch. This involves inspecting the HTML structure of the webpage, writing a scraper to extract data, handling pagination, and saving the data into a CSV file.

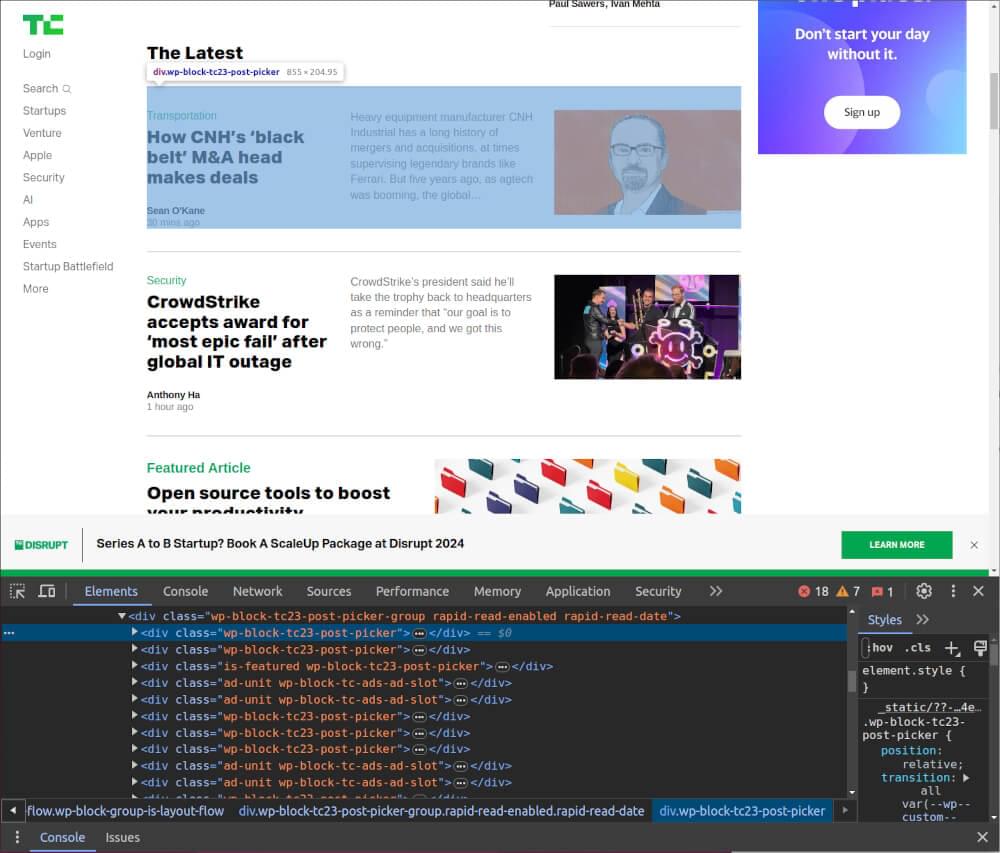

Inspecting the HTML Structure

Before scraping TechCrunch listings, you need to identify the correct CSS selectors for the elements that hold the data you need.

- Open Developer Tools: Visit the TechCrunch homepage, then open Developer Tools by right-clicking and selecting “Inspect” or using `Ctrl+Shift+I` (Windows) or `Cmd+Option+I` (Mac).

- Locate Article Containers: Find the main container for each article. On TechCrunch, articles are usually inside a `<div>` with the class `wp-block-tc23-post-picker`. This helps you loop through each article.

- Identify Key Elements: Within each article container, locate the specific elements containing the data:

  - Title: Typically within an `<h2>` tag with the class `wp-block-post-title`.

  - Link: An `<a>` tag inside the title element, with the URL in the `href` attribute.

  - Author: Usually in a `<div>` with the class `wp-block-tc23-author-card-name`.

  - Publication Date: Often in a `<time>` tag, with the date in the `datetime` attribute.

  - Summary: Found in a `<p>` tag with the class `wp-block-post-excerpt__excerpt`.

Writing the TechCrunch Listing Scraper

Let’s write a web scraper to extract data from TechCrunch’s article listings page using Python and BeautifulSoup. We’ll scrape the title, article link, author, date of publication, and summary from each article listed.

Import Libraries

First, we need to import the necessary libraries:

    import requests

Define the Scraper Function

Next, we’ll define a function to scrape the data:

    def scrape_techcrunch_listings(url):

This function collects article data from TechCrunch’s listings, capturing details such as titles, links, authors, publication dates, and summaries.
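One way such a function could look is sketched below. It is a minimal sketch rather than a definitive implementation: the selectors come from the inspection step and may need re-checking if TechCrunch changes its markup, and the `parse_listings` and `_text` helpers, the `User-Agent` header, and the dictionary keys are assumptions added for illustration.

```python
import requests
from bs4 import BeautifulSoup

# Container selector from the inspection step; verify it in Developer Tools,
# since the site's markup can change.
ARTICLE_SELECTOR = "div.wp-block-tc23-post-picker"


def _text(element):
    """Return stripped text for a tag, or None when the tag is missing."""
    return element.get_text(strip=True) if element else None


def parse_listings(html):
    """Extract one dict per article container found in an HTML string."""
    soup = BeautifulSoup(html, "html.parser")
    articles = []
    for card in soup.select(ARTICLE_SELECTOR):
        title_tag = card.select_one("h2.wp-block-post-title")
        link_tag = title_tag.find("a") if title_tag else None
        time_tag = card.find("time")
        articles.append({
            "title": _text(title_tag),
            "link": link_tag.get("href") if link_tag else None,
            "author": _text(card.select_one("div.wp-block-tc23-author-card-name")),
            "date": time_tag.get("datetime") if time_tag else None,
            "summary": _text(card.select_one("p.wp-block-post-excerpt__excerpt")),
        })
    return articles


def scrape_techcrunch_listings(url):
    """Download a listings page and return its parsed articles."""
    # The User-Agent value is an illustrative assumption.
    response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"}, timeout=10)
    response.raise_for_status()
    return parse_listings(response.text)
```

Keeping the parsing in its own function means the selectors can be checked against a saved HTML file without making a network request.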

Test the Scraper

To test the scraper, use the following code:

    url = 'https://techcrunch.com'

Create a new file named techcrunch_listing_scraper.py, copy the code provided into this file, and save it. Run the script using the following command:

    python techcrunch_listing_scraper.py

You should see output similar to the example below.

    [

In the next sections, we’ll handle pagination and store the extracted data efficiently.

Handling Pagination

When scraping TechCrunch, you may encounter multiple pages of article listings. To gather data from all pages, you need to handle pagination. This involves making multiple requests and navigating through each page.

Understanding Pagination URLs

TechCrunch’s article listings put the page number in the URL path. For example, the URL for the first page might be https://techcrunch.com/page/1/, while the second page could be https://techcrunch.com/page/2/, and so on.

Define the Pagination Function

This function will manage pagination by iterating through pages and collecting data from each one until the requested number of pages has been scraped.

    def scrape_techcrunch_with_pagination(base_url, start_page=0, num_pages=1):

In this function:

- `base_url` is the URL of the TechCrunch listings page.

- `start_page` specifies the starting page number.

- `num_pages` determines how many pages to scrape.
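The pagination loop described above might be sketched as follows. This is an illustration under stated assumptions, not the article's exact code: the `scrape_page` and `delay` parameters and the `build_page_url` helper are hypothetical additions that keep the example self-contained, and `start_page` defaults to 1 here only because the example URLs begin at `/page/1/`.

```python
import time


def build_page_url(base_url, page):
    """Build a listings URL such as https://techcrunch.com/page/2/."""
    return f"{base_url.rstrip('/')}/page/{page}/"


def scrape_techcrunch_with_pagination(base_url, scrape_page, start_page=1,
                                      num_pages=1, delay=1.0):
    """Collect articles from up to num_pages consecutive listing pages.

    scrape_page is any callable that takes a URL and returns a list of
    article dicts (a hypothetical parameter for this sketch).
    """
    all_articles = []
    last_page = start_page + num_pages - 1
    for page in range(start_page, last_page + 1):
        articles = scrape_page(build_page_url(base_url, page))
        if not articles:
            break  # an empty page suggests we ran past the last listing
        all_articles.extend(articles)
        if page < last_page:
            time.sleep(delay)  # pause between requests, not after the last one
    return all_articles
```

In practice you would pass your listing scraper as `scrape_page`, for example `scrape_techcrunch_with_pagination("https://techcrunch.com", scrape_techcrunch_listings, num_pages=3)`.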

Storing Data in a CSV File

Using the function below, you can save the scraped article data into a CSV file.

    import pandas as pd

This function converts the list of dictionaries (containing your scraped data) into a DataFrame using pandas and then saves it as a CSV file.
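A minimal sketch of such a helper is shown below. The function name `save_data_to_csv` is the one this guide refers to later, while the default filename and the returned DataFrame are assumptions made for illustration.

```python
import pandas as pd


def save_data_to_csv(data, filename="techcrunch_listing.csv"):
    """Write a list of article dicts to a CSV file, one row per article."""
    df = pd.DataFrame(data)
    # index=False keeps the DataFrame's row numbers out of the file
    df.to_csv(filename, index=False, encoding="utf-8")
    return df
```

The dictionary keys become the CSV column headers, so every article dict should use the same keys.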

Complete Code

Here’s the complete code to scrape TechCrunch article listings, handle pagination, and save the data to a CSV file. This script combines all the functions we’ve discussed into one Python file.

    import requests

Scraping TechCrunch Article Page

In this section, we will focus on scraping individual TechCrunch article pages to gather more detailed information about each article. This involves inspecting the HTML structure of an article page, writing a scraper function, and saving the collected data.

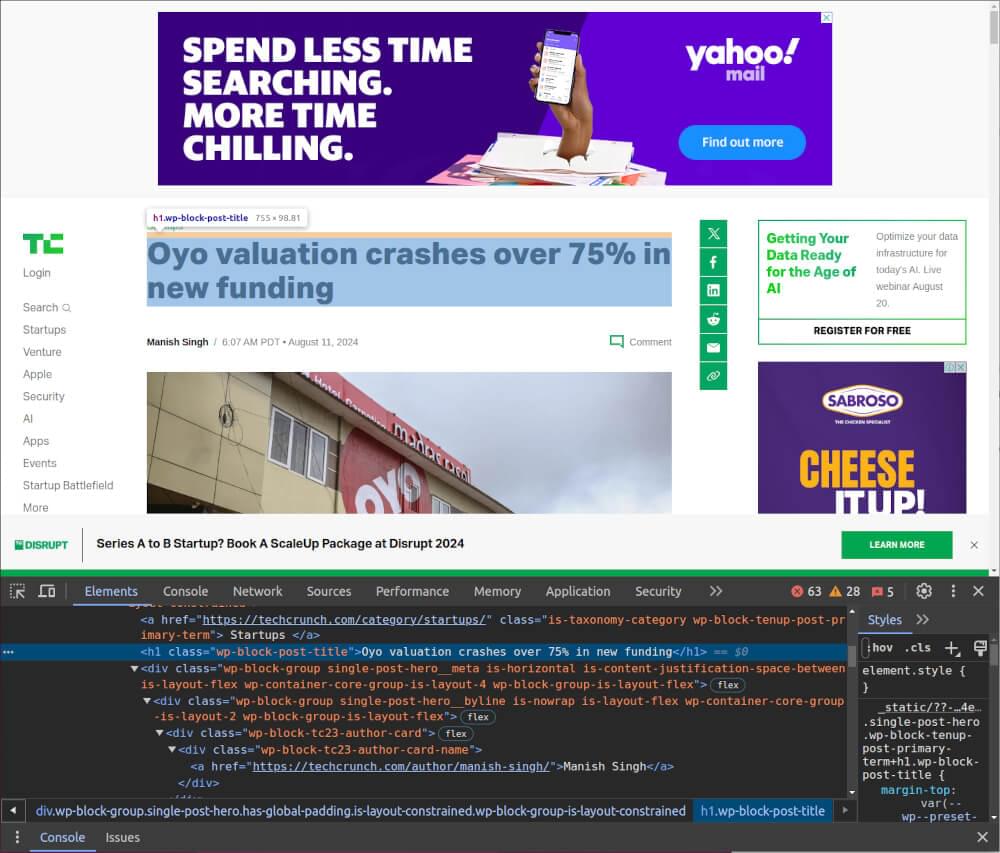

Inspecting the HTML Structure

To scrape TechCrunch articles, start by finding the CSS selectors of the required elements in the page’s HTML structure:

- Open Developer Tools: Visit a TechCrunch article and open Developer Tools using `Ctrl+Shift+I` (Windows) or `Cmd+Option+I` (Mac).

- Identify Key Elements:

  - Title: Usually in an `<h1>` tag with the class `wp-block-post-title`.

  - Author: Often in a `<div>` with the class `wp-block-tc23-author-card-name`.

  - Publication Date: Found in a `<time>` tag, with the date in the `datetime` attribute.

  - Content: Usually in a `<div>` with class `wp-block-post-content`.

Writing the TechCrunch Article Page Scraper

With the HTML structure in mind, let’s write a function to scrape the detailed information from a TechCrunch article page.

    import requests
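An article scraper built on the selectors identified above could be sketched like this. Treat it as an illustration under assumptions: the function names `parse_article` and `scrape_techcrunch_article`, the dictionary keys, and the `User-Agent` header are hypothetical, and the selectors should be re-checked against the live page.

```python
import requests
from bs4 import BeautifulSoup


def parse_article(html):
    """Extract title, author, publication date, and body text from article HTML."""
    soup = BeautifulSoup(html, "html.parser")
    title_tag = soup.select_one("h1.wp-block-post-title")
    author_tag = soup.select_one("div.wp-block-tc23-author-card-name")
    time_tag = soup.find("time")
    content_tag = soup.select_one("div.wp-block-post-content")
    return {
        "title": title_tag.get_text(strip=True) if title_tag else None,
        "author": author_tag.get_text(strip=True) if author_tag else None,
        "publication_date": time_tag.get("datetime") if time_tag else None,
        # Join the text fragments of the body with single spaces
        "content": content_tag.get_text(" ", strip=True) if content_tag else None,
    }


def scrape_techcrunch_article(url):
    """Download one article page and return its parsed fields plus the URL."""
    # The User-Agent value is an illustrative assumption.
    response = requests.get(url, headers={"User-Agent": "Mozilla/5.0"}, timeout=10)
    response.raise_for_status()
    article = parse_article(response.text)
    article["url"] = url
    return article
```

Missing elements yield `None` instead of raising an error, which keeps a batch run going when one article uses a different layout.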

Test the Scraper

To test the scraper, use the following code:

    url = 'https://techcrunch.com/2024/08/11/oyo-valuation-crashes-over-75-in-new-funding/'

Create a new file named techcrunch_article_scraper.py, copy the code provided into this file, and save it. Run the script using the following command:

    python techcrunch_article_scraper.py

You should see output similar to the example below.

    {

Storing Data in a CSV File

To store the article data, you can use pandas to save the results into a CSV file. We will modify the previous save_data_to_csv function to include this functionality.

    import pandas as pd

Complete Code

Combining everything, here is the complete code to scrape individual TechCrunch article pages and save the data:

    import requests

You can adapt the article_urls list to include URLs of the articles you want to scrape.

Optimizing Scraping with Crawlbase Crawling API

When you scrape TechCrunch data, you may run into challenges such as IP blocking, rate limiting, and dynamic content. The Crawlbase Crawling API can help you overcome these hurdles and keep the scraping process running smoothly. Here’s how Crawlbase can optimize your scraping efforts:

Bypassing Scraping Challenges

- IP Blocking and Rate Limiting: Websites like TechCrunch may block your IP address if too many requests are made in a short period. To reduce the risk of detection and blocking, Crawlbase Crawling API rotates between different IP addresses and manages request rates.

- Dynamic Content: Some TechCrunch pages load part of their content with JavaScript, which makes it hard for traditional scrapers to reach it directly. By rendering JavaScript, the Crawlbase Crawling API lets you access everything on the page.

- CAPTCHA and Anti-Bot Measures: TechCrunch may use CAPTCHAs and other anti-bot technologies to prevent automated scraping. Crawlbase Crawling API can bypass these measures, allowing you to collect data without interruptions.

- Geolocation: TechCrunch may serve different content based on location. Crawlbase Crawling API lets you specify the country for your requests, ensuring you get relevant data based on your target region.

Implementing Crawlbase in Your Scraper

To integrate the Crawlbase Crawling API into your TechCrunch scraper, follow these steps:

- Install the Crawlbase Library: Install the Crawlbase Python library using pip:

    pip install crawlbase

- Set Up the Crawlbase API: Initialize the Crawlbase API with your access token. You can get one by creating an account on Crawlbase.

    from crawlbase import CrawlingAPI

Note: Crawlbase provides two types of tokens: a Normal Token for static websites and a JavaScript (JS) Token for handling dynamic or browser-based requests. For TechCrunch, you need the Normal Token. The first 1,000 requests are free to get you started, with no credit card required. See the Crawlbase Crawling API documentation for details.

- Update Scraper Function: Modify your scraping functions to use the Crawlbase API for making requests. Here’s an example of how to update the `scrape_techcrunch_listings` function:

    def scrape_techcrunch_listings(url):
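The fetching step of such an update might look like the sketch below. It rests on assumptions that should be checked against the Crawlbase documentation: that `CrawlingAPI.get()` returns a dict with `headers` and `body`, that the `pc_status` header equals `'200'` on success, and that the body arrives as bytes. The helper names `create_crawling_api` and `fetch_html` are hypothetical.

```python
def create_crawling_api(token):
    """Build a Crawlbase client from an access token.

    The import is done lazily so that fetch_html below can be exercised
    with a stand-in client when the crawlbase package is not installed.
    """
    from crawlbase import CrawlingAPI
    return CrawlingAPI({"token": token})


def fetch_html(crawling_api, url):
    """Fetch a page through Crawlbase and return its HTML, or None on failure."""
    response = crawling_api.get(url)
    # Assumption: 'pc_status' in the headers reports whether the crawl succeeded.
    if str(response["headers"].get("pc_status")) != "200":
        return None
    body = response["body"]
    # Assumption: the body is bytes; decode it before handing it to a parser.
    return body.decode("utf-8") if isinstance(body, bytes) else body
```

The returned HTML string can then be passed to the same BeautifulSoup parsing code used with `requests`, so only the download step changes.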

With the Crawlbase Crawling API, you can deal with common scraping problems and scrape data from TechCrunch with a lower risk of getting blocked.

Final Thoughts (Scrape TechCrunch with Crawlbase)

Scraping data from TechCrunch can provide valuable insights into the tech industry’s latest trends, innovations, and influential figures. By extracting information from articles and listings, you can stay informed about emerging technologies and key players in the field. This guide has shown you how to set up a Python environment, write a functional scraper, and optimize your efforts with the Crawlbase Crawling API to overcome common scraping challenges.

If you’re looking to expand your web scraping capabilities, consider exploring the following guides on scraping other important websites.

📜 How to Scrape Bloomberg

📜 How to Scrape Wikipedia

📜 How to Scrape Google Finance

📜 How to Scrape Google News

📜 How to Scrape Clutch.co

If you have any questions or feedback, our support team is always available to assist you on your web scraping journey. Happy Scraping!

Frequently Asked Questions

Q. What are the legal considerations for scraping TechCrunch data?

Collecting data from sites such as TechCrunch raises legal and ethical issues. Review TechCrunch’s terms of service, which may include specific policies on data scraping. Make sure your scraping operations comply with these terms and do not violate data protection regulations such as GDPR or CCPA. It is advisable to consult legal advisers to clarify any legal or ethical questions about your data gathering.

Q. What should I do if my IP address gets blocked while scraping?

If your IP address gets blocked while scraping TechCrunch, you can take several measures to mitigate this issue. Implement IP rotation by using proxy services or scraping tools like the Crawlbase Crawling API, which automatically rotates IPs to avoid detection. You can also adjust the rate of your requests to mimic human browsing behavior, reducing the risk of triggering anti-scraping measures.
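Slowing down after a blocked or throttled response could be sketched as below. This is a rough illustration, not a guaranteed fix: the set of status codes treated as "blocked", the retry count, and the delay values are all assumptions, and `fetch` stands for any callable (such as `requests.get`) that returns an object with a `status_code` attribute.

```python
import random
import time

# Status codes treated as signs of blocking or throttling (an assumption).
RETRY_STATUSES = {403, 429, 503}


def fetch_with_backoff(fetch, url, max_retries=3, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url), waiting longer after each blocked or throttled response."""
    response = fetch(url)
    for attempt in range(max_retries):
        if response.status_code not in RETRY_STATUSES:
            break
        # Exponential backoff with jitter: about 2s, 4s, 8s by default
        sleep(base_delay * (2 ** attempt) + random.uniform(0, 1))
        response = fetch(url)
    return response
```

For example, `fetch_with_backoff(requests.get, "https://techcrunch.com")` retries up to three times before returning the last response as it is.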

Q. How can I improve the performance of my TechCrunch scraper?

Multi-threading or asynchronous requests can make your scraper considerably faster. Cut out operations that are not required, and use libraries such as pandas for efficient data handling. The Crawlbase Crawling API can also improve performance by managing IP rotation and handling CAPTCHAs, ensuring uninterrupted access to the data you want to scrape.
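As an illustration of the multi-threading approach, the sketch below scrapes several URLs in parallel with Python's standard `concurrent.futures` module. The function name `scrape_many`, the `scrape_one` parameter, and the worker count are hypothetical choices for this example; a small worker count keeps the request rate moderate.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_many(urls, scrape_one, max_workers=4):
    """Scrape several URLs concurrently; returns {url: result} for successes."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each submitted task back to the URL it is working on
        futures = {pool.submit(scrape_one, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not stop the rest
                print(f"Failed to scrape {url}: {exc}")
    return results
```

Here `scrape_one` would be your single-page scraper, and `urls` the list of article links collected from the listings.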