Stack Overflow, an active site for programming knowledge, offers a wealth of information that can be extracted for various purposes, from research to staying updated on the latest trends in specific programming languages or technologies.

This tutorial will focus on the targeted extraction of questions and answers related to a specific tag. This approach allows you to tailor your data collection to your interests or requirements. Whether you’re a developer seeking insights into a particular topic or a researcher exploring trends in a specific programming language, this guide will walk you through efficiently scraping Stack Overflow questions with your chosen tags using Crawlbase.

We have created a video tutorial on how to scrape Stack Overflow questions for your convenience. However, if you prefer a written guide, simply scroll down.

Table of Contents

I. How Do I Extract Data from Stack Overflow?

II. Understanding Stack Overflow Questions Page Structure

III. Prerequisites

IV. Setting Up the Project

V. Scrape using Crawlbase Crawling API

VI. How to Create a Custom Scraper Using Cheerio

VII. Conclusion

VIII. Frequently Asked Questions

I. How Do I Extract Data from Stack Overflow?

Scraping Stack Overflow can be immensely valuable for several reasons, particularly due to its status as a dynamic and comprehensive knowledge repository for developers. Here are some compelling reasons to consider scraping Stack Overflow:

- Abundance of Knowledge: Stack Overflow hosts a vast collection of questions and answers on a wide range of programming and development topics. With millions of questions and answers available, it is a rich source of information covering diverse aspects of software development.

- Developer Community Insights: Stack Overflow is a vibrant community where developers from around the world seek help and share their expertise. Scraping this platform allows you to gain insights into current trends, common challenges, and emerging technologies within the developer community.

- Timely Updates: The platform is continually updated with new questions, answers, and discussions. By scraping Stack Overflow, you can stay current with the latest developments in various programming languages, frameworks, and technologies.

- Statistical Analysis: Extracting and analyzing data from Stack Overflow can provide valuable statistical insights. This includes trends in question frequency, popular tags, and the distribution of answers over time, helping you understand the evolving landscape of developer queries and solutions.
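To make the statistical-analysis point concrete, here is a minimal sketch that counts how often each tag appears across a set of scraped questions. It assumes each question is a plain object with a `tags` array of strings; that shape and the `countTags` name are illustrative, not the exact output format of the scrapers in this guide.

```javascript
// Count tag occurrences across scraped questions and return [tag, count]
// pairs sorted from most to least frequent.
// Assumes each question looks like { title: string, tags: string[] }
// (a hypothetical shape used for illustration).
function countTags(questions) {
  const counts = new Map();
  for (const question of questions) {
    // A Set ensures a tag repeated within one question is counted once.
    for (const tag of new Set(question.tags ?? [])) {
      counts.set(tag, (counts.get(tag) ?? 0) + 1);
    }
  }
  // Sort by count (descending), then alphabetically to break ties.
  return [...counts.entries()].sort(
    (a, b) => b[1] - a[1] || a[0].localeCompare(b[0])
  );
}

// Usage with made-up sample data:
const sample = [
  { title: 'Q1', tags: ['javascript', 'react'] },
  { title: 'Q2', tags: ['javascript', 'node.js'] },
  { title: 'Q3', tags: ['javascript', 'react', 'typescript'] },
];
console.log(countTags(sample));
```

The same idea extends to other statistics, such as grouping questions by posting date to see how activity on a tag changes over time.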

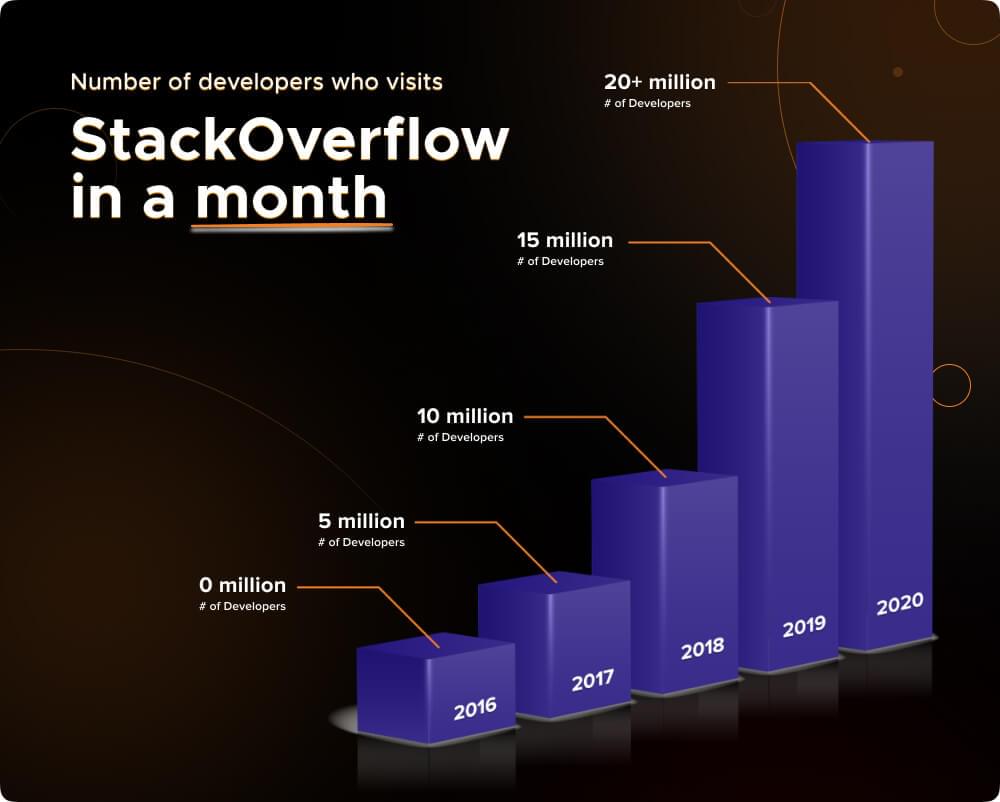

As of 2020, Stack Overflow attracted approximately 25 million visitors, showcasing its widespread popularity and influence within the developer community. This massive user base ensures that the content on the platform is diverse, reflecting a wide range of experiences and challenges developers encounter globally.

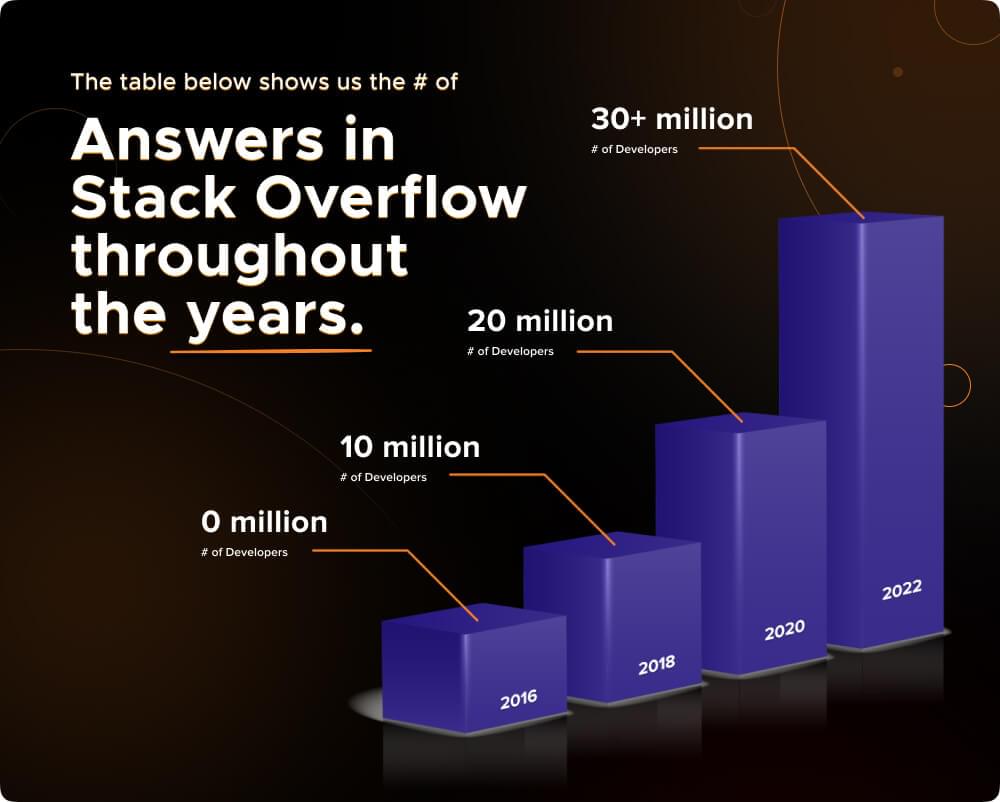

Moreover, with more than 33 million answers available on Stack Overflow, the platform has become an expansive repository of solutions to programming problems. Scraping this vast database can provide access to a wealth of knowledge, allowing developers and researchers to extract valuable insights and potentially discover patterns in the responses provided over time.

II. Understanding Stack Overflow Questions Page Structure

Understanding the structure of the Stack Overflow Questions page is crucial when building a scraper because it allows you to identify and target the specific HTML elements that contain the information you want to extract.

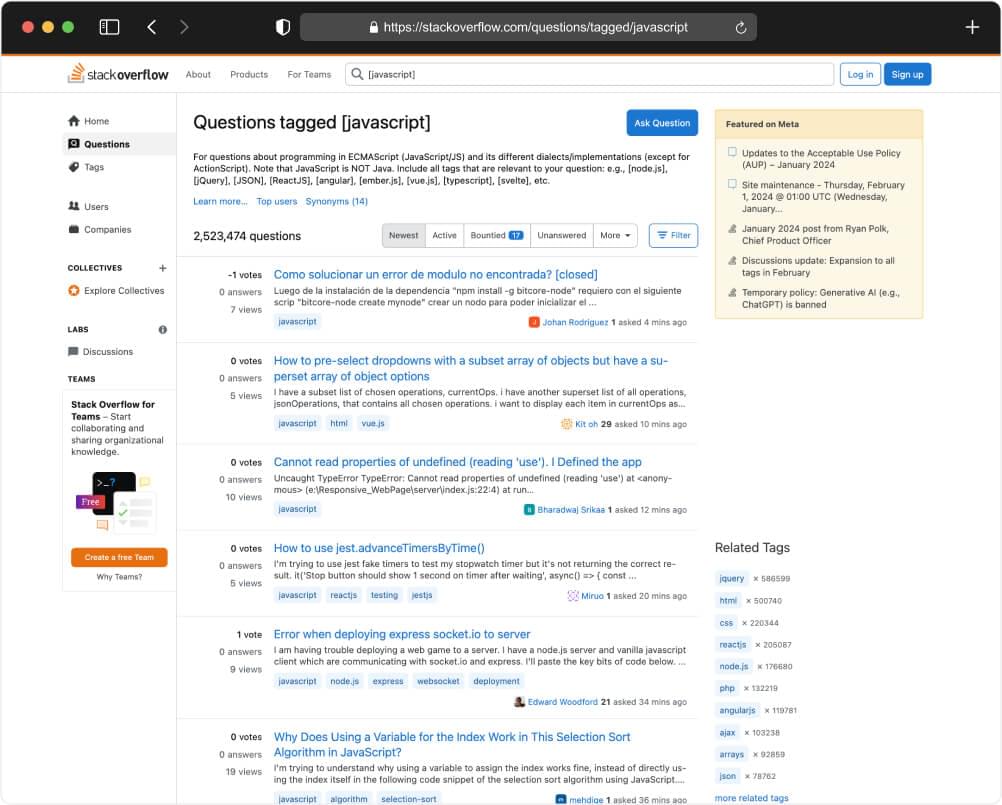

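The target URL for this guide is https://stackoverflow.com/questions/tagged/javascript. Each question on this page is rendered as a summary block containing its title and link, a short excerpt, its vote, answer, and view counts, its tags, and the asker’s name and activity time. Knowing which HTML elements hold each of these fields is what allows a scraper to extract them reliably.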
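The target URL itself follows a predictable pattern: the tag goes in the path, and Stack Overflow’s listing pages also accept sorting and paging query parameters. The sketch below builds such URLs for any tag. The `tab`, `page`, and `pagesize` parameter names reflect Stack Overflow’s public listing URLs at the time of writing and should be treated as assumptions; `buildTagUrl` is a hypothetical helper name.

```javascript
// Build a Stack Overflow "questions tagged X" listing URL.
// The tab/page/pagesize query parameters mirror the public listing URLs
// at the time of writing; they are assumptions that may change.
function buildTagUrl(tag, { tab = 'newest', page = 1, pageSize = 50 } = {}) {
  // encodeURIComponent handles tags containing special characters,
  // e.g. "c#" becomes "c%23" and "c++" becomes "c%2B%2B".
  const url = new URL(
    `https://stackoverflow.com/questions/tagged/${encodeURIComponent(tag)}`
  );
  url.searchParams.set('tab', tab);
  url.searchParams.set('page', String(page));
  url.searchParams.set('pagesize', String(pageSize));
  return url.toString();
}

console.log(buildTagUrl('javascript', { page: 2 }));
console.log(buildTagUrl('c++'));
```

Encoding the tag matters because characters like `#` and `+` would otherwise be read as a URL fragment marker or a space.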

III. Prerequisites

Before jumping into the coding phase, let’s ensure that you have everything set up and ready. Here are the prerequisites you need:

- Node.js installed on your system

- Why it’s important: Node.js is a runtime environment that allows you to run JavaScript on your machine. It’s crucial for executing the web scraping script we’ll be creating.

- How to get it: Download and install Node.js from the official website: https://nodejs.org

- Basic knowledge of JavaScript:

- Why it’s important: Since we’ll be using JavaScript for web scraping, having a fundamental understanding of the language is essential. This includes knowledge of variables, functions, loops, and basic DOM manipulation.

- How to acquire it: If you’re new to JavaScript, consider going through introductory tutorials or documentation available on platforms like Mozilla Developer Network (MDN) or W3Schools.

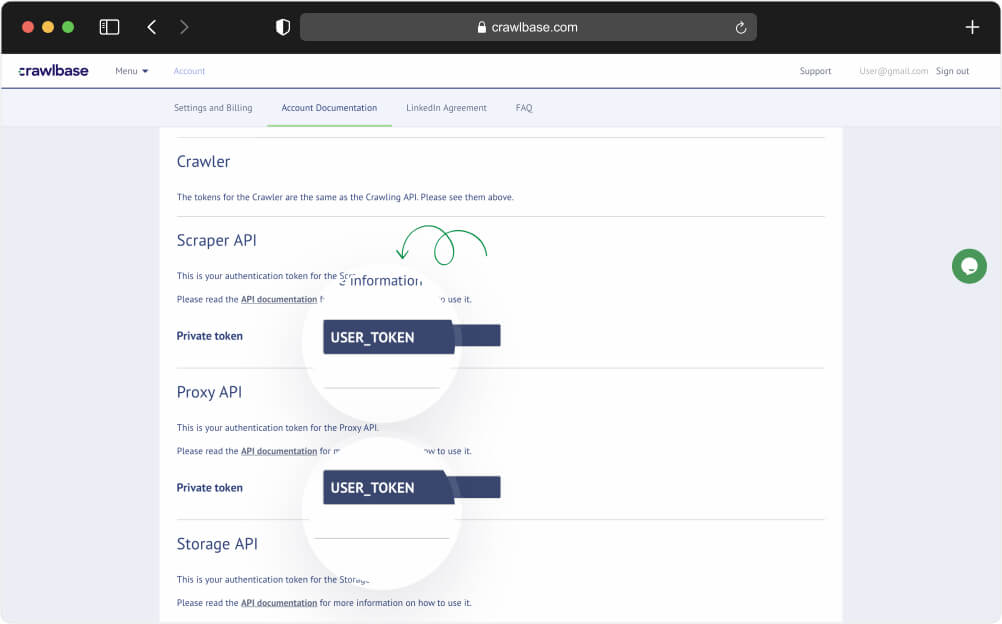

- Crawlbase API Token:

- Why it’s important: We’ll be utilizing the Crawlbase APIs for efficient web scraping. The API token is necessary for authenticating your requests.

- How to get it: Visit the Crawlbase website, sign up for an account, and obtain your API tokens from your account settings. These tokens will serve as the key to unlock the capabilities of the Crawling API.
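Since the token authenticates every request, it is safer to keep it out of your source files. Here is a minimal sketch, assuming you store it in an environment variable named `CRAWLBASE_TOKEN`. That name and the `getCrawlbaseToken` helper are choices made for this guide; the Crawlbase library does not read the variable on its own.

```javascript
// Read the Crawlbase token from an environment variable instead of
// hard-coding it. CRAWLBASE_TOKEN is a name chosen for this guide.
function getCrawlbaseToken(env = process.env) {
  const token = env.CRAWLBASE_TOKEN;
  if (!token || !token.trim()) {
    throw new Error(
      'Missing CRAWLBASE_TOKEN environment variable. ' +
        'Example: CRAWLBASE_TOKEN=your_token node generic_scraper.js'
    );
  }
  // Trim stray whitespace that often sneaks in when copying the token.
  return token.trim();
}
```

On macOS or Linux you can set the variable inline, as in the error message. In Windows PowerShell, run `$env:CRAWLBASE_TOKEN = "your_token"` before starting the script.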

IV. Setting Up the Project

To kick off our scraping project and establish the necessary environment, follow these step-by-step instructions:

Windows

- Visit the official Node.js website and download the Long-Term Support (LTS) version for Windows.

- Run the installer and follow the setup wizard (keep default options).

- Verify installation:

```bash
node -v
```

macOS

- Go to https://nodejs.org and download the macOS installer (LTS).

- Follow the installation wizard.

- Verify installation:

```bash
node -v
```

Linux (Ubuntu/Debian)

Install Node.js LTS via NodeSource:

```bash
curl -fsSL https://deb.nodesource.com/setup_lts.x | sudo -E bash -
sudo apt-get install -y nodejs
```

Verify installation:

```bash
node -v
```
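Whichever platform you use, you can also check the version from JavaScript, for example to make a scraper script fail early on an outdated runtime. In this sketch, the minimum major version of 18 (`MIN_NODE_MAJOR`) is an assumption, not a documented requirement of the libraries used here; adjust it to whatever your installed packages need.

```javascript
// Fail early if the running Node.js is older than a chosen minimum.
// MIN_NODE_MAJOR = 18 is an assumed floor, not a documented requirement.
const MIN_NODE_MAJOR = 18;

// process.version looks like "v20.11.1"; extract the major component.
function nodeMajor(version = process.version) {
  return Number.parseInt(version.replace(/^v/, '').split('.')[0], 10);
}

function assertNodeVersion(min = MIN_NODE_MAJOR, version = process.version) {
  const major = nodeMajor(version);
  if (Number.isNaN(major) || major < min) {
    throw new Error(`Node.js ${min}+ required, found ${version}`);
  }
  return major;
}

console.log(`Running Node.js major version ${nodeMajor()}`);
```

Calling `assertNodeVersion()` at the top of a script turns a confusing runtime error later on into a clear message at startup.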

Project Initialization

Once you’re done with the installation, create a new project folder and move into it:

```bash
mkdir stackoverflow_scraper
cd stackoverflow_scraper
```

Initialize npm:

```bash
npm init -y
```

Add Libraries

To add the libraries we’ll use for the project, run the following:

Crawlbase

```bash
npm install crawlbase
```

Cheerio

```bash
npm install cheerio
```
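To confirm both packages were installed into the project, you can save a small script such as `check_deps.js` (a hypothetical name) in the project folder and run it with `node check_deps.js`. It relies only on Node’s built-in `require.resolve`, which throws when a module cannot be found from the script’s location.

```javascript
// Report which of the given packages cannot be resolved from this folder.
// require.resolve throws if the module is not installed.
function missingPackages(names) {
  return names.filter((name) => {
    try {
      require.resolve(name);
      return false;
    } catch {
      return true;
    }
  });
}

const missing = missingPackages(['crawlbase', 'cheerio']);
console.log(
  missing.length > 0
    ? `Missing: ${missing.join(', ')} (run: npm install ${missing.join(' ')})`
    : 'All dependencies are installed.'
);
```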

V. Scrape using Crawlbase Crawling API

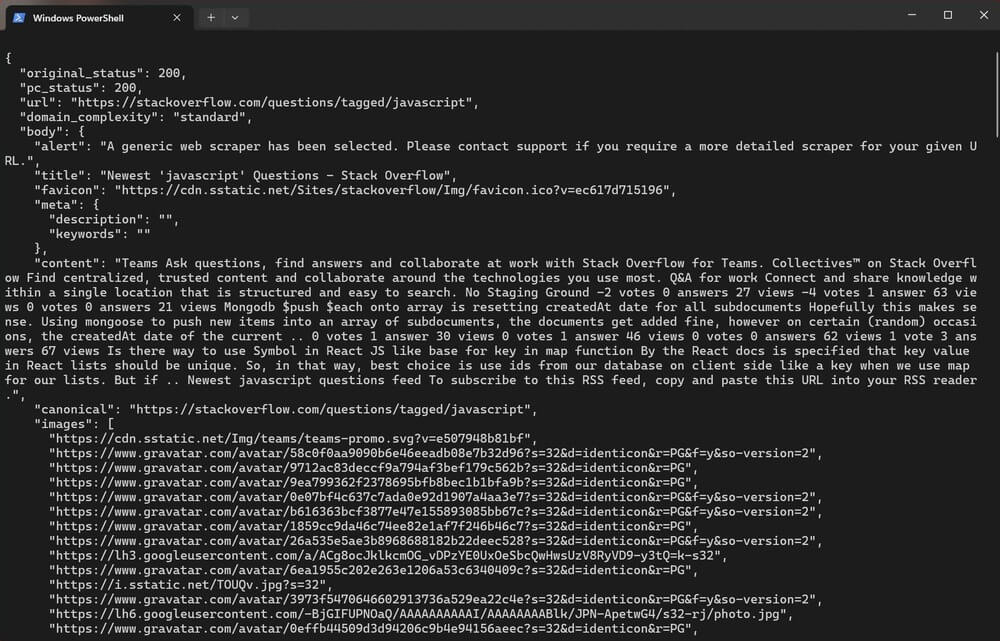

Now, let’s walk through how you can use the Crawlbase Crawling API with the autoparse=true parameter to extract data from Stack Overflow pages. Using this parameter enables the API to automatically parse the page content and return a clean JSON response, thereby reducing the need for manual parsing on your part.
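Under the hood, the Crawling API is an HTTP endpoint: the token, the URL-encoded target page, and options such as `autoparse` are passed as query parameters. The sketch below only builds the request URL and makes no network call. The endpoint `https://api.crawlbase.com/` matches Crawlbase’s documentation at the time of writing, but check the current docs before relying on it; `buildCrawlingApiUrl` is a hypothetical helper.

```javascript
// Build a Crawling API request URL for a target page.
// URLSearchParams percent-encodes the target URL (":" -> "%3A", "/" -> "%2F").
function buildCrawlingApiUrl(token, targetUrl, options = {}) {
  const params = new URLSearchParams({ token, url: targetUrl });
  for (const [key, value] of Object.entries(options)) {
    params.set(key, String(value));
  }
  return `https://api.crawlbase.com/?${params.toString()}`;
}

console.log(
  buildCrawlingApiUrl(
    'YOUR_TOKEN',
    'https://stackoverflow.com/questions/tagged/javascript',
    { autoparse: true }
  )
);
```

Encoding the target URL is essential: an unencoded `?` or `&` inside it would be read as part of the API’s own query string.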

Create a generic_scraper.js file and write the following code:

```javascript
const { CrawlingAPI } = require('crawlbase');
```
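The listing above shows only the first line of `generic_scraper.js`. Here is a minimal sketch of how a complete version might look. It assumes the `crawlbase` package’s `CrawlingAPI` class, its `get(url, options)` method, and the `statusCode` and `body` fields on the response, following the package README; verify them against the version you install. `CRAWLBASE_TOKEN` and `parseBody` are names chosen for this sketch.

```javascript
// generic_scraper.js (sketch): fetch a Stack Overflow tag page through the
// Crawling API with autoparse enabled and print the parsed JSON.
// Assumes `npm install crawlbase` and a CRAWLBASE_TOKEN environment variable.
const TARGET_URL = 'https://stackoverflow.com/questions/tagged/javascript';

// With autoparse the body is expected to be JSON; fall back to raw text
// if it does not parse, and pass through values that are already objects.
function parseBody(body) {
  if (body !== null && typeof body === 'object' && !Buffer.isBuffer(body)) {
    return body;
  }
  const text = Buffer.isBuffer(body) ? body.toString('utf8') : String(body);
  try {
    return JSON.parse(text);
  } catch {
    return text;
  }
}

async function main() {
  // Required lazily so parseBody can be used without the package installed.
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });

  const response = await api.get(TARGET_URL, { autoparse: 'true' });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  console.log(JSON.stringify(parseBody(response.body), null, 2));
}

// Only hit the network when a token is configured.
if (process.env.CRAWLBASE_TOKEN) {
  main().catch((error) => {
    console.error(error);
    process.exitCode = 1;
  });
} else {
  console.error('Set CRAWLBASE_TOKEN to run this script.');
}
```

With this sketch, run the script with the token set, for example `CRAWLBASE_TOKEN=your_token node generic_scraper.js` on macOS or Linux.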

Use the command below to run the script:

```bash
node generic_scraper.js
```

Example output:

While this approach simplifies the process, it remains based on general parsing rules, which may not provide the same level of customization as a fully tailored scraper.

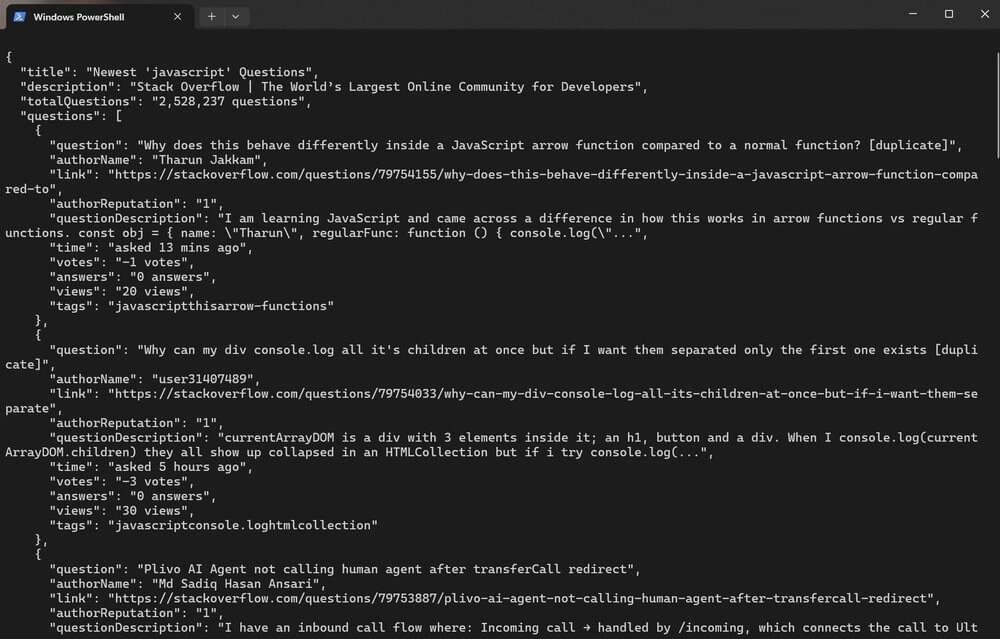

VI. How to Create a Custom Scraper Using Cheerio

Unlike the automatic parsing performed by the autoparse parameter, pairing Cheerio with the Crawling API offers a more manual, fine-tuned approach to web scraping: the Crawling API fetches the page’s HTML, and Cheerio parses it so you can select elements with CSS selectors. This gives us greater control and customization, allowing us to extract precisely the data we want from the Stack Overflow Questions page. Cheerio’s advantages lie in hands-on learning, targeted extraction, and a deeper understanding of HTML structure.

Copy the code below into a custom_scraper.js file. Take the time to study it to see how we extract the specific elements we want from the complete HTML of the target page.

```javascript
const { CrawlingAPI } = require('crawlbase');
```
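The listing above shows only the first line of `custom_scraper.js`. Below is a minimal sketch of what it could contain. The Crawling API fetches the raw HTML without autoparse, and Cheerio’s `load()` plus CSS selectors pull out each question’s title, link, excerpt, stats, tags, and author. The selectors in `SELECTORS`, the output field names, and the `CRAWLBASE_TOKEN` variable are assumptions made for this sketch. The selectors are based on Stack Overflow’s markup at the time of writing, so inspect the live page and adjust them if they stop matching.

```javascript
// custom_scraper.js (sketch): fetch raw HTML via the Crawling API and
// extract question fields with Cheerio. Selectors are assumptions.
const BASE_URL = 'https://stackoverflow.com';
const TARGET_URL = `${BASE_URL}/questions/tagged/javascript`;

const SELECTORS = {
  question: '.s-post-summary',
  title: '.s-post-summary--content-title a',
  excerpt: '.s-post-summary--content-excerpt',
  stat: '.s-post-summary--stats-item',
  tag: '.s-post-summary--meta-tags .post-tag',
  author: '.s-user-card--link a',
};

// Turn a relative link like "/questions/1/foo" into an absolute URL.
function toAbsoluteUrl(href) {
  return href ? new URL(href, BASE_URL).href : null;
}

// Collapse whitespace runs left over from the HTML layout.
function cleanText(text) {
  return text.replace(/\s+/g, ' ').trim();
}

function parseQuestions(html) {
  const cheerio = require('cheerio'); // loaded lazily; needs `npm install cheerio`
  const $ = cheerio.load(html);

  return $(SELECTORS.question)
    .map((_, element) => {
      const $q = $(element);
      const $title = $q.find(SELECTORS.title).first();
      return {
        title: cleanText($title.text()),
        link: toAbsoluteUrl($title.attr('href')),
        excerpt: cleanText($q.find(SELECTORS.excerpt).text()),
        // Illustrative values: something like ["3 votes", "1 answer", "42 views"]
        stats: $q.find(SELECTORS.stat).map((_, s) => cleanText($(s).text())).get(),
        tags: $q.find(SELECTORS.tag).map((_, t) => cleanText($(t).text())).get(),
        author: cleanText($q.find(SELECTORS.author).first().text()),
      };
    })
    .get();
}

async function main() {
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });
  const response = await api.get(TARGET_URL); // raw HTML, no autoparse
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  console.log(JSON.stringify(parseQuestions(String(response.body)), null, 2));
}

// Only hit the network when a token is configured.
if (process.env.CRAWLBASE_TOKEN) {
  main().catch((error) => {
    console.error(error);
    process.exitCode = 1;
  });
} else {
  console.error('Set CRAWLBASE_TOKEN to run this script.');
}
```

Keeping the selectors in one object means a layout change on Stack Overflow usually requires editing only that object.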

To run the code, simply enter the command below:

```bash
node custom_scraper.js
```

The JSON response contains the data parsed from the Stack Overflow Questions page tagged with “javascript”: the key details for each question on the page, making it straightforward to work with the data for analysis or display.
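If you want to keep the results for later analysis, a small helper can write them to disk and read them back. This sketch uses Node’s built-in `fs` and `path` modules. `saveQuestions`, `loadQuestions`, and any file path you pass in are illustrative names, not part of the scraper code above.

```javascript
// Save scraped questions to a JSON file so they can be analyzed later
// without re-scraping, and load them back.
const fs = require('fs');
const path = require('path');

function saveQuestions(questions, filePath) {
  // Create the target folder if it does not exist yet.
  fs.mkdirSync(path.dirname(filePath), { recursive: true });
  fs.writeFileSync(filePath, JSON.stringify(questions, null, 2), 'utf8');
  return questions.length; // number of questions written
}

function loadQuestions(filePath) {
  return JSON.parse(fs.readFileSync(filePath, 'utf8'));
}
```

For example, `saveQuestions(questions, 'data/javascript-questions.json')` stores one tag’s results, which you can later load and feed into your own analysis.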

You can also check out the full code on our GitHub page.

VII. Conclusion

Congratulations on navigating through the ins and outs of web scraping with JavaScript and Crawlbase! You’ve just unlocked a powerful set of tools to dive into the vast world of data extraction. The beauty of what you’ve learned here is that it’s not confined to Stack Overflow – you can take these skills and apply them to virtually any website you choose.

Now, when it comes to choosing your scraping approach, it’s a bit like picking your favorite tool. The Crawling API with autoparse is like a trusty Swiss Army knife: quick and versatile for general tasks. The Crawling API paired with Cheerio, on the other hand, is more like a finely tuned instrument, giving you the freedom to work with the data in exactly the way that suits your needs.

VIII. Frequently Asked Questions

Q. Can you scrape Stack Overflow?

A: Yes, but it’s important to be responsible about it. Think of web scraping like a tool – you can use it for good or not-so-good things. Whether it’s okay or not depends on how you do it and what you do with the info you get. If you’re scraping stuff that’s not public and needs a login, that can be viewed as unethical and possibly illegal, depending on the specific situation.

In essence, while scraping publicly available data is generally legal, it must be done responsibly. Always adhere to the website’s terms of service, respect applicable laws, and use web scraping as a tool for constructive purposes. Responsible and ethical web scraping practices ensure that the benefits of this tool are realized without crossing legal boundaries.
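One concrete way to scrape responsibly is to avoid flooding a site with requests: fetch pages one at a time with a pause in between. The sketch below is generic. `fetchPage` stands for whatever download function you use (for example, a wrapper around the Crawling API call), and the 2-second default delay is an arbitrary, conservative choice, not a limit published by Stack Overflow or Crawlbase.

```javascript
// Fetch pages sequentially with a pause between requests instead of
// firing them all at once. `fetchPage` is a caller-supplied async function
// (hypothetical here); the default delay is an arbitrary choice.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function fetchSequentially(urls, fetchPage, delayMs = 2000) {
  const results = [];
  for (const [index, url] of urls.entries()) {
    if (index > 0) await sleep(delayMs); // pause before every request but the first
    results.push(await fetchPage(url));
  }
  return results;
}
```

Processing pages sequentially also keeps results in the same order as the input URLs, which is convenient when paging through a tag.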

Q. Does ChatGPT replace Stack Overflow?

A: Not exactly. ChatGPT can provide quick explanations, code snippets, and guidance, but Stack Overflow remains valuable for its community-driven, peer-reviewed answers. While ChatGPT helps you get started or clarify concepts, Stack Overflow offers discussions, real-world problem-solving, and insights from developers with hands-on experience. Ideally, they complement each other rather than replace one another.