Microsoft Excel is a popular spreadsheet program used for data analysis and visualization tasks. It provides several powerful features and formulas for carrying out various operations, such as calculations, graphing, and sorting.

Beyond its traditional uses, Excel can also pull data from a website directly into a spreadsheet. This is particularly helpful if you want to bring in data from external sources and integrate it into your Excel work environment without leaving the program.

Instead of copying data from websites and pasting it into a spreadsheet, you can automate the entire process and improve both your accuracy and your productivity.

This article discusses how to scrape data from a website into an Excel sheet automatically and turn it into a structured format. We’ll also talk about how you can use scraping tools for Excel, like Crawlbase, to make the scraping process fast and hassle-free.

Let’s start by explaining why you should use Crawlbase when extracting online information into Excel. Note that you don’t need to be a programmer to use Crawlbase for Excel web scraping.

Why Use Crawlbase For Excel Web Scraping?

Scraping online data can be challenging. Most modern websites have implemented anti-scraping measures that impede crawling attempts. For example, if a site detects a high number of repeated requests coming from the same IP address, it can block the IP or restrict its access. This can thwart the scraping process.
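To make that blocking mechanism concrete, here is a simplified Python illustration of one check a site might run: counting the requests each IP address sends within a sliding time window. The class name and thresholds are hypothetical, and real anti-scraping systems combine many more signals than this.

```python
from collections import defaultdict, deque


class RepeatedRequestDetector:
    """Hypothetical illustration: flag an IP that sends more than
    `max_requests` requests within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-IP timestamps of recent requests, oldest first.
        self._history = defaultdict(deque)

    def is_blocked(self, ip: str, now: float) -> bool:
        """Record a request from `ip` at time `now` and report whether
        that IP is now over the limit."""
        history = self._history[ip]
        history.append(now)
        # Drop timestamps that have fallen out of the sliding window.
        while history and now - history[0] > self.window_seconds:
            history.popleft()
        return len(history) > self.max_requests
```

With `max_requests=3` and `window_seconds=10`, the fourth request from the same IP within ten seconds is flagged, while a request from a different IP is not.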

If you scrape website data into Excel, especially with auto-refresh enabled for the fetched data, the target site may block you. We’ll discuss how to use the auto-refresh feature when scraping dynamic websites into Excel later in this article.

With the help of web scraping tools like Crawlbase, you can turn the data on a website into an Excel spreadsheet quickly and easily. Crawlbase lets you scrape website data into Excel columns at scale without running into the usual scraping challenges.

Here are some reasons why Crawlbase is excellent for your Excel web scraping tasks:

- Easy to use: It’s easy to get up and running with Crawlbase, even without advanced programming skills. It provides an intuitive API that lets you quickly retrieve information from websites. You can use it for both small-scale and large-scale data extraction tasks.

- Supports advanced scraping: With Crawlbase, you won’t need to worry about using Excel to pull data from complicated websites. It supports JavaScript rendering, which lets you retrieve content from dynamic websites, even those built with modern technologies like React.js or Angular.

- Supports anonymous crawling: You can use Crawlbase to pull online data without exposing your real identity. It has a large pool of proxies you can use to remain anonymous, as well as several data centers around the world.

- Bypasses scraping obstacles: Crawlbase helps you get around the access restrictions that many web applications put in place against scraping. You can use it to avoid blocks, CAPTCHAs, and other obstacles that would otherwise keep you from retrieving data quickly and efficiently.

- Free trial account: Crawlbase offers 1,000 free credits for testing the tool. Before committing to a paid plan, you can use the free account to try out its capabilities.

How Crawlbase Works

Crawlbase provides a simple Crawling API that lets you extract online data quickly and efficiently. With the API, pulling web content into an Excel spreadsheet is easy, even if you do not have a programming background.

The Crawling API URL begins with the following base part:

https://api.crawlbase.com

You’ll also need to provide the following two mandatory query string parameters:

- Authentication token: A unique token that authorizes you to use the API.

- URL: The URL of the page whose content you want to scrape.

When you sign up for a Crawlbase account, you’ll be provided with the following types of authentication tokens:

- Normal token: For making generic web requests.

- JavaScript token: For scraping advanced, dynamic websites. If a site renders its content via JavaScript, using this token can help you harvest its data smoothly.

Here is how to add the authentication token to your API request:

https://api.crawlbase.com/?token=ADD_TOKEN

The second mandatory parameter is the target website’s URL. The URL should begin with http:// or https:// and be fully URL-encoded (percent-encoded). Encoding replaces characters that have a special meaning in a query string, such as :, /, ?, and &, with %XX escape sequences. This way, the target URL is passed to the API as a single parameter value instead of being misread as part of the API request URL itself.
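As a quick illustration of what encoding does, this Python sketch uses the standard library’s `urllib.parse.quote` to percent-encode a target URL. Passing `safe=""` matters here, because `quote` leaves `/` unescaped by default. The helper name `encode_target_url` is our own, not part of Crawlbase.

```python
from urllib.parse import quote


def encode_target_url(target_url: str) -> str:
    """Percent-encode a full URL so it can be passed as one query-string value."""
    # safe="" makes quote() escape "/" too; by default it leaves "/" untouched.
    return quote(target_url, safe="")


print(encode_target_url("https://www.timeanddate.com/moon/phases/"))
# https%3A%2F%2Fwww.timeanddate.com%2Fmoon%2Fphases%2F
```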

Here is how to add the URL of the website you want to scrape:

https://api.crawlbase.com/?token=ADD_TOKEN&url=ADD_URL
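If you’d rather generate the request URL than assemble it by hand, the following Python sketch builds it with `urllib.parse.urlencode`, which percent-encodes each parameter value. The function name is hypothetical; the base URL and the `token` and `url` parameters are the ones shown above. Pass your JavaScript token instead of the normal token when the target page renders its content with JavaScript.

```python
from urllib.parse import urlencode, urlsplit

API_BASE = "https://api.crawlbase.com/"


def build_crawlbase_url(token: str, target_url: str) -> str:
    """Return a Crawling API request URL with the two mandatory parameters."""
    # The target must start with http:// or https://.
    if urlsplit(target_url).scheme not in ("http", "https"):
        raise ValueError("target URL must start with http:// or https://")
    # urlencode() percent-encodes each value (via quote_plus), including ":" and "/".
    query = urlencode({"token": token, "url": target_url})
    return f"{API_BASE}?{query}"
```

For example, `build_crawlbase_url("USER_TOKEN", "https://www.eia.gov/petroleum/")` returns `https://api.crawlbase.com/?token=USER_TOKEN&url=https%3A%2F%2Fwww.eia.gov%2Fpetroleum%2F`, a URL you can paste into Excel’s web query dialogs.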

That’s all you need to start using Crawlbase to scrape data from a website into Excel. It’s that simple!

If you fill in the required parameters in the request above and paste it into a web browser’s address bar, the API will run and return the full HTML of the target web page.

Next, let’s see how you can use Excel to execute the above API request.

Using Crawlbase To Scrape Data From A Website To Excel

Excel provides a powerful web query feature that lets you pull website data into Excel columns. There are two main ways of using the Excel web query feature:

- Using the From Web command

- Using the New Query command

Let’s see how you can use the commands to collect data from a website using Crawlbase.

a) Using the From Web command

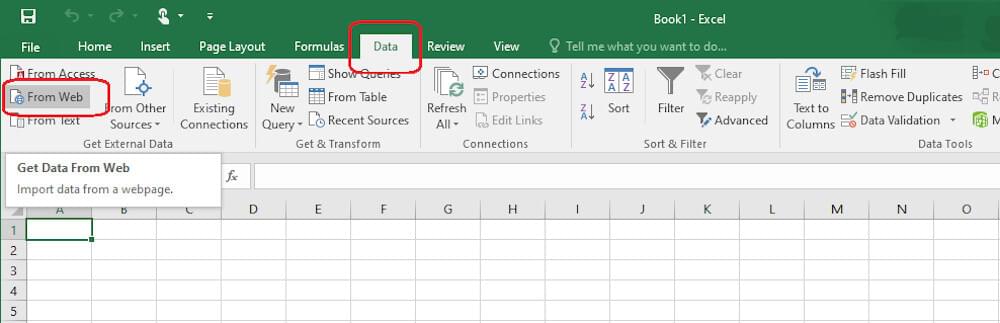

To create a new web query using the From Web command, select the Data ribbon and click the From Web option.

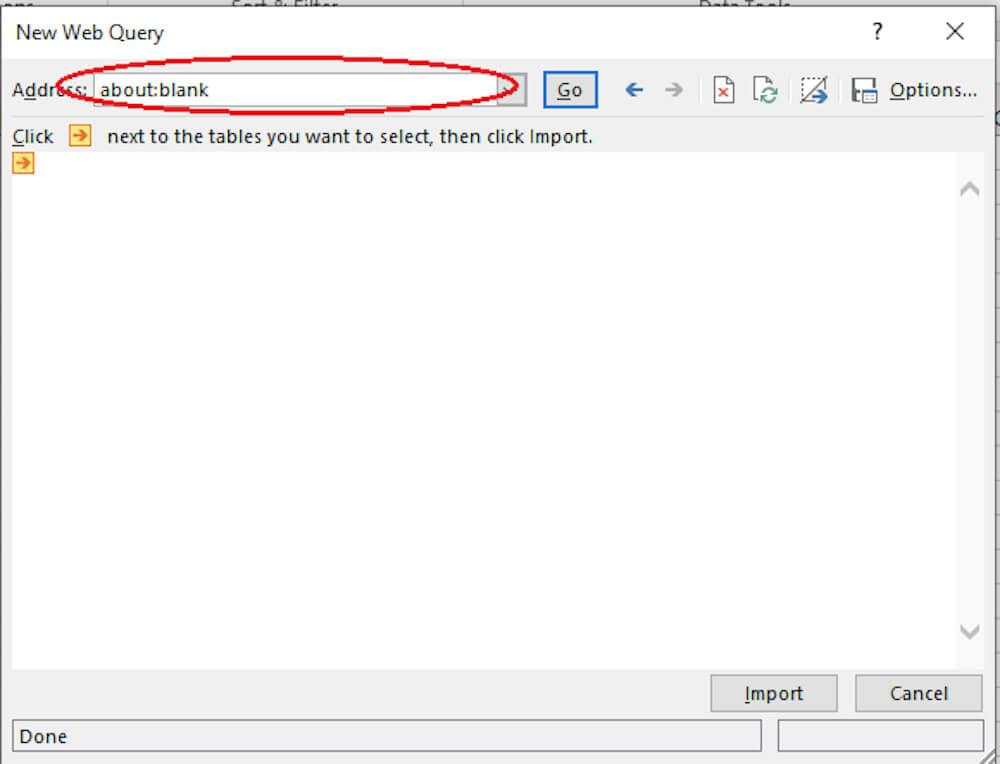

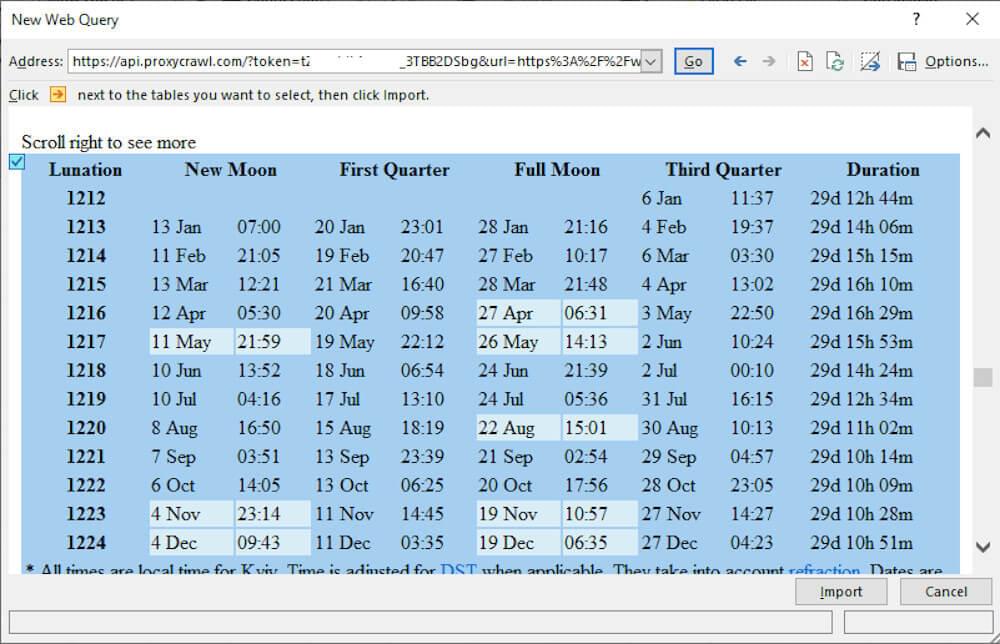

Next, you’ll be presented with the New Web Query dialog box, where you can enter the URL of the web page whose data you want to pull.

In this case, we want to extract data from the timeanddate.com moon phases page (https://www.timeanddate.com/moon/phases/). Since we want Crawlbase to handle the scraping, so we benefit from anonymity and avoid access blocks, we need to wrap the target URL in a Crawlbase API request, as discussed previously.

Let’s go to our Crawlbase dashboard and grab the JavaScript token. Remember that the JavaScript token allows us to extract content from dynamic websites. You can get your token after signing up for an account.

Let’s also encode the target URL. You can use any free online URL encoder for this.

After making the configurations, here is how the URL looks:

https://api.crawlbase.com/?token=USER_TOKEN&url=https%3A%2F%2Fwww.timeanddate.com%2Fmoon%2Fphases%2F

Paste this URL into the Address box of the New Web Query dialog box, then click the Go button.

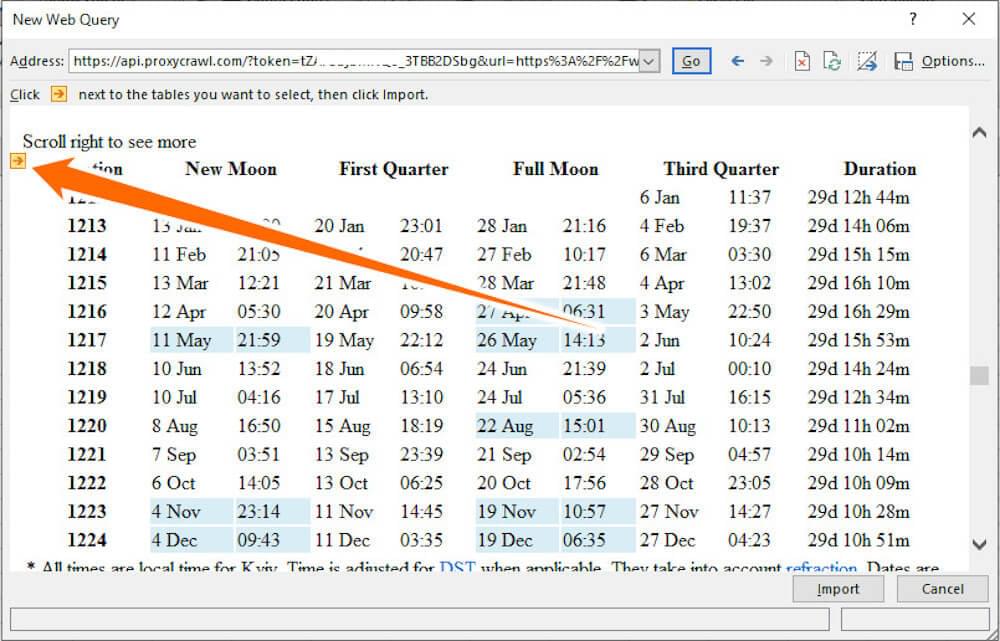

After the web page has loaded into the dialog box, Excel inserts small yellow right-arrow buttons next to the tables or data it finds throughout the page.
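Excel’s table detection happens inside the program. For intuition only, the Python sketch below does something roughly comparable with the standard library’s `html.parser`: it walks the HTML the API returns and collects each `<table>` as rows of cell text. The `TableExtractor` class is hypothetical, assumes rows and cells are explicitly closed, and folds text from nested tables into the enclosing cell.

```python
from __future__ import annotations

from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect every top-level <table> in an HTML page as a list of rows of cell text."""

    def __init__(self) -> None:
        super().__init__()
        self.tables: list[list[list[str]]] = []
        self._depth = 0                    # nesting depth of <table> elements
        self._row: list[str] | None = None   # cells of the row being read
        self._cell: list[str] | None = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._depth += 1
            if self._depth == 1:
                self.tables.append([])
        elif self._depth == 1 and tag == "tr":
            self._row = []
        elif self._depth == 1 and tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "table":
            self._depth = max(0, self._depth - 1)
        elif self._depth == 1 and tag in ("td", "th") and self._cell is not None:
            if self._row is not None:
                # Collapse runs of whitespace inside the cell.
                self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif self._depth == 1 and tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)
```

Call `feed()` with the page HTML, then `close()`, and read the results from `tables`; each entry corresponds loosely to one of the tables Excel marks with an arrow.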

Hover your mouse cursor over the arrow next to the data you need, and Excel outlines that table in blue.

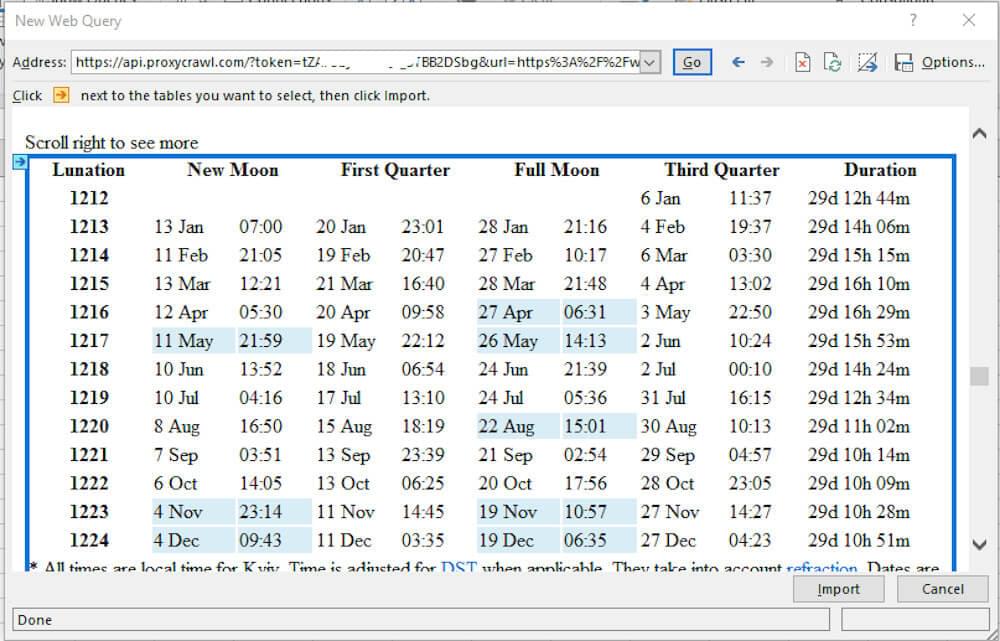

Next, click the arrow. The entire table’s data will be highlighted in blue, and the arrow turns into a green checkmark button.

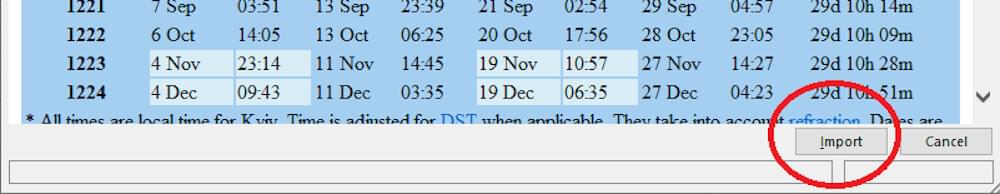

Then, click the Import button to load the selected data into an Excel worksheet.

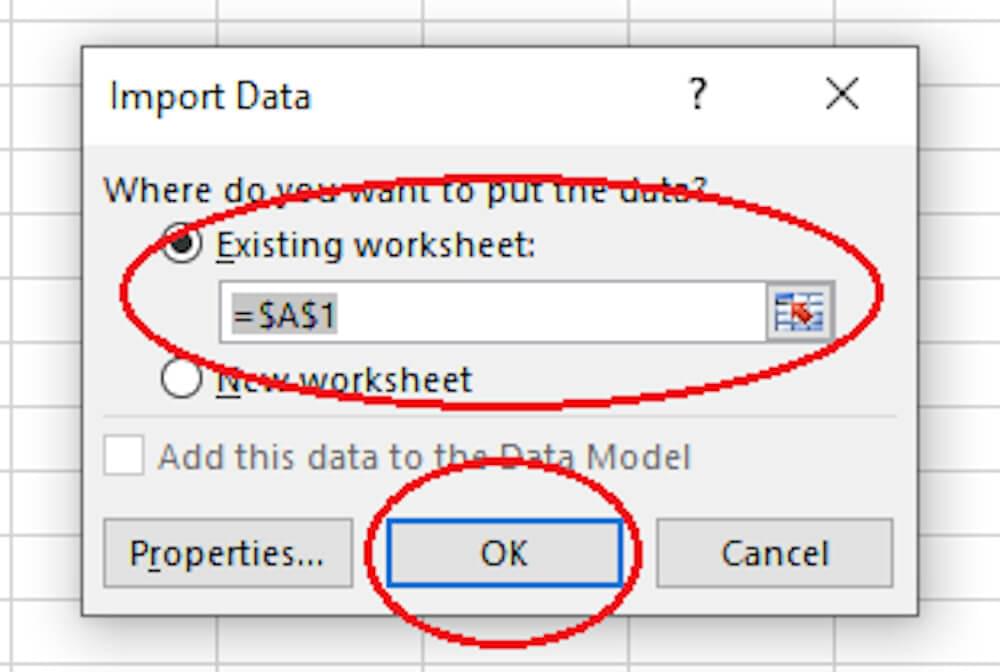

Next, Excel will ask you where you intend to put the imported data. If you want to place the data in the existing worksheet, select the first radio button; otherwise, select the second radio button to instruct Excel to insert the data in a new worksheet.

For this Excel web scraping tutorial, we’ll choose the first radio button. Then, click the OK button.

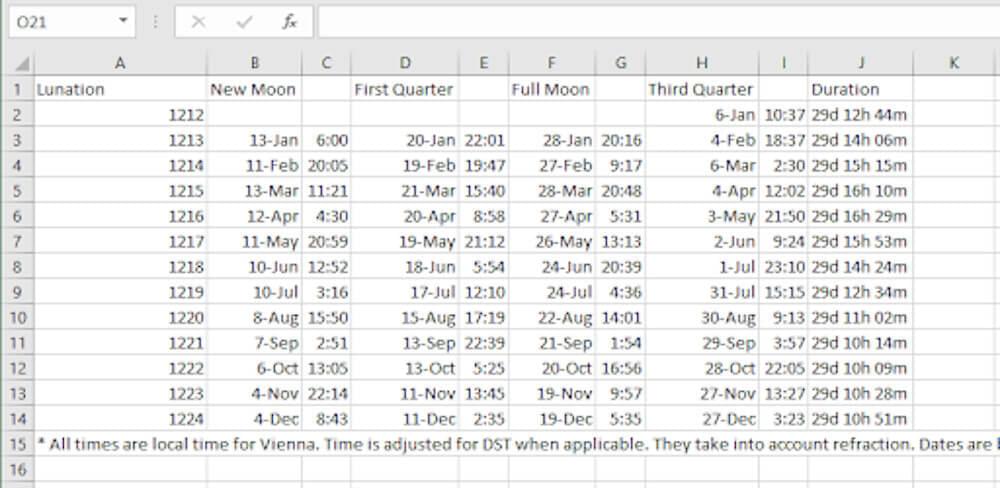

Excel may take a few moments to download the external data. When it finishes, the selected table appears in the worksheet.

It’s that simple!

You can now shape and refine the data according to your specific needs.

b) Using the New Query command

You can also use the New Query command to turn a web page’s data into an Excel spreadsheet. This approach works best for data in table format.

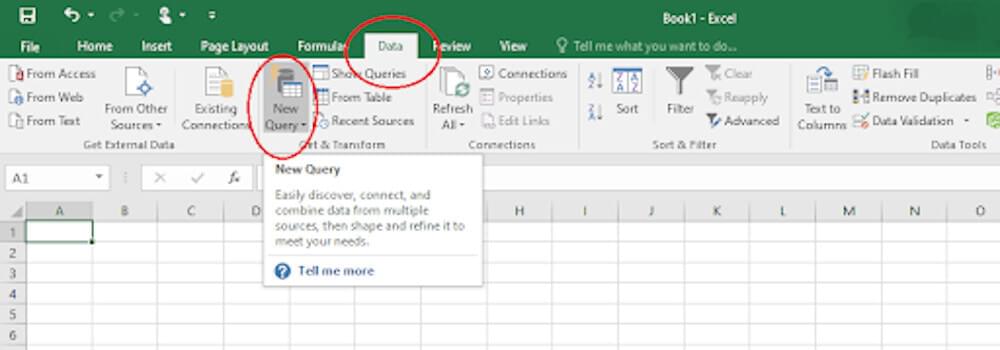

To create a new web query using this command, select the Data ribbon and click the New Query option.

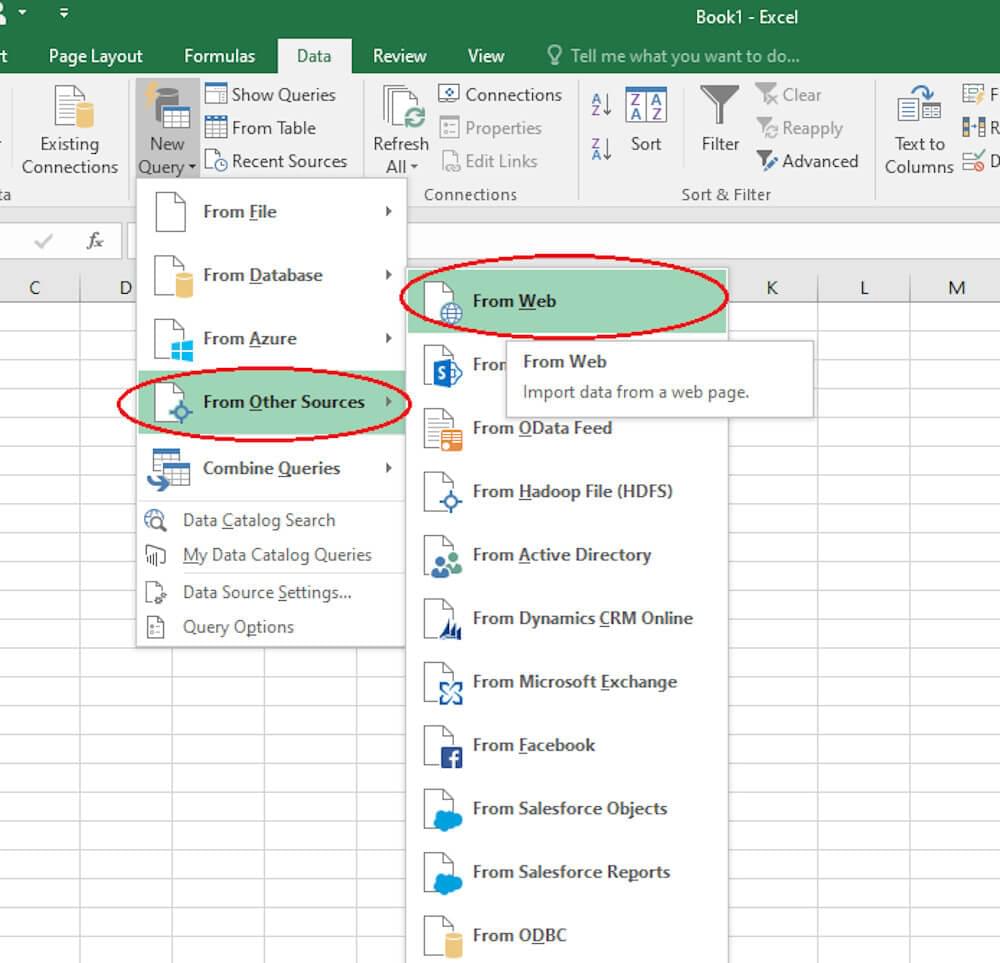

Notice that this command provides several options for retrieving external data and inserting it into an Excel worksheet. For this tutorial, choose From Other Sources, then From Web.

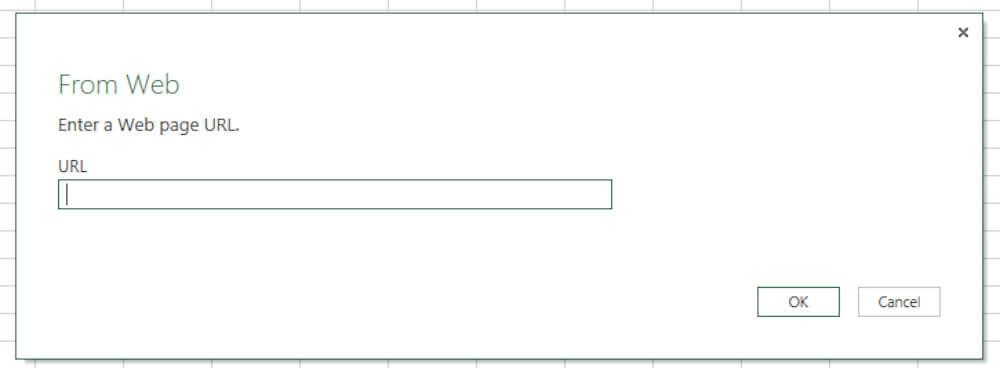

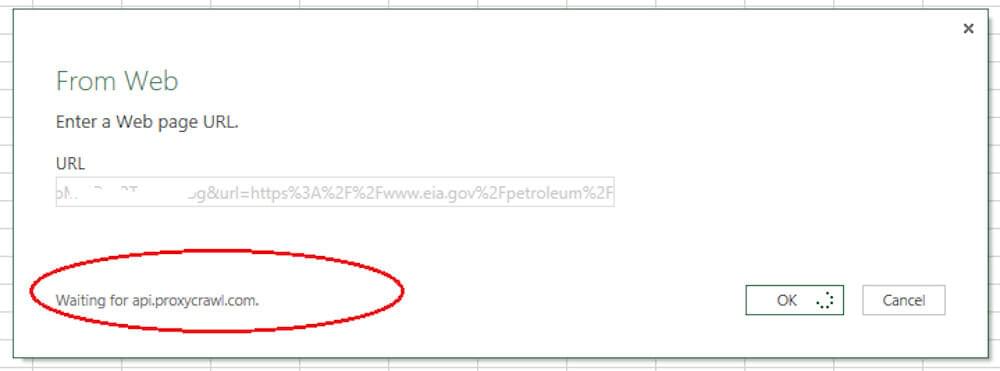

Next, you’ll be presented with the From Web dialog box, where you can enter the URL of the web page whose data you want to extract.

In this case, we want to extract data from the U.S. Energy Information Administration’s petroleum page (https://www.eia.gov/petroleum/). Just as before, we’ll pass the URL through Crawlbase.

Here is how the URL looks:

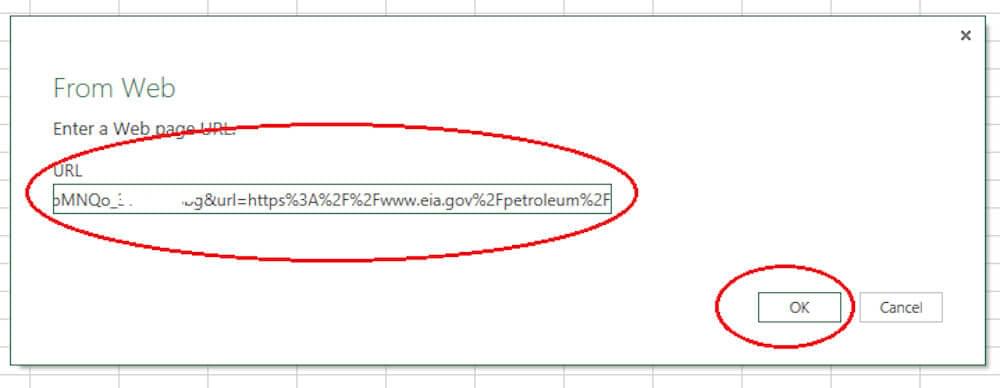

https://api.crawlbase.com/?token=USER_TOKEN&url=https%3A%2F%2Fwww.eia.gov%2Fpetroleum%2F

Paste this URL into the URL box of the From Web dialog box, then click the OK button.

Excel will now attempt to make an anonymous connection to the target web page via Crawlbase.

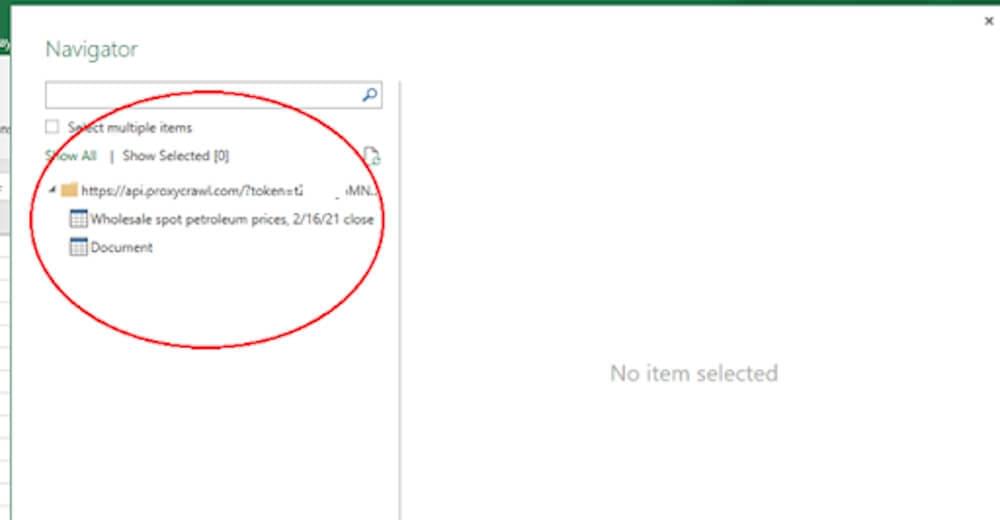

Next, the Navigator window will appear with a left-hand list of tables available on the target web page.

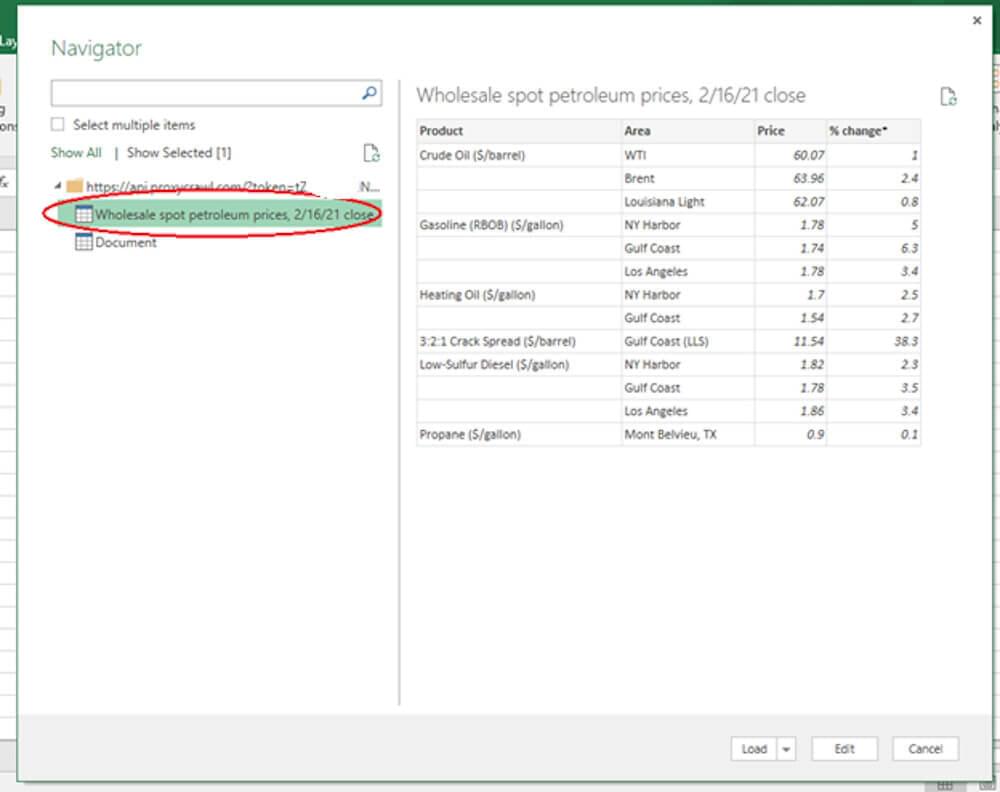

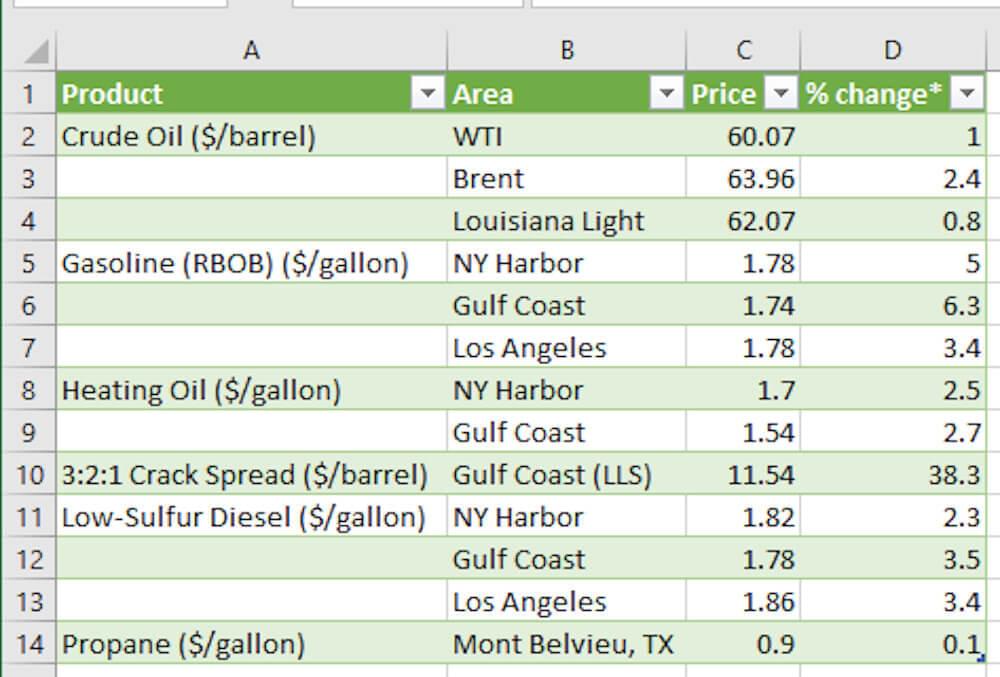

If you select any table, its preview appears on the right. Let’s select the **Wholesale spot petroleum prices…** table.

Next, click the Load button at the bottom of the window. The window also offers other options, such as the Edit button, which lets you transform the data before loading it.

After you click the Load button, Excel downloads the external data and places it in the spreadsheet.

That’s it!

How To Refresh Excel Data Automatically

Excel lets you refresh the downloaded data automatically instead of repeating the scraping process. This keeps the pulled data up to date, which matters most when the data on the target web page changes frequently.

However, if you repeatedly request a website to grab its data, the site may flag the traffic as unusual and block you from accessing its content. That’s why you need Crawlbase: it helps your requests look like those of an ordinary user, so you can get around access restrictions.
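Independently of Crawlbase, spacing requests out also lowers the chance of tripping a site’s rate limits. The Python sketch below uses a hypothetical `RefreshThrottle` class to mirror the idea behind a fixed refresh interval: a refresh runs only if a minimum number of seconds has passed since the last one. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional


class RefreshThrottle:
    """Hypothetical helper: allow a refresh only if at least `min_interval`
    seconds have passed since the previous successful refresh."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._last: Optional[float] = None  # time of the last refresh that ran

    def try_refresh(self, refresh: Callable[[], None]) -> bool:
        """Run `refresh` and return True, or skip it and return False if it is too soon."""
        now = self._clock()
        if self._last is not None and now - self._last < self.min_interval:
            return False  # too soon since the last refresh; skip this one
        self._last = now
        refresh()
        return True
```

For example, with a 60-second interval, refresh attempts at 0, 30, and 61 seconds run, are skipped, and run, respectively.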

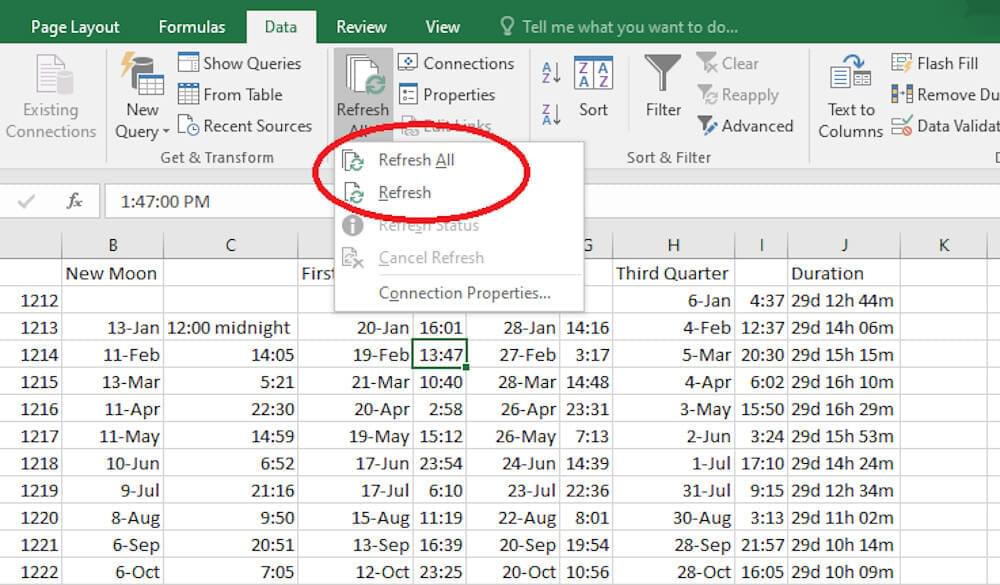

To refresh the web-queried data, click any cell in the data and open the Refresh All dropdown on the Data ribbon. Choose Refresh All to update every data connection in the workbook, or Refresh to update only the selected data.

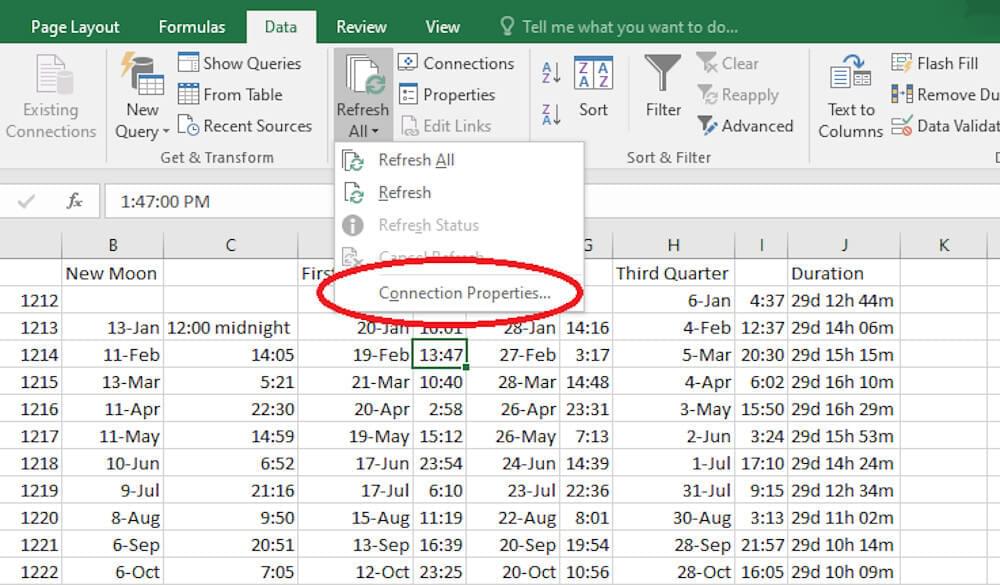

You can also instruct Excel to refresh the data automatically according to your specified criteria. To do so, click the Connection Properties… option.

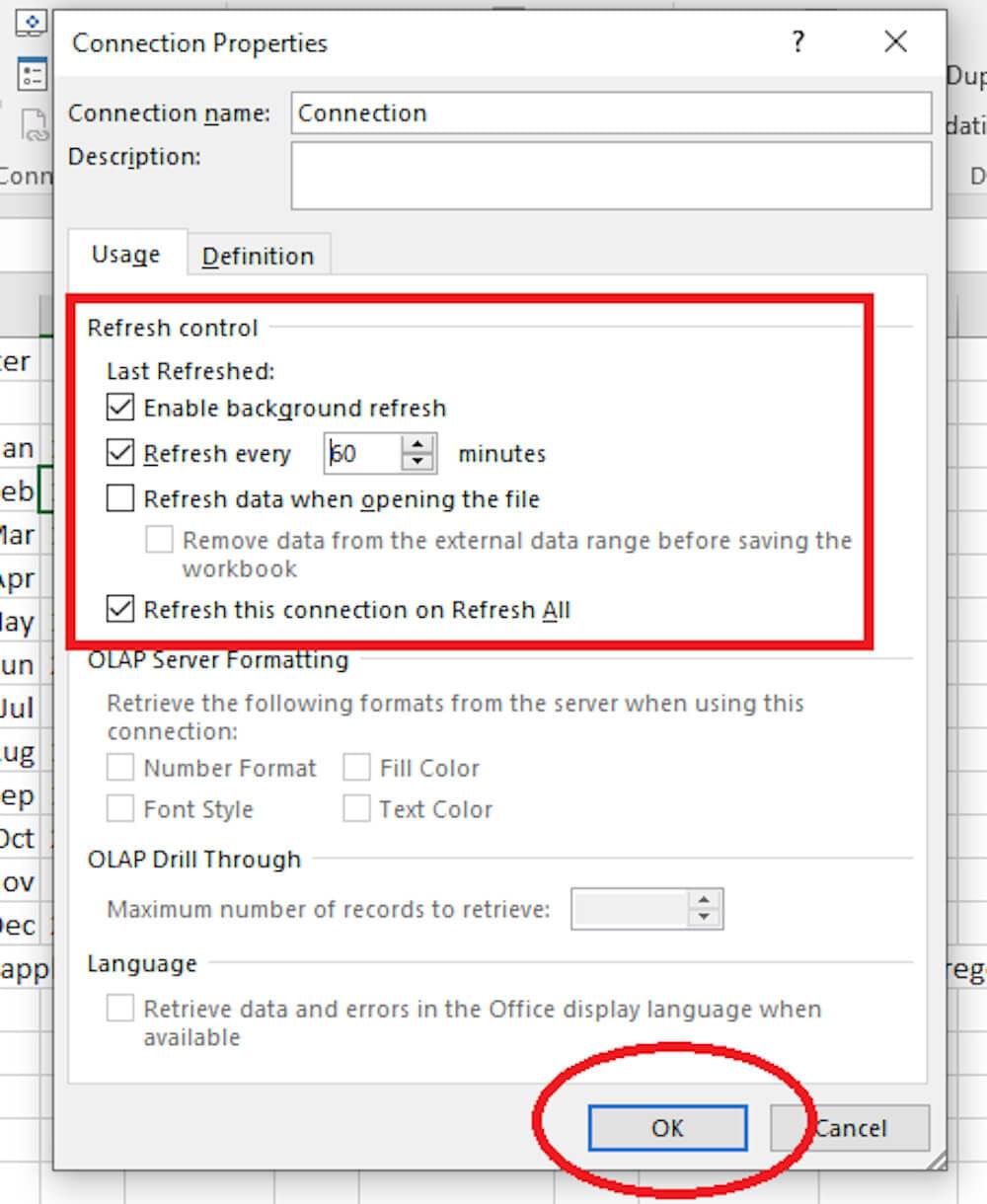

The Connection Properties dialog box will appear, letting you control how the scraped data refreshes. Under the Usage tab, you can enable background refresh, have Excel refresh the data every set number of minutes, refresh the data when the file is opened, and more.

Notice that the dialog box also lets you accomplish other tasks, such as adding a description to your connection, defining the maximum number of records to fetch, and more.

After specifying the refresh criteria, click the OK button.

Automation of Data Scraping from Websites to Excel

Extracting data from websites into Excel can be a daunting task, especially when dealing with complex page structures or multiple pages. While scraping tools for Excel can handle simple extraction, they often falter with intricate designs, leaving you to paste URLs, check the data, and clean it by hand.

But fear not! Platforms like Crawlbase can streamline the process to just a few clicks. Simply upload your list of URLs, and let Crawlbase scrape the data into Excel columns. Here’s how it works:

Data Extraction: With Crawlbase, you can scrape website data into Excel worksheets. You can extract data from any web page, including those with complex HTML structures.

Data Structuring: No more messy spreadsheets! Crawlbase identifies HTML structures and formats the data neatly, retaining table structures, fonts, and more.

Data Cleaning: Tired of manual tinkering? Crawlbase can quickly handle missing data points, format dates, replace currency symbols, and more using automated workflows.

Data Export: Choose your destination! Export the cleaned data to Google Sheets, Excel, CRM systems, or any other database of your choice.
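Crawlbase’s cleaning workflows aren’t shown in this article, so the following is only a generic Python sketch of the three cleanup steps mentioned above: handling missing values, normalizing dates, and stripping currency symbols. The column names `date` and `price`, the US-style `%m/%d/%Y` input format, and the comma thousands separator are all assumptions about the scraped data.

```python
from datetime import datetime
from typing import Optional

MISSING = ("", "-", "NA", "N/A")  # assumed markers for missing values


def clean_price(raw: str) -> Optional[float]:
    """Strip currency symbols and comma thousands separators; return None if missing."""
    text = raw.strip()
    if text in MISSING:
        return None
    for symbol in ("$", "€", "£", ","):
        text = text.replace(symbol, "")
    return float(text)


def clean_date(raw: str, fmt: str = "%m/%d/%Y") -> Optional[str]:
    """Convert a date string in `fmt` to ISO 8601 (YYYY-MM-DD); return None if missing."""
    text = raw.strip()
    if text in MISSING:
        return None
    return datetime.strptime(text, fmt).date().isoformat()


def clean_row(row: dict) -> dict:
    """Clean one scraped row with hypothetical 'date' and 'price' columns."""
    return {
        "date": clean_date(row.get("date", "")),
        "price": clean_price(row.get("price", "")),
    }
```

For example, `clean_row({"date": "12/31/2023", "price": "€2.10"})` yields `{"date": "2023-12-31", "price": 2.1}`, and a row with both fields missing yields `None` for each.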

And the best part? If you have specific needs, our team is here to help. We’ll work with you to set up automated workflows, ensuring every step of your web scraping process is smooth and efficient.

So why waste time on manual tasks when Crawlbase can automate them for you? Take the hassle out of web scraping and let Crawlbase speed up your data extraction.

Conclusion

That’s how to scrape data from a website into Excel. With the Excel web query feature, you can easily download data from websites and integrate it into your spreadsheet.

When you combine Excel with a powerful tool like Crawlbase, your data extraction tasks become more productive and run more smoothly.

Crawlbase lets you pull information from websites at scale while remaining anonymous. It’s the tool you need to avoid experiencing access blockades, especially if you’re refreshing scraped Excel data automatically.

Create a free Crawlbase account to get started.

Happy scraping!