Efficient and fast code is important for creating a great user experience in software applications. Users don’t like waiting for slow responses, whether it’s loading a webpage, training a machine learning model, or running a script. One way to speed up your code is caching.

The purpose of caching is to temporarily store frequently used data so that your program can access it quickly without recalculating or retrieving it each time. Caching can speed up response times, reduce load on backend systems, and improve the user experience.

This principle is especially critical in web scraping operations where repeated requests to the same endpoints can trigger rate limits or IP blocks. At Crawlbase, we’ve built intelligent caching directly into our solutions, allowing developers to focus on data analysis rather than request optimization. For your own Python applications, understanding these caching principles can similarly transform performance.

This blog will cover what caching is, why it matters, common use cases, eviction strategies, and examples of caching in Python. Let’s get started!

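Table of Contents

- What is a Cache in Programming?
- Purpose of Caching
  - Reduced Access Time
  - Reduced System Load
  - Improved User Experience
- Common Use Cases for Caching
  - Web Applications
  - Machine Learning
  - CPU Optimization
- Caching Strategies
  - First-In, First-Out (FIFO)
  - Last-In, First-Out (LIFO)
  - Least Recently Used (LRU)
  - Most Recently Used (MRU)
  - Least Frequently Used (LFU)
- Implementing Caching in Python
  - Manual Decorator for Caching
  - Using Python’s functools.lru_cache
- Performance Comparison of Caching Strategies
- Optimize Performance Through Strategic Caching
- Frequently Asked Questions (FAQs)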

What is a Cache in Programming?

A cache is a temporary storage location for frequently retrieved data. The data is kept on a faster storage medium, so it can be retrieved more quickly than from the original source. Consumer apps work the same way: Facebook, for example, stores temporary data in a cache, and if that data grows too large, you can clear the Facebook cache to reclaim space and refresh the app.

Purpose of Caching

Caching allows you to speed up applications by reducing the time and resources needed to get data. Here are the main reasons for caching:

1. Faster Access

Caching reduces the time it takes to retrieve data. When the app fetches data from the cache instead of slower sources like databases or APIs, its response time improves, and overall performance increases.

2. Less System Load

By storing frequently used data in a cache, you can minimize the number of requests you make to your database or other data source. This lightens the load on those systems, helps avoid bottlenecks, and increases overall system performance.

3. Better User Experience

Caching lets users get data quickly, which is especially important for real-time applications and web pages. Faster load times mean smoother interaction and a better user experience.

Caching is key to building fast and user-friendly apps. It saves time, reduces system stress, and keeps users happy.

Common Use Cases for Caching

Caching can be used in many places to speed up data access. Here are some common caching use cases:

1. Web Applications

Caching in web apps reduces the time it takes to pull data from databases or other APIs. By caching frequently used data like product lists or user settings, the app can serve requests without hitting the database repeatedly. This means faster page loads and a better user experience.

2. Machine Learning

Machine learning workloads often involve large datasets. Caching helps by storing frequently used data or model outputs, reducing the time it takes to process data. Expensive calculation results or preprocessed data can be cached so that training and model predictions run faster.
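A minimal sketch of caching a preprocessing step, assuming the input is a hashable value such as a string. The function `preprocess` and its lowercase-and-split logic are placeholders for whatever expensive transformation a real pipeline would run.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def preprocess(text):
    # Placeholder for an expensive step such as tokenization or feature extraction.
    # A tuple is returned so the cached value cannot be mutated by callers.
    return tuple(text.lower().split())


samples = ["Hello World", "hello world again", "Hello World"]
features = [preprocess(s) for s in samples]

print(preprocess.cache_info().hits)  # 1: the repeated sample came from the cache
```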

3. CPU Optimization

CPUs keep frequently used instructions and data in small, fast on-chip caches. This speeds up programs by reducing the time spent waiting on slower main memory. The CPU cache levels (L1, L2, L3) are key to performance in high-throughput tasks.

Caching is used in web apps, machine learning, and CPU-intensive tasks to speed up execution, reduce latency, and improve overall system performance. It works best when paired with efforts to optimize CPU performance, which together reduce processing time and system load.

Caching Strategies

A cache has limited space, so it needs a rule for deciding which item to remove when it is full. Here are some common caching strategies:

1. First-In, First-Out (FIFO)

FIFO is a simple caching strategy where the first item added to the cache is the first one to be removed when the cache is full. This works well for systems where the order of data access matters, like message queues.
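A minimal FIFO sketch built on `collections.OrderedDict`. The class name `FIFOCache` and its `get`/`put` methods are illustrative choices, and the capacity is assumed to be at least 1.

```python
from collections import OrderedDict


class FIFOCache:
    def __init__(self, capacity):
        self.capacity = capacity  # assumed to be >= 1
        self._data = OrderedDict()

    def get(self, key, default=None):
        # Reads do not change the eviction order in FIFO.
        return self._data.get(key, default)

    def put(self, key, value):
        if key not in self._data and len(self._data) >= self.capacity:
            self._data.popitem(last=False)  # evict the oldest insertion
        self._data[key] = value


cache = FIFOCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # reading "a" does not protect it from eviction
cache.put("c", 3)  # evicts "a", the first item inserted
print(list(cache._data))  # ['b', 'c']
```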

2. Last-In, First-Out (LIFO)

LIFO removes the most recently added item first when the cache is full. This is useful when older entries are more likely to be needed again than the newest one, as in some stack-based applications.
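A LIFO policy can be sketched with a plain dictionary, relying on the fact that `dict.popitem()` removes the most recently inserted key in Python 3.7 and later. The class name `LIFOCache` is hypothetical, and the capacity is assumed to be at least 1.

```python
class LIFOCache:
    def __init__(self, capacity):
        self.capacity = capacity  # assumed to be >= 1
        self._data = {}  # dicts preserve insertion order (Python 3.7+)

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        if key not in self._data and len(self._data) >= self.capacity:
            self._data.popitem()  # evict the most recently inserted key
        self._data[key] = value


cache = LIFOCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.put("c", 3)  # evicts "b", the last item inserted
print(list(cache._data))  # ['a', 'c']
```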

3. Least Recently Used (LRU)

LRU removes the least recently used items from the cache. This is useful for scenarios where frequently accessed data should be prioritized over older, less-used data. LRU is used in web apps and databases to fast-track access to popular data.
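One common way to hand-roll LRU in Python is an `OrderedDict` whose order tracks recency. The sketch below is illustrative rather than production code; `LRUCache` is a hypothetical name and the capacity is assumed to be at least 1.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity):
        self.capacity = capacity  # assumed to be >= 1
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        elif len(self._data) >= self.capacity:
            self._data.popitem(last=False)  # evict the least recently used key
        self._data[key] = value


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used
cache.put("c", 3)  # evicts "b", the least recently used
print(list(cache._data))  # ['a', 'c']
```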

4. Most Recently Used (MRU)

MRU is the opposite of LRU. It removes the most recently used data first. This is helpful when data that hasn’t been used in a while is more likely to be needed again.
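An MRU sketch tracks recency the same way an LRU cache would, but evicts from the opposite end. `MRUCache` is a hypothetical name, and the capacity is assumed to be at least 1.

```python
from collections import OrderedDict


class MRUCache:
    def __init__(self, capacity):
        self.capacity = capacity  # assumed to be >= 1
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        elif len(self._data) >= self.capacity:
            self._data.popitem(last=True)  # evict the most recently used key
        self._data[key] = value


cache = MRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used
cache.put("c", 3)  # evicts "a"
print(list(cache._data))  # ['b', 'c']
```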

5. Least Frequently Used (LFU)

LFU removes the data that has been used least often. This prioritizes data that is used more frequently and can increase the cache hit ratio.
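A simple LFU sketch keeps a use counter per key and evicts the key with the lowest count. The class name `LFUCache`, the choice to count a `put` as a use, and the tie-breaking rule (oldest insertion wins) are all assumptions made for this example. Eviction scans every key, so this version is only suitable for small caches.

```python
from collections import Counter


class LFUCache:
    def __init__(self, capacity):
        self.capacity = capacity  # assumed to be >= 1
        self._data = {}
        self._uses = Counter()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._uses[key] += 1
        return self._data[key]

    def put(self, key, value):
        if key not in self._data and len(self._data) >= self.capacity:
            # Evict the key with the lowest use count; ties go to the oldest insertion.
            victim = min(self._data, key=lambda k: self._uses[k])
            del self._data[victim]
            del self._uses[victim]
        self._data[key] = value
        self._uses[key] += 1  # storing a value counts as one use


cache = LFUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")
cache.get("a")     # "a" has now been used three times, "b" once
cache.put("c", 3)  # evicts "b", the least frequently used
print(list(cache._data))  # ['a', 'c']
```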

Choosing the right caching strategy will improve performance and ensure that the right data is available for fast access.

Implementing Caching in Python

Caching can be done in Python in multiple ways. Let’s look at two standard methods: using a manual decorator for caching and Python’s built-in functools.lru_cache.

1. Manual Decorator for Caching

A decorator is a function that wraps around another function. We can create a caching decorator that stores the result of function calls in memory and returns the cached result if the same input is called again. Here’s an example:

import requests

In this example, the first time get_html is called, it fetches the data from the URL and caches it. On subsequent calls with the same URL, the cached result is returned.
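The behavior described above can be sketched as follows. This is one possible shape for such a decorator, not necessarily the original listing: the decorator name `memoize`, the 10-second timeout, and the lazy import are assumptions, and the cache only handles positional, hashable arguments.

```python
import functools


def memoize(func):
    cache = {}  # maps argument tuples to previously computed results

    @functools.wraps(func)
    def wrapper(*args):
        if args not in cache:
            cache[args] = func(*args)  # first call: compute and store
        return cache[args]             # later calls: reuse the stored result

    return wrapper


@memoize
def get_html(url):
    # requests is a third-party package; it is imported here so the decorator
    # itself depends only on the standard library.
    import requests

    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text
```

Note that this cache never evicts anything, so it grows with every distinct URL. That is fine for short scripts but not for long-running processes.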

2. Using Python’s functools.lru_cache

Python provides a built-in caching mechanism called lru_cache from the functools module. This decorator caches function calls and removes the least recently used items when the cache is full. Here’s how to use it:

from functools import lru_cache

In this example, lru_cache caches the result of expensive_computation. If the function is called again with the same arguments, it returns the cached result instead of recalculating.
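A minimal sketch of this usage follows. The body of `expensive_computation` is a placeholder workload and `maxsize=128` is an arbitrary choice; `cache_info()` is the standard way to inspect hits and misses on a function wrapped by `lru_cache`.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def expensive_computation(n):
    # Placeholder workload: sum of squares below n.
    return sum(i * i for i in range(n))


expensive_computation(10_000)  # computed and stored
expensive_computation(10_000)  # returned from the cache

print(expensive_computation.cache_info())
# CacheInfo(hits=1, misses=1, maxsize=128, currsize=1)
```

Arguments must be hashable, and `expensive_computation.cache_clear()` empties the cache if the underlying data changes.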

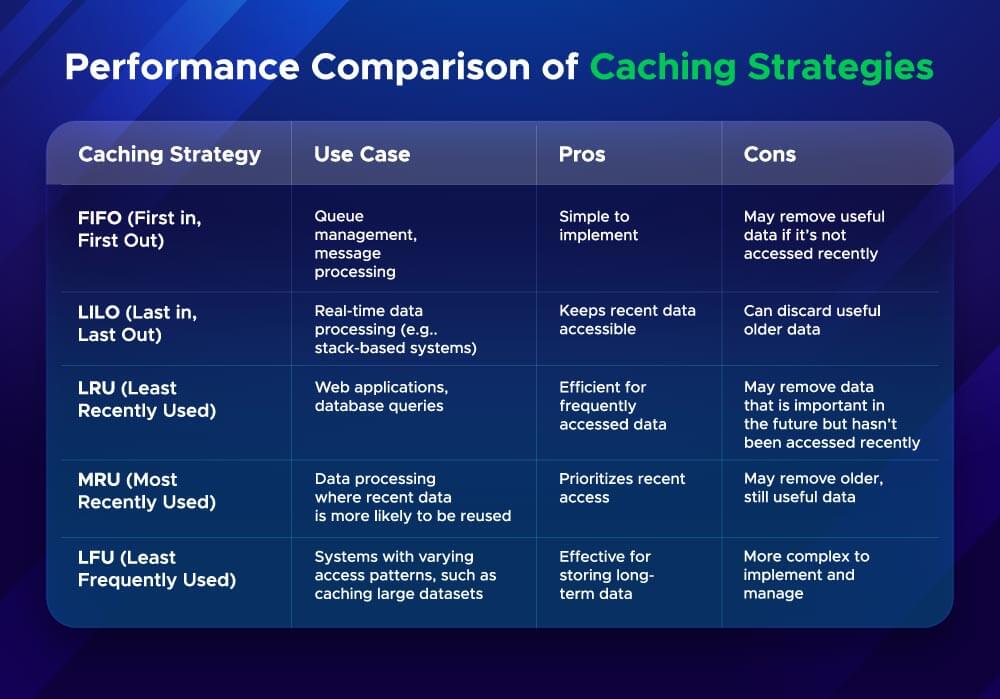

Performance Comparison of Caching Strategies

When choosing a caching strategy, you need to consider how each one performs under different conditions. A strategy’s performance depends on its cache hit rate (how often requested data is found in the cache) and on the size of the cache.

Here’s a comparison of common caching strategies:

Choosing the right caching strategy depends on your application’s data access patterns and performance needs.
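To see how cache size and access pattern interact, the sketch below measures the hit ratio of `functools.lru_cache` on a repeating access sequence. The helper `hit_ratio` and the sample sequence are made up for illustration.

```python
from functools import lru_cache


def hit_ratio(maxsize, accesses):
    # Build a fresh cached function so each measurement starts with an empty cache.
    @lru_cache(maxsize=maxsize)
    def lookup(key):
        return key * 2

    for key in accesses:
        lookup(key)

    info = lookup.cache_info()
    return info.hits / (info.hits + info.misses)


accesses = [1, 2, 3] * 4  # cycle through three keys, four times

print(hit_ratio(2, accesses))  # 0.0: each key is evicted just before it is needed again
print(hit_ratio(3, accesses))  # 0.75: after three initial misses, every access hits
```

A cache one slot too small for a cyclic workload never hits under LRU, which is the kind of pattern where a different strategy such as MRU can do better.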

Optimize Performance Through Strategic Caching

Caching can be very useful for your apps. It can reduce data retrieval time and system load. Caching methods such as FIFO, LRU, and LFU have different use cases. For example, LRU is good for web apps that need to keep recently accessed data at hand, whereas LFU is good for programs where a stable set of items stays popular over time.

When working with web data at scale, implementing these caching strategies becomes even more crucial. Crawlbase’s products incorporate advanced caching technologies similar to those discussed in this article, but purpose-built for web scraping scenarios. Sign up today to experience how professional-grade caching can transform your data collection projects.

Implementing caching correctly will let you design faster, more efficient apps and get better performance and user experience.

Frequently Asked Questions (FAQs)

Q. What is caching in Python?

Caching in Python is a way to store the result of expensive function calls or data retrieval so that future requests for the same data can be served faster. By storing frequently used data in temporary memory (a cache), you can load data faster and speed up your app.

Q. How do I choose the best caching strategy?

It depends on your app and how it uses data. For example:

- LRU (Least Recently Used) for data where recently accessed items are likely to be needed again soon.

- FIFO (First-In, First-Out) for when order matters.

- LFU (Least Frequently Used) for when some data is used way more than others.

Choose the right one and you’ll get better data access and performance.

Q. How can I implement caching in Python?

You can cache in Python in several ways. Here are two:

- Manual Decorators: Create a custom caching system with a decorator to store and retrieve function results.

- functools.lru_cache: This is a built-in Python decorator that does LRU caching.

Both will speed up your code depending on your use case.