If you want to keep up with product prices across every online store or e-commerce website, you will need to spend a lot of time monitoring each of the pages, especially when these prices change without warning. Doing it manually can be exhausting and presents a major roadblock to staying on top of market trends while keeping your products competitive.

A broad, complex task like price intelligence calls for automation. In this post, we’ll show you how to build a pricing scraper with Python and Crawlbase that makes the job faster, easier, and more reliable.

Benefits of Web Scraping for Price Intelligence

Web scraping has become an essential tool for collecting data, especially when it comes to tracking product information across various e-commerce websites. Most market analysts and businesses use it to gather pricing data from different sources and make informed decisions. Many companies now rely on web scraping to shape and adjust their pricing strategies.

Let’s take a closer look at the key benefits:

Real-time Data Monitoring

One powerful use of web scraping is real-time data extraction. With the proper setup, a scraper can automatically pull up-to-date information from e-commerce sites such as Amazon or eBay, targeting specific product pages to monitor details like price and availability. The process can be fully automated to run as often as every minute, so you can track changes as they happen without visiting each site manually. The scraper handles everything in the background.
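To make "tracking changes as they happen" concrete, here is a minimal sketch of the comparison a monitoring job might run between two polling cycles. The function name, the snapshot shape (URL mapped to price), and the sample values are all hypothetical; the scheduling itself (a cron job or a loop that sleeps for 60 seconds) is left out.

```python
def detect_price_changes(previous: dict, current: dict) -> dict:
    """Return {product_url: (old_price, new_price)} for every price that changed."""
    changes = {}
    for url, new_price in current.items():
        old_price = previous.get(url)
        # Products seen for the first time have no old price to compare against.
        if old_price is not None and old_price != new_price:
            changes[url] = (old_price, new_price)
    return changes


# Made-up snapshots taken one polling interval apart.
earlier = {"https://example.com/item-a": 999.00, "https://example.com/item-b": 1099.00}
later = {"https://example.com/item-a": 949.00, "https://example.com/item-b": 1099.00}

print(detect_price_changes(earlier, later))
```

Only the entries returned by this function need to trigger an alert or a database write, which keeps a per-minute schedule cheap to process.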

Analyzing Market Trends

The data you extract with a web scraper can be organized to help data analysts and decision-makers understand price movements and uncover valuable business insights. Your team gets a bird’s-eye view of the current market landscape, which makes it easier to identify emerging trends.

Optimizing Your Pricing Strategy

In some cases, you will need a closer look. Web scrapers can be adjusted to target specific data points in service of specific goals. For example, you can set your scraper to monitor Amazon, eBay, or Walmart pages automatically and gather pricing information across your product catalog every minute. You can then arrange the data to reveal which competitors are offering the best deals at any given moment. This level of detail lets you be more precise with your marketing decisions.
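As an illustration of "arranging the data to reveal the best deals", the sketch below picks the cheapest offer for each product from a flat list of records. The record keys (`product`, `source`, `price`) and the sample offers are assumptions made for this example, not a format defined by any scraper.

```python
def cheapest_offer_per_product(offers: list) -> dict:
    """Map each product name to the offer with the lowest price."""
    best = {}
    for offer in offers:
        name = offer["product"]
        if name not in best or offer["price"] < best[name]["price"]:
            best[name] = offer
    return best


# Hypothetical offers collected from three stores.
sample_offers = [
    {"product": "Phone X", "source": "Amazon", "price": 1199.00},
    {"product": "Phone X", "source": "eBay", "price": 1149.00},
    {"product": "Phone X", "source": "Walmart", "price": 1179.00},
    {"product": "Tablet Y", "source": "Amazon", "price": 499.00},
]

for name, offer in cheapest_offer_per_product(sample_offers).items():
    print(f"{name}: {offer['source']} at {offer['price']:.2f}")
```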

How Crawlbase Helps with Price Intelligence

It is clear that price intelligence benefits greatly from web scraping, but building a web scraper from scratch is not a simple task. Many businesses struggle because they are unwilling to invest in a reliable web scraper.

This is not just about hiring experts to build the tool for you. Developers also need to find the proper tool to deal with common scraping challenges in the most efficient way, and that’s where Crawlbase helps.

The Crawling API

Crawlbase’s main product, the Crawling API, simplifies web scraping for you. It is an API designed to crawl a web page with a single request. All you have to do is provide your target URL and send it through the API endpoint. The API takes care of most scraping roadblocks under the hood.
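A minimal sketch of what "a single request" looks like, assuming the API is reached at `https://api.crawlbase.com/` and takes `token` and `url` query parameters; confirm both against the current Crawlbase documentation. The helper name is hypothetical, and the code only builds the request URL, so it runs without a token or network access.

```python
from urllib.parse import urlencode

# Assumed base URL of the Crawling API; verify it in the Crawlbase docs.
CRAWLING_API_ENDPOINT = "https://api.crawlbase.com/"


def build_crawl_request_url(token: str, target_url: str) -> str:
    """Build the GET URL that asks the API to crawl target_url."""
    # urlencode percent-encodes the target URL so its own query string
    # is not confused with the API's parameters.
    query = urlencode({"token": token, "url": target_url})
    return f"{CRAWLING_API_ENDPOINT}?{query}"


request_url = build_crawl_request_url("YOUR_TOKEN", "https://www.amazon.com/s?k=laptop")
print(request_url)
```

Any HTTP client can then fetch `request_url`; the rest of this guide uses `requests.get` with a `params` dictionary, which performs the same encoding for you.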

Key Advantages of Price Scraping with Crawlbase

- Real-Time Data Access - Crawlbase does not store or archive any data. It is meant to crawl whatever data is currently available on a target web page. This ensures that you only get fresh and accurate information whenever you send your requests to the API.

- Bypass Common Scraper Roadblocks - Crawlbase solves common web scraping problems like bot detection, CAPTCHAs, and rate limits. The API uses thousands of consistently maintained proxies and is powered by AI to keep the success rate close to 100%. It also handles JavaScript rendering, so you do not need to configure headless browsers or user agents manually in your code.

- Easy and Scalable Integration - You can call the API with a single line of code, from any programming language that can make HTTP or HTTPS requests. Crawlbase also offers free libraries and SDKs that simplify integration as you scale.

- Cost-Effective Solution - Since Crawlbase handles the most complex parts of web scraping, it can have a big impact on the overall cost of your project. Paying a small fee for the solution often makes more sense than spending most of your budget resolving those issues on your own.

Step-by-Step Guide: How to Build a Price Scraper

In this section, we’ll walk you through building a scraper for some of the biggest online shops using the Crawling API and show you just how easy it is to set up while highlighting its key capabilities.

Selecting Target Websites (Amazon and eBay)

Before you start writing code, it’s important to plan ahead: decide which websites you want to target, what data points you need to extract, and how you plan to use that data. Many developers naturally begin with popular sites like Amazon and eBay because they offer rich, diverse datasets that are valuable for applications like price intelligence.

For this coding exercise, we’ll focus on the following SERP URLs as our targets:

Amazon

eBay

Set Up Your Workspace

At this point, you’ll need to choose your preferred programming language. For this example, we’ll use Python, as it’s widely considered one of the easiest languages to get started with. That said, you’re free to use any other language; follow along and apply the same logic provided here.

Set Up Your Coding Environment

- Install Python 3 on your computer.

- Create a root directory in your filesystem.

      mkdir price-intelligence

- Under the root directory, create a file named requirements.txt, and add the following entries:

      requests

Then run:

    python -m pip install -r requirements.txt

- Obtain Your API Credentials

- Create an account at Crawlbase and log in

- After registration, you will receive 1,000 free requests

- Go to your account dashboard and copy your Crawling API Normal requests token
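Rather than pasting the token into every script, one common option is to read it from an environment variable. This is a sketch, not part of the original project: the variable name `CRAWLBASE_TOKEN` and the helper `load_crawlbase_token` are hypothetical.

```python
import os


def load_crawlbase_token(env_var: str = "CRAWLBASE_TOKEN") -> str:
    """Read the Crawling API token from the environment, failing loudly if absent."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Crawling API token."
        )
    return token
```

With this in place, you would export the variable once in your shell and call `load_crawlbase_token()` wherever the scripts below use a hard-coded token. It also keeps the token out of version control.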

Sending Requests Using Crawlbase

Let’s start by building the scraper for the Amazon Search Engine Results Page. In the root directory of your project, create a file named amazon_serp_scraper.py, and follow along.

Add the import statement to your script.

    import requests

Add a function that searches for products on Amazon using a given query, with optional parameters for the country code and top-level domain (such as .com or .co.uk), and returns a list of product dictionaries.

    def get_products_from_amazon(query: str, country: str = None, top_level_domain: str = 'com') -> list[dict]:

Set up your API token and the Crawlbase endpoint used to send the request.

    API_TOKEN = "<Normal requests token>"

Construct the parameters for the GET request by encoding the search query for the Amazon URL and specifying the Amazon SERP scraper along with an optional country parameter.

    params = {
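The parameter construction described above can be sketched as a standalone helper. The parameter names (`token`, `url`, `scraper`, `country`), the scraper name `amazon-serp`, and the Amazon search URL pattern are assumptions based on how the step is described; check them against the Crawlbase documentation. The helper name is hypothetical.

```python
from urllib.parse import quote_plus


def build_amazon_serp_params(token, query, country=None, top_level_domain="com"):
    """Build the query parameters for a Crawling API request to an Amazon SERP."""
    # quote_plus turns spaces into '+' and escapes reserved characters,
    # which is the form Amazon's search URL expects for its 'k' parameter.
    search_url = f"https://www.amazon.{top_level_domain}/s?k={quote_plus(query)}"
    params = {
        "token": token,
        "url": search_url,
        "scraper": "amazon-serp",  # assumed name of the Amazon SERP scraper
    }
    if country:
        # Only send the country when one was requested.
        params["country"] = country
    return params


params = build_amazon_serp_params(
    "YOUR_TOKEN", "Apple iPhone 15 Pro Max 256GB", country="US", top_level_domain="co.uk"
)
print(params["url"])
```

Keeping this step in its own function makes it easy to check the generated URL before spending any API requests.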

Send the request to the Crawling API, then parse and return the product data.

    response = requests.get(API_ENDPOINT, params=params)
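Parsing can be kept separate from the network call, which makes it easier to test. The sketch below assumes the scraper returns JSON whose `body` object holds a `products` list; that shape is an assumption, so compare it with a real response before relying on it. The function name is hypothetical.

```python
import json


def extract_products(response_text: str) -> list:
    """Return the product list from a scraper response, or [] if it is missing."""
    try:
        payload = json.loads(response_text)
    except json.JSONDecodeError:
        # A non-JSON body (for example an HTML error page) yields no products.
        return []
    body = payload.get("body") if isinstance(payload, dict) else None
    products = body.get("products") if isinstance(body, dict) else None
    return products if isinstance(products, list) else []


# Hand-written stand-in for a response body, not real API output.
sample_text = '{"body": {"products": [{"name": "Phone", "price": "$999.00"}]}}'
print(extract_products(sample_text))
```

In the scraper itself, you would pass `response.text` to this function after checking `response.status_code`.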

To execute the program, add the following code snippet to the end of the script:

    if __name__ == "__main__":

You can modify the values in the function call to suit your needs. For example, replace “Apple iPhone 15 Pro Max 256GB” with any other product name, change “US” to a different country code, or update “co.uk” to another Amazon domain such as “com” or “de”.

Complete code example:

    import requests

Run the script from the terminal using the following command:

    python amazon_serp_scraper.py

Once successful, you will see the following raw data output:

    [

Next, create a new file named ebay_serp_scraper.py for the eBay integration, and add the following code using the same approach we applied in the Amazon scraper.

    import requests

After you run python ebay_serp_scraper.py in your terminal, you should see raw output like the following:

    [

Extracting and Structuring Price Data

While the current API output is clean and provides valuable information, not all of it is relevant to our specific goal in this context. In this section, we’ll demonstrate how to further standardize and parse the data to extract only the specific data points you need.

Let’s create a new file named structured_consolidated_data.py and insert the following code:

    from amazon_serp_scraper import get_products_from_amazon

This script pulls relevant product data from Amazon and eBay through the Crawlbase data scrapers. It extracts key details such as source, product, price, and URL from each search result and combines them into a single, easy-to-use list.
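The consolidation step can be sketched as a small normalizer that maps each raw item onto the four fields named above. The raw key names used here (`name` or `title`, `price`, `url`) are assumptions about the scraper output, and the helper names and sample items are hypothetical; adjust them to match the fields you actually receive.

```python
def to_record(source: str, item: dict) -> dict:
    """Reduce one raw search result to the fields used for price comparison."""
    return {
        "source": source,
        # Assumed: a result carries its label under 'name' or 'title'.
        "product": item.get("name") or item.get("title"),
        "price": item.get("price"),
        "url": item.get("url"),
    }


def consolidate(amazon_items: list, ebay_items: list) -> list:
    """Merge results from both stores into one list of uniform records."""
    records = [to_record("Amazon", item) for item in amazon_items]
    records += [to_record("eBay", item) for item in ebay_items]
    return records


# Made-up raw items that mimic the assumed field names.
amazon_raw = [{"name": "Phone 256GB", "price": "$1,199.00", "url": "https://example.com/a", "rating": 4.7}]
ebay_raw = [{"title": "Phone 256GB (used)", "price": "$950.00", "url": "https://example.com/e"}]

for record in consolidate(amazon_raw, ebay_raw):
    print(record)
```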

Here is the complete code:

    from amazon_serp_scraper import get_products_from_amazon

Run the script from the terminal using the following command:

    python structured_consolidated_data.py

Once you get a successful response, the consolidated output is displayed in a structured format, with each entry labeled by its source rather than shown as raw scraper output.

    [

Make Use of the Scraped Data

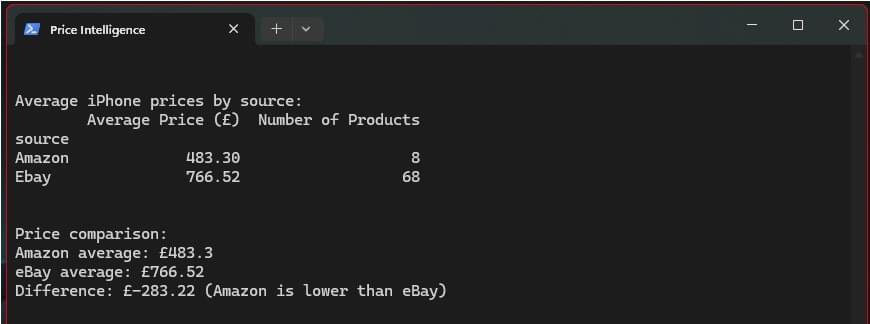

Now that we’ve successfully parsed the relevant data for our needs, we can begin applying it to basic pricing intelligence. For example, we can write a script to calculate and show the average price of the “Apple iPhone 15 Pro Max 256GB” listed on Amazon and compare it to the average price on eBay.
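The core of that comparison can be sketched with the standard library alone, as an alternative to the pandas-based script in this section. It assumes each record has `source` and `price` keys and that prices are numbers or US-style strings such as "$1,199.00"; formats like "1.199,00" would need different parsing. All names and sample values are hypothetical.

```python
import re
from statistics import mean
from typing import Optional


def parse_price(raw) -> Optional[float]:
    """Convert a price such as '$1,199.00' or 1199 to a float; None if no number is found."""
    if isinstance(raw, (int, float)):
        return float(raw)
    match = re.search(r"\d[\d,]*(?:\.\d+)?", str(raw))
    return float(match.group().replace(",", "")) if match else None


def average_price_by_source(records: list) -> dict:
    """Average the parseable prices for each source, rounded to two decimals."""
    prices_by_source = {}
    for record in records:
        price = parse_price(record.get("price"))
        if price is not None:  # skip listings without a usable price
            prices_by_source.setdefault(record["source"], []).append(price)
    return {source: round(mean(prices), 2) for source, prices in prices_by_source.items()}


# Made-up records in the consolidated format.
sample_records = [
    {"source": "Amazon", "price": "$1,199.00"},
    {"source": "Amazon", "price": "$1,099.00"},
    {"source": "eBay", "price": "$950.00"},
    {"source": "eBay", "price": 1000},
    {"source": "eBay", "price": "N/A"},
]

print(average_price_by_source(sample_records))
```

Listings without a parseable price are skipped rather than counted as zero, so they do not drag an average down.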

Create a new file named market_average_price.py and insert the following code:

    import pandas as pd

To run the script, execute the following command in your terminal:

    python market_average_price.py

Once successful, you’ll see output similar to the following:

Explore the full codebase on GitHub.

Handling Challenges of Price Scraping in Python

Rate Limits and Success Rate

The default rate limit for the Crawling API is 20 requests per second for e-commerce sites. If you start seeing 429 errors, you’ve hit this limit. The limit can be increased depending on your traffic volume and specific needs; contact Crawlbase customer support to request a change.
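One way to stay under the limit is to pace requests on the client side. The sketch below is a simple minimum-interval throttle, not a Crawlbase feature; the class name is hypothetical, and the clock and sleep functions are injectable only so the behavior can be tested without waiting.

```python
import time


class RequestThrottle:
    """Space out calls so that no more than max_per_second start each second."""

    def __init__(self, max_per_second: float = 20.0, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = float("-inf")  # the first call never waits

    def wait(self) -> None:
        """Block until the next request is allowed, then reserve the following slot."""
        now = self._clock()
        if now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self._min_interval
```

Call `throttle.wait()` immediately before each API request. With the default of 20 per second, consecutive requests are spaced at least 50 ms apart. This version is meant for a single thread; concurrent workers would need a lock around `wait`.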

Success rates can vary because some websites are unpredictable, but Crawlbase typically delivers a success rate above 95%. You’re also not charged for failed requests, so you can simply resend them to the API.
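Resending failed requests is usually wrapped in a retry loop with exponential backoff. The sketch below is one hedged way to do it: `fetch` stands for any zero-argument callable you supply that returns a `(status_code, body)` pair. Treating 429 and 5xx statuses as retryable is an assumption, not documented Crawlbase behavior.

```python
import time


def fetch_with_retries(fetch, max_attempts: int = 4, base_delay: float = 1.0, sleep=time.sleep):
    """Call fetch() until it returns status 200, backing off 1s, 2s, 4s, ... between tries."""
    last_status = None
    for attempt in range(1, max_attempts + 1):
        last_status, body = fetch()
        if last_status == 200:
            return body
        # Assumption: rate limiting and server-side errors are worth retrying;
        # anything else (such as a bad token) will not fix itself.
        if not (last_status == 429 or last_status >= 500):
            break
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"Request failed with status {last_status}")
```

With `requests`, the callable could be a small lambda that performs `requests.get(...)` and returns `(response.status_code, response.text)`.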

Dealing with JavaScript-Rendered Content

Some pages require JavaScript to render the data you need. In these cases, you can switch from the normal request token to the JavaScript token. This allows the API to use its headless browser infrastructure to render JavaScript content properly.

If you notice missing data in the API response, it’s a good indication that it’s time to switch to the JavaScript token.
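That rule of thumb can be automated. In this sketch, `fetch_products` is a hypothetical callable you provide that takes a token and returns a list of product dictionaries, and the required field names are assumptions; the fallback retries with the JavaScript token only when the first result looks incomplete.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical field names; match your scraper output


def looks_incomplete(products, required_fields=REQUIRED_FIELDS) -> bool:
    """True if there are no products or any product lacks a required field."""
    if not products:
        return True
    return any(not item.get(field) for item in products for field in required_fields)


def fetch_with_js_fallback(fetch_products, normal_token: str, javascript_token: str):
    """Try the normal token first; retry with the JavaScript token if data is missing."""
    products = fetch_products(normal_token)
    if looks_incomplete(products):
        products = fetch_products(javascript_token)
    return products
```

The fallback costs a second request, so if a site consistently needs rendering, it is simpler to use the JavaScript token for it from the start.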

Ensuring Accuracy and Freshness

It’s always a good practice to verify the accuracy and freshness of the data you collect through scraping. You can manually check prices from time to time as an added measure, but Crawlbase ensures that the data you receive from the API reflects the most up-to-date information available at the time of the crawl.

Crawlbase does not alter or manipulate any data retrieved from your target website.
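On your side, freshness is easier to verify if every record carries the time it was crawled. The sketch below is one way to do that; the `crawled_at` field and both helper names are hypothetical additions, not part of the API response.

```python
from datetime import datetime, timedelta, timezone


def stamp(record: dict, now=None) -> dict:
    """Return a copy of the record with a UTC 'crawled_at' timestamp attached."""
    crawled_at = now or datetime.now(timezone.utc)
    return {**record, "crawled_at": crawled_at.isoformat()}


def is_stale(record: dict, max_age: timedelta, now=None) -> bool:
    """True if the record was crawled longer ago than max_age."""
    now = now or datetime.now(timezone.utc)
    crawled_at = datetime.fromisoformat(record["crawled_at"])
    return now - crawled_at > max_age
```

A price dashboard could, for example, hide or re-crawl any record for which `is_stale(record, timedelta(minutes=5))` is true.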

Go ahead and test the Crawling API now. Create an account to receive your 1,000 free requests. These requests can be used to evaluate the API’s response before making any commitment, making it a risk-free opportunity that could significantly benefit your projects.

Frequently Asked Questions (FAQs)

Q1: What is web scraping for price?

Web scraping for price is the process of extracting pricing information automatically from online sources such as e-commerce websites, product listings, or competitor platforms. Businesses use web scraping tools like Crawlbase to extract real-time price data, helping them monitor fluctuations, track discounts, and gain insight into market trends. This enables smarter pricing decisions and improved responsiveness to competitors.

Q2: What is scraping pricing data?

Scraping pricing data refers to the use of automation scripts to gather structured pricing information from online platforms. These insights can then be analyzed to support pricing strategies, benchmark against competitors, or feed into analytics dashboards for ongoing market evaluation.

Q3: What is the role of web scraping in competitive price intelligence and market research?

Scraping competitor prices lets businesses track how rivals price their products, understand market positioning, and identify pricing trends across regions and channels. This data supports strategic planning, demand forecasting, and real-time pricing optimization.