In 2025, unblocking Amazon with proxies can be challenging, as the tech giant constantly upgrades its systems to block automated traffic, making it difficult to access data on Amazon.

But that doesn’t mean scraping the data you need is impossible. Today, we’ll introduce you to a reliable way to unblock Amazon data using Smart AI Proxy, a service that gives you access to rotating IP addresses from a pool of millions of proxies.

This guide shows you how to get past Amazon proxy blocks and scrape data reliably using Crawlbase’s Smart AI Proxy. Consider it a complete guide to scraping Amazon effectively.

Table of Contents

- Why Amazon Blocks Crawlers and Proxies

- Introducing Smart AI Proxy

- Amazon Proxy Unblocker: Complete Setup Guide

- Unblocking Amazon with Smart AI Proxy: A Practical Use Case

- Unlock Amazon Scraping with Smart AI Proxy

- Frequently Asked Questions

Why Amazon Blocks Crawlers and Proxies

Every month, Amazon handles billions of connections all over the world, many of which aim to access valuable e-commerce data. While a majority of these come from regular shoppers, a significant portion is still generated by bots and crawlers.

Ever seen this page? Yes, you can thank bots for that. It’s just one of the many protective layers Amazon uses to safeguard its website. By blocking automated traffic, they help maintain the platform’s stability, reduce operational costs, and ensure a smooth experience for real users.


Understanding Amazon’s Defenses Against Bots

With the ever-growing thirst for data, Amazon has naturally adapted to fight unwanted traffic, resulting in one of the most advanced anti-bot systems in the industry today. Its defenses are specifically designed to combat non-human activity, protect its infrastructure, and ensure a smooth experience for real users.

Amazon’s bot protection relies on a combination of the following:

- JavaScript challenges and CAPTCHAs - One of the most common forms of anti-bot protection is a page that checks if the visitor is a real person. It usually shows an image with distorted letters, and you’re asked to type the correct characters to prove you’re human.

- Rate Limiting - Although Amazon doesn’t publicly share its rate-limiting rules, it’s a well-known challenge in the scraping community. Real-world experience has shown that sending too many requests in a very short period of time often leads to getting blocked.

- IP reputation and Geolocation - As the word implies, IP reputation is a measure of how trustworthy an IP address is based on its behavior. Suspicious IPs are often blacklisted right away, and even residential IPs from unsupported regions can still trigger blocks.

- Device Fingerprinting - This typically involves the detection of browser headers, user agents, and plugins. These details are analyzed and you may get flagged if the established connection looks off.

- Behavioral Analysis - Amazon also monitors how users interact with the site. Bots often fail to replicate human behavior realistically, triggering defenses.

All these systems working together make Amazon scraping one of the most challenging tasks to perform reliably.
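Since rate limiting is one of the layers listed above, a common client-side courtesy is to space out retries instead of hammering the site. The sketch below shows capped exponential backoff with jitter. Amazon does not publish its thresholds, so the base delay, the cap, and the function name backoff_delays are illustrative assumptions, not recommended values.

```python
import random


def backoff_delays(attempts, base=1.0, cap=30.0, rng=None):
    """Return sleep durations (in seconds) for successive retries.

    Capped exponential backoff with "full jitter": the delay for retry i
    is drawn uniformly from [0, min(cap, base * 2**i)].
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * (2 ** attempt))
        delays.append(rng.uniform(0, ceiling))
    return delays


if __name__ == "__main__":
    # A seeded generator keeps this demo repeatable.
    for i, delay in enumerate(backoff_delays(5, rng=random.Random(0)), start=1):
        print(f"retry {i}: sleep {delay:.2f}s")
```

In a crawler, you would call time.sleep() with each value before the next retry. The jitter keeps many workers from retrying at the same instant.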

Introducing Smart AI Proxy

Amazon’s net income continues to grow each year, which is why many industries rely on Amazon data. With Amazon working hard to keep artificial traffic off its site, the only way to overcome these challenges is to step up your game.

What Makes These Proxies “Smart”

Smart AI Proxy is one of the best Amazon proxies on the market because it directly counters the platform’s bot protection layers. At its core is an AI trained to use some or all of the following key features:

- Rotating IP addresses - Smart AI Proxy intelligently rotates your requests across thousands of IPs instead of relying on just one that could easily get blocked or flagged by websites. This smart switching helps you avoid rate limits and bans, meaning fewer retries and a much higher success rate.

- High-Quality IPs - Smart AI Proxy uses a mix of data center, residential, and mobile IPs, all carefully monitored and maintained to make sure each one stays trustworthy. This is especially important when dealing with platforms like Amazon, which have strict anti-bot systems that can easily flag suspicious activity.

- Smart Geolocation - Because of the built-in AI and machine learning, Smart AI Proxy can automatically pick the best IP location based on the website you’re targeting. But if you prefer more control, you can also manually choose the country you want the request to appear from.

- Adaptive User-Agent - Unlike static or purely random user agents, Smart AI Proxy intelligently selects the user agent that best fits the target website’s expectations (e.g., mobile vs. desktop, browser version, or location). This increases the chances of successful access and helps avoid detection.

Easy Setup and Flexible Protocols

Smart AI Proxy isn’t just a smart way to unblock Amazon; it’s also built to fit right into your existing setup. All you need is the proxy host, port, and your authentication key to get started.

The Crawlbase Smart AI Proxy supports both HTTP and HTTPS protocols:

- HTTP: smartproxy.crawlbase.com:8012

- HTTPS: smartproxy.crawlbase.com:8013

The HTTPS option adds an extra layer of security, with SSL/TLS encryption handled directly at the proxy level. Just keep in mind that client-side SSL verification is disabled, so if you’re using curl, you’ll need the -k flag.

This makes it more versatile and enterprise-ready, allowing users to choose their preferred connection method based on their security requirements.
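To make the two endpoints concrete, here is a minimal sketch of how the host, ports, and token could be combined into the proxies mapping that the Python requests library expects. The helper name build_proxies and the secure flag are our own illustrative choices. The token-as-username format (with an empty password) follows the proxy URL format described later in this guide.

```python
from urllib.parse import quote

PROXY_HOST = "smartproxy.crawlbase.com"
HTTP_PORT = 8012   # plain HTTP connection to the proxy
HTTPS_PORT = 8013  # SSL/TLS connection to the proxy


def build_proxies(token, secure=False):
    """Build a requests-style proxies mapping for the chosen endpoint.

    The token fills the username slot and the password is left empty,
    hence the colon directly before the '@'.
    """
    scheme, port = ("https", HTTPS_PORT) if secure else ("http", HTTP_PORT)
    proxy_url = f"{scheme}://{quote(token, safe='')}:@{PROXY_HOST}:{port}"
    # Send both http:// and https:// target URLs through the same proxy.
    return {"http": proxy_url, "https": proxy_url}
```

Keeping the endpoint choice behind one flag makes it easy to switch between the HTTP and HTTPS options without touching the rest of your crawler.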

Amazon Proxy Unblocker: Complete Setup Guide

In this section, we’ll show you the step-by-step procedure for protecting your web crawler from being flagged or blocked by Amazon.

Setting Up Your Coding Environment

Before building your Amazon proxy unblocker, you’ll need to set up a basic Python environment. Here’s how to get started:

- Install Python 3 on your computer

- Install the requests module, which makes it easy to send HTTP requests in Python.

```shell
python -m pip install requests
```

Note: You can write and run your code using any text editor, but using an IDE can speed things up. Tools like PyCharm or VS Code are great for writing Python code, especially for beginners, as they include helpful features like syntax highlighting, error checking, and debugging tools.

Obtaining Credentials

- Sign up for a Crawlbase account and log in to receive your 5,000 free requests

- Get your Smart AI Proxy Private token

Making Your First Successful Request

At this point, your coding environment should be ready. Let’s try sending your first request.

In this example code, we’ll try to fetch the HTML content of this Amazon product details page. You are free to copy this code, but make sure to replace the Private_token with the actual token or authentication key obtained from your Crawlbase account.

```python
import requests
```

You may refer to our GitHub repository for the source code.
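As a rough sketch of what such a first request can look like, the snippet below sends one GET request through the HTTPS endpoint with SSL verification disabled. The product URL is a hypothetical placeholder, the function name fetch_page and the 90-second timeout are our assumptions, and Private_token must be replaced with your own token.

```python
import requests

PRIVATE_TOKEN = "Private_token"  # placeholder: replace with your Crawlbase token
PROXY_URL = f"https://{PRIVATE_TOKEN}:@smartproxy.crawlbase.com:8013"

# Hypothetical product URL, used only for illustration.
TARGET_URL = "https://www.amazon.com/dp/B0EXAMPLE00"


def fetch_page(url, proxy_url=PROXY_URL, timeout=90):
    """Fetch a page through the proxy and return the response object."""
    proxies = {"http": proxy_url, "https": proxy_url}
    # verify=False disables client-side SSL verification, because SSL is
    # handled by the proxy itself.
    return requests.get(url, proxies=proxies, verify=False, timeout=timeout)
```

Calling fetch_page(TARGET_URL) with a valid token and a real product URL returns a response whose status_code and text attributes hold the HTTP status and the page HTML.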

Key Things to Know

- Smart AI Proxy URL: The format https://<TOKEN>:@smartproxy.crawlbase.com:8013 is how authentication is handled. Your token is used as the username in the proxy connection.

- verify=False: This disables SSL verification on the client side, which is required here because SSL is handled by the proxy itself, as noted in the Smart AI Proxy documentation.

Once you run this code, you should see a 200 response and the full HTML of the Amazon product page similar to the image below.

Unblocking Amazon with Smart AI Proxy: A Practical Use Case

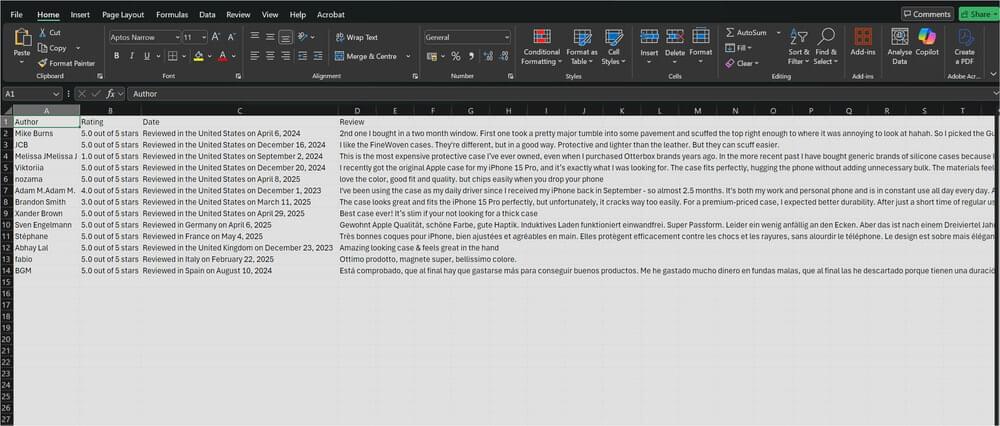

Now, let’s put what you’ve learned into practice. We’ll show you how to extract a list of reviews from an Amazon product page and save the data to a CSV file.

Extracting Specific Data

We’ll use Crawlbase’s Data Scraper feature, specifically the amazon-product-details scraper, which is requested through the CrawlbaseAPI-Parameters header. This allows our code to automatically parse the Amazon page and return clean, structured JSON data.

```python
import requests
```

You may refer to our GitHub repository for the source code.

How This Works

- CrawlbaseAPI-Parameters: The scraper=amazon-product-details parameter tells Crawlbase to analyze the product page and return structured JSON that includes reviews, ratings, product info, etc.

- Print JSON response: We extract the list of reviews from json_data["body"]["reviews"] and loop through them. For each product review, we print the Author, Rating, Date, and Review text.
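The two points above can be sketched without a network call. The header value and the body/reviews nesting come from the description above. The per-review key names (reviewerName, reviewRating, reviewDate, reviewText) and the sample payload are assumptions made for illustration, so check them against the JSON you actually receive.

```python
# Header that asks Crawlbase to return parsed JSON instead of raw HTML.
SCRAPER_HEADERS = {"CrawlbaseAPI-Parameters": "scraper=amazon-product-details"}


def extract_reviews(json_data):
    """Return the review list found at json_data["body"]["reviews"]."""
    body = json_data.get("body") or {}
    return body.get("reviews") or []


def format_review(review):
    """Render one review as text. The key names are assumptions."""
    return (
        f"Author: {review.get('reviewerName', 'N/A')}\n"
        f"Rating: {review.get('reviewRating', 'N/A')}\n"
        f"Date: {review.get('reviewDate', 'N/A')}\n"
        f"Review: {review.get('reviewText', 'N/A')}"
    )


# Made-up payload with the same nesting, for illustration only.
sample = {
    "body": {
        "reviews": [
            {
                "reviewerName": "Jane",
                "reviewRating": "5.0 out of 5 stars",
                "reviewDate": "January 1, 2025",
                "reviewText": "Works as described.",
            }
        ]
    }
}

for review in extract_reviews(sample):
    print(format_review(review))
```

In a real run, you would pass headers=SCRAPER_HEADERS to requests.get along with the proxy settings, then hand response.json() to extract_reviews.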

Compiling Extracted Data into CSV

Lastly, you can easily modify the code to save the reviews to a CSV file for later analysis. Here’s an example of how to save the data.

```python
import requests
```

You may refer to our GitHub repository for the source code.

This simple snippet writes to a new CSV file named product_reviews.csv.
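For reference, the CSV step on its own can be sketched with Python’s built-in csv module. The column names below are assumptions about the review keys and should be adjusted to match your JSON. The function name save_reviews_to_csv is ours.

```python
import csv

# Column names are assumptions; match them to the keys in your JSON response.
FIELDNAMES = ["reviewerName", "reviewRating", "reviewDate", "reviewText"]


def save_reviews_to_csv(reviews, path="product_reviews.csv"):
    """Write review dicts to a CSV file and return the number of rows written."""
    count = 0
    # newline="" lets the csv module control line endings on every platform.
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDNAMES)
        writer.writeheader()
        for review in reviews:
            # Keep only the known columns; missing values become empty cells.
            writer.writerow({name: review.get(name, "") for name in FIELDNAMES})
            count += 1
    return count
```

Using DictWriter means commas and quotes inside review text are escaped for you, which matters because free-form reviews often contain both.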

This is a basic use case for how to interact with Amazon’s product pages, and you can adapt the script for different tasks, such as extracting other product details like prices, ASIN values, and descriptions.

We’ve published the complete code for this solution on GitHub. You can view it here.

Unlock Amazon Scraping with Smart AI Proxy

In a world where data is as valuable as gold, it’s no surprise that many are looking for ways to access it, even when barriers stand in their way. Smart AI Proxy offers an efficient solution for individuals and businesses alike, simplifying the complex process of web scraping by handling the heavy lifting behind the scenes.

In this article, we’ve demonstrated the power of Smart AI Proxy and how simple it is to get started. Whether you’re working on a small project or scaling your operations for large-scale data extraction, Smart AI Proxy can help you access the information you need quickly, reliably, and without the usual burden. Try Smart AI Proxy for Amazon scraping and get 5,000 free credits.

Frequently Asked Questions

Q: Why should I use Smart AI Proxy as my proxy solution for unblocking Amazon?

A: Smart AI Proxy is a cost-effective solution to help you easily bypass Amazon’s anti-bot systems. Instead of investing in your own proxy infrastructure or paying developers to build and maintain complex crawlers, Smart AI Proxy provides a simplified and centralized solution to crawling problems.

It also includes useful features like the Data Scraper we showed earlier. You can extract structured data not just from different Amazon pages but from other popular sites as well.

Q: Do I need a username and password to use Smart AI Proxy?

A: No, you do not need the traditional proxy username and password for authentication with Smart AI Proxy. Instead, it uses a Proxy Host, Port, and a unique authentication key or token, which you can find on your account dashboard.

This token-based authentication simplifies integration, reduces errors, and is more secure than embedding credentials in your code. It also makes your system easier to manage, especially when scaling your setup or rotating proxies across multiple requests or environments.
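One way to keep the token out of your source files is to read it from an environment variable at startup. This is a minimal sketch: the variable name CRAWLBASE_TOKEN and the helper load_token are hypothetical choices of ours, not names defined by Crawlbase.

```python
import os


def load_token(var_name="CRAWLBASE_TOKEN", environ=os.environ):
    """Read the proxy token from an environment variable.

    Raises RuntimeError when the variable is missing or blank, so a
    misconfigured environment fails early instead of sending bad requests.
    """
    token = environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable to your token")
    return token
```

The returned value can then be placed in the username slot of the proxy URL, which lets you use different tokens per environment without changing code.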

Q: Can I use Smart AI Proxy to crawl sites other than Amazon?

A: Yes, Smart AI Proxy is designed to help you avoid blocks and CAPTCHAs when crawling most public websites. You can check some of the articles below to see other ways to utilize Smart AI Proxy: