Most APIs can crawl and scrape public pages on the internet that do not require a login. But what happens if you need to access data hidden behind a login? Is there any option for you?

In this article, we’ll show you how to extract the session cookies from a logged-in browser session and pass them to an API, so it can access a website as you and extract the data you need. The process may sound complicated, but Crawlbase simplifies most of it, as you’ll see in action below.


Web Scraping Authentication Complexity

Websites use authentication systems to protect user-specific content. Unlike public pages, you can’t just send a GET request and expect to extract meaningful data. These private or protected pages use various authentication methods, including login credentials, session cookies, tokens, and occasionally multi-factor authentication.

Plus, most websites these days are serious about blocking bots and scrapers. They detect when you’re not a real human, limit how fast you can make requests, ban suspicious IP addresses outright, or constantly rotate their security tokens.

If you need to scrape content that requires login to access, you’ve basically got two main options for your target website:

• Build a script that can log in on its own to scrape a website: teach it to fill out the login form and maintain the logged-in state while it grabs what you need.

• Do the login part yourself in a regular browser, then copy over those session cookies to your scraping script so the website thinks it’s you.

Crawlbase allows you to pass your session cookies to the API, so it can access a website as your logged-in user before extracting the content. This is useful for websites that require authentication, such as Amazon, where some pages, like product reviews, now require users to be logged in to view them. It’s also helpful for accessing content on social media sites like Facebook, including private groups or user profiles that aren’t publicly available.

Introduction to Authentication

When it comes to web scraping, authentication is often the first major hurdle you’ll encounter. Many websites protect their valuable data with login forms, requiring users to enter their login credentials before they can access certain pages. If you want to scrape data from these protected areas, you’ll need to automate the login process as part of your scraping workflow.

The typical approach involves sending HTTP requests to the login page, just like a regular user would. Using Python’s requests library, you can programmatically fill out the login form and submit it with a POST request. This means you’ll need to inspect the login page to identify the exact fields it requires (username, password, and sometimes hidden fields) so you can include them in your login payload.

To do this, open the login page in your browser and use the browser’s developer tools to examine the HTML. Find the login form, note the names of its input fields, and find the URL the form is submitted to (usually its action attribute). This information is crucial for crafting your POST request correctly. Once you’ve gathered these details, you can use the requests library to send the login data and establish an authenticated session, allowing you to scrape pages that are otherwise locked behind a login.
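The inspection step can also be done in code. Below is a minimal sketch, assuming the login form is the first form containing a password field and that its inputs are named `username` and `password` (many sites use other names, so check DevTools first). The helper names are hypothetical, and `login()` expects a `requests.Session` or any object with the same `get`/`post` methods.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LoginFormParser(HTMLParser):
    """Collects each form's action URL and its <input> name/value pairs."""

    def __init__(self):
        super().__init__()
        self.forms = []  # each: {"action": str, "fields": dict, "has_password": bool}
        self._current = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self._current = {"action": attrs.get("action") or "", "fields": {}, "has_password": False}
        elif tag == "input" and self._current is not None:
            if (attrs.get("type") or "").lower() == "password":
                self._current["has_password"] = True
            name = attrs.get("name")
            if name:
                # Hidden inputs (e.g. CSRF tokens) keep their pre-filled value.
                self._current["fields"][name] = attrs.get("value") or ""

    def handle_endtag(self, tag):
        if tag == "form" and self._current is not None:
            self.forms.append(self._current)
            self._current = None


def find_login_form(html, page_url):
    """Return (absolute action URL, fields) of the first form with a password input."""
    parser = LoginFormParser()
    parser.feed(html)
    for form in parser.forms:
        if form["has_password"]:
            return urljoin(page_url, form["action"]), form["fields"]
    raise ValueError("no form with a password field found")


def build_login_payload(fields, username, password, user_field="username", pass_field="password"):
    payload = dict(fields)  # start from every field the form sends, hidden ones included
    payload[user_field] = username
    payload[pass_field] = password
    return payload


def login(session, login_page_url, username, password, **field_names):
    """GET the login page, fill in the form, and POST it with the same session."""
    page = session.get(login_page_url)
    page.raise_for_status()
    action_url, fields = find_login_form(page.text, page.url)
    payload = build_login_payload(fields, username, password, **field_names)
    response = session.post(action_url, data=payload)
    response.raise_for_status()
    return response
```

Because the session keeps any cookies the login response sets, later `session.get(...)` calls on the same object are sent as the logged-in user.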

By understanding how login forms work and how to interact with them using HTTP requests, you’ll be able to tackle the authentication wall that most websites put up to protect their content.

What are Session Cookies?

Session cookies are temporary cookies stored in your browser when you log in to a website. These small bits of data tell the website whether a user is logged in and allowed to access protected content. They usually expire when you close your browser, or automatically after a specific period.

Without these cookies, websites won’t be able to identify the user and remember if they’re already authenticated or not. You’d be asked to log in over and over again, which wouldn’t be very practical.
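Whether a cookie disappears when the browser closes or after a set period is decided by its attributes in the `Set-Cookie` response header: a cookie without `Expires` or `Max-Age` is dropped at the end of the browser session, while one with either attribute persists until that time. A minimal sketch using Python's standard `http.cookies.SimpleCookie`, assuming you pass one `Set-Cookie` header value at a time (`classify_cookies` is a hypothetical helper):

```python
from http.cookies import SimpleCookie


def classify_cookies(set_cookie_header):
    """Return {name: "session" | "persistent"} for one Set-Cookie header value."""
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    result = {}
    for name, morsel in jar.items():
        # Unset attributes are empty strings on a Morsel.
        has_expiry = bool(morsel["expires"] or morsel["max-age"])
        result[name] = "persistent" if has_expiry else "session"
    return result
```

For example, `classify_cookies("sessionid=abc123; Path=/; HttpOnly")` reports a session cookie, while adding `Max-Age=3600` makes it persistent.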

This is where session cookies become important for scraping. If you try to scrape a page that requires a login and you don’t include your session cookie, the site will likely block your request or redirect you to the login page. However, if you include the correct cookie, the website will treat your scraper as a logged-in user and let it make authenticated requests for the protected content. You can also save cookies for future use, so you don’t have to log in every time you scrape.
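With the requests library, one way to send those cookies on every request is to load them into a `requests.Session`. This is a minimal sketch: `make_logged_in_session`, the leading-dot domain, and the browser-like User-Agent string are illustrative assumptions, and the cookie values would be the ones you copied from your logged-in browser.

```python
import requests


def make_logged_in_session(cookies, domain):
    """Create a requests.Session that sends `cookies` to `domain` and its subdomains.

    cookies: dict of cookie name -> value copied from a logged-in browser.
    domain:  e.g. ".example.com" (the leading dot covers subdomains too).
    """
    session = requests.Session()
    for name, value in cookies.items():
        session.cookies.set(name, value, domain=domain, path="/")
    # Some sites treat the default python-requests User-Agent as a bot.
    session.headers["User-Agent"] = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
    return session
```

Every `session.get(...)` made with the returned object carries the cookies, and any cookies the site sets in its responses are added to the same jar for later requests.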

In short, session cookies are the key to unlocking private or user-specific data on many websites. Once you learn how to extract and reuse them, you’ll be able to access data that’s usually hidden behind a login.

When accessing data behind a login, you can use a session object to maintain authentication. By reusing the same session object for multiple requests, you preserve your login state, and the session cookies let you make subsequent requests without re-authenticating. Once authenticated, you can extract data from pages that are otherwise inaccessible.

Always follow responsible scraping practices when handling session cookies to stay compliant with website terms and legal requirements.

Next, let’s look at how you can get your session cookies using your browser.

How to Extract Cookies from Your Browser

There are several ways to extract cookies from your browser, ranging from easy to expert: from browser add-ons to automation tools like Selenium. Here, we’ll use one of the most basic methods to extract your session cookies, which we will use later to scrape data from pages that require a login.

Note that this method may have its risks, as it requires you to log in using your account to extract the cookies from the browser. We advise you to use a dummy account and understand that the purpose of this guide is purely educational.

Step 1: Start by launching a browser like Google Chrome, Mozilla Firefox, or Microsoft Edge.

Step 2: Go to Facebook, enter your credentials, and log in as you usually would. Wait until you are logged in and see your news feed or profile.

Step 3: Right-click an empty area of the page, then select Inspect or Inspect Element. This opens your browser’s Developer Tools (DevTools).

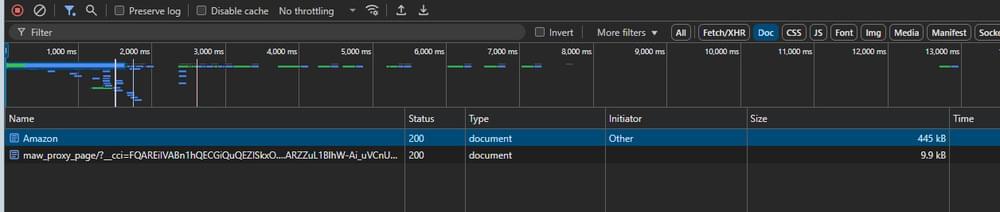

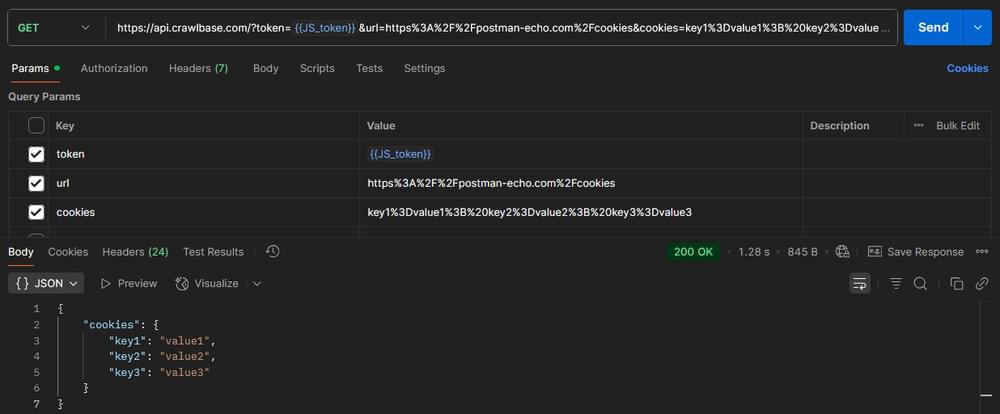

Step 4: At the top of the DevTools window, you’ll see several tabs labeled “Elements”, “Console”, “Network”, and so on. Click on Network.

Step 5: When you first open the Network tab, it may be blank. Press F5 (or click the reload button) to refresh the page. As the page reloads, you’ll see a list of network requests appear in the Network panel.

Step 6: Look for the first network request listed. Click on this entry to see detailed information about that request.

Step 7: With the network request selected, look for a Cookies or Headers sub-tab, usually on the right side of DevTools. You may find “Cookies” as a separate tab; otherwise, open Headers and scroll to the “Request Headers” section to find the “Cookie” header.

Step 8: You should now see a list of cookie names and values associated with your session. Copy the relevant cookie values. Find the c_user and xs cookies, which Facebook often uses for session management.

Step 9: Open a text editor such as Notepad. Paste the copied cookie values, labeling them clearly (for example, c_user=[value], xs=[value]). These are your saved cookies for future use.

Note: These cookies will allow your scraper to access private pages but may also allow someone else access to your Facebook account. Make sure to save this file securely, and do not share or upload it to any public location.

You can use these saved cookies in a Python script to automate login and scraping, allowing you to maintain session authentication and avoid repeated logins.
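To get the saved cookies from that text file into Python, a small parser is enough. This is a minimal sketch, assuming the file holds `name=value` pairs separated by commas, semicolons, or newlines and that no cookie value itself contains a comma or semicolon; the function names are hypothetical.

```python
import re
from pathlib import Path


def parse_saved_cookies(text):
    """Parse 'c_user=123, xs=abc' (commas, semicolons or newlines) into a dict."""
    cookies = {}
    for chunk in re.split(r"[,;\n]", text):
        chunk = chunk.strip()  # also removes a trailing '\r' from Windows line endings
        if not chunk:
            continue
        name, sep, value = chunk.partition("=")  # split on the first '=' only
        if not sep:
            raise ValueError(f"expected name=value, got {chunk!r}")
        cookies[name.strip()] = value.strip()
    return cookies


def load_saved_cookies(path):
    """Read the text file you saved in Step 9 and return its cookies as a dict."""
    return parse_saved_cookies(Path(path).read_text(encoding="utf-8"))
```

Keeping the cookies in a separate file (rather than pasting them into the script) also makes it easier to keep them out of version control.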

Authenticated Scraping with Python Requests Library

Let’s put our extracted cookies into action. First, make sure your Python environment is set up: install the latest Python version, use any IDE you prefer, and install the Python Requests module. Once your environment is ready, we can proceed with the exercise.

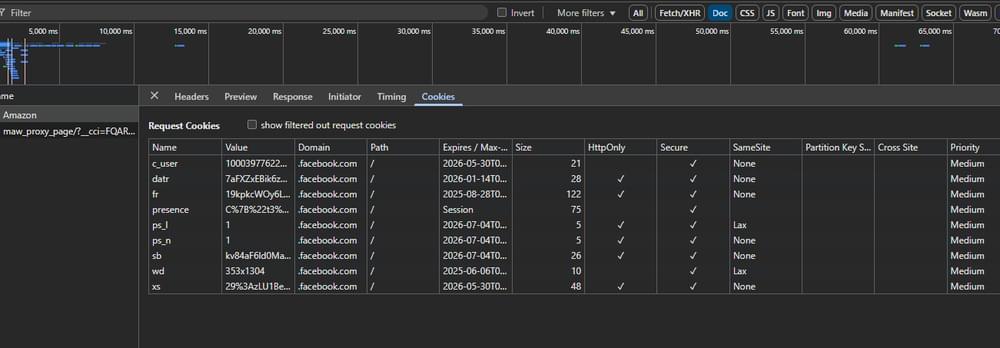

Say we want to scrape this Facebook Hashtag Music page, and our goal is to scrape data from protected web pages if you try to open this using Chrome Incognito mode (without logging in to your Facebook account), you’ll get the sign-in page:

We can first try to scrape this page using only Python and the requests library to see what happens. Create a file named scraping_with_crawlbase.py, then copy and paste the code below.

```python
import requests
```
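A minimal sketch of such a script, assuming the cookie string you saved earlier is sent as a raw `Cookie` header and the response is written to `output.html`, as the following paragraphs describe. Reading the cookies from an `FB_COOKIES` environment variable and the browser-like User-Agent are assumptions added for illustration; the script does nothing until the placeholder is replaced.

```python
import os

import requests

TARGET_URL = "https://www.facebook.com/hashtag/music"
PLACEHOLDER = "<cookies-goes-here>"
# Paste your saved cookies here, e.g. "c_user=...; xs=...",
# or set the (hypothetical) FB_COOKIES environment variable instead.
COOKIES = os.environ.get("FB_COOKIES", PLACEHOLDER)


def build_headers(cookie_string):
    """Headers that make the request look like it came from your logged-in browser."""
    return {
        "Cookie": cookie_string,
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    }


def main():
    if COOKIES == PLACEHOLDER:
        print("Replace <cookies-goes-here> with your Facebook cookies first.")
        return
    response = requests.get(TARGET_URL, headers=build_headers(COOKIES), timeout=30)
    response.raise_for_status()
    # Save the raw HTML so you can inspect what the server actually returned.
    with open("output.html", "w", encoding="utf-8") as f:
        f.write(response.text)
    print(f"Saved {len(response.text)} characters to output.html")


if __name__ == "__main__":
    main()
```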

Make sure to replace <cookies-goes-here> with the actual cookies you extracted from your Facebook account earlier and run the code using the command below.

```bash
python scraping_with_crawlbase.py
```

After running the script, open the output.html file. You’ll notice that the content looks blank or incomplete. If you inspect it, you’ll see that it’s mostly unexecuted JavaScript.

Why? Because the data you’re looking for is loaded dynamically with JavaScript, and requests alone can’t execute JavaScript like a browser does.

So, how do we resolve this issue? That’s what we’ll cover in the next section.

Scraping Behind Login Using Crawlbase

Now that we’ve seen the limitations of using Python’s requests library alone, let’s use Crawlbase to handle JavaScript rendering and login walls. Here’s how you can do it:

Step 1: Prepare your script. Create or update your scraping_with_crawlbase.py file with the following code:

```python
import json
```
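A minimal sketch of such a script, assuming Crawlbase's `token`, `url`, `cookies`, and `scraper` query parameters, and assuming `facebook-hashtag` is the data scraper name for hashtag pages (only the group, page, profile, and event scrapers are named later in this article). The environment variables are hypothetical conveniences; the placeholders match the steps that follow.

```python
import json
import os
from urllib.parse import quote, urlencode

import requests

CRAWLBASE_API = "https://api.crawlbase.com/"
TOKEN_PLACEHOLDER = "<Javascript requests token>"
COOKIES_PLACEHOLDER = "<cookies-goes-here>"

# Replace the placeholders, or set these (hypothetical) environment variables.
TOKEN = os.environ.get("CRAWLBASE_JS_TOKEN", TOKEN_PLACEHOLDER)
COOKIES = os.environ.get("FB_COOKIES", COOKIES_PLACEHOLDER)
TARGET_URL = "https://www.facebook.com/hashtag/music"
SCRAPER = "facebook-hashtag"  # assumed name of the hashtag data scraper


def build_request_url(token, url, cookies, scraper):
    """Percent-encode every value, e.g. cookies -> key1%3Dvalue1%3B%20key2%3Dvalue2."""
    params = {"token": token, "url": url, "cookies": cookies, "scraper": scraper}
    return f"{CRAWLBASE_API}?{urlencode(params, quote_via=quote)}"


def main():
    if TOKEN == TOKEN_PLACEHOLDER or COOKIES == COOKIES_PLACEHOLDER:
        print("Fill in your Crawlbase JavaScript token and your cookies first.")
        return
    response = requests.get(build_request_url(TOKEN, TARGET_URL, COOKIES, SCRAPER), timeout=90)
    response.raise_for_status()
    # With a data scraper, the API is assumed to answer with JSON.
    print(json.dumps(response.json(), indent=2, ensure_ascii=False))


if __name__ == "__main__":
    main()
```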

Step 2: Replace <Javascript requests token> with your Crawlbase JavaScript token. If you don’t have an account yet, sign up to Crawlbase to claim your free API requests.

Step 3: Replace <cookies-goes-here> with the same cookies you extracted earlier from your logged-in Facebook session.

Make sure the cookies are properly formatted; otherwise, Crawlbase might reject them. According to the Crawlbase documentation for the cookies parameter, the correct format looks like this:

```
cookies: key1=value1; key2=value2; key3=value3
```
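If your cookies are in a Python dict (for example, loaded from the file you saved in Step 9), a small helper can produce this `key1=value1; key2=value2` format and catch characters that would break it. `to_cookie_string` is a hypothetical helper, not part of Crawlbase.

```python
def to_cookie_string(cookies):
    """Join {name: value} into 'key1=value1; key2=value2' for the cookies parameter."""
    parts = []
    for name, value in cookies.items():
        name, value = str(name).strip(), str(value).strip()
        # '=', ';' or spaces in a name would make the string ambiguous.
        if not name or any(ch in name for ch in "=; "):
            raise ValueError(f"invalid cookie name: {name!r}")
        # ';' separates cookies, so it cannot appear inside a value.
        if ";" in value:
            raise ValueError(f"cookie {name!r} has a ';' in its value")
        parts.append(f"{name}={value}")
    return "; ".join(parts)
```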

Step 4: Now run the script using:

```bash
python scraping_with_crawlbase.py
```

If everything is set up correctly, you’ll see clean JSON output printed in your terminal: the actual content of the Facebook hashtag page, successfully scraped.


Bonus Step: The Crawlbase Facebook data scraper isn’t limited to hashtag pages. It also supports other types of Facebook content, so if your target page falls into one of the categories below, you’re in luck:

• facebook-group
• facebook-page
• facebook-profile
• facebook-event

All you have to do is update two lines in your script to match the type of page you want to scrape:

```python
TARGET_URL = "https://www.facebook.com/hashtag/music"
```

For example, if you want to scrape a private Facebook group, change it to something like:

```python
TARGET_URL = "https://www.facebook.com/groups/examplegroup"
```

Just swap in the correct URL and the corresponding data scraper name, and Crawlbase will take care of the rest.
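If you switch between page types often, a small helper can pick the scraper name from the URL's first path segment. This is a hypothetical sketch: the path-to-scraper mapping and the `facebook-hashtag` name are assumptions, and because Facebook pages and profiles share the same URL shape, those two need an explicit `default`.

```python
from urllib.parse import urlparse

# Assumed mapping from the first URL path segment to a Crawlbase scraper name.
PATH_PREFIX_TO_SCRAPER = {
    "hashtag": "facebook-hashtag",
    "groups": "facebook-group",
    "events": "facebook-event",
}


def pick_facebook_scraper(url, default=None):
    """Guess the scraper from the URL; pass default="facebook-page" or "facebook-profile" otherwise."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    first = segments[0] if segments else ""
    scraper = PATH_PREFIX_TO_SCRAPER.get(first, default)
    if scraper is None:
        raise ValueError(f"cannot infer a scraper for {url!r}; pass default explicitly")
    return scraper
```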

Best Practices for Scraping Login-Protected Sites

When scraping pages behind a login, you’re dealing with sensitive accounts, session cookies, and stricter security rules. Here are the most important things to keep in mind.

Understand the Site’s Terms of Service

Before you think about pulling data from any site, particularly those hidden behind a login, make sure you actually understand their Terms of Service. Plenty of sites put up strict fences against bots and scraping, and ignoring those restrictions can potentially land you in hot water. So scrape responsibly.

Know What Cookies to Use

To access login-protected pages, you need to send the right session cookies with your request. For Facebook, our tests show that you only need the following two cookies:

• c_user=[value]
• xs=[value]

These are enough to authenticate your session and load the actual content.

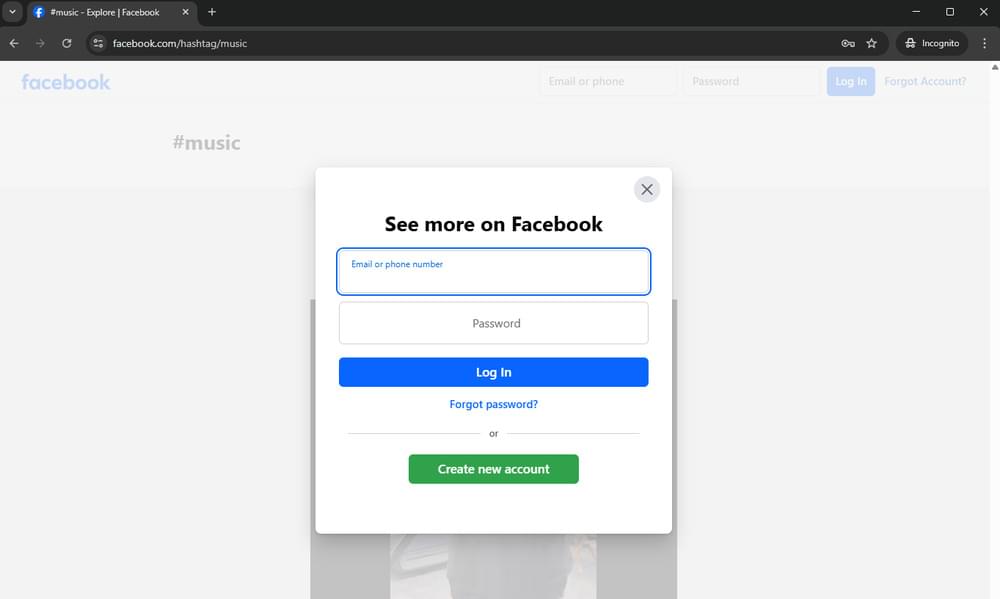

However, if you’re testing on other websites and you’re not sure which cookies are required, you can simply pass all the cookies from your logged-in session to the Crawlbase API. You can test which cookies are being sent by using this testing URL: https://postman-echo.com/cookies

Here’s an example curl request in Postman that sends cookies to the Crawlbase API and fetches the response from the Postman Echo test server:

This is a handy way to verify that your cookies are formatted correctly and being forwarded properly.
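To make that check less manual, you can compare what you sent with what the echo server reports back. A minimal sketch, assuming the response body is Postman Echo's raw JSON of the form `{"cookies": {...}}` (for example, fetched through the Crawlbase API with the normal token rather than the JavaScript one, which would wrap it in rendered HTML); `missing_cookies` is a hypothetical helper.

```python
import json


def missing_cookies(sent, echo_body):
    """Names of cookies that https://postman-echo.com/cookies did not echo back unchanged.

    sent:      dict of the cookies you passed, name -> value.
    echo_body: the response body, assumed to be JSON like {"cookies": {"c_user": "123"}}.
    """
    received = json.loads(echo_body).get("cookies", {})
    return sorted(name for name, value in sent.items() if received.get(name) != value)
```

An empty list means every cookie arrived with the value you intended.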

Handling Expired Cookies

Login cookies aren’t permanent. Over time, they can expire or become invalid due to account activity, logouts, or session timeouts.

If your scraper unexpectedly starts returning login pages instead of the expected data, it’s a strong indication that your cookies have expired and need to be refreshed.
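You can catch this automatically by checking each response before parsing it. The sketch below is a heuristic, not a guarantee: it assumes a logged-out response either lands on a URL containing a login-style path or contains a password input, and the hint list is an illustrative assumption you would tune per site.

```python
import re
from urllib.parse import urlparse

# Illustrative path fragments that usually indicate a login page.
LOGIN_PATH_HINTS = ("login", "signin", "sign-in")
PASSWORD_INPUT = re.compile(r"<input[^>]+type\s*=\s*[\"']?password", re.IGNORECASE)


def looks_logged_out(final_url, html):
    """True if we were redirected to a login URL or the page shows a password field."""
    path = urlparse(final_url).path.lower()
    if any(hint in path for hint in LOGIN_PATH_HINTS):
        return True
    return bool(PASSWORD_INPUT.search(html))
```

With requests, pass `response.url` (the URL after redirects) and `response.text`; when it returns `True`, refresh your cookies before retrying.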

Here’s what you can do:

Re-authenticate manually - Log in to your account again in your browser, grab fresh cookies via DevTools, and update them in your script. Ensure that you replace your saved cookies with these fresh ones to maintain session authentication.

Use a browser extension - The Cookie-Editor extension makes it easy to view and copy your active cookies directly from your browser.

Reuse Cookies Automatically

If you’re making multiple requests in a short period and want the cookies to persist between them, you can use Crawlbase’s Cookies Session (cookies_session) parameter. Assign it any value (up to 32 characters), and the cookies returned by one request will be sent with the next request that uses the same value. This keeps your session, and your authentication, intact across subsequent requests without logging in again.

This is particularly handy for multi-step scraping of logged-in pages, where consistency between requests is crucial.
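A minimal sketch of building requests that share one `cookies_session` value, assuming the same percent-encoded query string as the other Crawlbase parameters; `new_session_id` and `build_session_request` are hypothetical helpers, and the random ID is just one convenient way to stay within the 32-character limit.

```python
import secrets
from urllib.parse import quote, urlencode

CRAWLBASE_API = "https://api.crawlbase.com/"


def new_session_id():
    """Random 32-character hex string, the maximum length allowed for cookies_session."""
    return secrets.token_hex(16)


def build_session_request(token, url, session_id, cookies=None):
    """Build a Crawlbase request URL that joins the cookies session named session_id."""
    if not 0 < len(session_id) <= 32:
        raise ValueError("cookies_session must be 1 to 32 characters long")
    params = {"token": token, "url": url, "cookies_session": session_id}
    if cookies:
        params["cookies"] = cookies  # your login cookies, e.g. "c_user=...; xs=..."
    return f"{CRAWLBASE_API}?{urlencode(params, quote_via=quote)}"
```

Generate one ID with `new_session_id()` and pass the same value for every page in the sequence, for example `requests.get(build_session_request(TOKEN, page_url, sid, COOKIES), timeout=90)`.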

So, sign up for Crawlbase now to scrape login-protected pages. With just one platform, you can easily handle JavaScript-rendered content, manage cookies, and maintain sessions across multiple requests.

Frequently Asked Questions

Q1: Does Crawlbase store my session cookies?

A. No. The data you send via the cookies parameter is only used for that specific request, and Crawlbase does not store it by default. However, the data you pass may be stored if you use parameters like store and cookies_session.

Q2: Is there a risk of account bans when using session cookies for scraping?

A. Yes, this is possible, especially when the website detects non-human behavior from your session. Crawlbase cannot guarantee the safety of your account, so we always recommend using a dummy account if you absolutely need to scrape data while logged in.

Q3: How do I handle CSRF token protection?

A. To handle CSRF (Cross-Site Request Forgery) token protection in your web scraping projects, you’ll first need to extract the token from the login page. This usually involves sending a GET request to the login URL and then parsing the returned HTML to find the CSRF token, which is often stored in a hidden input field within the form. The BeautifulSoup library is a popular tool for parsing HTML and extracting these tokens.

Once you’ve located the CSRF token, include it in your login credentials payload when sending your POST request to the login URL. Some websites may use multiple CSRF tokens or change their names frequently, so it’s important to carefully inspect the login page and ensure you’re capturing all required tokens.
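A minimal sketch with BeautifulSoup, assuming the tokens sit in hidden inputs whose names match common patterns such as `csrf`, `xsrf`, or `authenticity_token` (the pattern list is an assumption; inspect your target form). Matching by pattern rather than one fixed name also picks up multiple tokens and tolerates renamed fields. `login_with_csrf` additionally assumes the form posts back to the login URL itself and takes a `requests.Session`.

```python
import re

from bs4 import BeautifulSoup

# Common CSRF field-name patterns; an assumption, since every site picks its own.
CSRF_NAME = re.compile(r"csrf|xsrf|authenticity_token|_token", re.IGNORECASE)


def extract_csrf_tokens(html):
    """Return {field_name: value} for every hidden input that looks like a CSRF token."""
    soup = BeautifulSoup(html, "html.parser")
    tokens = {}
    for field in soup.find_all("input", attrs={"type": "hidden", "name": CSRF_NAME}):
        tokens[field["name"]] = field.get("value", "")
    return tokens


def login_with_csrf(session, login_url, credentials):
    """GET the login page, copy its CSRF token(s) into the payload, then POST it back."""
    page = session.get(login_url)  # the same session keeps any cookie tied to the token
    page.raise_for_status()
    payload = {**extract_csrf_tokens(page.text), **credentials}
    return session.post(login_url, data=payload)
```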