The Apple App Store is a digital hub where users browse, download, and install apps on their Apple devices, including iPhones and iPads. It hosts millions of apps, spanning everything from mind-numbing games to productivity tools and other entertainment that keeps us glued to our screens.

If you’re building apps yourself, trying to market something, or just researching market trends, App Store intel can be very useful. The real trick is setting up your scraping approach correctly so you can transform all the data into something that actually helps you make smarter decisions.

So in this blog, we’ll show you how to crawl and scrape Apple App Store data using Crawlbase’s Crawling API and JavaScript. This combination works surprisingly well for gathering details like where apps rank, what their descriptions promise, and what real users are actually saying in reviews.

How to Scrape Apple App Store Data?

Our first step is to create an account with Crawlbase, which will enable us to utilize the Crawling API and serve as our platform for reliably fetching data from the App Store.

Creating a Crawlbase account

- Sign up for a Crawlbase account and log in.

- Once registered, you’ll receive 1,000 free requests. Add your billing details before using any of the free credits to get an extra 9,000 requests.

- Go to your Account Docs and save your Normal Request token for this blog’s purpose.

Setting up the Environment

Next, ensure that Node.js is installed on your device, as it is the backbone of our scraping script, providing a fast JavaScript runtime and access to essential libraries.

Installing Node on Windows:

- Go to the official Node.js website and download the Long-Term Support (LTS) version for Windows.

- Launch the installer and follow the prompts. Leave the default options selected.

- Verify the installation by opening a new Command Prompt and running the following command:

    node -v

For macOS:

- Go to [https://nodejs.org](https://nodejs.org/) and download the macOS installer (LTS).

- Follow the installation wizard.

- Open the Terminal and confirm the installation:

    node -v

For Linux (Ubuntu/Debian):

- Open your terminal to add the NodeSource repository and install Node.js:

    curl -fsSL https://deb.nodesource.com/setup_lts.x | sudo -E bash - && sudo apt-get install -y nodejs

- Verify your installation:

    node -v

Fetch Script

Grab the script below and save it with a .js extension; any IDE or coding environment you like will work. Once you’ve saved it, double-check that all the necessary dependencies are installed in your Node.js setup. After that, you should be all set.

    import { CrawlingAPI } from 'crawlbase';

IMPORTANT: Make sure to replace <Normal requests token> with your actual Crawlbase normal request token before running the script.

This script shows how to use Crawlbase’s Crawling API to retrieve HTML content from the Apple App Store without getting blocked. Note that the response hasn’t been scraped yet. We still need to remove unnecessary elements, clean the data, and produce a parsed, structured response.
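For reference, the same kind of request can be made without the crawlbase package by calling the Crawling API’s HTTP endpoint directly. The sketch below is a minimal illustration and not the script from this post: it assumes Node.js 18 or later (for the built-in fetch), and the helper names buildCrawlingApiUrl and fetchAppStoreHtml are made up for this example.

```javascript
// Base endpoint of Crawlbase's Crawling API.
const CRAWLING_API_ENDPOINT = 'https://api.crawlbase.com/';

// Build the request URL: the token and the URL-encoded target page
// are passed as query parameters.
function buildCrawlingApiUrl(token, targetUrl) {
  const params = new URLSearchParams({ token, url: targetUrl });
  return `${CRAWLING_API_ENDPOINT}?${params.toString()}`;
}

// Fetch the raw HTML of a page through the Crawling API.
// Hypothetical helper; relies on the global fetch from Node.js 18+.
async function fetchAppStoreHtml(token, targetUrl) {
  const response = await fetch(buildCrawlingApiUrl(token, targetUrl));
  if (!response.ok) {
    throw new Error(`Crawling API request failed with status ${response.status}`);
  }
  return response.text();
}
```

You would call it as `fetchAppStoreHtml('<Normal requests token>', appUrl)`, where `appUrl` is the public App Store page you want to fetch, and then hand the returned HTML string to the parser.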

Locating specific CSS selectors

Now that you understand how to send a simple API request using Node.js, let’s locate the data we need from our target URL so we can later write code to clean and parse it.

The first thing you’ll notice is the main section at the top. It’s usually where we’ll find the most important details and is typically well-structured, making it an ideal target for scraping.

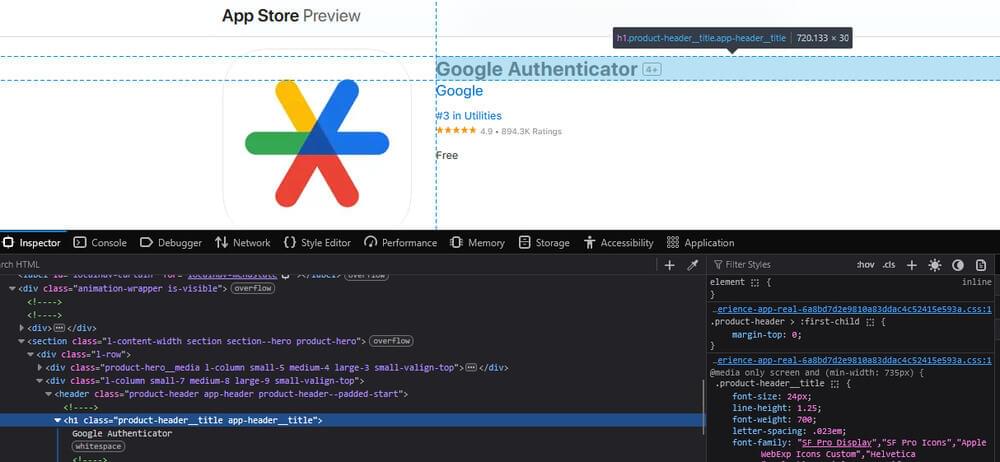

Go ahead and open your target URL and locate each selector. For example, let’s search for the title:

Take note of the .app-header__title and do the same for subtitle, seller, category, stars, rating, and price. Once that’s done, this section is complete.

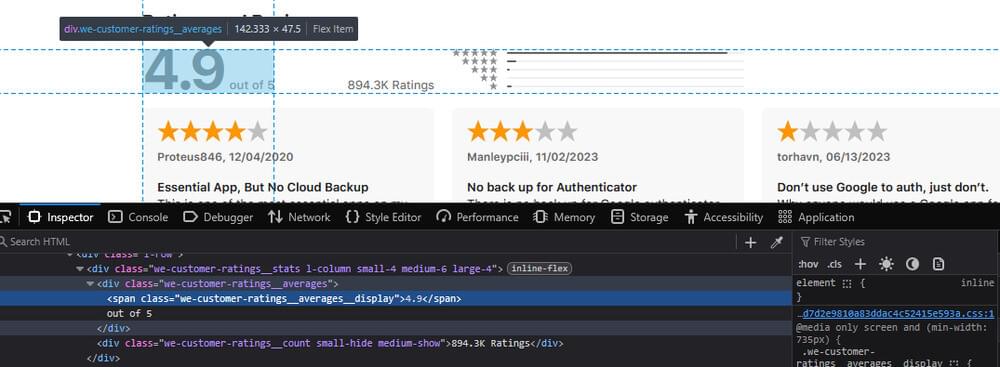

The process is pretty much the same for the rest of the page. Here’s another example: if you want to include the customer average rating in the Ratings and Reviews section, right-click on the data and select Inspect:

You get the gist. It should now be a piece of cake for you to locate the remaining data you need.
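Before wiring a selector into the scraper, it can help to confirm that it matches what you expect. The helper below is a small sketch (the name previewSelector is made up for this example) that you could paste into the browser’s DevTools console on the app page. It accepts any object with a querySelectorAll method and defaults to the page’s document.

```javascript
// Return the trimmed text of every element matching a CSS selector.
// `root` defaults to the page's document when run in the browser console.
function previewSelector(selector, root = globalThis.document) {
  return Array.from(root.querySelectorAll(selector), (element) =>
    element.textContent.trim()
  );
}
```

Running `previewSelector('.app-header__title')` in the console lists the text of each match, so you can see whether the selector is specific enough or also picks up elements you don’t want.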

Parsing the HTML in Node.js

Now that you’re an expert in extracting the CSS selectors, it is time to build the code to parse the HTML. This is where Cheerio comes in. It is a lightweight and powerful library that enables us to select relevant data from the HTML source code within Node.js.

Start by creating your project folder and running the following inside it:

    npm init -y

Import the Required Libraries

Then in your .js file, import the required libraries for this project, including Cheerio:

    import _ from 'lodash';

Don’t forget to set up the Crawling API as well as the target website:

    const CRAWLBASE_NORMAL_TOKEN = '<Normal requests token>';

Functions for Scraping Apple Store Data

This is where we’ll use the CSS selectors we collected earlier. Let’s write the part of the code that pulls each piece of information from the App Store page.

    function scrapePrimaryAppDetails($) {

Just like that, it will extract the title, subtitle, seller, category, star rating, overall ratings, and price.
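As a rough illustration of how a function like this might be put together, here is a minimal sketch. It is an assumption about the shape of the code rather than the exact implementation from this post: only .app-header__title comes from the walkthrough above, every other selector is a hypothetical placeholder you would replace with the one you found in DevTools, and cleanText is a helper invented for this example.

```javascript
// Selector map. Only `.app-header__title` comes from the walkthrough;
// every other value is a HYPOTHETICAL placeholder to replace with the
// selector you located in DevTools.
const PRIMARY_SELECTORS = {
  title: '.app-header__title',
  subtitle: '.your-subtitle-selector',
  seller: '.your-seller-selector',
  category: '.your-category-selector',
  stars: '.your-stars-selector',
  rating: '.your-rating-selector',
  price: '.your-price-selector',
};

// Collapse runs of whitespace so each value comes out on one clean line.
function cleanText(text) {
  return text.replace(/\s+/g, ' ').trim();
}

// `$` is the function returned by cheerio.load(html).
// Fields whose selector matches nothing come back as null.
function scrapePrimaryAppDetails($, selectors = PRIMARY_SELECTORS) {
  const details = {};
  for (const [field, selector] of Object.entries(selectors)) {
    const text = cleanText($(selector).first().text());
    details[field] = text === '' ? null : text;
  }
  return details;
}
```

Keeping the selectors in one map means that when Apple changes its markup, you only have to update the map rather than the extraction logic.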

From this point, you can add more functions for each section of the page. You can add the Preview Image and Description, as well as user reviews, etc.
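Sections that repeat, such as user reviews, can be handled with one generic helper. The sketch below is only an illustration under assumptions: scrapeRepeatedItems is a made-up name, and the selectors you pass in are ones you would need to locate yourself, since this post does not list them. It relies on Cheerio’s map, find, first, text, and get methods.

```javascript
// Extract one object per element matching `itemSelector`.
// `fieldSelectors` maps an output field name to a selector that is
// looked up inside each matched item. `$` comes from cheerio.load(html).
function scrapeRepeatedItems($, itemSelector, fieldSelectors) {
  return $(itemSelector)
    .map((index, element) => {
      const item = {};
      for (const [field, selector] of Object.entries(fieldSelectors)) {
        // Empty text is stored as null so gaps are easy to spot.
        item[field] = $(element).find(selector).first().text().trim() || null;
      }
      return item;
    })
    .get(); // convert the Cheerio collection into a plain array
}
```

For reviews, a call could look like `scrapeRepeatedItems($, reviewSelector, { title: titleSelector, body: bodySelector })`, with each selector variable holding the value you found by inspecting the page.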

Combine Everything in One Function

Once the scraper is complete, we need to combine everything in one function and print the result:

    function scrapeAppStore(html) {
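One possible way to structure this combining step is sketched below. It is a variant written for illustration, not the exact function from this post: here the HTML loader and the section scrapers are passed in as arguments (both parameters are assumptions of this sketch), which keeps the function free of hard dependencies and easy to test.

```javascript
// Run every section scraper against one parsed page and collect the results.
// - `load` is expected to be cheerio.load.
// - `sectionScrapers` maps an output key to a function that receives `$`.
function scrapeAppStore(html, load, sectionScrapers) {
  const $ = load(html);
  const result = {};
  for (const [section, scrape] of Object.entries(sectionScrapers)) {
    result[section] = scrape($);
  }
  return result;
}
```

With Cheerio imported, a call such as `scrapeAppStore(html, cheerio.load, { primaryAppDetails: scrapePrimaryAppDetails })` returns one object keyed by section, which you can print with `console.log(JSON.stringify(result, null, 2))`.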

Complete Code to Scrape Apple App Store Data

    import _ from 'lodash';

And when you run your script (this assumes a crawl script is defined in the scripts section of your package.json):

    npm run crawl

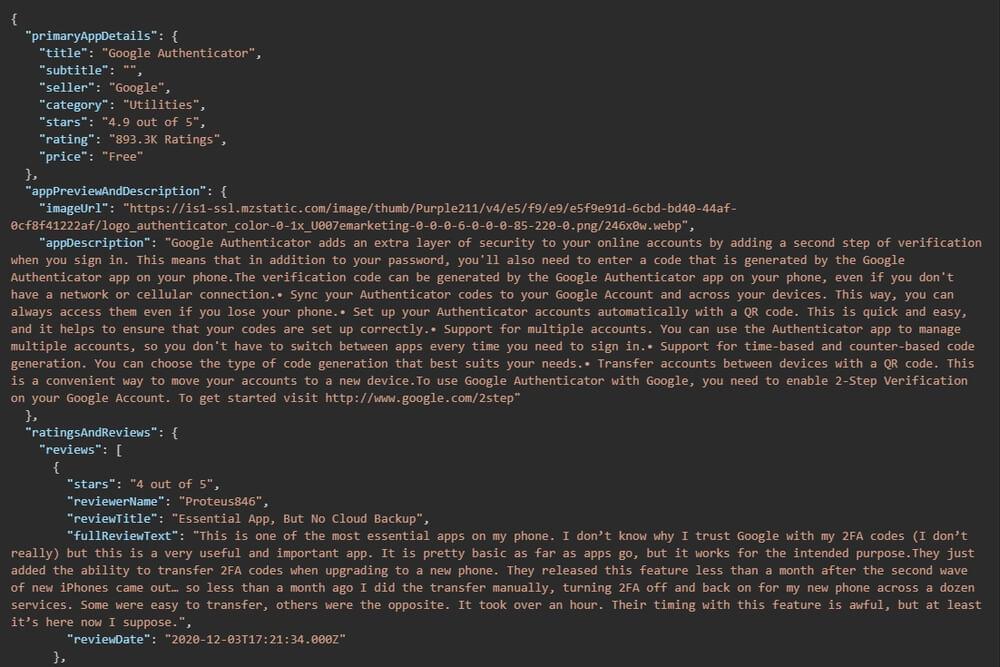

You’ll see the output in this structure:

This organized structure provides a solid foundation for further analysis, reporting, or visualization, regardless of your end goal.

Check out the complete code in our GitHub repository for this blog.

Scrape Apple Store Data with Crawlbase

Scraping the Apple App Store can provide valuable insights into how apps are presented, user responses, and the performance of competitors. With Crawlbase and a solid HTML parser like Cheerio, you can automate the extraction of Apple data and turn it into something actionable.

Whether you’re tracking reviews, comparing prices, or just exploring the app ecosystem, this setup can save you time and effort while delivering the data you need.

Start your next scraping project now with Crawlbase’s Smart AI Proxy and Crawling API to avoid getting blocked!

Frequently Asked Questions

Q: Can I scrape any app on the App Store?

A. Yes, as long as you have the app’s public URL. Apple doesn’t provide a complete public index, so you’ll need to build your own list or collect links from other sources.

Q: Is scraping the App Store legal?

A. It’s usually acceptable to scrape public data for research or personal use, but make sure your usage complies with Apple’s Terms of Service. Avoid excessive request volumes and respect any usage restrictions.

Q: What if I get blocked or rate-limited?

A. If too many requests come from the same IP address or the traffic looks automated, a website may block your scraper or rate-limit it. To avoid such issues, you can use Crawlbase’s Crawling API and Smart AI Proxy. They include anti-block features like IP geolocation and rotation, which significantly reduce the chances of being blocked and enable more accurate data collection.
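On the client side, it is also common to retry a failed request after a growing pause instead of immediately hammering the server again. The sketch below shows one generic way to do that. It is not part of Crawlbase’s API; the names backoffDelayMs and withRetry, the attempt count, and the delay values are all assumptions chosen for illustration.

```javascript
// Delay before retry number `attempt` (0-based): 1s, 2s, 4s, ...
// capped at `maxMs`.
function backoffDelayMs(attempt, baseMs = 1000, maxMs = 30000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Default sleep implementation based on setTimeout.
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call an async `task` until it resolves or `maxAttempts` is reached,
// waiting a little longer after each failure.
async function withRetry(task, maxAttempts = 4, sleep = defaultSleep) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt += 1) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      if (attempt < maxAttempts - 1) {
        await sleep(backoffDelayMs(attempt));
      }
    }
  }
  throw lastError;
}
```

Any function that returns a promise can be wrapped this way, for example `withRetry(() => fetchPage(url))`, where `fetchPage` stands in for whatever request function your script uses.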