Refreshing product pages and copying numbers into spreadsheets used to be the norm. It worked, but it was slow and messy, and it was easy to miss what really mattered. Now we have better options. With Crawlbase extracting clean product data straight from websites and AI sorting through the noise, monitoring turns into something sharper. It evolves into a system that can flag sudden price shifts, warn you when inventory is slipping, and even spot trends before they’re obvious. In short, you can now detect product trends with web scraping instead of guesswork.

In this guide, we’ll build a tool that feels closer to a digital analyst working alongside you.

Table of Contents

- The AI Product Monitoring Tool Workflow

- Prerequisites

- Setting Up the Tools

- Fetch Product Data

- Analyze Data using Perplexity AI

- How to Generate an AI Analysis Report

- Automate & Schedule AI Reports

- Visualizing AI Results

- Wrapping Up

- Frequently Asked Questions

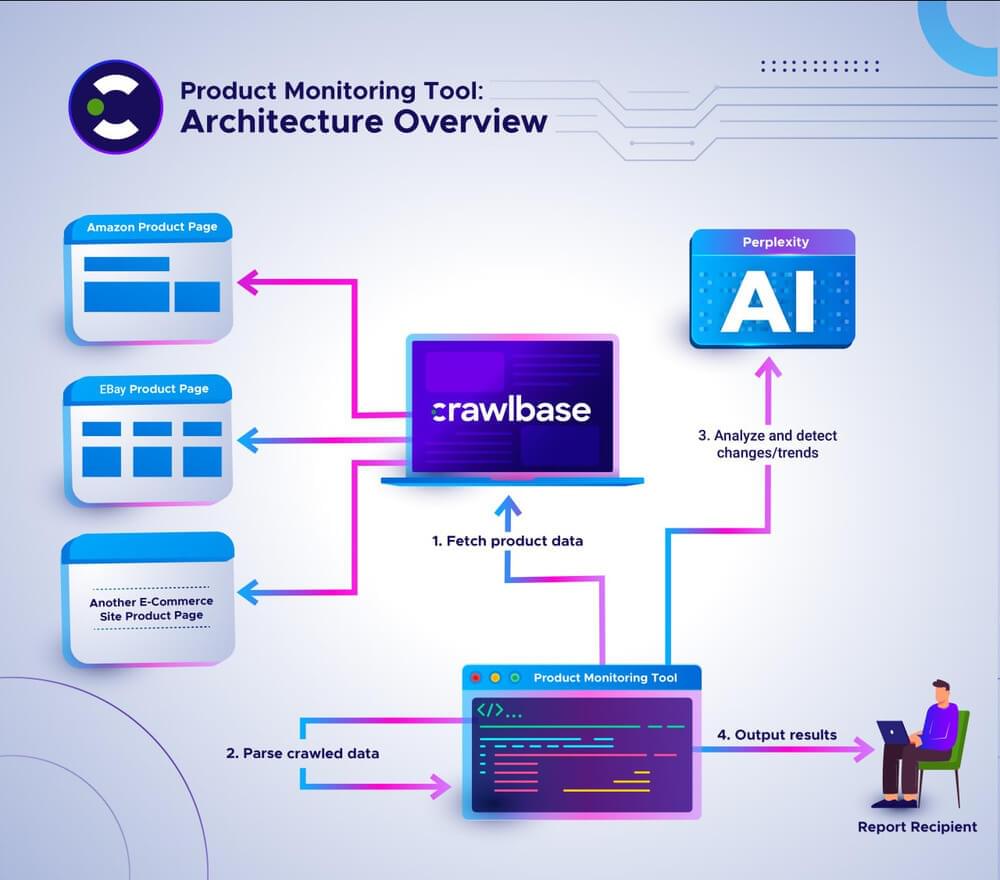

The AI Product Monitoring Tool Workflow

Think of the system we will build as a relay. Crawlbase grabs product pages from places like Amazon, eBay, or any other shop you point it to. That chunk of information then gets trimmed down so only the useful bits remain, like the price, the product name, and stock status. Once it’s cleaned up, the data moves over to Perplexity AI, which digs into it and notices things people might miss: a sudden price jump, stock slowly running out, or a trend starting to form.

Lastly, those insights are compiled and delivered as a report or an alert, allowing someone to take action on them.
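To make the relay concrete, here is a rough outline of the hand-offs as four small functions. Everything in it is a placeholder: the function names, the record fields, the canned page, and the single rule standing in for the AI step are assumptions for illustration, not the scripts we build later.

```python
def fetch_page(url):
    # Stand-in for the Crawlbase step: returns a canned page record.
    return {
        "url": url,
        "name": "Sample Widget",
        "price": "19.99",
        "in_stock": False,
        "extras": "navigation, ads, footer text",
    }


def trim(raw):
    # Keep only the useful bits: product name, price, and stock status.
    return {
        "name": raw["name"],
        "price": float(raw["price"]),
        "in_stock": raw["in_stock"],
    }


def analyze(record):
    # Stand-in for the AI step: one fixed rule instead of a model call.
    findings = []
    if not record["in_stock"]:
        findings.append("out of stock")
    return findings


def report(record, findings):
    # Turn the findings into a one-line alert someone can act on.
    status = "; ".join(findings) if findings else "nothing unusual"
    return f"{record['name']} at {record['price']:.2f}: {status}"


def run_pipeline(url):
    record = trim(fetch_page(url))
    return report(record, analyze(record))


if __name__ == "__main__":
    print(run_pipeline("https://shop.example/item/1"))
```

Each stage only depends on the output of the one before it, so any of them can be swapped out without touching the rest.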

Prerequisites

It helps to have a few things lined up before jumping into the build. You don’t need to be an expert, but a little groundwork will help a lot.

Skills to bring along

- Basic Python skills: Being able to read scripts, tweak functions, and run them without getting lost.

- Some comfort with REST APIs: You should be familiar with sending requests and checking responses.

- A rough idea of how AI models respond when you give them structured prompts.

Tools for Creating an AI Product Monitoring Solution

- A Crawlbase account with your Crawling API token.

- An API key from Perplexity AI.

- A local machine with Python installed.

Once these are in place, we can start building the scripts to extract product data, run it through AI, and let the system spot shifts and patterns for you.

Setting Up the Tools

Now, let’s get the environment ready. We’ll set up Crawlbase for scraping and Perplexity AI for analysis.

Crawlbase Setup

- Create an account at Crawlbase and log in.

- Copy your Crawling API normal request token. This is the one we’ll use in the script.

- New accounts include 1,000 free requests. If you add billing details before using them, you unlock an extra 9,000 free credits.

Perplexity AI Setup

Perplexity provides an OpenAI-compatible API, which makes integration simple.

- Grab your API key from the Perplexity account dashboard.

- In your Python code, configure the client like this:

```python
import openai
```

Keep your key private. Don’t commit it to GitHub or share it in public repos.
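One common way to keep the key out of your source files is to read it from an environment variable. The sketch below assumes a variable named PERPLEXITY_API_KEY (a name picked here for illustration) and the base URL https://api.perplexity.ai, which you should confirm in Perplexity's documentation. It only prepares the settings, so it runs without any network call.

```python
import os

# Assumption: the key is exported as PERPLEXITY_API_KEY; the name is illustrative.
ENV_VAR = "PERPLEXITY_API_KEY"
# Assumption: Perplexity's OpenAI-compatible base URL; confirm it in their docs.
BASE_URL = "https://api.perplexity.ai"


def load_client_settings(env=None):
    """Return keyword arguments for an OpenAI-compatible client."""
    env = os.environ if env is None else env
    api_key = env.get(ENV_VAR, "").strip()
    if not api_key:
        raise RuntimeError(f"Set {ENV_VAR} before running the script")
    return {"api_key": api_key, "base_url": BASE_URL}


if __name__ == "__main__":
    try:
        settings = load_client_settings()
        print("Client settings ready for", settings["base_url"])
    except RuntimeError as exc:
        print(exc)
```

With a recent version of the openai package installed, these settings should be usable as `openai.OpenAI(**load_client_settings())`.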

Step 1: Fetch Product Data

Before we can track anything, we need a way to extract product info from a shop’s website. That’s basically the first piece of the puzzle. So to start, copy the script below. Save it as crawling.py and we’ll build on it later.

```python
from requests.exceptions import RequestException
```

Make sure to replace the placeholder <Crawlbase Normal requests token> with your actual Crawlbase token.
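If you want to see the shape of the request on its own, the sketch below builds a Crawling API URL from a token and a product page address. It uses only the standard library rather than the requests package, and the helper names build_crawl_url and fetch_html are made up for this example. The main point is that the target URL has to be percent-encoded before it goes into the query string.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

CRAWLBASE_ENDPOINT = "https://api.crawlbase.com/"


def build_crawl_url(token, product_url):
    # urlencode percent-encodes the target URL so its own query string survives.
    return CRAWLBASE_ENDPOINT + "?" + urlencode({"token": token, "url": product_url})


def fetch_html(token, product_url, timeout=90):
    """Fetch the page through Crawlbase and return the body as text."""
    with urlopen(build_crawl_url(token, product_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Placeholder token and URL; nothing is sent over the network here.
    print(build_crawl_url("YOUR_TOKEN", "https://shop.example/item?id=1"))
```

Calling fetch_html does make a real request, so it spends one of your Crawlbase credits each time.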

Step 2: Analyze Data using Perplexity AI

Numbers alone don’t mean much unless we make sense of them. That’s where Perplexity comes in. We’ll add a function that fetches the product records, performs a quick check to ensure everything is in order, and then shows useful stats like total records, earliest and latest dates, price ranges, and even variance.

Create a new file called perplexity_ai.py and drop the script in there.

```python
import json
```

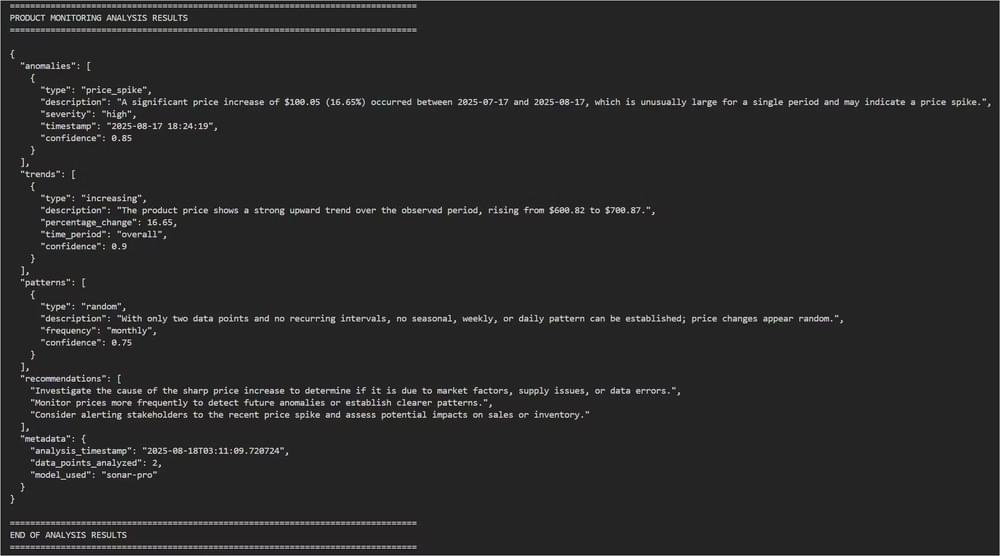

This script turns raw product price data into a structured dataset, asks an AI model to analyze it for anomalies, trends, and patterns, and then returns those insights in JSON, all while handling errors safely.
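The summary stats mentioned earlier (total records, earliest and latest dates, price range, variance) could be computed along these lines. This is a minimal sketch: the row shape with 'date' and 'price' keys is an assumption, and it reports population variance, which may differ from what the full script uses.

```python
from statistics import mean, pvariance


def summarize(records):
    """Return basic stats for rows shaped like {'date': 'YYYY-MM-DD', 'price': 9.99}."""
    if not records:
        return {"total_records": 0}
    prices = [float(r["price"]) for r in records]
    dates = sorted(r["date"] for r in records)  # ISO dates sort correctly as strings
    return {
        "total_records": len(records),
        "earliest_date": dates[0],
        "latest_date": dates[-1],
        "min_price": min(prices),
        "max_price": max(prices),
        "mean_price": round(mean(prices), 2),
        "variance": round(pvariance(prices), 2),
    }


if __name__ == "__main__":
    sample = [
        {"date": "2025-01-03", "price": 14},
        {"date": "2025-01-01", "price": 10},
        {"date": "2025-01-02", "price": 12},
    ]
    print(summarize(sample))
```

Handing the model a compact summary like this alongside the raw rows gives it fixed numbers to anchor on.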

Keep in mind that the prompt isn’t fixed. You can play around with how it’s worded, shorten it, or even ask for different angles depending on what you’re trying to get out of it. All you really have to do is tweak the text inside the code, and the AI will adjust its response accordingly.
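As a rough illustration of what tweaking the prompt can look like, the sketch below keeps the wording in one function with a focus parameter, and parses the reply defensively. The function names, the JSON keys requested in the prompt, and the fence-stripping step are all assumptions for this example rather than the exact code in perplexity_ai.py.

```python
import json

FENCE = "`" * 3  # Markdown code fence some models wrap around JSON


def build_prompt(records, focus="anomalies, trends, and patterns"):
    """Compose the analysis prompt; change `focus` or the wording to steer the model."""
    data = json.dumps(records, indent=2)
    return (
        f"Analyze the product price data below for {focus}.\n"
        "Respond with JSON only, using the keys 'anomalies', 'trends', and 'summary'.\n\n"
        f"{data}"
    )


def parse_ai_json(text):
    """Parse the model's reply; return an error dict instead of raising."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the surrounding backticks and an optional 'json' language tag.
        cleaned = cleaned.strip("`").strip()
        if cleaned.lower().startswith("json"):
            cleaned = cleaned[4:]
    try:
        return json.loads(cleaned)
    except json.JSONDecodeError as exc:
        return {"error": f"Could not parse AI response: {exc}"}


if __name__ == "__main__":
    rows = [{"product": "Sample Widget", "date": "2025-01-01", "price": 19.99}]
    print(build_prompt(rows, focus="sudden price jumps"))
```

Asking for a different angle is then a one-argument change, for example `build_prompt(rows, focus="stock levels")`.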

Step 3: How to Generate an AI Analysis Report

Before we can run the code, we need some test data. Follow the steps below:

- Get the sample script from the GitHub repository and save it as dummy_data.py to populate the database.

- Execute the script by running:

```shell
python dummy_data.py
```

This should insert records in your database like this:

- Once the dummy data has been inserted, copy the script below and save it as price_monitoring.py:

```python
from database import query_products
```

With everything in place, run the code:

```shell
python price_monitoring.py
```

Here is the sample output produced by Perplexity AI:

Step 4: Automate & Schedule AI Reports

For the demo, we kept things lightweight with a small script called schedule.py (you can find it in the GitHub repo). It’s set up to run once a day at 10:00 AM. To kick it off manually, you’d just run:

```shell
python schedule.py
```

If you want something that won’t miss a beat in real-world use, you’ll want to use your system’s native scheduler. On Linux, that usually means setting up a cron job. On Windows, you’d probably go with Task Scheduler.
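For a feel of what "once a day at 10:00 AM" means in code, here is a small sketch that works out the next run time. It is not the schedule.py from the repo, just an illustration, and the cron line in the comment uses placeholder paths you would need to replace.

```python
from datetime import datetime, timedelta


def next_run(now, hour=10, minute=0):
    """Return the next datetime at hour:minute strictly after `now`."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has passed, use tomorrow
    return candidate


# A cron entry for the same daily 10:00 run could look like this
# (interpreter and script paths are placeholders):
#   0 10 * * * /usr/bin/python3 /path/to/price_monitoring.py

if __name__ == "__main__":
    now = datetime.now()
    print("Next report due at", next_run(now).isoformat(timespec="minutes"))
```

A native scheduler has the advantage that the job still fires after a reboot, which a long-running Python loop will not do on its own.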

Step 5: Visualizing AI Results

Pulling prices is only half the job. The tougher part is figuring out what they actually say when you look at the whole set. That’s where a few visuals can make life much easier.

If you want something slick and interactive, a lightweight dashboard with Plotly or Bokeh does the trick. You get graphs you can hover over, zoom in, and drop straight into a web page.

Alternatively, if you want something quicker, use Matplotlib or Seaborn. They’ve been around forever, are endlessly flexible, and you can dress them up to fit the look you’re going for.
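A quick Matplotlib version might look like the sketch below: one line per product, saved to an image file. The row shape ('product', 'date', 'price') and the function names are assumptions for this example, and Matplotlib is imported inside the plotting function so the grouping helper works even where it is not installed.

```python
from collections import defaultdict


def to_series(records):
    """Group rows into {product: (dates, prices)}, with each series sorted by date."""
    grouped = defaultdict(list)
    for row in records:
        grouped[row["product"]].append((row["date"], float(row["price"])))
    series = {}
    for product, points in grouped.items():
        points.sort()
        series[product] = ([d for d, _ in points], [p for _, p in points])
    return series


def plot_prices(records, path="price_history.png"):
    """Draw one line per product and save the chart to `path`."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file without needing a display
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(8, 4))
    for product, (dates, prices) in to_series(records).items():
        ax.plot(dates, prices, marker="o", label=product)
    ax.set_xlabel("Date")
    ax.set_ylabel("Price")
    ax.set_title("Price history by product")
    ax.legend()
    fig.autofmt_xdate()  # tilt the date labels so they do not overlap
    fig.savefig(path)
    plt.close(fig)
    return path
```

Calling `plot_prices(rows)` with your own rows writes price_history.png to the current directory.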

If you don’t feel like staring at numbers or bars too long, you can even lean on AI. Services like Perplexity will churn out short, plain-English summaries of your dataset. That way, instead of sifting through a wall of values, you’ll have a couple of clear, high-level takeaways right next to your visuals.

Wrapping Up

Scraping a page is only the starting point. Once you bring Crawlbase together with Python and a bit of AI, the whole process turns into something much bigger: you’re not just collecting data, you’re shaping it into insights you can act on.

If you’re curious to see what this looks like in the real world, it’s worth testing it on your competitors. With Crawlbase handling the complexity, you can pull the data you need and let AI point out the shifts in the market before anyone else notices. Give Crawlbase a try today and put yourself a step ahead.

Frequently Asked Questions

Q. What if my requests keep getting blocked?

A. Getting blocked is one of the biggest roadblocks in web scraping. With Crawlbase, this is handled automatically through rotating IP addresses and fresh user agents. Each request looks unique, just like a real visitor, which keeps your crawl running and your success rate high.

Q. What happens if a website changes its layout or data structure?

A. Websites evolve; they update layouts and HTML structures often. When this happens, your existing selectors may stop working. The fix is usually quick: adjust your selectors to the new structure. Because Crawlbase always returns the complete HTML, you don’t need to rebuild your crawler from scratch; just fine-tune the parsing logic.
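One way to keep that fix quick is to hold the parsing rules in a single list and try them in order. The sketch below uses regular expressions from the standard library as a simplified stand-in for real selectors, and the class names price-current and price are invented for the example.

```python
import re

# Hypothetical patterns for one shop. When the layout changes, add the new
# pattern at the top instead of touching the rest of the crawler.
PRICE_PATTERNS = [
    r'<span[^>]*class="[^"]*\bprice-current\b[^"]*"[^>]*>\s*\$?(\d[\d.,]*)',
    r'<span[^>]*class="[^"]*\bprice\b[^"]*"[^>]*>\s*\$?(\d[\d.,]*)',
]


def extract_price(html, patterns=PRICE_PATTERNS):
    """Try each pattern in order; return the first price found, or None."""
    for pattern in patterns:
        match = re.search(pattern, html)
        if match:
            # Drop thousands separators before converting to a number.
            return float(match.group(1).replace(",", ""))
    return None


if __name__ == "__main__":
    print(extract_price('<span class="price">$1,299.00</span>'))
```

A return value of None is a useful signal in itself: it usually means the layout changed and the list needs a new entry.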

Q. Can AI models “hallucinate” when analyzing scraped data?

A. Sometimes AI generates responses that read well but don’t actually match the underlying data. The best way to avoid this is by giving the model clear, structured instructions and limiting open-ended creativity. Prompts that request specific outputs, such as a summary or a table, usually keep the AI aligned with your actual dataset.
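Beyond tighter prompts, you can also cross-check what the model reports against the rows you actually sent. The sketch below assumes the AI returns anomalies as dictionaries with 'product', 'date', and 'price' keys, which is an illustrative shape rather than a guaranteed one.

```python
def check_claims(anomalies, records, tolerance=0.01):
    """Split AI-reported anomalies into (verified, unverified) lists.

    An anomaly counts as verified only if the dataset has a row with the same
    product and date whose price matches within `tolerance`.
    """
    known = {(r["product"], r["date"]): float(r["price"]) for r in records}
    verified, unverified = [], []
    for item in anomalies:
        actual = known.get((item.get("product"), item.get("date")))
        claimed = item.get("price")
        if (
            actual is not None
            and isinstance(claimed, (int, float))
            and abs(actual - claimed) <= tolerance
        ):
            verified.append(item)
        else:
            unverified.append(item)
    return verified, unverified


if __name__ == "__main__":
    data = [{"product": "A", "date": "2025-01-01", "price": 10.0}]
    claims = [
        {"product": "A", "date": "2025-01-01", "price": 10.0},
        {"product": "A", "date": "2025-01-02", "price": 99.0},
    ]
    print(check_claims(claims, data))
```

Anything that lands in the unverified list is worth a second look before it goes into a report.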