In the modern web, many sites use AJAX (Asynchronous JavaScript and XML) to improve the user experience by loading content dynamically. Instead of refreshing the whole page, AJAX updates parts of it in response to user actions such as scrolling or clicking. This makes for a smoother, faster experience, but it also makes the data on these sites harder to scrape.

When you try to scrape an AJAX site, you may find that the data you need isn’t in the static HTML. It’s loaded later by JavaScript, so it doesn’t appear when you view the page source. To extract it, you need techniques that either replicate those background requests or run the page’s JavaScript.

In this blog, we will walk you through the entire process of scraping data from AJAX-driven websites, from understanding AJAX to using powerful tools like Python and Crawlbase Smart AI Proxy to optimize your scraper and avoid common issues like being blocked.

Let’s get started!

Table of Contents

- Challenges of Scraping AJAX Websites
- Techniques for Scraping AJAX Websites
  - Replicating AJAX Calls
  - Rendering JavaScript with Headless Browsers
- Tools for Scraping AJAX Data
  - Python: Requests and BeautifulSoup
  - Selenium for Browser Automation
- Scraping AJAX Pages: Step-by-Step Guide
  - Setting Up Your Scraper
  - Identifying AJAX Requests
  - Replicating AJAX Request in Scraper
  - Parse the Response
  - Storing Data in JSON Files
- Optimizing Your Scraper with Crawlbase Smart AI Proxy
- Final Thoughts
- Frequently Asked Questions

Challenges of Scraping AJAX Websites

Scraping AJAX-driven websites can be tricky, especially for beginners. Because AJAX loads content dynamically, the data you want usually isn’t in the initial HTML source when you first open a page. You need tools and techniques that deal with dynamically loaded content and reproduce what the browser does in response to user interactions.

AJAX works by sending requests to the server in the background and updating only specific parts of the page. For example, when you scroll down a product page, more items load; when you click a button, new content appears. This is great for users but hard for traditional scrapers, because the data arrives in separate requests, often after the initial page has finished loading.

When you try to scrape such a site, the static HTML you get contains only the basic layout and elements, not the data loaded through AJAX calls, so a simple request for the page URL won’t work. Instead, you need to mimic the AJAX requests the page makes, or let a browser run its JavaScript for you.

Techniques for Scraping AJAX Websites

Scraping AJAX websites requires special techniques because the content loads after the initial page load. There are two main approaches.

Replicating AJAX Calls

Replicating AJAX calls is the simplest way to get the dynamic content directly from the server.

- How it works: Use your browser’s Developer Tools to find the AJAX request URLs and parameters. These requests run in the background, and you can replicate them in your scraper to get the data directly.

- Why it helps: It’s faster because you don’t need to render the whole page; you fetch the data itself, often as structured JSON.
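As a rough sketch of this technique, the snippet below replays a background request with Requests. The endpoint `https://example.com/api/products`, its `page`/`per_page` parameters, and the header values are hypothetical placeholders; copy the real ones from the request you find in Developer Tools. Some endpoints check headers such as `X-Requested-With` or `Referer`, which is why the sketch sends them along.

```python
import requests

# Hypothetical endpoint, parameters, and header values: copy the real ones
# from the request you found in the Network tab.
AJAX_URL = "https://example.com/api/products"
HEADERS = {
    "Accept": "application/json",
    # Many XHR calls send these; some servers reject requests that lack them.
    "X-Requested-With": "XMLHttpRequest",
    "Referer": "https://example.com/products",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
}


def ajax_params(page: int, per_page: int = 20) -> dict:
    """Query parameters the page's JavaScript would send for one batch of results."""
    return {"page": page, "per_page": per_page}


def fetch_page(session: requests.Session, page: int) -> dict:
    """Replay the background request for `page` and return the decoded JSON."""
    response = session.get(AJAX_URL, params=ajax_params(page), headers=HEADERS, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error body
    return response.json()
```

In a script you would create one `requests.Session()` and call `fetch_page(session, 1)`, `fetch_page(session, 2)`, and so on, stopping at whatever signals the end for that API (for example, an empty page). Reusing the session keeps any cookies the endpoint may rely on.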

Rendering JavaScript with Headless Browsers

Headless browsers, controlled by automation tools such as Selenium, run a page’s JavaScript just like a regular browser, so AJAX content gets loaded and rendered.

- How it works: A headless browser simulates real user actions like scrolling or clicking to trigger AJAX requests and load content.

- Why it helps: This is useful when a website requires user interactions or complex JavaScript to load data.

These methods help you get around the challenges of scraping AJAX websites. In the next section, we’ll look at the tools you can use to apply them.

Tools for Scraping AJAX Data

To scrape data from AJAX websites, you need the right tools. Here’s a list of some of the most popular tools for scraping AJAX data.

Python: Requests and BeautifulSoup

Python is a popular language for web scraping because it’s easy to learn and has excellent libraries. For scraping AJAX pages, two libraries stand out:

- Requests: This lets you make HTTP requests to websites and get the content. It’s easy to use and works well for replicating AJAX calls once you know the request URLs.

- BeautifulSoup: After fetching the HTML content, BeautifulSoup helps you parse and extract the data you need. It’s great for navigating the HTML structure and pulling out specific elements like product names, prices, or other dynamic content.

Using Requests with BeautifulSoup is a great choice for basic AJAX scraping, especially when you can directly replicate AJAX requests.
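Some AJAX endpoints return ready-made HTML fragments rather than JSON. In that case the two libraries combine naturally: Requests fetches the fragment and BeautifulSoup parses it. The sketch below shows only the parsing half on an inline sample; the markup and the `li.product`, `.name`, and `.price` class names are hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment, the kind an endpoint such as /api/products?page=2 might return.
SAMPLE_FRAGMENT = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></li>
  <li class="product"><span class="name">Notebook</span><span class="price">$3.50</span></li>
</ul>
"""


def parse_products(html: str) -> list[dict]:
    """Extract name/price pairs from a product-list fragment."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no lxml needed
    products = []
    for item in soup.select("li.product"):
        name = item.select_one(".name")
        price = item.select_one(".price")
        products.append({
            # Missing sub-elements become None instead of raising AttributeError.
            "name": name.get_text(strip=True) if name else None,
            "price": price.get_text(strip=True) if price else None,
        })
    return products
```

With a live endpoint you would pass `response.text` from your `requests.get(...)` call instead of the sample string.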

Selenium for Browser Automation

For complex websites that rely heavily on JavaScript, Selenium is a more powerful tool. Requests only downloads the raw response and never runs JavaScript; Selenium drives a real browser, so you can interact with a website the way a human would.

- How it works: Selenium automates browser actions like clicking buttons or scrolling down pages, which can trigger AJAX requests to load more data.

- Why it helps: It’s suitable for scraping websites where content loads dynamically as a result of user interactions, like infinite scrolling or interactive maps.

Selenium provides the ability to handle JavaScript-rendered pages, making it ideal for more complex scraping tasks.
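As an illustration of the infinite-scrolling case, the helper below keeps scrolling to the bottom until the page height stops growing, a common heuristic (not a guarantee) that no more AJAX batches are coming. It relies only on `driver.execute_script`, so it works with any Selenium WebDriver; the `pause` and `max_rounds` defaults are arbitrary and should be tuned per site.

```python
import time


def scroll_until_stable(driver, pause: float = 2.0, max_rounds: int = 20) -> int:
    """Scroll to the bottom repeatedly until the document height stops changing.

    Returns the number of scrolls performed. `pause` gives the page's AJAX
    requests time to finish before the height is measured again.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for rounds in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return rounds  # nothing new loaded: assume we reached the end
        last_height = new_height
    return max_rounds  # safety cap for feeds that never end
```

With a real browser you would call `driver.get(url)`, then `scroll_until_stable(driver)`, and finally read `driver.page_source` once all batches have loaded.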

Scraping AJAX Pages: Step-by-Step Guide

Scraping AJAX-driven sites might seem complicated, but with the right approach and tools, it’s doable. In this section, we’ll walk through the process step by step, using a real example: fetching real-time billionaire data from Forbes through an AJAX endpoint.

1. Setting Up Your Scraper

Before you start scraping, install a few essential Python libraries. For scraping AJAX content, the most commonly used are:

- Requests: To fetch web pages and make AJAX calls.

- BeautifulSoup: To parse and extract data from the page.

Install these libraries using pip:

```bash
pip install requests beautifulsoup4
```

Once installed, import them into your script and set up the initial structure to begin your scraping journey.

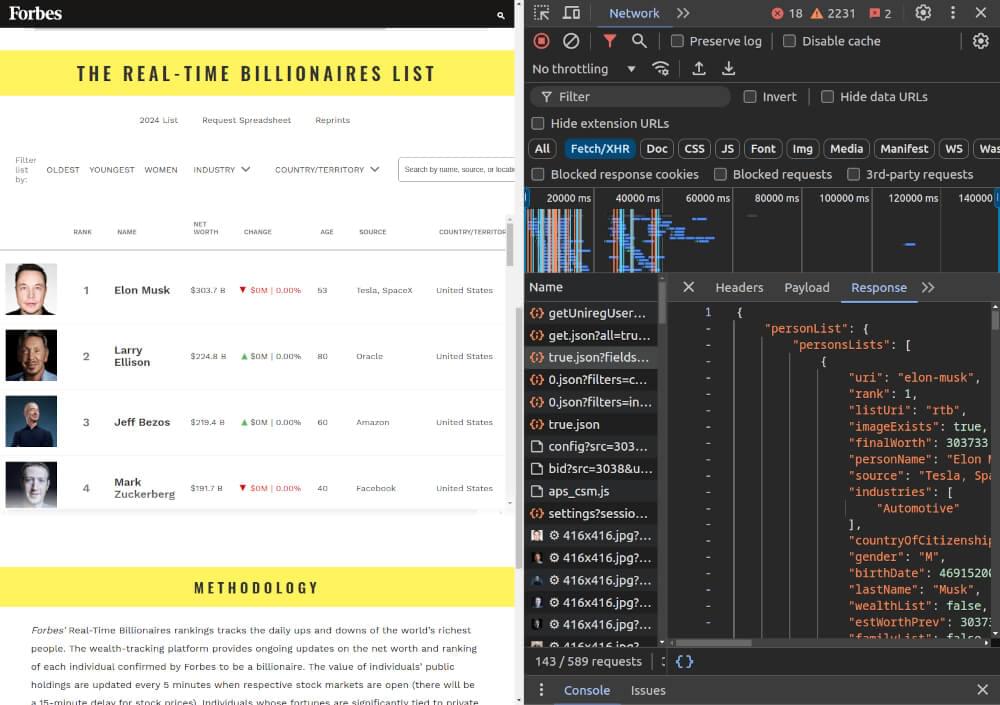

2. Identifying AJAX Requests

The first task is to identify the AJAX requests that load dynamic content. Here’s how to do that:

- Open the website in Google Chrome. In this example, we use the Forbes Real-Time Billionaires list.

- Right-click on the page and select Inspect, or press Ctrl+Shift+I (Cmd+Option+I on macOS) to open Developer Tools.

- Go to the Network tab and filter by Fetch/XHR, which shows AJAX requests.

- Refresh the page and watch for new requests in the list. Click one and check its Preview or Response tab to see whether it carries the data you want.

The data is available through an AJAX API endpoint. Here’s the API URL:

```
https://www.forbes.com/forbesapi/person/rtb/0/-estWorthPrev/true.json?fields=rank,uri,personName,lastName,gender,source,industries,countryOfCitizenship,birthDate,finalWorth,est
```

This endpoint returns data about billionaires, such as their name, rank, wealth, and other details. To fetch data, we simply need to make an HTTP request to this URL.
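Before hard-coding a copied endpoint, it can help to split it into its base URL and query parameters so you can see, trim, or change the requested fields. The sketch below does this with Python’s standard `urllib.parse`, using the Forbes base path shown above. The four fields it keeps (`rank`, `personName`, `finalWorth`, `countryOfCitizenship`) come from that URL’s `fields` list and are simply the ones this walkthrough prints; whether the API honors a shorter `fields` list is an assumption to verify.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE_URL = "https://www.forbes.com/forbesapi/person/rtb/0/-estWorthPrev/true.json"
WANTED_FIELDS = ["rank", "personName", "finalWorth", "countryOfCitizenship"]


def query_fields(url: str) -> list[str]:
    """Return the comma-separated `fields` parameter of a copied XHR URL as a list."""
    params = parse_qs(urlsplit(url).query)
    return params.get("fields", [""])[0].split(",")


def build_endpoint(fields: list[str]) -> str:
    """Rebuild the endpoint URL with only the requested fields."""
    # safe="," keeps the commas readable instead of encoding them as %2C.
    return f"{BASE_URL}?{urlencode({'fields': ','.join(fields)}, safe=',')}"
```

Calling `query_fields` on the full URL above lists every field the page asks for, which makes it easy to spot what you can drop.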

3. Replicating AJAX Request in Scraper

Now that we know the AJAX endpoint, we can replicate this request in our scraper. If you’re using Requests, the following code will help you fetch the data:

```python
import requests
```
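Here is a minimal sketch of that fetch step, assuming the endpoint answers a plain GET with JSON and accepts a browser-like `User-Agent` (the header values are assumptions; some APIs refuse the default Requests user agent). The optional `session` argument lets the function reuse a `requests.Session` or be tested without network access.

```python
import requests

# Endpoint copied from the Network tab (the URL shown above).
API_URL = (
    "https://www.forbes.com/forbesapi/person/rtb/0/-estWorthPrev/true.json"
    "?fields=rank,uri,personName,lastName,gender,source,industries,"
    "countryOfCitizenship,birthDate,finalWorth,est"
)
# Assumption: browser-like headers; the default "python-requests/x.y" agent is sometimes refused.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept": "application/json",
}


def fetch_billionaires(session=None, url=API_URL):
    """GET the AJAX endpoint and return the decoded JSON body as Python objects."""
    get = session.get if session is not None else requests.get
    response = get(url, headers=HEADERS, timeout=15)
    response.raise_for_status()  # raise on 403/429/5xx instead of parsing an error page
    return response.json()


# Usage (performs a real network request):
# data = fetch_billionaires()
```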

4. Parse the Response

Once we have the response, we need to parse the JSON data to extract useful information. The response will include a list of billionaires, with details like their name, rank, wealth, and more. Here’s how to access the relevant information:

```python
# Parse the JSON response
```

This code prints the rank, name, net worth, and country of each billionaire in the dataset, one per line, for example:

```
1. Elon Musk - 303733.071 - United States
```
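A sketch of a parsing step that prints lines in the format above. The nesting it expects (a `personList` object holding a `personsLists` array) is an assumption about the Forbes payload, not something shown in this post, so confirm the actual keys in the Preview tab of Developer Tools and adjust `extract_people` if they differ. The field names themselves come from the endpoint’s `fields` parameter.

```python
def extract_people(data: dict) -> list[dict]:
    """Pull the list of person records out of the decoded JSON.

    Assumption: records live at data["personList"]["personsLists"].
    Returns an empty list if either key is missing.
    """
    return data.get("personList", {}).get("personsLists", [])


def format_person(person: dict) -> str:
    """Format one record as 'rank. name - finalWorth - country'."""
    return (
        f"{person.get('rank')}. {person.get('personName')} - "
        f"{person.get('finalWorth')} - {person.get('countryOfCitizenship')}"
    )


def print_billionaires(data: dict) -> None:
    """Print one formatted line per billionaire."""
    for person in extract_people(data):
        print(format_person(person))
```

Using `.get()` throughout means a record with a missing field prints `None` for that value instead of stopping the whole run with a `KeyError`.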

5. Storing Data in JSON Files

After extracting the necessary information, you may want to store it for later use. To save the data in a JSON file, use the following code:

```python
import json
```

This will create a billionaires_data.json file that stores all the extracted data in a readable format.
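A minimal sketch of that save step using only the standard `json` module. `indent=2` produces the readable layout, and `ensure_ascii=False` keeps non-ASCII names (accents, non-Latin scripts) as-is instead of `\u` escapes; the file is opened as UTF-8 so those characters are stored correctly.

```python
import json
from pathlib import Path


def save_json(records, path="billionaires_data.json") -> Path:
    """Write `records` to `path` as pretty-printed UTF-8 JSON and return the path."""
    out = Path(path)
    with out.open("w", encoding="utf-8") as f:
        json.dump(records, f, indent=2, ensure_ascii=False)
    return out
```

Passing the list of records you extracted in the previous step, for example `save_json(records)`, writes them to `billionaires_data.json` in the current directory.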

In the next section, we’ll discuss how to optimize your scraper with Crawlbase Smart AI Proxy to avoid being blocked while scraping.

Optimizing Your Scraper with Crawlbase Smart AI Proxy

When scraping AJAX websites, issues like IP blocking and rate-limiting can disrupt your efforts. Crawlbase Smart AI Proxy helps solve these problems by managing IP rotation and keeping your scraper anonymous. Here’s how it can optimize your scraping:

1. Avoid IP Blocks and Rate Limits

Crawlbase rotates IP addresses, so your requests appear to come from different users. This keeps your scraper from getting blocked for sending too many requests from one IP.

2. Geo-targeting for Accurate Data

You can choose specific locations for your requests, ensuring that the content you scrape is relevant and region-specific.

3. Bypass CAPTCHAs and Anti-bot Measures

Crawlbase’s integration with CAPTCHA-solving tools helps your scraper bypass common anti-bot protections without manual intervention.

4. Easy Setup and Integration

To use Crawlbase Smart AI Proxy, configure it as the proxy in your HTTP client, authenticating with your unique Crawlbase token, and send your requests as usual:

```python
import requests
```

Note: You can get your token by creating an account on Crawlbase. New accounts get 5,000 free credits, and no credit card is required for the free trial.

This simple setup allows you to seamlessly rotate IPs and avoid scraping roadblocks.
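A hedged sketch of that setup with Requests. The proxy host and port (`smartproxy.crawlbase.com:8012`), sending the token as the proxy username, and disabling certificate verification are assumptions; confirm all three in the Crawlbase documentation or your dashboard before relying on them. Keep the token out of source code, for example by reading it from an environment variable.

```python
import requests

# Assumption: Smart AI Proxy host and port; confirm them in your Crawlbase dashboard.
PROXY_HOST = "smartproxy.crawlbase.com:8012"


def crawlbase_proxies(token: str, host: str = PROXY_HOST) -> dict:
    """Build a Requests `proxies` mapping that sends the token as the proxy username."""
    proxy_url = f"http://{token}:@{host}"
    # Route both http:// and https:// target URLs through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url: str, token: str) -> requests.Response:
    """GET `url` through the proxy.

    verify=False is an assumption: proxies that inspect HTTPS traffic present
    their own certificate, which would otherwise fail verification.
    """
    return requests.get(url, proxies=crawlbase_proxies(token), verify=False, timeout=60)
```

You would call `fetch_via_proxy` with the Forbes endpoint URL shown earlier and your token. With `verify=False`, Requests emits an `InsecureRequestWarning`; drop the argument if your requests succeed without it.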

Final Thoughts

Scraping AJAX sites is tricky, but with the right techniques and tools, it’s doable. By understanding how AJAX works and using tools like Requests and Selenium with headless browsers, you can get the data you need.

Optimizing your scraper with Crawlbase Smart AI Proxy also helps you scrape reliably, with far fewer IP blocks and CAPTCHAs. That makes your scraper more efficient and saves the time you would otherwise spend dealing with interruptions.

Remember to always respect the terms of service of websites you’re scraping and ensure ethical scraping practices. With the right approach, scraping AJAX websites can be a powerful tool for gathering valuable data for your projects.

Frequently Asked Questions

Q. What is AJAX, and why is it challenging to scrape data from AJAX websites?

AJAX (Asynchronous JavaScript and XML) is a technique websites use to update parts of a page without reloading the whole page. It’s harder to scrape because the data isn’t in the initial HTML the way it is on a static page; it’s loaded in the background through separate requests. To scrape AJAX websites, you need to find those requests and replicate them, which is more involved than scraping static pages.

Q. How can I scrape AJAX content without using a browser?

You can scrape AJAX content without a browser by analyzing the website’s network traffic and finding the API endpoints it uses to load the data. You can then use tools like Python’s requests library to make the same API calls and get the data; you just need to replicate the headers and request parameters correctly. Some websites, however, require JavaScript rendering, in which case you need a browser automation tool such as Selenium or Puppeteer to load the page in a headless browser and scrape the rendered content.

Q. How does Crawlbase Smart AI Proxy help in AJAX scraping?

Crawlbase Smart AI Proxy helps you scrape more efficiently by managing your IP addresses and handling CAPTCHAs and rate limits. It routes your requests through rotating proxies, so they appear to come from different IPs and are much less likely to be blocked or throttled while you scrape AJAX data. This makes your scraping process more reliable and reduces interruptions.