Are you interested in unlocking the hidden insights within Amazon’s vast product database? If so, you’ve come to the right place. In this step-by-step Amazon data scraping guide, we will walk you through the process of scraping Amazon product data and harnessing its power for business growth. We’ll cover everything from understanding the importance of product data to handling CAPTCHAs and anti-scraping measures. So grab your tools and get ready to dive into the world of Amazon data scraping.

We will use the Crawlbase Crawling API alongside JavaScript to scrape Amazon data efficiently. JavaScript's ability to work with web elements, paired with the API's handling of anti-scraping mechanisms, keeps the data collection process smooth. The end result will be a wealth of Amazon product data, neatly organized in both HTML and JSON formats.

Ready to get started? Sign up for Crawlbase — your first 1,000 requests are completely free.

If you’re looking for a ready-to-use solution, check out our Amazon Scraper tool.

Table of Contents

- How to Scrape Amazon Data

- Scrape Key Amazon Product Data Content with Crawlbase Scrapers

- Scrape Amazon Product Reviews with Crawlbase’s Integrated Scraper

- Overcome Amazon Data Scraping Challenges with Crawlbase

- Applications of Amazon Scraper

- Create Amazon Scraper with Crawlbase

- Frequently Asked Questions

How to Scrape Amazon Data with JavaScript (Code Tutorial)

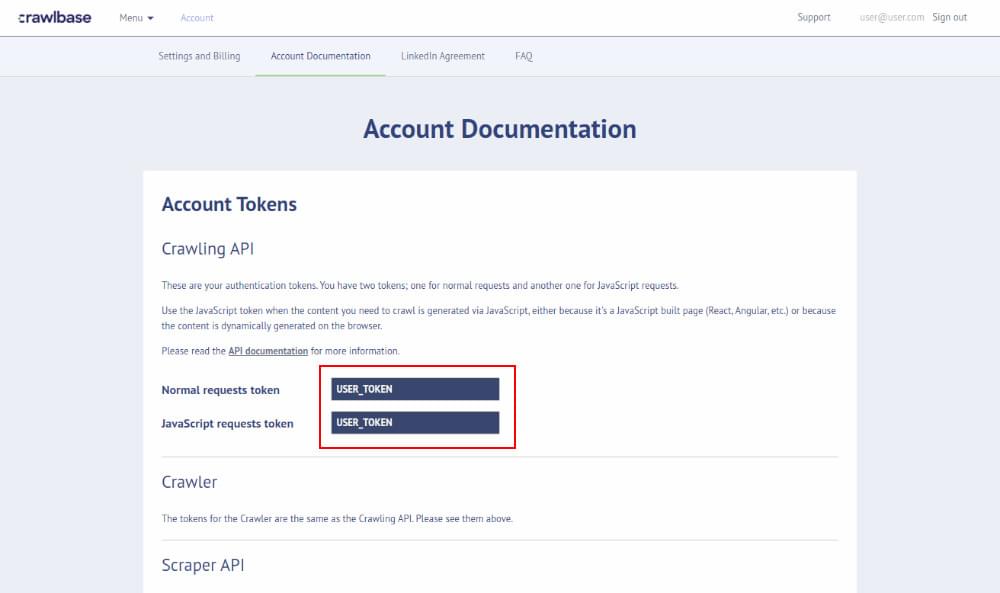

Step 1: Sign up for Crawlbase and get your private token. You can find this token in the account documentation section of your Crawlbase account.

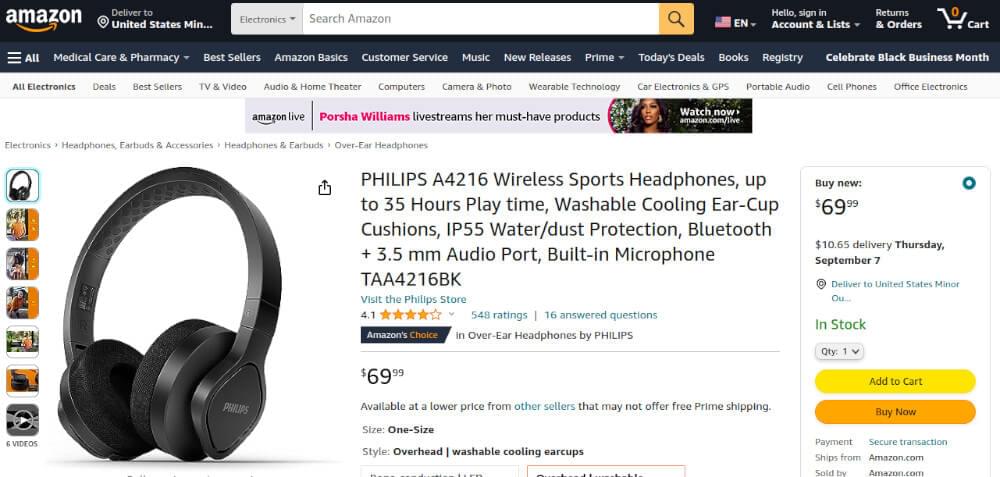

Step 2: Choose the specific Amazon product page that you want to scrape. For this purpose, we chose the Amazon product page for PHILIPS A4216 Wireless Sports Headphones. It’s essential to select a product page with different elements to showcase the versatility of the scraping process.

Step 3: Install the Crawlbase Node.js library.

First, confirm that Node.js is installed on your system. If it is not, download and install it from the official Node.js website. Then install the Crawlbase Node.js library via npm:

npm i crawlbase

Step 4: Create a file named amazon-product-page-scraper.js with the following command:

touch amazon-product-page-scraper.js

Step 5: Configure the Crawlbase Crawling API. This involves setting up the necessary parameters and endpoints for the API to function. Paste the following script into the amazon-product-page-scraper.js file you created in Step 4. To run the script, enter the command node amazon-product-page-scraper.js in the terminal:

// Import the Crawling API

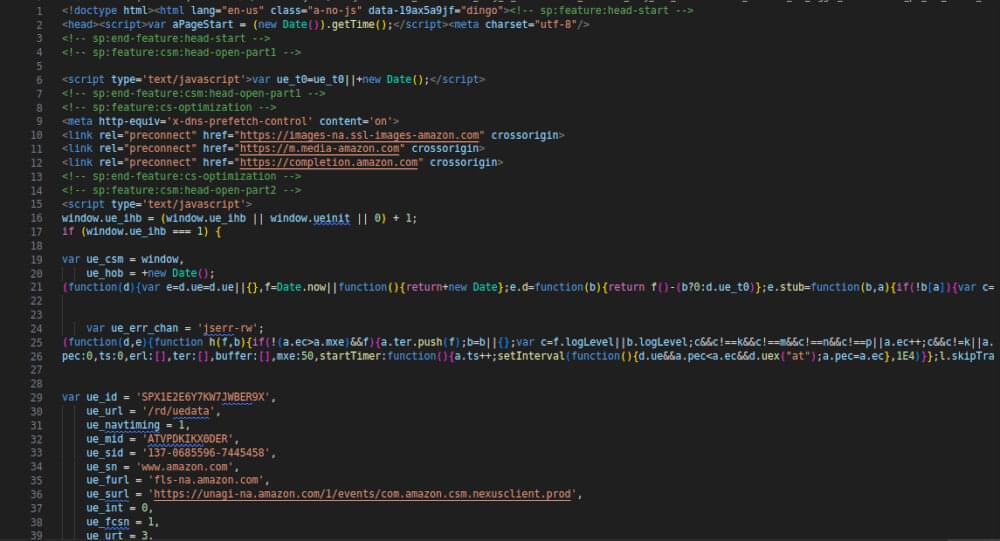

The above script shows how to use Crawlbase’s Crawling API to access and retrieve data from an Amazon product page by setting up the API token, defining the target URL, and making a GET request. The output of this code is the raw HTML content of the specified Amazon product page (https://www.amazon.com/dp/B099MPWPRY), showing the unformatted HTML structure of the page. The console.log(response.body) line prints this HTML content to the console.
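The request described above can be sketched roughly as follows. This is a minimal sketch, not the exact script from this guide: it assumes the `crawlbase` package exports a `CrawlingAPI` class whose `get(url)` method resolves to an object with `statusCode` and `body`. The helper name `fetchProductHtml` and the `CRAWLBASE_TOKEN` environment variable are illustrative choices.

```javascript
// Sketch only: fetch the raw HTML of an Amazon product page.
// Assumption: the client passed in behaves like crawlbase's CrawlingAPI,
// i.e. get(url) resolves to { statusCode, body }.
async function fetchProductHtml(api, url) {
  const response = await api.get(url);
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  return response.body; // raw, unformatted HTML
}

// Runs only when a token is supplied, for example:
//   CRAWLBASE_TOKEN=your_token node amazon-product-page-scraper.js
if (process.env.CRAWLBASE_TOKEN) {
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });

  fetchProductHtml(api, 'https://www.amazon.com/dp/B099MPWPRY')
    .then((html) => console.log(html))
    .catch((error) => console.error('Scrape failed:', error.message));
}
```

Passing the client in as an argument keeps the token out of the source file and makes the helper easy to exercise with a stub before spending real requests.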

Using Crawlbase API to Scrape Amazon Product Listings

In the example above, we retrieved only the raw HTML of an Amazon product page. Often, though, we don’t need this raw data; we want the key details from the page. The Crawlbase Crawling API has a built-in Amazon scraper for extracting that content. To use it, we add a “scraper” parameter to the Crawling API request. This parameter tells the API to return the parsed page data in JSON format. We will edit the same file, amazon-product-page-scraper.js. Let’s look at an example to get a better picture:

// Import the Crawling API

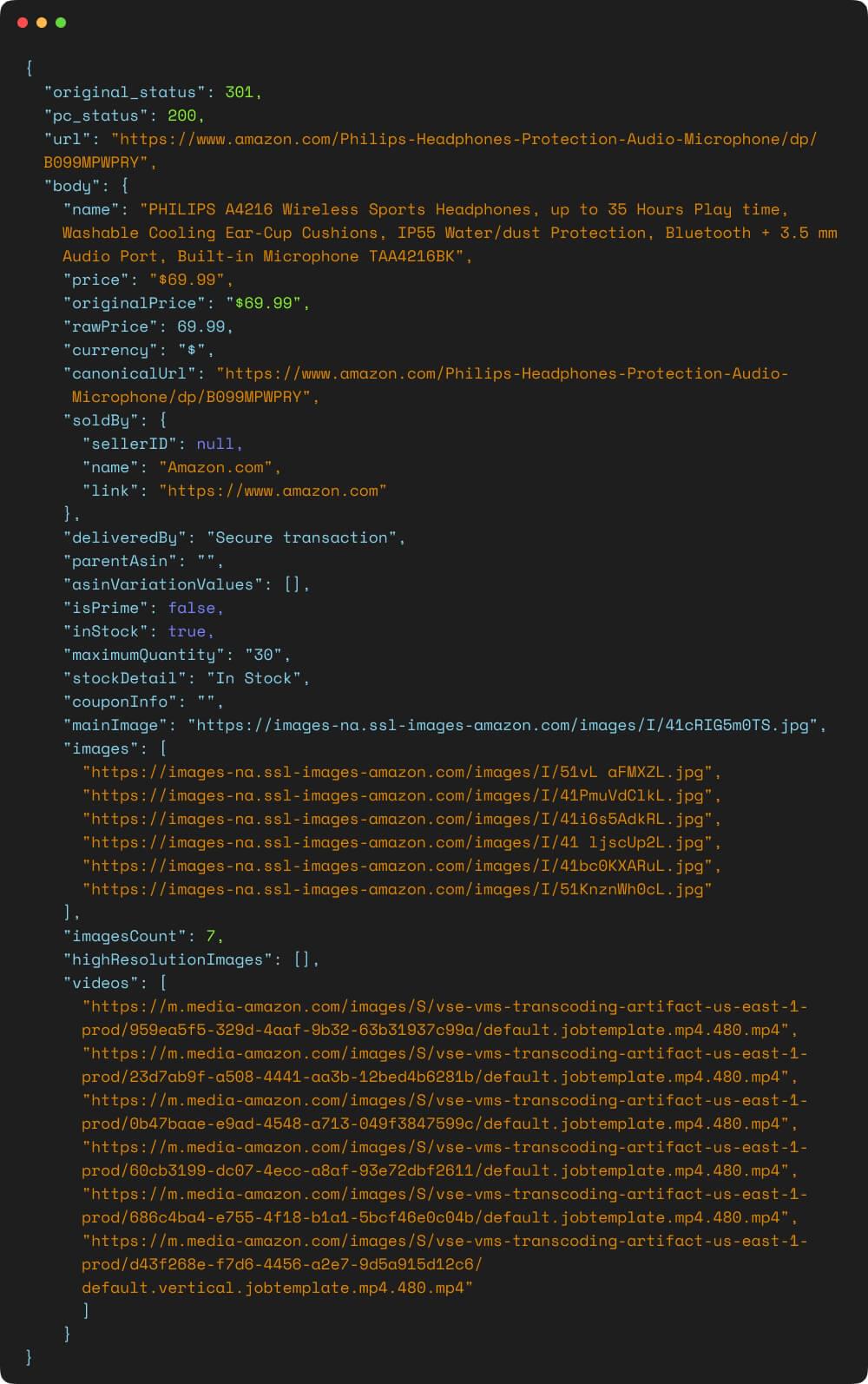

The output of the above code block will be the parsed JSON response containing specific Amazon product details such as the product’s name, description, price, currency, parent ASIN, seller name, stock information, and more. This data will be displayed on the console, showcasing organized information extracted from the specified Amazon product page.
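A request with the “scraper” parameter might look like the sketch below. It rests on several assumptions: that `amazon-product-details` is the scraper name for product pages, that `get(url, options)` accepts the parameter as an option, and that the response body arrives as a JSON string (the code also tolerates an already-parsed object). `fetchProductDetails` is an illustrative name.

```javascript
// Sketch only: request parsed product data instead of raw HTML.
// Assumption: 'amazon-product-details' is the scraper name for product pages.
const PRODUCT_SCRAPER = 'amazon-product-details';

async function fetchProductDetails(api, url) {
  const response = await api.get(url, { scraper: PRODUCT_SCRAPER });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  // Assumption: body is a JSON string; accept a parsed object as well.
  const { body } = response;
  return typeof body === 'string' ? JSON.parse(body) : body;
}

// Runs only when a token is supplied via the environment.
if (process.env.CRAWLBASE_TOKEN) {
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });

  fetchProductDetails(api, 'https://www.amazon.com/dp/B099MPWPRY')
    .then((data) => console.log(JSON.stringify(data, null, 2)))
    .catch((error) => console.error('Scrape failed:', error.message));
}
```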

We will now retrieve the Amazon product’s name, price, rating, and image from the JSON response described above. To do this, we first store the JSON response in a file named "amazon-product-scraper-response.json". To achieve this, execute the following script in your terminal:

// Import the required modules

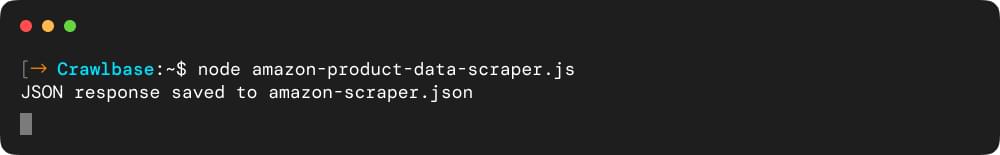

This code crawls the Amazon product page, retrieves the JSON response, and saves it to the file. A message in the console indicates that the JSON response has been saved to 'amazon-product-scraper-response.json'. If any errors occur during these steps, the corresponding error messages appear in the console.
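The saving step on its own can be sketched with Node's built-in fs module. `saveResponse` is an illustrative helper name, and the sample object in the usage note is placeholder data, not real scraper output.

```javascript
const fs = require('fs');

// Sketch only: write a parsed scraper response to disk as pretty-printed JSON.
// The default file name matches the one used in this guide.
function saveResponse(data, filePath = 'amazon-product-scraper-response.json') {
  try {
    fs.writeFileSync(filePath, JSON.stringify(data, null, 2), 'utf8');
    console.log(`JSON response has been saved to '${filePath}'`);
    return true;
  } catch (error) {
    console.error('Error saving JSON response:', error.message);
    return false;
  }
}
```

For example, `saveResponse({ body: { name: 'Placeholder product' } })` writes that object to the default file and returns `true` on success.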

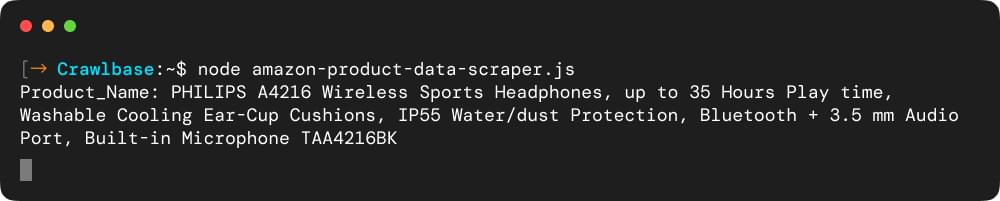

How to Scrape Amazon Product Name

// Import fs module

The above code block reads data from a JSON file named "amazon-product-scraper-response.json" using the fs (file system) module in Node.js. It then attempts to parse the JSON data, extract a specific value (in this case, the "name" property from the "body" object), and prints it to the console. If there are any errors, such as the JSON data not being well-formed or the specified property not existing, the error messages will be displayed accordingly.
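As a minimal sketch of that read, parse, and extract flow: the helper name `readBodyField` is illustrative, and the code assumes the saved file has the shape `{ "body": { "name": ... } }` described above.

```javascript
const fs = require('fs');

// Sketch only: read the saved response and return one property of its
// "body" object.
function readBodyField(filePath, field) {
  const raw = fs.readFileSync(filePath, 'utf8'); // throws if the file is missing
  const data = JSON.parse(raw); // throws if the JSON is malformed
  if (!data.body || !(field in data.body)) {
    throw new Error(`Property "body.${field}" not found in ${filePath}`);
  }
  return data.body[field];
}

try {
  const name = readBodyField('amazon-product-scraper-response.json', 'name');
  console.log('Product Name:', name);
} catch (error) {
  console.error('Could not read product name:', error.message);
}
```

The same helper works for the other fields covered in this guide by changing the second argument, for example `'price'`, `'customerReview'`, or `'mainImage'`.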

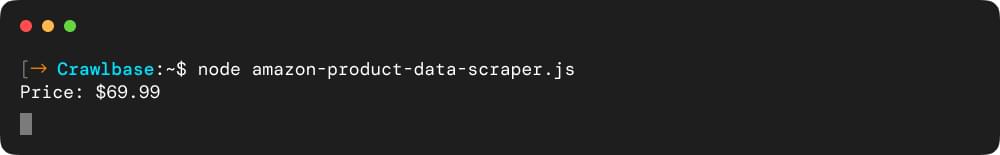

How to Scrape Amazon Product Price

// Import fs module

This code uses the Node.js fs module to interact with the file system and read the contents of a JSON file named "amazon-product-scraper-response.json". Upon reading the file, it attempts to parse the JSON data contained within it. If the parsing is successful, it extracts the "price" property from the "body" object of the JSON data. This extracted price value is then printed to the console.
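Once extracted, the price is often easier to work with as a number. The sketch below assumes the value is either already numeric or a string such as "$24.99" or "$1,299.00" with a dot as the decimal separator. The actual format returned by the scraper is not shown in this guide, so treat the parsing rule as an assumption to check against your own response.

```javascript
// Sketch only: turn a scraped price into a number.
// Assumption: a number, or a string like "$24.99" / "$1,299.00".
// Price ranges and comma-decimal locales are not handled.
function parsePrice(price) {
  if (typeof price === 'number') return price;
  const cleaned = String(price).replace(/[^0-9.]/g, ''); // drop currency symbols and commas
  const value = Number.parseFloat(cleaned);
  return Number.isNaN(value) ? null : value;
}

console.log(parsePrice('$24.99')); // 24.99
console.log(parsePrice('$1,299.00')); // 1299
console.log(parsePrice('N/A')); // null
```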

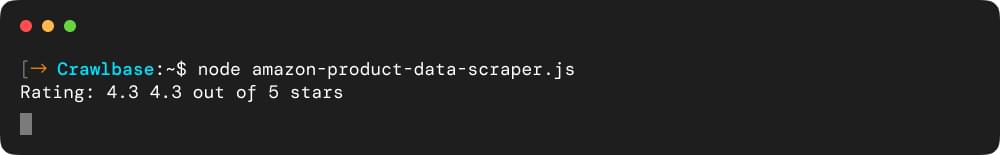

How to Scrape Amazon Product Rating

// Import fs module

The code reads the contents of a JSON file named "amazon-product-scraper-response.json". It then attempts to parse the JSON data and extract the value stored under the key "customerReview" from the "body" object. The extracted value, which seems to represent a product’s rating, is printed as “Rating:” followed by the value.
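If the "customerReview" value is a descriptive string rather than a bare number, the numeric rating can be pulled out with a small helper. The sketch assumes a value along the lines of "4.4 out of 5 stars"; that wording is a guess about the format, not something this guide confirms.

```javascript
// Sketch only: extract the first number from a rating string.
// Assumption: the rating looks like "4.4 out of 5 stars" or is already numeric.
function parseRating(customerReview) {
  const match = /\d+(?:\.\d+)?/.exec(String(customerReview));
  return match ? Number(match[0]) : null;
}

console.log('Rating:', parseRating('4.4 out of 5 stars')); // Rating: 4.4
```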

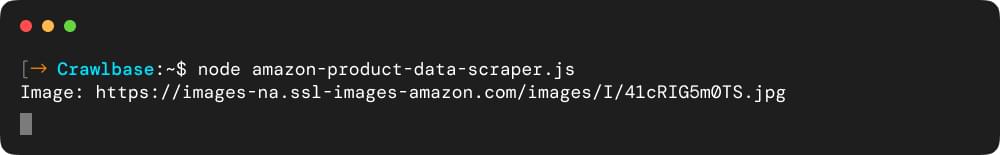

How to Scrape Amazon Product Image

// Import fs module

The above script attempts to parse the JSON data and extract the value stored under the key "mainImage" within the "body" object. The extracted value, likely representing a product image, is logged to the console as "Image:" followed by the value.
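Before downloading or storing the image, it can help to confirm that the extracted value is a usable web address. This sketch assumes "mainImage" holds a URL string; `isHttpUrl` is an illustrative helper built on Node's standard `URL` class.

```javascript
// Sketch only: check that a value is a well-formed http(s) URL.
// Assumption: "mainImage" is expected to be a URL string.
function isHttpUrl(value) {
  try {
    const { protocol } = new URL(value);
    return protocol === 'http:' || protocol === 'https:';
  } catch {
    return false; // new URL() throws on anything it cannot parse
  }
}

console.log(isHttpUrl('https://example.com/image.jpg')); // true
console.log(isHttpUrl('not a url')); // false
```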

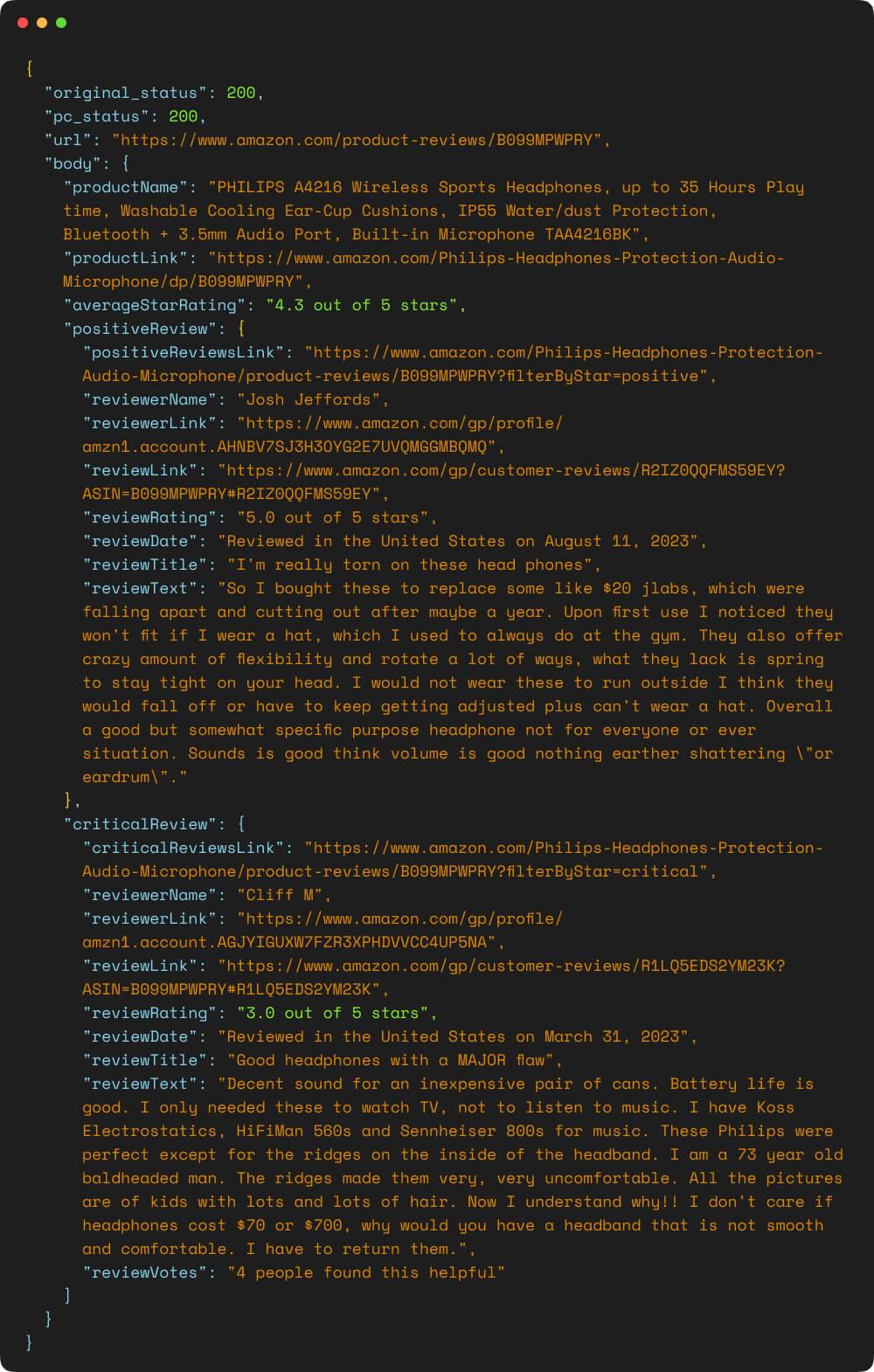

Scrape Amazon Product Reviews with Crawlbase’s Integrated Scraper

In this example, we’ll scrape the customer reviews of the same Amazon product. The target URL that we scraped is https://www.amazon.com/product-reviews/B099MPWPRY. Crawlbase’s Crawling API has an integrated scraper designed for Amazon product reviews. This scraper allows us to retrieve customer reviews from an Amazon product. To achieve this, all we need to do is incorporate a “scraper” parameter into our usage of the Crawling API, assigning it the value "amazon-product-reviews". Let’s explore an example below to get a clearer picture:

// Import the Crawling API

Running the above script will result in the extraction of Amazon product review data through the Crawlbase Crawling API. As the code executes, it fetches information about the reviews related to the specified Amazon product page. This data will be formatted in JSON and displayed on the console, presenting valuable insights into customers’ experiences and opinions. The structured output showcases various aspects of the reviews, including reviewer names, ratings, review dates, review titles, and more.
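A reviews request along these lines might be sketched as follows. The scraper name "amazon-product-reviews" and the reviews URL pattern come from the text above; the helper name `fetchProductReviews`, the `asin` parameter, and the assumption that `get(url, options)` resolves to `{ statusCode, body }` with a JSON body are illustrative. The sketch returns the parsed response as-is, without assuming any particular field names for the reviews.

```javascript
// Sketch only: fetch parsed review data for one product.
// Assumption: get(url, options) resolves to { statusCode, body }.
async function fetchProductReviews(api, asin) {
  const url = `https://www.amazon.com/product-reviews/${encodeURIComponent(asin)}`;
  const response = await api.get(url, { scraper: 'amazon-product-reviews' });
  if (response.statusCode !== 200) {
    throw new Error(`Request failed with status ${response.statusCode}`);
  }
  const { body } = response;
  return typeof body === 'string' ? JSON.parse(body) : body;
}

// Runs only when a token is supplied via the environment.
if (process.env.CRAWLBASE_TOKEN) {
  const { CrawlingAPI } = require('crawlbase');
  const api = new CrawlingAPI({ token: process.env.CRAWLBASE_TOKEN });

  fetchProductReviews(api, 'B099MPWPRY')
    .then((data) => console.log(JSON.stringify(data, null, 2)))
    .catch((error) => console.error('Scrape failed:', error.message));
}
```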

We have revealed the potential of data extraction through a detailed step-by-step guide. We used Crawlbase’s Crawling API to create an Amazon web scraper that extracts detailed product information such as descriptions, prices, sellers, and stock availability. The guide also shows how the Crawlbase Crawling API handles the extraction of customer reviews, providing information such as reviewer names, ratings, dates, and review texts.

How to Avoid Getting Blocked When Scraping Amazon

The Crawlbase Crawling API is designed to address the challenges of web scraping, particularly when scraping Amazon product data. With the rise of questions like “do Amazon influencers get free products” and the growing demand for influencer marketing data, Crawlbase provides a solution for extracting product information to support market analysis and decision-making. Here’s how the Crawlbase Crawling API can help mitigate these challenges:

- Anti-Scraping Measures: Crawlbase Crawling API utilizes advanced techniques to bypass anti-scraping mechanisms like CAPTCHAs, IP blocking, and user-agent detection. This enables seamless data collection without triggering alarms.

- Dynamic Website Structure: The API is equipped to adapt to changes in website structure by utilizing smart algorithms that automatically adjust scraping patterns to match the evolving layout of Amazon’s pages.

- Legal and Ethical Concerns: Crawlbase respects the terms of use of websites like Amazon, ensuring that scraping is conducted in a responsible and ethical manner. This minimizes the risk of legal actions and ethical dilemmas.

- Data Volume and Velocity: The API efficiently manages large data volumes by distributing scraping tasks across multiple servers, enabling fast and scalable data extraction.

- Complexity of Product Information: Crawlbase’s Crawling API employs intelligent data extraction techniques that accurately capture complex product information, such as reviews, pricing, images, and specifications.

- Rate Limiting and IP Blocking: The API manages rate limits and IP blocking by intelligently throttling requests and rotating IP addresses, ensuring data collection remains uninterrupted.

- CAPTCHA Challenges: Crawlbase’s Crawling API can handle CAPTCHAs through automated solving mechanisms, eliminating the need for manual intervention and speeding up the Amazon scraping process.

- Data Quality and Integrity: The API offers data validation and cleansing features to ensure that scraped data is accurate and up-to-date, reducing the risk of using outdated or incorrect information.

- Robustness of Scraping Scripts: The API’s robust architecture is designed to handle various scenarios, errors, and changes in the website’s structure, reducing the need for constant monitoring and adjustments.

Crawlbase Crawling API provides a comprehensive solution that addresses the complexities and challenges of web scraping Amazon data. By offering intelligent scraping techniques, robust architecture, and adherence to ethical standards, the API empowers businesses to gather valuable insights without the typical hurdles associated with web scraping.

Applications of Amazon Scraper

Your business can benefit from using a web scraping tool or an Amazon scraping tool to collect data for the following purposes:

- One of the key areas where Amazon’s scraped data can be utilized is in analyzing customer reviews for product improvement. By carefully examining feedback, businesses can identify areas where their products can be improved, leading to enhanced customer satisfaction.

- Another valuable application of scraped data is identifying market trends and demand patterns. By analyzing patterns and trends in customer behavior, businesses can anticipate consumer needs and adapt their offerings accordingly. This allows them to stay ahead of the competition and offer high-demand products or services.

- Monitoring competitor pricing strategies is another important use of scraped data. By closely examining how competitors price their products, businesses can make informed decisions about their own pricing. This helps them stay competitive in the market and adjust their pricing strategies in real time.

- E-commerce businesses can use scraped product data to generate website content, such as product descriptions, features, and specifications. This can improve search engine optimization (SEO) and enhance the online shopping experience.

- Brands can monitor Amazon for unauthorized or counterfeit products by scraping product data and comparing it with their genuine offerings.

Want a Reliable Amazon Scraper?

This step-by-step Amazon data scraping guide has illuminated the significance of Amazon product data and its potential for driving business growth. Companies can make informed decisions across various operational facets by efficiently extracting and analyzing this data.

Try Crawlbase’s ready-made scraping API and bypass blocks with ease. Start Free

Frequently Asked Questions (FAQs) About Amazon Scraping

1. Is it legal to scrape Amazon product data?

Scraping Amazon can be legally risky, especially if you violate their Terms of Service. While public data isn’t necessarily protected, Amazon actively blocks bots and may pursue legal action for excessive scraping. To stay compliant, use scraping tools that respect rate limits, avoid login-restricted content, and consider using APIs like Crawlbase, which handles ethical scraping and proxy rotation.

2. What is the best tool to scrape Amazon in 2026?

The best Amazon scraper depends on your technical skill and use case. For developers, Crawlbase offers a powerful scraping API with built-in proxy management, CAPTCHA handling, and JavaScript rendering. No-code users may prefer tools like Octoparse or Apify. Always choose a tool that can bypass Amazon’s bot protection while remaining scalable and compliant.

3. Can I scrape Amazon using Python?

Yes, you can scrape Amazon using Python with libraries like requests, BeautifulSoup, or Selenium. However, Amazon’s bot protection makes it challenging to access product pages directly. That’s why many developers integrate the Crawlbase API into their Python scripts to reliably fetch fully rendered pages with headers and proxies already handled.

4. What kind of data can I extract from Amazon listings?

You can extract a variety of product data, including title, price, reviews, ratings, ASIN, seller info, availability, images, and product specifications. Using a robust scraper or an API like Crawlbase, you can collect this data in structured formats (JSON/CSV) for product tracking, comparison engines, or competitive analysis.
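To illustrate the JSON-to-CSV step, here is a minimal sketch that turns an array of flat product objects into CSV text. The helper name `toCsv`, the column names, and the sample row are placeholders rather than real scraper output.

```javascript
// Sketch only: convert flat objects to CSV text, quoting cells that contain
// commas, quotes, or line breaks.
function toCsv(rows, columns) {
  const escapeCell = (value) => {
    const text = value === undefined || value === null ? '' : String(value);
    return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
  };
  const header = columns.map(escapeCell).join(',');
  const lines = rows.map((row) => columns.map((col) => escapeCell(row[col])).join(','));
  return [header, ...lines].join('\n');
}

// Placeholder data for illustration only.
const products = [{ name: 'Sports Headphones, Wireless', price: 24.99 }];
console.log(toCsv(products, ['name', 'price']));
// name,price
// "Sports Headphones, Wireless",24.99
```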

5. How do I avoid getting blocked when scraping Amazon?

To avoid getting blocked, you need to rotate IP addresses, spoof headers, introduce realistic delays, and avoid scraping logged-in content. Amazon has strong anti-bot systems, so it’s best to use a scraping API like Crawlbase, which manages all of this behind the scenes—ensuring your requests look human-like and don’t get blocked.
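If you manage requests yourself, delays and retries can be sketched on the client side as below. This illustrates the general technique of exponential backoff with random jitter; it is not a description of how Crawlbase works internally. The helper names and default values are assumptions chosen for the example.

```javascript
// Resolve after the given number of milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Random delay between minMs and maxMs, so requests are not evenly spaced.
function randomDelay(minMs, maxMs) {
  return minMs + Math.random() * (maxMs - minMs);
}

// Sketch only: retry a failing async task with exponential backoff plus jitter.
// retries = number of additional attempts after the first failure.
async function withRetry(task, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await task();
    } catch (error) {
      if (attempt >= retries) throw error; // out of attempts, surface the error
      const backoff = baseDelayMs * 2 ** attempt; // 1x, 2x, 4x, ...
      await sleep(backoff + randomDelay(0, baseDelayMs));
    }
  }
}
```

A call such as `withRetry(() => fetchPage(url))`, where `fetchPage` is your own request function, waits progressively longer after each failure instead of hammering the site at a fixed rate.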