Looking to grow your business? SuperPages, one of the largest online business directories in the US, is a great source of lead information. With millions of businesses categorized by industry, location, and more, it’s a good place to find detailed information on potential customers or clients.

In this guide, we’ll show you how to scrape SuperPages to get business information. With Python and a few simple libraries, you can get business names, phone numbers, addresses, and more. This will give you a list of leads to expand your marketing or build partnerships.

Once we have the core scraper set up, we’ll also look into optimizing our results using Crawlbase Smart AI Proxy to ensure data accuracy and efficiency when handling larger datasets.

Here’s everything we’ll cover to get you started:

Table of Contents

- Why Scrape SuperPages for Leads?

- Key Data to Extract from SuperPages

- Setting Up Your Python Environment

- Scraping SuperPages Listings

  - Inspecting HTML for Selectors

  - Writing the Listings Scraper

  - Handling Pagination

  - Saving Data in a JSON File

  - Complete Code

- Scraping SuperPages Business Details

  - Inspecting HTML for Selectors

  - Writing the Business Details Scraper

  - Saving Data in a JSON File

  - Complete Code

- Optimizing SuperPages Scraper with Crawlbase Smart AI Proxy

- Final Thoughts

- Frequently Asked Questions

Why Scrape SuperPages for Leads?

SuperPages is a top US business directory with millions of listings across industries, from small local businesses to national companies. Whether you’re in sales, marketing, or research, it has the information you need to create targeted lead lists for outreach: each entry includes a business name, address, phone number, and business category.

By scraping SuperPages, you can collect all this information in one place, save time on manual searching, and focus on reaching out to prospects. Instead of browsing page after page, you’ll have a structured dataset ready for analysis and follow-up.

Let’s dive in and see what information you can extract from SuperPages.

Key Data to Extract from SuperPages

When scraping SuperPages, you need to know what data to extract for lead generation. SuperPages has multiple pieces of data for each business, and by targeting specific fields, you can create a clean dataset for outreach and marketing purposes.

Here are some of the main data fields:

- Business Name: The primary identifier for each business so you can group your leads.

- Category: SuperPages categorizes businesses by industry, e.g., “Restaurants” or “Legal Services.” This will help you segment your leads by industry.

- Address and Location: Full address details, including city, state, and zip code, so you can target local marketing campaigns.

- Phone Number: Important for direct contact, especially if you’re building a phone-based outreach campaign.

- Website URL: Many listings have a website link, so you have another way to engage and get more info about each business.

- Ratings and Reviews: If available, this data can give you insight into customer sentiment and reputation so you can target businesses based on their quality and customer feedback.

With a clear idea of what to extract, we’re ready to set up our Python environment in the next section.

Setting Up Your Python Environment

Before we can start scraping SuperPages data, we need to set up the right Python environment. This includes installing Python, necessary libraries and an Integrated Development Environment (IDE) to write and run our code.

Installing Python and Required Libraries

First, make sure you have Python installed on your computer. You can download the latest version from python.org. After installation, you can test if Python is working by running this command in your terminal or command prompt:

    python --version

Next, you will need to install the required libraries. For this tutorial, we will use Requests for making HTTP requests and BeautifulSoup for parsing HTML. You can install these libraries by running the following command:

    pip install requests beautifulsoup4

These libraries will help you interact with SuperPages and scrape data from the HTML.

Choosing an IDE

To write and run your Python scripts, you need an IDE. Here are some options:

- VS Code: A lightweight code editor with good Python support and many extensions.

- PyCharm: A more full-featured Python IDE with code completion and debugging tools.

- Jupyter Notebook: An interactive environment for experimentation and visualization.

Choose the IDE that you prefer. Once your environment is set up, you’re ready to start writing the code for scraping SuperPages listings.

Scraping SuperPages Listings

In this section, we’ll cover scraping SuperPages listings. This includes inspecting the HTML to find the selectors, writing the scraper, handling pagination to get data from multiple pages, and saving the data in a JSON file for easy access.

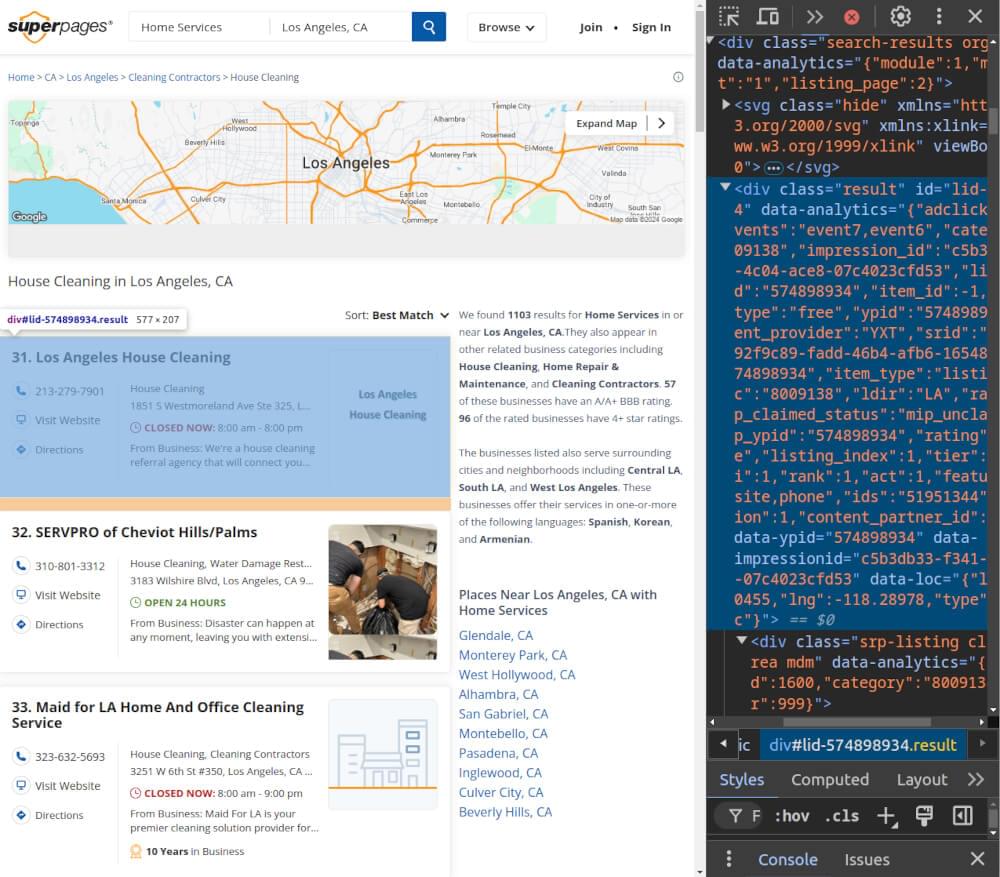

Inspecting HTML for Selectors

Before we start writing the scraper, we need to inspect the SuperPages listings page to find the HTML structure and CSS selectors that contain the data we want. Here’s how:

- Open the Listings Page: Go to a SuperPages search results page (e.g., search for “Home Services” in a location you’re interested in).

- Inspect the Page: Right-click on the page and select “Inspect” or press `Ctrl + Shift + I` to open Developer Tools.

- Find the Relevant Elements:

  - Business Name: The business name is in an `<a>` tag with the class `.business-name`, and within this `<a>`, the name itself is in a `<span>` tag.

  - Address: The address is in a `<span>` tag with the class `.street-address`.

  - Phone Number: The phone number is in an `<a>` tag with the classes `.phone` and `.primary`.

  - Website Link: If available, the business website link is in an `<a>` tag with the class `.weblink-button`.

  - Detail Page Link: The link to the business detail page is in an `<a>` tag with the class `.business-name`.

Look for any other data you want to extract, such as ratings or business hours. Now, you’re ready to write the scraper in the next section.

Writing the Listings Scraper

Now that we have the selectors, we can write the scraper. We’ll use requests to fetch the page and BeautifulSoup to parse the HTML and extract the data. Here’s the basic code to scrape listings:

    import requests

This code fetches data from a given page of results. It extracts each business’s name, address, phone number, and website and stores them in a list of dictionaries.
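As a minimal sketch of such a listings scraper, the example below applies the selectors identified earlier. The search URL pattern, the default search terms, and the `div.result` container selector are assumptions, not confirmed by this guide, so verify them in Developer Tools before relying on them.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

# Assumed search endpoint; confirm the URL pattern in your browser.
BASE_URL = "https://www.superpages.com/search"
SITE_ROOT = "https://www.superpages.com"


def parse_listings(html):
    """Extract name, address, phone, website, and detail link from results HTML."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    # "div.result" is an assumed container selector for one listing card.
    for card in soup.select("div.result"):
        name_link = card.select_one("a.business-name")
        if name_link is None:
            continue  # skip cards that have no business name link
        name_span = name_link.select_one("span")
        name_tag = name_span if name_span is not None else name_link
        address = card.select_one("span.street-address")
        phone = card.select_one("a.phone.primary")
        website = card.select_one("a.weblink-button")
        listings.append({
            "name": name_tag.get_text(strip=True),
            "address": address.get_text(strip=True) if address is not None else "",
            "phone": phone.get_text(strip=True) if phone is not None else "",
            "website": website.get("href", "") if website is not None else "",
            # Resolve relative detail links against the site root.
            "detail_page_link": urljoin(SITE_ROOT, name_link.get("href", "")),
        })
    return listings


def fetch_listings(page_number, search_terms="Home Services", location="Los Angeles, CA"):
    """Fetch one page of search results and parse it into a list of dicts."""
    params = {
        "search_terms": search_terms,
        "geo_location_terms": location,  # assumed query parameter name
        "page": page_number,
    }
    response = requests.get(BASE_URL, params=params, timeout=30)
    response.raise_for_status()
    return parse_listings(response.text)
```

Keeping the parsing in its own function means you can test the selectors against saved HTML without hitting the site.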

Handling Pagination

To get more data, we need to handle pagination so the scraper can go through multiple pages. SuperPages changes the page number in the URL, so it’s easy to add pagination by looping through page numbers. We can create a function like the one below to scrape multiple pages:

    # Function to fetch listings from multiple pages

Now, fetch_all_listings() will gather data from the specified number of pages by calling fetch_listings() repeatedly.
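One way to sketch this pagination loop is shown below. To keep the example self-contained, the per-page fetcher is passed in as a parameter (`fetch_page`), which is an assumption of this sketch and not necessarily how the original function is written. The delay between pages and the early stop on an empty page are also illustrative choices.

```python
import time


def fetch_all_listings(fetch_page, total_pages, delay_seconds=1.0):
    """Call fetch_page(page_number) for pages 1..total_pages and merge the results."""
    all_listings = []
    for page in range(1, total_pages + 1):
        page_listings = fetch_page(page)
        if not page_listings:
            break  # an empty page most likely means there are no more results
        all_listings.extend(page_listings)
        if page < total_pages:
            time.sleep(delay_seconds)  # pause briefly between page requests
    return all_listings
```

With a per-page function such as `fetch_listings()` in scope, a call might look like `fetch_all_listings(fetch_listings, total_pages=5)`.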

Saving Data in a JSON File

Once we’ve gathered all the data, it’s important to save it in a JSON file for easy access. Here’s how to save the data in JSON format:

    # Function to save listings data to a JSON file

This code saves the data in a file named superpages_listings.json. Each entry will have the business name, address, phone number, and website.
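A minimal sketch of such a save function, using only the standard `json` module, might look like this. The `indent` and `ensure_ascii` settings are illustrative choices, not requirements.

```python
import json


def save_to_json(data, filename="superpages_listings.json"):
    """Write a list of listing dictionaries to a JSON file."""
    with open(filename, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps accented business names readable in the file
        json.dump(data, f, ensure_ascii=False, indent=4)
```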

Complete Code Example

Below is the complete code that combines all the steps:

    import requests


Scraping SuperPages Business Details

After capturing the basic information from listings, it’s time to dig deeper into individual business details by visiting each listing’s dedicated page. This step will help you gather more in-depth information, such as operating hours, customer reviews, and additional contact details.

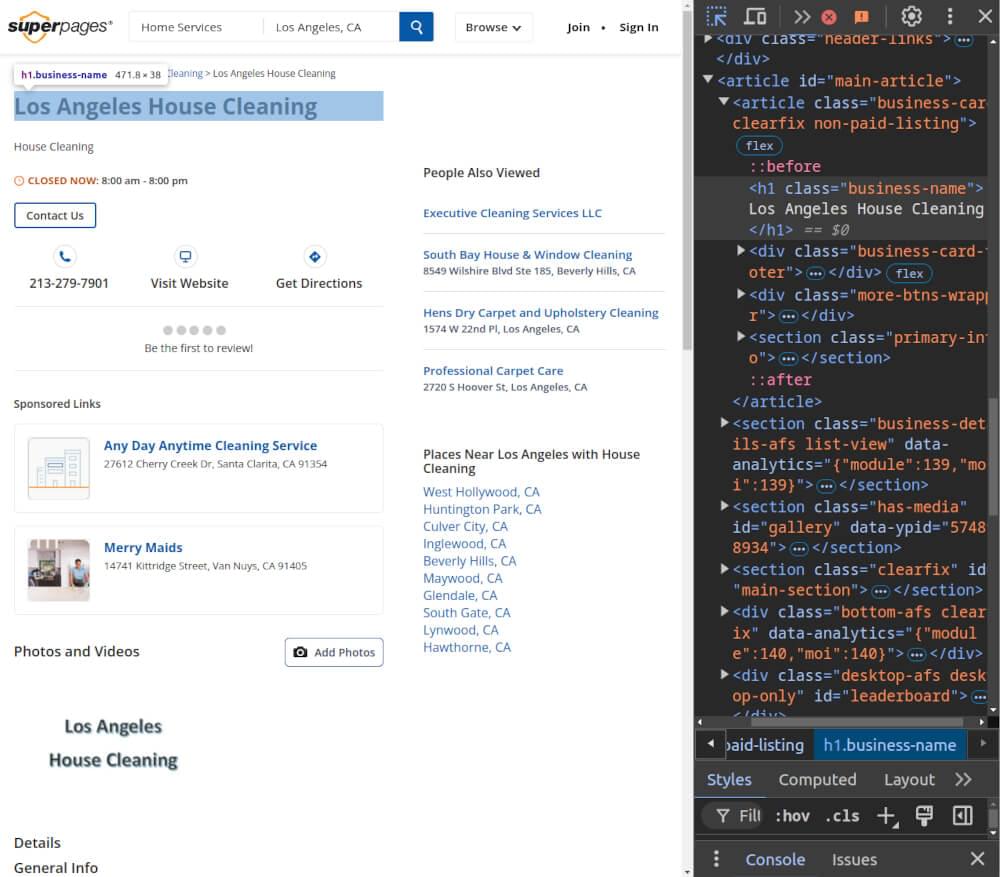

Inspecting HTML for Selectors

First, we’ll inspect the HTML structure of a SuperPages business detail page to identify where each piece of information is located. Here’s how:

- Open a Business Details Page: Click on any business name from the search results to open its details page.

- Inspect the Page: Right-click and choose “Inspect” or press `Ctrl + Shift + I` to open Developer Tools.

- Find Key Elements:

  - Business Name: Found in an `<h1>` tag with a class `.business-name`.

  - Operating Hours: Displayed in rows within a `.biz-hours` table, where each day’s hours are in a `<tr>` with `th.day-label` and `td.day-hours`.

  - Contact Information: Located in key-value pairs inside a `.details-contact` section, with each key in `<dt>` tags and each value in corresponding `<dd>` tags.

With these selectors identified, you’re ready to move to the next step.

Writing the Business Details Scraper

Now, let’s use these selectors in a Python script to scrape the specific details from each business page. First, we’ll make a request to each business detail page URL. Then, we’ll use BeautifulSoup to parse and extract the specific information.

Here’s the code to scrape business details from each page:

    import requests
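A minimal sketch of a details scraper built on the selectors above could look like the following. The function names and the shape of the returned dictionary are assumptions made for illustration, and the selectors should be re-checked against the live page.

```python
import requests
from bs4 import BeautifulSoup


def parse_business_details(html):
    """Extract name, operating hours, and contact pairs from a detail page."""
    soup = BeautifulSoup(html, "html.parser")

    name = soup.select_one("h1.business-name")

    # Each row of the hours table pairs a day label with its opening hours.
    hours = {}
    for row in soup.select(".biz-hours tr"):
        day = row.select_one("th.day-label")
        times = row.select_one("td.day-hours")
        if day is not None and times is not None:
            hours[day.get_text(strip=True)] = times.get_text(strip=True)

    # Contact details are <dt> keys, each followed by a <dd> value.
    contact = {}
    section = soup.select_one(".details-contact")
    if section is not None:
        for dt in section.select("dt"):
            dd = dt.find_next_sibling("dd")
            if dd is not None:
                key = dt.get_text(strip=True).rstrip(":")
                contact[key] = dd.get_text(" ", strip=True)

    return {
        "name": name.get_text(strip=True) if name is not None else "",
        "hours": hours,
        "contact_info": contact,
    }


def fetch_business_details(url):
    """Download one business detail page and parse it."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()
    return parse_business_details(response.text)
```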

Saving Data in a JSON File

To make it easier to work with the scraped data later, we’ll save the business details in a JSON file. This lets you store and access information in an organized way.

    def save_to_json(data, filename='business_details.json'):

Complete Code Example

Here’s the complete code that includes everything from fetching business details to saving them in a JSON file.

    import requests

Example Output:

    [

Optimizing SuperPages Scraper with Crawlbase Smart AI Proxy

To make our SuperPages scraper more robust, we can use Crawlbase Smart AI Proxy. Smart AI Proxy provides IP rotation and anti-bot protection, which helps you avoid rate limits and blocks during long data collection runs.

Adding Crawlbase Smart AI Proxy to our setup is easy. Sign up on Crawlbase and get an API token. We’ll use the Smart AI Proxy URL along with our token to make requests appear as though they’re coming from various locations. This will help us avoid detection and ensure uninterrupted scraping.

Here’s how we can modify our code to use Crawlbase Smart AI Proxy:

    import requests

By routing our requests through Crawlbase, we add essential IP rotation and anti-bot measures that increase our scraper’s reliability and scalability. This setup is ideal for collecting large amounts of data from SuperPages without interruptions or blocks, keeping the scraper efficient and effective.
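As a rough sketch, routing Requests traffic through a proxy uses the standard `proxies` argument. The proxy host, port, and token format below are assumptions; copy the exact endpoint from your Crawlbase dashboard. Disabling certificate verification is also an assumption about how an intercepting proxy is typically used.

```python
import requests

# Assumed endpoint format; replace with the value shown in your Crawlbase account.
PROXY_TEMPLATE = "http://{token}:@smartproxy.crawlbase.com:8012"


def build_proxies(token):
    """Build the proxies mapping that Requests expects for HTTP and HTTPS."""
    proxy_url = PROXY_TEMPLATE.format(token=token)
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, token, timeout=60):
    """Fetch a page through the proxy and return its HTML."""
    response = requests.get(
        url,
        proxies=build_proxies(token),
        verify=False,  # assumption: the proxy re-signs HTTPS traffic
        timeout=timeout,
    )
    response.raise_for_status()
    return response.text
```

A call would then look like `fetch_via_proxy(page_url, "YOUR_CRAWLBASE_TOKEN")`, where the token is a placeholder for your own.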

Final Thoughts

In this blog, we covered how to scrape SuperPages to get leads. We learned to extract business data like names, addresses, and phone numbers. We used Requests and BeautifulSoup to create a simple scraper to get that data.

We also covered how to handle pagination to collect listings across multiple result pages. By using Crawlbase Smart AI Proxy, we made our scraper more reliable, so it is less likely to get blocked during data collection.

By following the steps outlined in this guide, you can build your own scraper and start extracting essential data. If you want to do more web scraping, check out our guides on scraping other key websites.

📜 Scrape Costco Product Data Easily

📜 How to Scrape Houzz Data

📜 How to Scrape Tokopedia

📜 Scrape OpenSea Data with Python

📜 How to Scrape Gumtree Data in Easy Steps

If you have any questions or feedback, our support team is here to help you. Happy scraping!

Frequently Asked Questions

Q. How can I avoid being blocked while scraping SuperPages?

To avoid getting blocked, add delays between requests, limit the request frequency, and rotate IP addresses. Tools like Crawlbase Smart AI Proxy can simplify this by rotating IP addresses for you, so your scraper runs smoothly.
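The delay and retry advice can be sketched as a small wrapper around whatever function performs the request. The name `polite_fetch` and its default delay values are hypothetical, chosen only for illustration.

```python
import random
import time


def polite_fetch(fetch, url, retries=3, min_delay=1.0, max_delay=3.0):
    """Call fetch(url) after a random pause, retrying a few times on errors."""
    last_error = None
    for attempt in range(1, retries + 1):
        # A random pause makes the request pattern less regular.
        time.sleep(random.uniform(min_delay, max_delay))
        try:
            return fetch(url)
        except Exception as error:  # broad catch keeps the sketch short
            last_error = error
            if attempt < retries:
                # Wait a little longer after each failed attempt.
                time.sleep(min_delay * attempt)
    raise last_error
```

In real code you would usually catch `requests.RequestException` instead of every exception.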

Q. Why am I getting no results when trying to scrape SuperPages?

If your scraper is not returning results, check that your HTML selectors match the structure of SuperPages. Sometimes, minor changes in the website’s HTML structure require you to update your selectors. Also, make sure you’re handling pagination correctly if you’re trying to get multiple pages of results.

Q. How can I save the scraped data in formats other than JSON?

If you need your scraped data in other formats like CSV or Excel, you can modify the script easily. For CSV, use Python’s csv module to save data in rows. For Excel, the pandas library has a .to_excel() function that works well. This flexibility can help you analyze or share the data in a way that suits your needs.
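For the CSV case, a minimal sketch with the standard `csv` module might look like this. It assumes each scraped record is a flat dictionary and takes the column names from the first record; the function name and default filename are illustrative.

```python
import csv


def save_to_csv(rows, filename="superpages_listings.csv"):
    """Write a list of flat dictionaries to a CSV file, one row per business."""
    if not rows:
        return  # nothing to write
    fieldnames = list(rows[0].keys())
    with open(filename, "w", newline="", encoding="utf-8") as f:
        # extrasaction="ignore" drops keys that are not in the header row
        writer = csv.DictWriter(f, fieldnames=fieldnames, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
```

Nested values such as an hours dictionary would need to be flattened into strings before being written this way.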