Web scraping retrieves data from websites, but extracting clean, structured information often requires complex parsing logic. Gemini AI can make this easier and faster: you describe the details you want in natural language, and the model pulls them out of the raw content.

In this blog, you’ll learn how to use Gemini AI for web scraping in Python step by step. We’ll walk you through setting up the environment, extracting HTML, cleaning it up, and letting Gemini do the heavy lifting. Whether you’re building a small scraper or scaling up, this guide will get you started with AI-powered scraping the right way.

Table of Contents

- Installing Python

- Creating a Virtual Environment

- Configure Gemini

- Sending the HTTP Request

- Extracting Specific Section(s) with BeautifulSoup

- Converting the HTML to Markdown for AI Efficiency

- Sending the Cleaned Markdown to Gemini for Data Extraction

- Exporting the Results in JSON Format

- Challenges and Limitations of Gemini AI in Web Scraping

- How Crawlbase Smart AI Proxy Can Help You Scale

- Final Thoughts

- Frequently Asked Questions

What is Gemini AI, and Why Use It for Web Scraping?

Gemini AI is a large language model (LLM) by Google. It can understand natural language, read web content, and extract meaningful data from text. This makes it super useful for web scraping with Python when you want to extract clean and structured data from messy HTML.

Why choose Gemini AI for web scraping?

Traditional web scrapers use CSS selectors or XPath to extract content. However, websites frequently change their structure, and when they do, those selectors break and the scraper stops working. With Gemini AI, you can describe what data you want (like “get all product names and prices”) and the AI figures it out, much like a human reader would.

Benefits of using Gemini AI for scraping:

- Less code: You don’t need to write complex logic to clean or format data.

- Smarter scraping: Gemini understands natural language, allowing it to find data even when the HTML is not well-structured.

- Flexible: Works on many different websites with minimal code changes.

In the next section, we’ll show you how to set up your environment and get started with Python.

Setting Up the Environment

Before we start scraping websites with Gemini AI and Python, we need to set up the right environment. This includes installing Python, creating a virtual environment, and configuring the Gemini environment.

Installing Python

If you don’t already have Python installed, download it from the official website. Ensure you install Python 3.8 or later. During installation, check the box that says “Add Python to PATH”.

To verify Python is installed, open your terminal or command prompt and run:

    python --version

You should see something like:

    Python 3.10.8

Creating a Virtual Environment

It’s a good idea to keep your project files clean and separate from your global Python installation. You can do this by creating a virtual environment.

In your project folder, run:

    python -m venv gemini_env

Then activate the environment:

- On Windows:

    gemini_env\Scripts\activate

- On Mac/Linux:

    source gemini_env/bin/activate

Once activated, your terminal will show the environment name, like this:

    (gemini_env) $
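If you’d rather confirm the setup from inside Python, a small check like the one below can help. It is only a sketch: the helper names are our own, and the 3.8 minimum simply mirrors the requirement stated earlier in this guide.

```python
import sys

MIN_VERSION = (3, 8)  # minimum version stated in this guide


def python_is_supported(version_info=sys.version_info):
    """Return True if the interpreter meets the guide's minimum version."""
    return tuple(version_info[:2]) >= MIN_VERSION


def in_virtualenv():
    """Return True when running inside an environment created by venv."""
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


print(f"Python version supported: {python_is_supported()}")
print(f"Virtual environment active: {in_virtualenv()}")
```

Run it with the environment activated; if the second line reports `False`, the activation step probably did not take effect in the current terminal.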

Configure Gemini

To use Gemini AI for web scraping, you’ll need an API key from Google’s Gemini platform. You can get this by signing up for Google AI Studio.

Once you have your key, store it in a .env file:

    GEMINI_API_KEY=your_key_here

Then install the required Python packages:

    pip install google-generativeai python-dotenv requests beautifulsoup4 markdownify

These libraries help us send requests, parse HTML, convert HTML to Markdown, and communicate with Gemini.
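Before calling Gemini, it is worth failing early if the key is missing or still set to the placeholder. The helper below is a minimal sketch of that check; `get_api_key` is a hypothetical name, and it reads from whatever environment mapping you pass in (the process environment by default).

```python
import os


def get_api_key(env=None, name="GEMINI_API_KEY"):
    """Return the Gemini API key, or raise a clear error if it is not usable."""
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    # Treat the placeholder from the .env example as "not configured".
    if not key or key == "your_key_here":
        raise RuntimeError(f"{name} is not set; add your real key to the .env file")
    return key
```

In a real script you would call `load_dotenv()` from python-dotenv first, so the value in `.env` is copied into the process environment, and then pass the result of `get_api_key()` to `genai.configure(api_key=...)`.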

Now your environment is ready! In the next section, we’ll build the Gemini-powered web scraper step by step.

Step-by-Step Guide to Building a Gemini-Powered Web Scraper

In this section, you’ll learn how to build a complete Gemini-powered web scraper in Python. We’ll go step by step, from sending an HTTP request to exporting the scraped data as JSON.

We’ll use this example page for scraping:

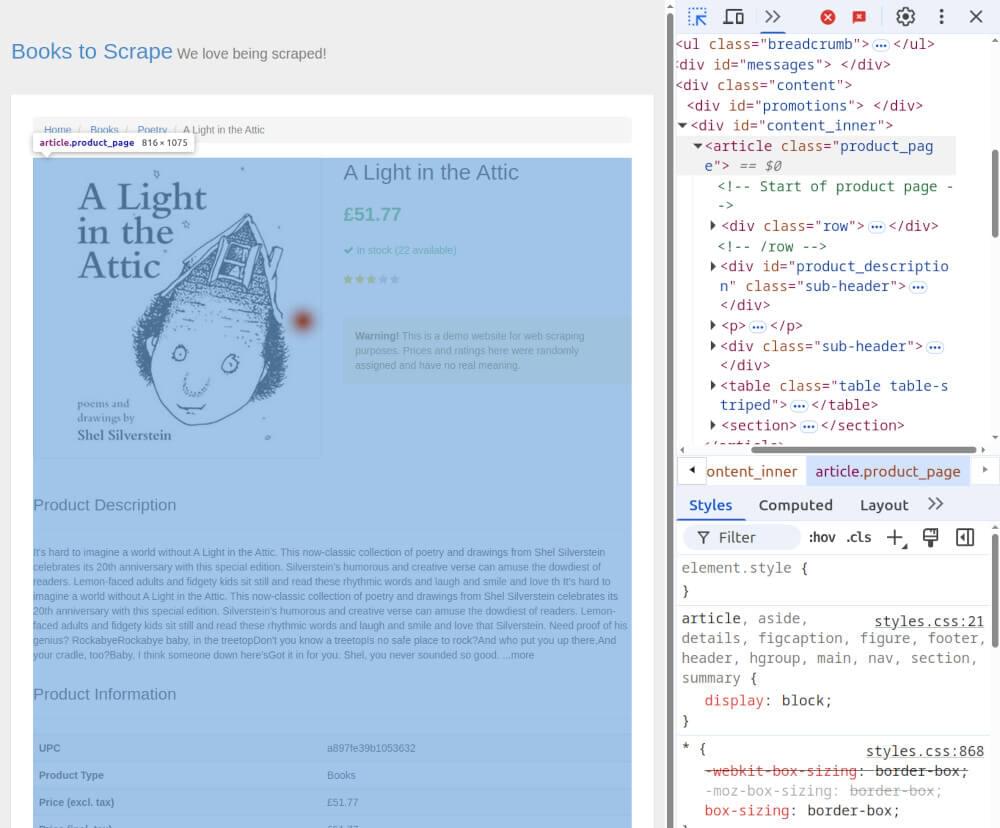

🔗 A Light in the Attic – Books to Scrape

Sending the HTTP Request

First, we’ll fetch the HTML content of the page using the requests library.

    import requests
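A minimal sketch of this step could look like the following. The URL is our assumption of where the “A Light in the Attic” example page lives on Books to Scrape, and `fetch_html` with its optional `session` parameter is a hypothetical helper, not part of requests itself.

```python
# Assumed address of the example page; adjust it if your target differs.
BOOK_URL = "https://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html"


def fetch_html(url, session=None, timeout=10):
    """Download a page and return its HTML, raising on HTTP errors."""
    if session is None:
        import requests  # third-party; installed earlier with pip

        session = requests.Session()
    response = session.get(url, timeout=timeout)
    # Fail loudly on 4xx/5xx instead of passing an error page to later steps.
    response.raise_for_status()
    return response.text
```

Calling `fetch_html(BOOK_URL)` returns the page source as a string. Accepting a session makes it easy to reuse one connection across many pages, or to swap in a stub while testing.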

Extracting Specific Section(s) with BeautifulSoup

To avoid sending unnecessary HTML to Gemini, we will extract only the part of the page that we need. In this case, that is the <article class="product_page"> element, which contains the book details.

    from bs4 import BeautifulSoup
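One way to sketch this step is shown below. `extract_product_section` is a hypothetical helper name; it uses BeautifulSoup’s `find` with the `class_` filter and Python’s built-in `html.parser`, and it raises an error rather than silently sending an empty string to Gemini.

```python
def extract_product_section(html):
    """Return the HTML of the <article class="product_page"> element."""
    from bs4 import BeautifulSoup  # third-party; provided by beautifulsoup4

    soup = BeautifulSoup(html, "html.parser")
    article = soup.find("article", class_="product_page")
    if article is None:
        # The page layout is not what we expected, so stop here.
        raise ValueError('no <article class="product_page"> element found')
    return str(article)
```

Everything outside the article (navigation, sidebar, footer) is dropped, which is where most of the token savings come from.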

Converting the HTML to Markdown for AI Efficiency

LLMs like Gemini work more efficiently and accurately with cleaner input. So, let’s convert the selected HTML to Markdown using the markdownify library.

    from markdownify import markdownify

This removes unwanted HTML clutter and helps reduce the number of tokens sent to Gemini, saving cost and improving performance.
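As a rough sketch, the conversion can be a one-line call to `markdownify` with its default options. The wrapper name `html_to_markdown` is our own.

```python
def html_to_markdown(html_fragment):
    """Convert an HTML fragment to Markdown and trim surrounding whitespace."""
    from markdownify import markdownify  # third-party; installed earlier with pip

    return markdownify(html_fragment).strip()
```

Comparing `len(html_fragment)` with the length of the returned Markdown gives a quick feel for how much smaller the input to Gemini becomes.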

Sending the Cleaned Markdown to Gemini for Data Extraction

Now, send the cleaned Markdown to Gemini AI and ask it to extract structured data, such as title, price, and stock status.

    import os
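The sketch below shows one way this step might be put together. The prompt wording, the helper names, and the model name `gemini-1.5-flash` are all assumptions on our part; `genai.configure`, `GenerativeModel`, and `generate_content` are the google-generativeai calls involved. Because models sometimes wrap JSON in a Markdown code fence, the parser strips one if present.

```python
import json

FENCE = "`" * 3  # Markdown code-fence marker

PROMPT_TEMPLATE = """Extract the following fields from the product page below.
Reply with a single JSON object and nothing else.
- "title": the book title
- "price": the price exactly as shown, including the currency symbol
- "availability": the stock status text

Product page (Markdown):
{markdown}
"""


def build_prompt(markdown):
    """Insert the cleaned Markdown into the extraction prompt."""
    return PROMPT_TEMPLATE.format(markdown=markdown)


def parse_json_reply(text):
    """Parse the model's reply, tolerating a surrounding code fence."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line (with its optional "json" tag) ...
        _, _, cleaned = cleaned.partition("\n")
        # ... and everything from the closing fence onward.
        cleaned = cleaned.rsplit(FENCE, 1)[0]
    return json.loads(cleaned)


def extract_with_gemini(markdown, api_key, model_name="gemini-1.5-flash"):
    """Send the Markdown to Gemini and return the extracted fields as a dict."""
    import google.generativeai as genai  # third-party Gemini SDK

    genai.configure(api_key=api_key)
    model = genai.GenerativeModel(model_name)  # model name is an assumption
    response = model.generate_content(build_prompt(markdown))
    return parse_json_reply(response.text)
```

Keeping the prompt builder and the reply parser separate from the API call means you can test both without spending tokens.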

Exporting the Results in JSON Format

Finally, we’ll save the extracted data into a .json file.

    import json
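A minimal version of the export step, using only the standard library, might look like this. The default filename `product.json` and the helper name `save_json` are placeholders of our own.

```python
import json
from pathlib import Path


def save_json(data, path="product.json"):
    """Write the extracted data to a UTF-8 JSON file and return its path."""
    out = Path(path)
    # ensure_ascii=False keeps characters such as the pound sign readable
    # in the file instead of escaping them as \u00a3.
    out.write_text(json.dumps(data, indent=2, ensure_ascii=False), encoding="utf-8")
    return out
```

Passing the dictionary returned by the Gemini step to `save_json` produces a pretty-printed file you can open in any editor.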

With that, your Gemini-powered Python web scraper is ready!

Complete Code Example

Below is the full Python script that brings everything together, from fetching the page to saving the extracted data in JSON format. This script is a great starting point for building more advanced AI-powered scrapers using Gemini.

    import requests

Example Output:

    {

Challenges and Limitations of Gemini AI in Web Scraping

Gemini AI for web scraping is powerful but comes with some limitations. Understand these before using it in real-world scraping projects.

1. High Token Usage

Gemini usage is metered per token (a small piece of text), counting both what you send and what you receive. If you send the full HTML of a page, the cost adds up quickly. That’s why converting HTML to Markdown helps: it reduces the token count and keeps only what’s essential.
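To get a feel for the difference before sending anything, you can estimate token counts locally. The sketch below assumes roughly four characters per token, which is only a rule of thumb for English text and not Gemini’s actual tokenizer; the sample strings are made up for illustration.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb, not Gemini's real tokenizer


def estimate_tokens(text, chars_per_token=CHARS_PER_TOKEN):
    """Return a rough token estimate based on character count."""
    return math.ceil(len(text) / chars_per_token)


def token_savings(html, markdown):
    """Return the estimated fraction of input tokens saved by sending Markdown."""
    before = estimate_tokens(html)
    after = estimate_tokens(markdown)
    if before == 0:
        return 0.0
    return 1 - after / before


# Illustrative sample: the same content as HTML and as Markdown.
sample_html = (
    '<article class="product_page"><h1>A Light in the Attic</h1>'
    '<p class="price_color">£51.77</p></article>'
)
sample_markdown = "# A Light in the Attic\n\n£51.77"
print(f"Estimated savings: {token_savings(sample_html, sample_markdown):.0%}")
```

For exact numbers, the SDK’s `count_tokens` method on a model object reports the count the API would actually use.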

2. Slower than Traditional Scraping

Since Gemini is an AI model, it takes more time to process text and return results than a simple HTML parser does. Every page also requires a round trip to the API, so if you’re scraping many pages, the added latency quickly becomes a significant issue.

3. Less Accurate for Complex Pages

Gemini may miss or misinterpret data, especially when the layout is complex or contains many repeated elements. Unlike rule-based scrapers, AI models can be unpredictable in these cases.
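One way to catch these mistakes is to validate every record before saving it. The sketch below is an assumption-laden example: the required fields, the price pattern, and the helper name `validate_record` are our own choices for the book page used in this guide.

```python
import re

REQUIRED_FIELDS = ("title", "price", "availability")  # hypothetical schema
PRICE_PATTERN = re.compile(r"^[£$€]\d+(?:\.\d{2})?$")


def validate_record(record, source_text):
    """Return a list of problems found in an extracted record (empty if none)."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or empty field: {field}")

    price = record.get("price")
    if isinstance(price, str) and price.strip() and not PRICE_PATTERN.match(price.strip()):
        problems.append(f"price does not look like a price: {price!r}")

    # A title that never appears in the input was probably invented by the model.
    title = record.get("title")
    if isinstance(title, str) and title.strip() and title.strip() not in source_text:
        problems.append("title not found in the source text")
    return problems
```

Records that come back with a non-empty problem list can be retried, logged, or routed to a rule-based fallback.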

4. Not Real-Time

Gemini requires time to analyze and return answers, making it unsuitable for real-time web scraping, such as monitoring prices every few seconds. It’s better for use cases where structured data extraction is more important than speed.

5. API Rate Limits

Like most AI platforms, Gemini has rate limits. You can only send a limited number of requests per minute or per day. Scaling is difficult unless you throttle your API calls or upgrade to a paid plan.
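A common way to stay within rate limits is to retry failed calls with exponential backoff. The helper below is a generic sketch, not part of the Gemini SDK; `call_with_backoff` and its parameters are hypothetical names, and the delays are arbitrary starting points.

```python
import random
import time


def call_with_backoff(func, max_attempts=5, base_delay=1.0,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call func(), retrying with exponentially growing delays on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            # Add up to 10% jitter so parallel workers do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))
```

You would wrap the Gemini request in a function or lambda and pass it as `func`. Narrowing `retry_on` to the SDK’s rate-limit exception (we believe this is `google.api_core.exceptions.ResourceExhausted`, but check it against your installed version) avoids retrying errors that will never succeed.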

How Crawlbase Smart AI Proxy Can Help You Scale

When web scraping with Gemini AI, you’ll run into one big problem: getting blocked by websites. Many sites detect bots and return errors or CAPTCHAs when they see unusual behavior. That’s where Crawlbase Smart AI Proxy comes in.

What is Crawlbase Smart AI Proxy?

Crawlbase Smart AI Proxy is a tool that allows you to scrape any website without getting blocked. It rotates IP addresses, handles CAPTCHAs, and fetches pages like a real user.

This is especially useful when you’re sending requests from your scraper to websites that don’t allow bots.

Benefits of Using Crawlbase Smart AI Proxy with Gemini AI

- ✅ Avoid IP blocks: Crawlbase handles proxy rotation for you.

- ✅ Bypass CAPTCHAs: It automatically solves most challenges.

- ✅ Save time: You don’t need to manage your own proxy servers.

- ✅ Get clean HTML: It returns ready-to-parse content, perfect for AI processing.

Example: Using Crawlbase Smart AI Proxy with Python

Here’s how to fetch a protected page using Crawlbase Smart AI Proxy before passing it to Gemini:

    import requests

Replace _USER_TOKEN_ with your actual Crawlbase Smart AI Proxy token.
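As a rough sketch, routing a request through the proxy comes down to passing a `proxies` dictionary to requests. The host `smartproxy.crawlbase.com` and port `8012` below are assumptions based on our reading of Crawlbase’s documentation, so confirm them in your dashboard; the helper names are hypothetical.

```python
def smart_proxy_settings(token, host="smartproxy.crawlbase.com", port=8012):
    """Build a requests-style proxies dict (host and port are assumptions)."""
    proxy_url = f"http://{token}@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_smart_proxy(url, token="_USER_TOKEN_", timeout=30):
    """Fetch a page through the proxy and return its HTML."""
    import requests  # third-party; installed earlier with pip

    response = requests.get(
        url,
        proxies=smart_proxy_settings(token),
        # Assumption: the proxy re-signs HTTPS traffic, so certificate checks
        # are turned off here. This disables TLS verification for the request.
        verify=False,
        timeout=timeout,
    )
    response.raise_for_status()
    return response.text
```

The returned HTML can go straight into the same BeautifulSoup, Markdown, and Gemini steps used for the unproxied request.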

Once you fetch the HTML with Smart AI Proxy, you can pass it to BeautifulSoup, convert it to Markdown, and process it with Gemini AI, just like we showed you earlier in this post.

Final Thoughts

Gemini AI makes web scraping in Python smarter and easier. It turns complex HTML into clean, structured data using AI. With BeautifulSoup and Markdown conversion, you can build a scraper that understands content better than traditional methods.

For sites with blocks or protection, use Crawlbase Smart AI Proxy. It greatly reduces the chance of getting blocked, even on heavily protected sites.

This guide showed you how to:

- Build a Gemini-powered scraper in Python

- Optimize input with HTML to Markdown

- Scale scraping with Crawlbase Smart AI Proxy

Now you can scrape smarter, faster, and more efficiently!

Frequently Asked Questions

Q. Can I use Gemini AI to scrape any website?

Yes, you can use Gemini AI to scrape many websites. However, some websites may have anti-bot protections, such as Cloudflare. For those, you’ll need tools like Crawlbase Smart AI Proxy to avoid getting blocked and access content smoothly.

Q. Why should I convert HTML to Markdown before sending it to Gemini?

Converting HTML to Markdown reduces the size of the data. The model can then process the input faster and uses fewer tokens, which saves you money, especially when you use Gemini AI for large-scale scraping projects.

Q. Is Gemini better than traditional web scraping tools?

Gemini is the stronger choice when you need AI-based content understanding. Traditional scraping tools extract raw data, while Gemini can summarize, clean, and interpret the content. For most projects, combining both methods gives the best results: use traditional tools to fetch and trim the page, then let Gemini extract the structured data.