In our increasingly data-centric world, the ability to access real-time information is not just advantageous; it’s often a necessity. This rings especially true for businesses and researchers who want to stay ahead of the curve. E-commerce websites are an abundant data source, offering a treasure trove of product details, pricing dynamics, and market insights. To harness this wealth of information effectively, we turn to web crawling.

This comprehensive guide is your roadmap to mastering the art of web crawling for e-commerce websites using JavaScript in combination with the versatile Crawlbase Crawling API. As we delve into the intricacies of web crawling, you’ll discover how to navigate and extract valuable data from these dynamic online marketplaces. To illustrate our approach, we’ll use Daraz.pk, a renowned and widely used e-commerce platform, as our prime example.

Through the following sections, you’ll gain a deep understanding of web crawling fundamentals and practical insights into building your own web crawler. By the end of this guide, you’ll be equipped to access and analyze e-commerce data efficiently, empowering you to make informed business decisions and drive your research forward. So, let’s embark on this journey into the world of web crawling and unlock the wealth of information that e-commerce websites have to offer.

Table of Contents

- What is an E-Commerce Website?

- The Role of Web Crawling in E-Commerce

- Introducing Crawlbase Crawling API

- Benefits of using Crawlbase Crawling API

- Crawlbase NodeJS Library

- Installing NodeJS and NPM

- Setting the Project Directory

- Installing Required Libraries

- Choosing the Right Development IDE

- Getting a Token for Crawlbase Crawling API

- Importance of Crawling Daraz.pk Website

- Understanding the Search Page Structure of Daraz.pk Website

- Importing Essential NodeJS Modules

- Configuring Your Crawlbase API token

- Identifying Selectors for Important Information

- Crawling the Selected E-Commerce Website

- Crawling Products hidden in Pagination

- Downloading Scraped Data as CSV file

- Integrating SQLite Databases to Save the Data

Getting Started

Before we dive into technical details, it is important to understand the concept of E-Commerce websites and shed light on their significance in the digital age. By exploring the role of web crawling within E-Commerce, readers will understand how data extraction, organization, and utilization are pivotal in gaining a competitive edge in the online marketplace.

What is an E-Commerce Website?

An e-commerce website, short for electronic commerce website, is an online platform that enables the buying and selling of products or services over the Internet. These websites come in various shapes and sizes, from small independent boutiques to massive multinational corporations. What unifies them is the digital nature of their operations, allowing customers to browse products, make purchases, and arrange for deliveries, all through the power of the web.

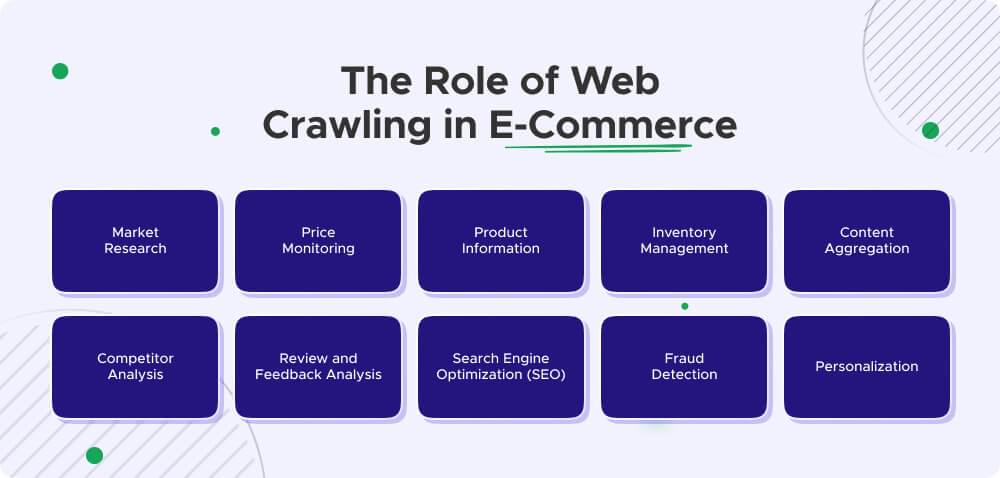

The Role of Web Crawling in E-Commerce

Web crawling plays a pivotal role in the e-commerce ecosystem, acting as a silent engine that keeps product information and market data available in real time. Here’s how it works: web crawlers, also known as web spiders or bots, are automated scripts that systematically navigate websites, collecting data. This data can encompass product details, pricing information, customer reviews, and more.

For e-commerce businesses, web crawling is indispensable. It enables them to stay competitive by monitoring the prices of their own products and those of their competitors. This data-driven approach supports dynamic pricing strategies that keep products competitively priced in real time. Web crawling also aids inventory management by tracking product availability, helping ensure that products are in stock when customers want to buy them. Many e-commerce businesses also rely on dedicated systems, such as 3PL inventory management tools, to manage stock and streamline order fulfillment across multiple platforms.

Researchers and analysts also rely on web crawling for market studies and trend analysis. By aggregating data from various e-commerce websites, they gain insights into consumer behavior, market fluctuations, and the popularity of specific products. This information is invaluable for making informed business decisions, predicting market trends, and staying ahead of the competition.

Getting Started with Crawlbase Crawling API

Now that we’ve established the importance of web crawling in e-commerce, it’s time to dive into the tools and techniques that will empower you to crawl e-commerce websites effectively. In this section, we’ll introduce you to the Crawlbase Crawling API, shed light on the benefits of harnessing its power, and explore the Crawlbase NodeJS library, which will serve as our trusty companion on this web crawling journey.

Introducing Crawlbase Crawling API

The Crawlbase Crawling API is a robust, developer-friendly solution that simplifies web crawling and scraping tasks. It offers a wide array of features and functionalities, making it an ideal choice for extracting data from e-commerce websites like Amazon, eBay, Daraz.pk, Alibaba, and beyond.

At its core, the Crawlbase Crawling API enables you to send HTTP requests to target websites, retrieve HTML content, and navigate through web pages programmatically. This means you can access the underlying data of a website without the need for manual browsing, copying, and pasting. Instead, you can automate the process, saving time and effort. You can read more at Crawlbase Crawling API Documentation.
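To make this concrete, here is a minimal sketch of what such a request looks like at the HTTP level. It assumes the Crawling API’s documented endpoint pattern, `https://api.crawlbase.com/?token=...&url=...`; the helper name `buildCrawlingApiUrl` and the sample Daraz.pk search URL are illustrative. The rest of this guide uses the Crawlbase NodeJS library, which wraps these requests for you.

```javascript
// Minimal sketch: build a Crawling API request URL by hand.
// Assumes the endpoint pattern https://api.crawlbase.com/?token=...&url=...
// buildCrawlingApiUrl is a hypothetical helper name used for illustration.
function buildCrawlingApiUrl(token, targetUrl, extraParams = {}) {
  if (!token) {
    throw new Error('A Crawlbase token is required');
  }
  const apiUrl = new URL('https://api.crawlbase.com/');
  // URLSearchParams percent-encodes the target URL, so the target's own
  // query string (?q=...&page=...) stays intact inside the url parameter.
  apiUrl.searchParams.set('token', token);
  apiUrl.searchParams.set('url', targetUrl);
  // Any optional Crawling API parameters are appended as strings.
  for (const [key, value] of Object.entries(extraParams)) {
    apiUrl.searchParams.set(key, String(value));
  }
  return apiUrl.toString();
}

// Example with an assumed Daraz.pk search URL.
const requestUrl = buildCrawlingApiUrl('YOUR_TOKEN', 'https://www.daraz.pk/catalog/?q=watch');
console.log(requestUrl);
```

Sending a GET request to the resulting URL with any HTTP client returns the target page’s HTML in the response body.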

Benefits of using Crawlbase Crawling API

Why opt for the Crawlbase Crawling API when embarking on your web crawling journey? Here are some compelling reasons:

- Data Accuracy and Consistency: The Crawlbase Crawling API ensures that the data you collect is accurate and consistent. Unlike manual data entry, which is prone to errors and inconsistencies, the API fetches data directly from the source, reducing the chances of inaccuracies.

- Scalability: Whether you need to crawl a handful of pages or thousands of them, the Crawlbase Crawling API is built to handle your needs. It’s highly scalable, making it suitable for projects of all sizes.

- Real-Time Data: In the fast-paced world of e-commerce, having access to real-time data can make all the difference. The API provides the most up-to-date information, allowing you to stay ahead of the competition.

- Proxy Management: When dealing with websites’ anti-scraping defenses, such as IP blocking, Crawlbase offers an efficient proxy management system that involves IP rotation. This particular functionality assists in circumventing IP bans and ensuring consistent and dependable access to your desired data.

- Convenience: The Crawlbase API eliminates the need to create and manage your own custom scraper or crawler. It operates as a cloud-based solution, handling the technical intricacies and allowing you to focus on extracting valuable data.

- Cost-Efficiency: Building and maintaining an in-house web scraping solution can significantly strain your budget. By contrast, the Crawlbase Crawling API offers an economical alternative: you pay only for the services you use, aligning your expenses with your needs.

Crawlbase NodeJS Library

To make the most of the Crawlbase Crawling API, you need a convenient way to call it from your code. This is where the Crawlbase NodeJS library comes into play. NodeJS is a popular runtime environment for executing JavaScript code outside of a web browser, and it’s an excellent choice for building web crawlers.

The Crawlbase NodeJS library simplifies the integration of the Crawlbase Crawling API into your web crawling projects. It provides functions and utilities that make sending requests, handling responses, and parsing data a breeze. Whether you’re an experienced developer or just starting with web crawling, the Crawlbase NodeJS library will be your go-to tool for building powerful and efficient web crawlers.

In the following sections, we’ll walk you through setting up your development environment, configuring the Crawlbase Crawling API, and writing your first JavaScript crawling script. Together, we’ll explore the incredible possibilities of web crawling in the e-commerce domain.

Setting Up Your Development Environment

Before you can dive into web crawling with JavaScript and the Crawlbase Crawling API, it’s essential to prepare your development environment. This section provides a concise yet detailed guide to help you set up the necessary tools and libraries for seamless e-commerce website crawling.

Installing NodeJS and NPM

NodeJS and NPM (Node Package Manager) are the backbone of modern JavaScript development. They allow you to execute JavaScript code outside the confines of a web browser and manage dependencies effortlessly. Here’s a straightforward installation guide:

- NodeJS: Visit the official NodeJS website and download the latest LTS (Long-Term Support) version tailored for your operating system. Execute the installation following the platform-specific instructions provided.

- NPM: NPM comes bundled with NodeJS. After NodeJS installation, you automatically have NPM at your disposal.

To confirm a successful installation, open your terminal or command prompt and run the following commands:

```bash
node --version
npm --version
```

These commands will display the installed versions of NodeJS and NPM, ensuring a smooth setup.

Setting the Project Directory

To begin, create a directory using the mkdir command. It is called ecommerce crawling for the sake of this tutorial, but you can replace the name with one of your choosing:

```bash
mkdir ecommerce\ crawling
```

Next, change into the newly created directory using the cd command:

```bash
cd ecommerce\ crawling/
```

Initialize the project directory as an npm package using the npm command:

```bash
npm init -y
```

The command creates a package.json file, which holds important metadata for your project. The -y option instructs npm to accept all defaults.

After running the command, the following output will display on your screen:

```text
Wrote to /home/hassan/Desktop/ecommerce crawling/package.json:
```

Installing Required Libraries

To handle web crawling and API interactions, install the following JavaScript libraries in your project using NPM:

```bash
# From your project directory, install the required libraries
npm install cheerio crawlbase sqlite3 csv-writer
```

Here’s a brief overview of these vital libraries:

- Cheerio: As an agile and high-performance library, Cheerio is designed for efficiently parsing HTML and XML documents. It plays a pivotal role in easily extracting valuable data from web pages.

- Crawlbase: Crawlbase simplifies interactions with the Crawlbase Crawling API, streamlining the process of website crawling and data extraction.

- SQLite3: SQLite3 stands as a self-contained, serverless, and zero-configuration SQL database engine. It will serve as your repository for storing the troves of data collected during crawling.

- csv-writer: It simplifies the process of writing data to CSV files, making it easy to create structured data files for storage or further analysis in your applications. It provides an intuitive API for defining headers and writing records to CSV files with minimal code.

Choosing the Right Development IDE

Selecting the right Integrated Development Environment (IDE) can significantly boost productivity. While you can write JavaScript code in a simple text editor, using a dedicated IDE can offer features like code completion, debugging tools, and version control integration.

Some popular IDEs for JavaScript development include:

- Visual Studio Code (VS Code): VS Code is a free, open-source code editor developed by Microsoft. It has a vibrant community and offers a wide range of extensions for JavaScript development.

- WebStorm: WebStorm is a commercial IDE by JetBrains, known for its intelligent coding assistance and robust JavaScript support.

- Sublime Text: Sublime Text is a lightweight and customizable text editor popular among developers for its speed and extensibility.

Choose an IDE that suits your preferences and workflow.

Getting a Token for Crawlbase Crawling API

To access the Crawlbase crawling API, you need an access token. To get the token, you first need to create an account on Crawlbase. Now, let’s get you set up with a Crawlbase account. Follow these steps:

- Visit the Crawlbase Website: Open your web browser and navigate to the Crawlbase signup page to begin the registration process.

- Provide Your Details: You’ll be asked to provide your email address and create a password for your Crawlbase account. Fill in the required information.

- Verification: After submitting your details, you may need to verify your email address. Check your inbox for a verification email from Crawlbase and follow the instructions provided.

- Login: Once your account is verified, return to the Crawlbase website and log in using your newly created credentials.

- Access Your API Token: You’ll need an API token to use the Crawling API. You can find your tokens in your Crawlbase account.

Crawlbase provides two types of tokens: the Normal Token (TCP) for static website pages and the JavaScript Token (JS) for dynamic or JavaScript rendered website pages. You can read more here.

With NodeJS, NPM, essential libraries, and your API token in place, you’re now ready to dive into the world of e-commerce website crawling using JavaScript and the Crawlbase Crawling API. In the following sections, we’ll guide you through the process step by step.

Selecting Your Target E-Commerce Website

Choosing the right e-commerce website for your crawling project is a crucial decision. In this section, we’ll explore the significance of selecting Daraz.pk as our target website and delve into understanding its search page structure.

Importance of Crawling Daraz.pk Website

Daraz.pk, one of South Asia’s largest online marketplaces, is an excellent choice for our web crawling demonstration. Here’s why:

- Abundance of Data: Daraz.pk boasts an extensive catalog of products, making it a treasure trove of information for data enthusiasts. From electronics to fashion, you’ll find a diverse range of products to explore, providing a comprehensive example of crawling e-commerce data.

- Real-World Relevance: Crawling an e-commerce giant like Daraz.pk provides a practical example that resonates with real-world scenarios. Whether you’re a business looking to monitor competitor prices, a researcher studying consumer trends, or a developer seeking to create a price comparison tool, the data you can extract from such a platform is invaluable.

- Varied Page Structures: Daraz.pk’s website features a variety of page structures, including product listings, search results, and individual product pages. This diversity allows us to cover a broad spectrum of web scraping scenarios, making it an ideal playground for learning and practical application.

- Regional Significance: Daraz.pk’s presence in South Asia gives it regional significance. Understanding the products and pricing specific to this area can be highly beneficial if you’re interested in regional market trends.

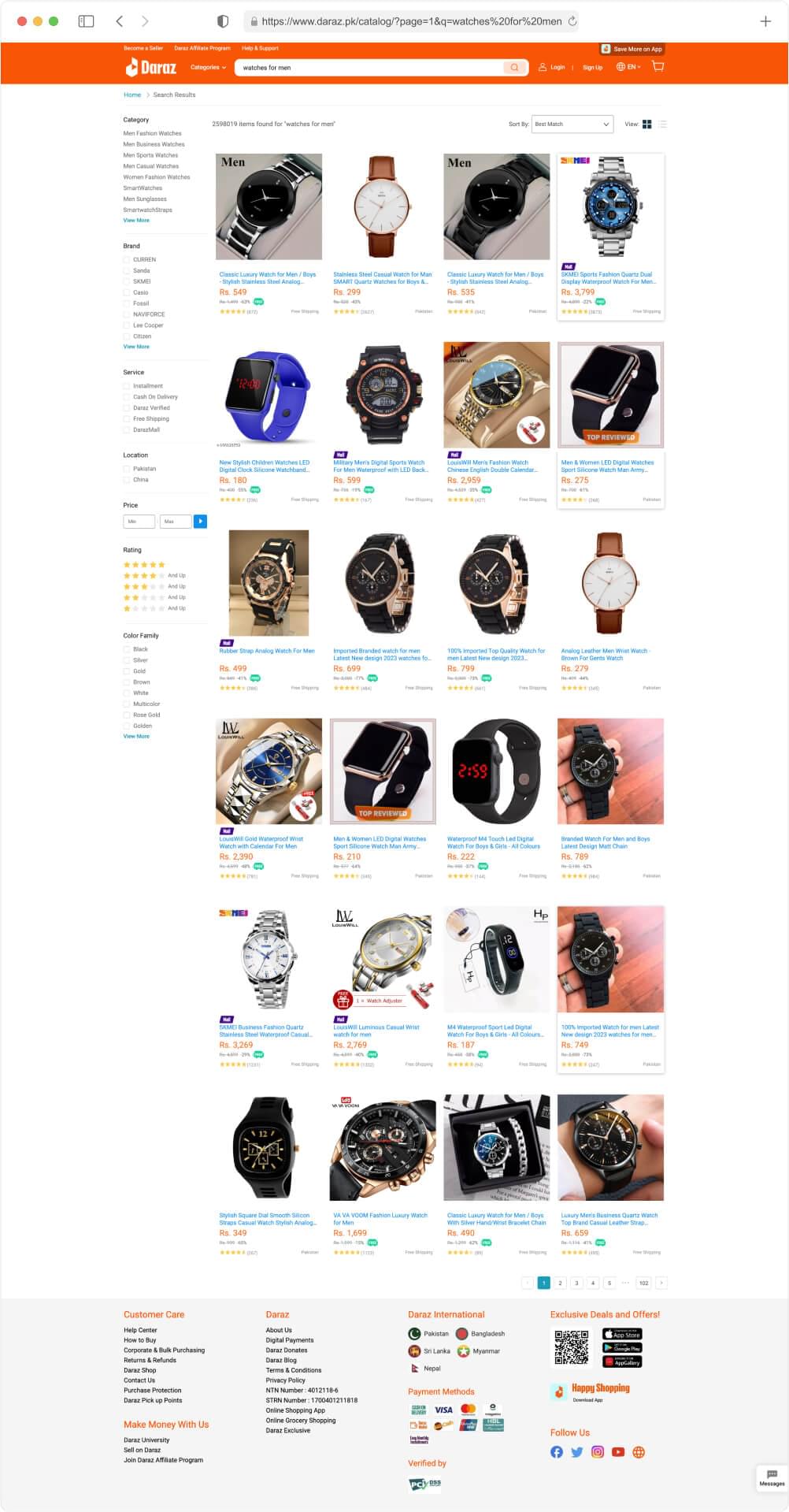

Understanding the Search Page Structure of Daraz.pk Website

To effectively crawl Daraz.pk, it’s essential to grasp the structure of its search pages. These pages are the starting point for many e-commerce-related queries, making them a prime focus for web crawling. Daraz.pk usually shows 40 results per page.

Here’s a detailed breakdown:

- Search Bar: Daraz.pk’s homepage features a prominent search bar where users can enter keywords to find products. This search bar serves as the entry point for customers searching for specific items, making it a vital component to understand.

- Search Results: Upon entering a search query, Daraz.pk displays a list of relevant products. Each product listing typically includes an image, title, price, and user ratings. Understanding how this data is structured is essential for effective data extraction.

- Product Pages: Clicking on a product in the search results leads to an individual product page. These pages contain detailed information about a specific product, including its description, specifications, customer reviews, and related items. Understanding how these pages are structured is vital for more in-depth data extraction.

- Pagination: Given the potentially large number of search results, pagination is common on these pages. Users can navigate multiple results pages to explore a broader range of products. Handling pagination is key to web crawling, especially on e-commerce websites where data can span multiple pages.

- Footer: The footer of Daraz.pk’s search pages often contains useful links and information. While not directly related to search results, it can be a valuable resource for extracting additional data or navigating the website efficiently.
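Because the search query and the page number both live in the URL, a crawler can generate each results page directly instead of clicking through. The sketch below assumes a search URL of the form `https://www.daraz.pk/catalog/?q=<query>&page=<n>`; that path, the parameter names, and the helper name `buildSearchUrl` are assumptions, so confirm the pattern in your browser’s address bar before relying on it.

```javascript
// Sketch: build Daraz.pk search-result URLs for a query and page number.
// ASSUMPTION: the search URL pattern is /catalog/?q=<query>&page=<n>;
// check the real pattern in your browser before using this.
const DARAZ_SEARCH_BASE = 'https://www.daraz.pk/catalog/';

function buildSearchUrl(query, page = 1) {
  if (!Number.isInteger(page) || page < 1) {
    throw new RangeError(`page must be a positive integer, got ${page}`);
  }
  const url = new URL(DARAZ_SEARCH_BASE);
  // URLSearchParams encodes spaces in the query as "+".
  url.searchParams.set('q', query);
  // Page 1 is the default results page, so only add the parameter for later pages.
  if (page > 1) {
    url.searchParams.set('page', String(page));
  }
  return url.toString();
}

console.log(buildSearchUrl('watches for men'));    // https://www.daraz.pk/catalog/?q=watches+for+men
console.log(buildSearchUrl('watches for men', 3)); // https://www.daraz.pk/catalog/?q=watches+for+men&page=3
```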

It’s worth noting that Daraz.pk loads search results dynamically using JavaScript. To crawl such pages effectively, you’ll need to use the Crawlbase Crawling API with a JavaScript token (JS token). With a JS token, you can use query parameters such as `page_wait` and `ajax_wait`, which are essential for handling JavaScript rendering and AJAX loading. You can read more at Crawlbase Crawling API query parameters. This capability allows you to interact with dynamically generated content, ensuring you can access the data you need for your web crawling project.
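For illustration, the following sketch requests a JavaScript-rendered page through the Crawling API’s HTTP endpoint using the `fetch` built into Node.js 18 and later, passing `page_wait` and `ajax_wait`. The function name `fetchRenderedHtml`, the default wait of 5000 ms, and the 90-second client-side timeout are illustrative choices, not Crawlbase requirements; the script in this guide goes through the Crawlbase NodeJS library instead.

```javascript
// Sketch: fetch a JavaScript-rendered page through the Crawling API with Node's
// built-in fetch (Node.js 18+). Use a JS token: page_wait and ajax_wait only
// apply when the page is rendered in a browser.
// fetchRenderedHtml and its defaults are illustrative, not part of any library.
async function fetchRenderedHtml(jsToken, targetUrl, { pageWait = 5000, ajaxWait = true } = {}) {
  const apiUrl = new URL('https://api.crawlbase.com/');
  apiUrl.searchParams.set('token', jsToken);
  apiUrl.searchParams.set('url', targetUrl);
  apiUrl.searchParams.set('page_wait', String(pageWait)); // milliseconds to wait before capturing HTML
  apiUrl.searchParams.set('ajax_wait', String(ajaxWait)); // wait for AJAX requests to finish

  // Give up on the client side if the round trip takes longer than 90 seconds.
  const response = await fetch(apiUrl, { signal: AbortSignal.timeout(90_000) });
  if (!response.ok) {
    throw new Error(`Crawling API request failed with HTTP ${response.status}`);
  }
  return response.text(); // rendered HTML of the target page
}
```

A longer `page_wait` gives slow pages more time to render, at the cost of slower responses, so it is worth tuning against a few real search pages.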

Understanding the dynamics of JavaScript rendering and AJAX loading is crucial when dealing with modern, interactive websites like Daraz.pk. By honing in on the search page structure, you’ll be well-prepared to extract valuable data from Daraz.pk and gain insights into e-commerce web scraping.

Writing the JavaScript Crawling Script

Let’s explore how to write the JavaScript crawling script for Daraz.pk using the Crawlbase NodeJS library. This section will cover each step in detail with code examples.

Importing Essential NodeJS Modules

NodeJS shines with its comprehensive module ecosystem in the web crawling and scraping world. These modules simplify complex tasks, making extracting and manipulating data from web pages easier. Let’s begin by importing the essential modules:

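```javascript
const { CrawlingAPI } = require('crawlbase'); // Crawlbase Crawling API client
const cheerio = require('cheerio'); // HTML parsing and CSS-selector queries
```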

Configuring Your Crawlbase API token

Now, let’s configure your Crawling API token. This token is the gateway to using Crawlbase crawling API.

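```javascript
const crawlbaseApiToken = 'YOUR_CRAWLBASE_JS_TOKEN'; // Replace with your actual Crawlbase JS token
const api = new CrawlingAPI({ token: crawlbaseApiToken }); // Client used for every Crawling API request
```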

By inserting your unique API token here, your script can use Crawlbase Crawling API services throughout the crawling process. Using the Crawling API with a JS token gives us the functionality needed to navigate JavaScript-rendered websites efficiently, and Crawlbase’s IP rotation helps safeguard against blocking.
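Hard-coding the token is fine for a quick experiment, but it is easy to commit to version control by accident. One common alternative, sketched here, reads it from an environment variable; the variable name `CRAWLBASE_JS_TOKEN` and the helper `getCrawlbaseToken` are hypothetical, so adapt the naming to your project.

```javascript
// Sketch: read the Crawlbase token from an environment variable instead of
// hard-coding it. CRAWLBASE_JS_TOKEN is a hypothetical variable name.
function getCrawlbaseToken(env = process.env, name = 'CRAWLBASE_JS_TOKEN') {
  const token = (env[name] || '').trim();
  if (!token) {
    // Fail fast with a clear message rather than sending requests without a token.
    throw new Error(`Set the ${name} environment variable to your Crawlbase JS token`);
  }
  return token;
}

// Usage (shell):  CRAWLBASE_JS_TOKEN=your_token node crawler.js
// Usage (code):   const api = new CrawlingAPI({ token: getCrawlbaseToken() });
```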

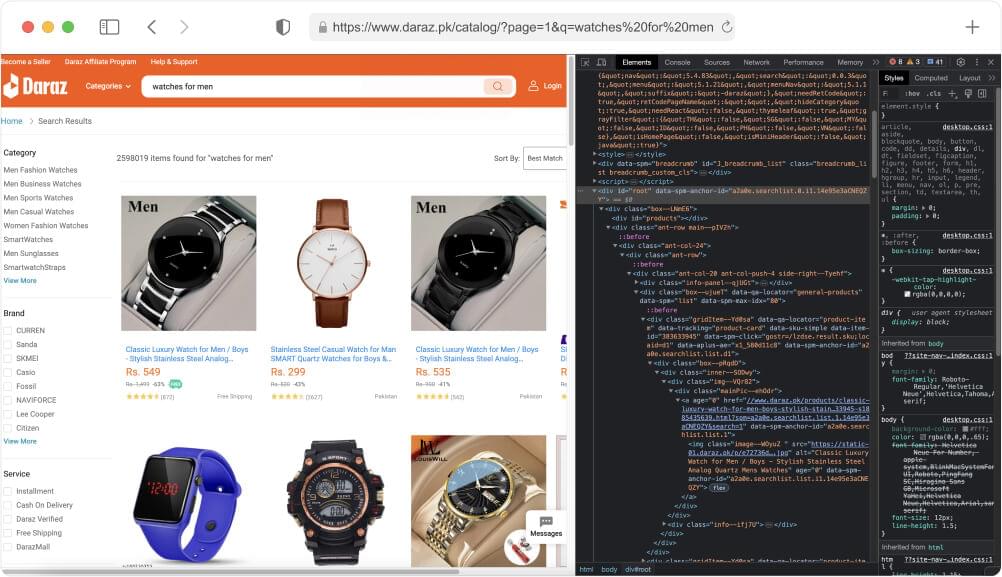

Identifying Selectors for Important Information

When crawling e-commerce websites like Daraz.pk, one of the essential steps is to identify the precise HTML elements that contain the information you want to extract. In this section, we’ll guide you through the process of finding these elements by inspecting the web page and selecting the right CSS selectors to use with Cheerio.

- Inspect the Web Page:

Before you can pinpoint the selectors, you need to inspect the Daraz.pk search page. Right-click on the element you’re interested in (e.g., a product title, price, or rating) and select “Inspect” from the context menu. This will open your browser’s developer tools, allowing you to explore the page’s HTML structure.

- Locate the Relevant Elements:

Within the developer tools, you’ll see the HTML structure of the page. Start by identifying the HTML elements that encapsulate the data you want to scrape. For instance, product titles might be enclosed in `<h2>` tags, while prices could be within `<span>` elements with specific classes.

- Determine CSS Selectors:

Once you’ve located the relevant elements, it’s time to create CSS selectors that target them accurately. CSS selectors are patterns used to select the elements you want based on their attributes, classes, or hierarchy in the HTML structure.

Here are some common CSS selectors:

- Element Selector: Selects HTML elements directly. For example, `h2` selects all `<h2>` elements.

- Class Selector: Selects elements by their class attribute. For example, `.product-title` selects all elements with the class “product-title.”

- ID Selector: Selects a unique element by its ID attribute. For example, `#product-123` selects the element with the ID “product-123.”

- Test the Selectors:

After defining your selectors, test them in the browser’s developer console to make sure they target the correct elements: run them with JavaScript (for example, with `document.querySelectorAll`) and check that they return the expected results. The code in the following sections uses the CSS selectors that were current when this blog was written; if Daraz.pk changes its markup, you will need to update them.
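A quick way to do this is to paste a small helper into the DevTools console that counts how many elements each candidate selector matches. The selectors in the usage line are placeholders, not Daraz.pk’s real class names, and the helper name `countSelectorMatches` is hypothetical; it relies only on the standard `querySelectorAll` method, so it works on `document` in any modern browser.

```javascript
// Sketch: report how many elements each candidate CSS selector matches.
// Paste into the browser's DevTools console and call it with `document`.
function countSelectorMatches(root, selectors) {
  const report = {};
  for (const [field, selector] of Object.entries(selectors)) {
    try {
      report[field] = root.querySelectorAll(selector).length;
    } catch (err) {
      // querySelectorAll throws a SyntaxError for malformed selectors.
      report[field] = `invalid selector: ${err.message}`;
    }
  }
  return report;
}

// Usage in the console (placeholder selectors; replace them with the ones you found):
// console.table(countSelectorMatches(document, { title: '.product-title', price: '.price' }));
```

Since Daraz.pk usually shows 40 results per page, a selector for the product card should match roughly 40 elements; a count of 0 or several hundred is a sign it needs adjusting.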

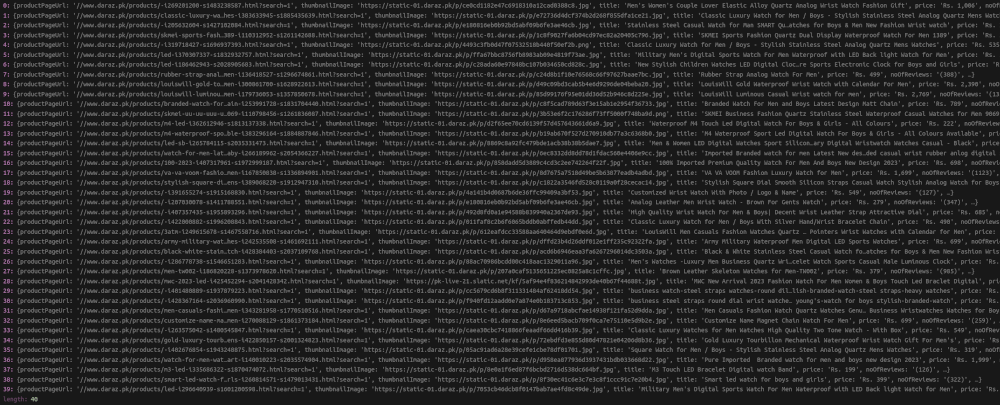

Crawling the Selected E-Commerce Website

In this section, we’ll dive into a practical example of how to crawl the Daraz e-commerce website with the setup we did previously. The example provided demonstrates how to fetch search results for the query “watches for men” from Daraz.pk and extract essential product information.

```javascript
// Import necessary Node.js modules
```

The crawlDaraz function initiates the crawling process. It first constructs the URL for the Daraz.pk search based on the provided query. Then, it utilizes the Crawlbase API to send a GET request to this URL, incorporating a page wait time of 5000 milliseconds (5 seconds) to ensure that JavaScript rendering completes. If the request is successful (HTTP status code 200), the script parses the HTML content of the page using ‘cheerio’. It then proceeds to extract product information by traversing the HTML structure with predefined selectors. The extracted data, including product URLs, images, titles, prices, review counts, and locations, is organized into objects and added to an array. Finally, this array of product data is returned.

The second part of the code invokes the startCrawling function, which initiates the crawling process by calling crawlDaraz with the query ‘watches for men.’ The extracted results are logged to the console, making them available for further processing or analysis. This code showcases a technical implementation of web crawling and scraping that is equipped to handle dynamic content loading on the Daraz.pk website using Crawlbase Crawling API.
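Text pulled out of product cards usually needs some cleanup before it is useful for analysis. The helper below is a hypothetical post-processing step, not part of the script above: it assumes prices appear as strings like `Rs. 1,299`, review counts like `(23)`, and links as relative or protocol-relative URLs, and turns them into numbers and absolute URLs. Adjust the patterns to whatever your selectors actually return.

```javascript
// Sketch: normalize raw strings scraped from a search-result card.
// ASSUMPTIONS: price text like "Rs. 1,299", review text like "(23)",
// and hrefs that may be relative ("/products/...") or protocol-relative ("//...").
const BASE_URL = 'https://www.daraz.pk';

function parseNumber(text) {
  // Take the first number in the text, allowing thousands separators,
  // e.g. "Rs. 1,299" -> 1299 and "(23)" -> 23. Returns null if there is none.
  const match = String(text ?? '').match(/\d[\d,]*(?:\.\d+)?/);
  return match ? Number(match[0].replace(/,/g, '')) : null;
}

function normalizeProduct(raw) {
  return {
    // new URL(href, base) resolves relative and protocol-relative links.
    productPageUrl: raw.productPageUrl ? new URL(raw.productPageUrl, BASE_URL).href : null,
    thumbnailImage: raw.thumbnailImage ? new URL(raw.thumbnailImage, BASE_URL).href : null,
    title: (raw.title ?? '').trim(),
    price: parseNumber(raw.price),
    // Cards without a review count are treated as having zero reviews.
    noOfReviews: parseNumber(raw.noOfReviews) ?? 0,
    location: (raw.location ?? '').trim(),
  };
}
```

Keeping this step separate from the selector code means a markup change on the site only affects the extraction part, while the cleaned data keeps the same shape for CSV or database storage.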

Output Screenshot:

Crawling Products Hidden in Pagination

When crawling an e-commerce website like Daraz.pk, it’s common to encounter search result pages that are spread across multiple pages due to the large volume of products. To ensure comprehensive data collection, we need to handle this pagination effectively.

Pagination is managed through numerical page links or “next page” buttons. Here’s how we tackle it:

- Determining the Total Pages: Initially, we fetch the first search page and inspect it to determine the total number of result pages available. This step is crucial for knowing how many pages we need to crawl.

- Iterating Through Pages: With the total number of pages in hand, we iterate through each page and request its product data. We follow the pagination structure by appending the page number to the search URL, requesting page 1 through page N, where N is the total number of pages.

- Extracting Data: We extract the product data on each page as we did on the initial page. This includes details such as product URLs, images, titles, prices, reviews, and locations.

- Aggregating Results: Finally, we aggregate the results from each page into a single dataset. This ensures that we capture data from every page of search results, providing a comprehensive dataset for analysis.
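The four steps above map onto a small, library-agnostic loop. In this sketch, `getTotalPages` and `crawlPage` are passed in as functions (standing in for the guide’s `getTotalPages` and `crawlDarazPage`), and `maxPages` caps how many pages are fetched. The function name `crawlAllPages`, the optional `onPage` callback, and the `delayMs` pause are illustrative additions, not part of the original script. Pages are fetched one at a time to keep the request rate modest.

```javascript
// Sketch: determine the page count, crawl pages 1..N sequentially, aggregate results.
// getTotalPages(query) and crawlPage(query, page) are supplied by the caller and
// must return Promises; crawlPage should resolve to an array of products.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function crawlAllPages(query, { getTotalPages, crawlPage, maxPages = Infinity, delayMs = 0, onPage } = {}) {
  const totalPages = await getTotalPages(query);
  const lastPage = Math.min(totalPages, maxPages); // 0 pages means nothing to crawl
  const results = [];

  for (let page = 1; page <= lastPage; page += 1) {
    const products = await crawlPage(query, page);
    results.push(...products);
    if (onPage) {
      await onPage(products, page); // e.g. save each page as soon as it arrives
    }
    if (delayMs > 0 && page < lastPage) {
      await sleep(delayMs); // optional pause between requests
    }
  }
  return results;
}
```

Passing `maxPages: 5` mirrors the guide’s choice of crawling the first five pages; leaving it out crawls every page the site reports.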

Let’s update our previous code to handle pagination on Daraz.pk search pages.

```javascript
// Import necessary Node.js modules
```

The code consists of two main functions: getTotalPages and crawlDarazPage.

- `getTotalPages` fetches the initial search page, extracts the total number of pages available for the given query, and returns this number. It uses Cheerio to parse the page and extracts the total page count from the pagination control.

- `crawlDarazPage` is responsible for crawling a specific page of search results. It takes the `query` and `page` as parameters, constructs the URL for that page, and extracts product data from it.
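Exactly how the page count is read depends on Daraz.pk’s markup at the time you crawl. One robust approach, sketched here with a hypothetical helper, is to collect the text of every pagination item (with Cheerio, for example `$(selector).map((i, el) => $(el).text()).get()`, where `selector` is whatever matches the pagination items) and take the largest whole number, which skips labels such as “Previous,” “Next,” or an ellipsis. Treating a missing pagination control as a single page is an assumption of this sketch.

```javascript
// Sketch: derive the total page count from the visible pagination labels.
// Input is the text of each pagination item, e.g. as collected with Cheerio.
// Non-numeric labels ("Previous", "Next", "...") are ignored.
function totalPagesFromLabels(labels) {
  const pageNumbers = labels
    .map((label) => String(label).trim())
    .filter((label) => /^\d+$/.test(label))
    .map(Number);
  // ASSUMPTION: no numbered links means all results fit on a single page.
  return pageNumbers.length > 0 ? Math.max(...pageNumbers) : 1;
}

// Illustrative labels, not captured from the live site:
console.log(totalPagesFromLabels(['Previous', '1', '2', '3', '...', '102', 'Next'])); // 102
```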

In the startCrawling function, we determine the total number of pages using getTotalPages. If there are pages to crawl (i.e., totalPages is greater than zero), we initialize an empty results array. We then loop through the desired number of pages (in this case, the first 5 pages) and use crawlDarazPage to fetch and extract product data from each page. The results are accumulated in the results array.

By handling pagination this way, your web crawler can collect product data from every search result page on Daraz.pk or similar websites; raise or remove the five-page limit when you need full coverage. This approach makes your web scraping efforts more thorough and effective.

Storing Data Efficiently

After successfully scraping data from an e-commerce website like Daraz, the next step is to store this valuable information efficiently. Proper data storage ensures you can access and use the scraped data for various purposes. This section explores two methods for storing your scraped data: downloading it as a CSV file and saving it to an SQLite database.

Downloading Scraped Data as CSV file

CSV (Comma-Separated Values) is a widely used format for storing structured data. It’s easy to work with and can be opened by various spreadsheet applications like Microsoft Excel and Google Sheets. To save scraped data as a CSV file in your NodeJS application, you can use libraries like csv-writer. Here’s how to use it in our example:

```javascript
const createCsvWriter = require('csv-writer').createObjectCsvWriter;
```

In this example, we’ve created a CSV writer with headers that correspond to the fields we have scraped: “productPageUrl”, “thumbnailImage”, “title”, “price”, “noOfReviews”, and “location”. You can then use the saveToCsv function to save your data as a CSV file.
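Product titles frequently contain commas and quotation marks, which is exactly what a CSV library escapes for you. To show what that involves, here is a dependency-free sketch following the common RFC 4180 quoting rules: quote any field that contains a comma, a double quote, or a line break, and double any embedded quotes. It illustrates the format rather than replacing `csv-writer`, and the helper names are hypothetical.

```javascript
// Sketch: serialize scraped products to CSV text with RFC 4180-style quoting,
// using only Node's standard library.
const fs = require('node:fs');

const CSV_FIELDS = ['productPageUrl', 'thumbnailImage', 'title', 'price', 'noOfReviews', 'location'];

function escapeCsvField(value) {
  const text = value === null || value === undefined ? '' : String(value);
  // Quote when the field contains a comma, a double quote, or a line break,
  // doubling any embedded double quotes.
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

function productsToCsv(products, fields = CSV_FIELDS) {
  const lines = [fields.join(',')]; // header row
  for (const product of products) {
    lines.push(fields.map((field) => escapeCsvField(product[field])).join(','));
  }
  return lines.join('\r\n') + '\r\n'; // RFC 4180 uses CRLF line endings
}

function writeProductsCsv(path, products) {
  fs.writeFileSync(path, productsToCsv(products), 'utf8');
}
```

With this quoting, a title such as `Watch, "Gold" edition` is written as `"Watch, ""Gold"" edition"` and stays in a single column when opened in a spreadsheet.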

Integrating SQLite Databases to Save the Data

SQLite is a lightweight, serverless, and self-contained SQL database engine that’s ideal for embedding into applications. It provides a reliable way to store structured data. To integrate SQLite databases into your web scraping application for data storage, you can use the sqlite3 library. Here’s how we can use it in our example:

```javascript
const sqlite3 = require('sqlite3').verbose();
```

In this example, we first open a SQLite database and create a table called products to store the scraped data. We then define a function saveToDatabase to insert data into this table. After inserting the data, remember to close the database connection with db.close().
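For reference, here is a sketch of how the table creation and inserts could look with the `sqlite3` package’s `serialize`, `run`, `prepare`, and `finalize` methods. The column names mirror the scraped fields; the column types and the names `saveProducts`, `CREATE_PRODUCTS_SQL`, and `INSERT_PRODUCT_SQL` are assumptions. The database object is passed in, so the function can be called after every page while the connection is opened once and closed once.

```javascript
// Sketch: persist products with the sqlite3 package. The caller owns the connection:
//   const sqlite3 = require('sqlite3').verbose();
//   const db = new sqlite3.Database('products.db');
// Column types below are assumptions; adjust them to your needs.
const CREATE_PRODUCTS_SQL = `
  CREATE TABLE IF NOT EXISTS products (
    productPageUrl TEXT,
    thumbnailImage TEXT,
    title TEXT,
    price REAL,
    noOfReviews INTEGER,
    location TEXT
  )`;

const INSERT_PRODUCT_SQL = `
  INSERT INTO products (productPageUrl, thumbnailImage, title, price, noOfReviews, location)
  VALUES (?, ?, ?, ?, ?, ?)`;

function saveProducts(db, products) {
  return new Promise((resolve, reject) => {
    let firstError = null;
    const remember = (err) => {
      if (err && !firstError) firstError = err;
    };
    db.serialize(() => {
      // Inside serialize(), each statement waits for the previous one to finish.
      db.run(CREATE_PRODUCTS_SQL, remember);
      const stmt = db.prepare(INSERT_PRODUCT_SQL);
      for (const p of products) {
        // Parameterized values avoid quoting problems and SQL injection.
        stmt.run([p.productPageUrl, p.thumbnailImage, p.title, p.price, p.noOfReviews, p.location], remember);
      }
      // finalize() runs after the statement's queued inserts, so its callback marks completion.
      stmt.finalize((err) => {
        remember(err);
        if (firstError) reject(firstError);
        else resolve(products.length);
      });
    });
  });
}
```

If you save after each page, call `db.close()` once at the very end; closing the connection inside the per-page save function would make the next call fail.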

Additionally, here’s an example of how you can incorporate these data storage methods into your web scraping code:

```javascript
// ... (Previous code for web scraping including new functions for saving the scraped Data)
```

In this updated code, the saveToCsv function is called to save data to a CSV file, and the saveToDatabase function is called to store data in an SQLite database after each page is crawled. Saving as you go means the data from completed pages is already stored even if a later request fails.

Conclusion

Web crawling, a behind-the-scenes hero of the digital age, plays an indispensable role in the thriving world of e-commerce, tirelessly gathering the wealth of data that fuels this digital marketplace. E-commerce websites like Amazon, eBay, Daraz.pk, and others have revolutionized how we shop, offering a vast array of products and services at our fingertips. These platforms owe their success, in part, to web crawling, which helps keep product information, prices, and trends up to date.

The significance of web crawling in e-commerce cannot be overstated. It enables real-time price monitoring, competitive analysis, and inventory management for businesses. Researchers benefit from conducting market studies and gaining insights into consumer behavior. Armed with the right tools like the Crawlbase Crawling API and NodeJS, developers can craft powerful web crawlers to extract valuable data and build innovative solutions.

As we journeyed through this guide, we explored the importance of selecting a target e-commerce website, delving into the structure of Daraz.pk’s search pages. With a JavaScript crawling script powered by the Crawlbase Crawling API and data management strategies, you’re now equipped to navigate the e-commerce landscape and unlock its riches. Web crawling is your gateway to the world of e-commerce intelligence, where data-driven decisions and innovation await.

Frequently Asked Questions

Q. What is the difference between web crawling and web scraping?

Web Crawling is the process of systematically navigating websites and collecting data from multiple pages. It involves automated scripts, known as web crawlers or spiders, that follow links and index web pages.

On the other hand, Web Scraping is the extraction of specific data from web pages. It typically targets specific elements like product prices, names, or reviews. Web scraping is often one component of a web crawler: the crawler discovers pages, and the scraper extracts valuable information from them.

Q. Why is web crawling important for E-Commerce websites like Daraz.pk?

Web crawling is vital for e-commerce sites like Daraz.pk because it enables them to monitor prices, track product availability, and gather market data. This information is crucial for competitive pricing strategies, inventory management, and trend analysis. It also helps businesses stay up-to-date with changing market conditions.

Q. How can I get started with web crawling using the Crawlbase Crawling API and NodeJS?

To begin web crawling with Crawlbase and NodeJS, follow these steps:

- Sign up for a Crawlbase account and obtain an API token.

- Set up your development environment with NodeJS and necessary libraries like Cheerio and SQLite3.

- Write a JavaScript crawling script that uses the Crawlbase Crawling API to fetch web pages, extract data using Cheerio, and handle pagination.

- Store your scraped data efficiently as CSV files or in an SQLite database.

Q. What are the benefits of using the Crawlbase Crawling API?

The Crawlbase Crawling API offers several advantages, including:

- Data Accuracy and Consistency: It ensures accurate and consistent data collection.

- Scalability: It can handle projects of all sizes, from small crawls to large-scale operations.

- Real-Time Data: It provides access to up-to-date information critical for e-commerce.

- Proxy Management: It efficiently handles proxies and IP rotation to circumvent anti-scraping defenses.

- Convenience: It eliminates the need to build custom scrapers and handles technical intricacies.

- Cost-Efficiency: It offers a budget-friendly alternative to in-house scraping solutions, with pay-as-you-go pricing.