Identify your target web page, inspect the full HTML, locate the data you need, extract it with parsing tools, manage your proxies by hand, and hope you don’t get blocked for doing it repeatedly. It’s a tedious process, but that’s what web scraping looked like before API-based scraping came along.

Today, services like Crawlbase make the whole process much easier. They let you skip the complicated steps and focus on what actually matters: getting the data you need.

This article explains the key differences between traditional and API-based scraping and shows you how to get started with a more efficient approach to web data extraction through Crawlbase.

Table of Contents

- IP Management and CAPTCHA Handling

- Built-in Data Scrapers

- Efficient and Reliable

- Fast Integration and Scalability

The Limitations of Traditional Scrapers

Building your web scraper from scratch is easier said than done. For starters, you need a solid understanding of how HTML works. You have to inspect the page’s structure, figure out which tags, such as <div>, <span>, or <a>, hold the data you’re after, and know exactly how to extract it. And that’s just the beginning. Several other challenges come with traditional scraping:
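To make the inspection step concrete, here is a minimal sketch that pulls text out of specific tags using Python’s built-in html.parser module. The sample HTML, the product-title class name, and the TitleExtractor class are all made up for illustration; a real page needs logic matched to its own markup.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collect the text of every <span class="product-title"> element."""

    def __init__(self):
        super().__init__()
        self._inside_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs from the opening tag.
        if tag == "span" and dict(attrs).get("class") == "product-title":
            self._inside_title = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._inside_title = False

    def handle_data(self, data):
        if self._inside_title and data.strip():
            self.titles.append(data.strip())


# Hypothetical markup standing in for a page you inspected by hand.
SAMPLE_HTML = """
<div class="item"><span class="product-title">Blue Mug</span><a href="/mug">View</a></div>
<div class="item"><span class="product-title">Red Kettle</span><a href="/kettle">View</a></div>
"""

parser = TitleExtractor()
parser.feed(SAMPLE_HTML)
print(parser.titles)
```

If the site renames the class or moves the title into a different tag, this extractor silently returns an empty list, which is the kind of breakage that makes hand-written scrapers costly to maintain.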

Handling JavaScript-Rendered Pages

Solving this on your own takes a lot of effort. You’ll likely need tools like Selenium or Playwright to run a headless browser, since the data you’re after doesn’t always appear in the page’s initial HTML. It’s often generated dynamically after the page loads. If you rely on a simple GET request, your scraper will probably get back HTML that doesn’t contain the data you want.

IP Bans and Rate Limiting

This is one of the biggest challenges in traditional scraping, as it’s how websites detect and block automated crawling and scraping activities. Bypassing these defenses often means writing custom code to rotate proxies or IP addresses, and adding logic to mimic human-like browsing behavior. All of this requires advanced coding skills and adds a lot of complexity to your scraper.
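As a rough idea of what that custom code involves, here is a minimal sketch of round-robin proxy rotation with retries. The proxy addresses are placeholders, the function names are hypothetical, and the session argument is assumed to be a requests.Session() (or anything with a compatible get method).

```python
import itertools

# Placeholder proxy addresses (hypothetical); replace with proxies you control.
PROXY_POOL = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
    "http://proxy-c.example.com:8080",
]


def proxy_configs(pool):
    """Yield requests-style proxy mappings, cycling through the pool forever."""
    for proxy in itertools.cycle(pool):
        yield {"http": proxy, "https": proxy}


def fetch_with_rotation(session, url, pool, max_attempts=3):
    """Try the URL through successive proxies until one returns HTTP 200.

    Returns the response text, or None if every attempt fails.
    """
    configs = proxy_configs(pool)
    for _ in range(max_attempts):
        proxies = next(configs)
        try:
            response = session.get(url, proxies=proxies, timeout=10)
        except OSError:
            # requests' exceptions derive from OSError, so a dead proxy
            # or a timeout lands here and we move on to the next proxy.
            continue
        if response.status_code == 200:
            return response.text
        # Any other status (for example 403 or 429) is treated as a block.
    return None
```

Even this small version leaves out proxy health checks, backoff delays, and browser-like headers, all of which a production scraper would have to add and maintain.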

Maintenance Cost

Traditional scrapers will almost always cost you more, not just in money, but also in development time and effort. Manually coded scrapers tend to break often and need constant updates. Managing healthy IPs or rotating proxies adds even more maintenance overhead. Failed scrapes or incomplete data also lead to wasted computing resources. Most of these problems are avoidable when you use modern, well-supported APIs.

Lack of Scalability

With all of the above issues combined, it is no surprise that scaling a traditional scraper is a huge problem. The high costs and low reliability make it a poor choice, especially if you’re aiming to scale your project for larger companies. If growth and efficiency matter, sticking with traditional scraping doesn’t make sense, especially now that API-based tools like Crawlbase exist.

Traditional Scraping Examples

This method is fairly straightforward. In this example, we’ll use Python’s requests library to demonstrate the most basic form of crawling and scraping a website.

Set Up the Coding Environment

- Install Python 3 on your computer

- Open your terminal and run

    python -m pip install requests

Basic (non-JavaScript) page

    import requests

Save the code above in a file named basic_page.py, then run it from the command line:

    python basic_page.py

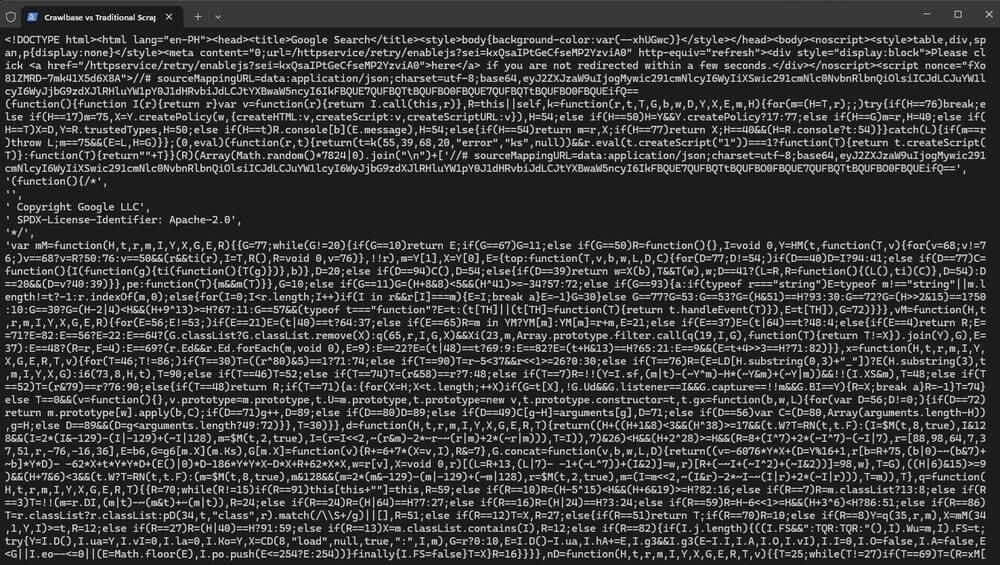

Output:

As you can see from the output, this method returns the raw HTML of the page. While it works for basic or static pages, it falls short when dealing with modern websites that rely heavily on JavaScript to render content, which you will see in the next example.
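The basic fetch described above can be sketched as a small reusable helper. This is a minimal sketch rather than the exact script from this example: the fetch_html name, the User-Agent value, and the example.com URL are illustrative assumptions, and the session argument is expected to be a requests.Session().

```python
def fetch_html(session, url, timeout=10):
    """Return the raw HTML of a page using a plain GET request.

    `session` is expected to behave like requests.Session().
    """
    response = session.get(
        url,
        headers={"User-Agent": "basic-page-example/0.1"},  # illustrative value
        timeout=timeout,
    )
    # Raise an exception for 4xx/5xx responses instead of returning an error page.
    response.raise_for_status()
    return response.text


# Usage (requires `python -m pip install requests`):
#
#   import requests
#   print(fetch_html(requests.Session(), "https://example.com"))
```

Passing the session in keeps connection reuse under your control and makes the helper easy to test without touching the network.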

JavaScript page

    import requests

Save the code above in a file named javascript_page.py, then run it from the command line:

    python javascript_page.py

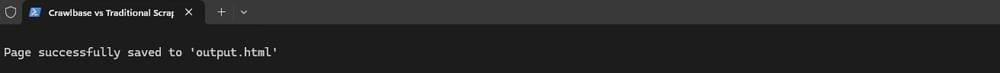

Here is the terminal console output:

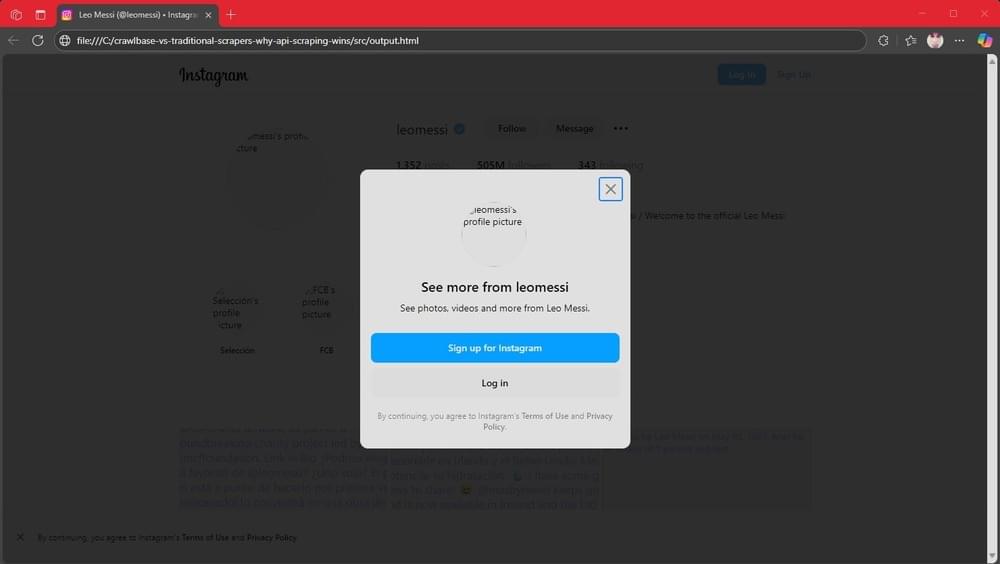

And when you open the file output.html in a browser:

The browser renders a blank Instagram page because the JavaScript responsible for loading the content was not executed during the crawling process.
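One rough way to confirm this without opening a browser is to measure how much human-readable text the fetched HTML actually contains. The sketch below uses Python’s built-in html.parser module. The looks_like_js_shell helper, its 50-character threshold, and both sample documents are illustrative assumptions, not a reliable detector.

```python
from html.parser import HTMLParser


class VisibleTextCounter(HTMLParser):
    """Count characters of text that sit outside <script> and <style> tags."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.visible_chars = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.visible_chars += len(data.strip())


def looks_like_js_shell(html, min_visible_chars=50):
    """Heuristic: True when the HTML carries almost no text of its own."""
    counter = VisibleTextCounter()
    counter.feed(html)
    return counter.visible_chars < min_visible_chars


# Made-up samples: an app shell that needs JavaScript, and a static page.
JS_SHELL = (
    "<html><head><title>App</title>"
    "<script>window.__data = {};</script></head>"
    '<body><div id="root"></div></body></html>'
)
STATIC_PAGE = (
    "<html><body><h1>Quarterly report</h1>"
    "<p>Revenue grew by twelve percent compared with the previous quarter.</p>"
    "</body></html>"
)

print(looks_like_js_shell(JS_SHELL), looks_like_js_shell(STATIC_PAGE))
```

A check like this can tell you early that a plain GET request is not enough for a given site, before you spend time writing extraction logic against an empty page.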

In such cases, you’d need to implement additional tools or switch to more advanced solutions, like using a headless browser or, better yet, an API-based scraper to save time and effort.

Key Benefits of API-Based Scraping

In the context of scraping, “API-based” means collecting data by making requests to official endpoints provided by a website or service. This makes the entire process faster, more reliable, and far less complicated.

While official APIs like the GitHub API are a good alternative to traditional scraping, Crawlbase offers an even more powerful solution. Its generalized approach allows you to scrape almost any publicly available website, and it can also be used alongside official APIs to significantly enhance your scraping workflow. Here are some key advantages:

IP Management and CAPTCHA Handling

Crawlbase provides an API that acts as middleware to simplify web scraping. Instead of relying on each site’s official API, it handles complex tasks such as IP rotation, bot detection, and CAPTCHA solving for you. The API uses massive IP pools, AI-based browsing behavior, and built-in automation features to avoid bans and blocks. You simply send a target URL to the endpoint and receive accurate data. There’s no need to manage proxies, avoid CAPTCHAs, or simulate browser behavior manually.

Built-in Data Scrapers

Crawlbase doesn’t just provide the complete HTML code of your target page; it can also deliver clean, structured data, eliminating the need to constantly adjust your code every time a website changes something on its side.

It has built-in scrapers for major platforms like Facebook, Instagram, Amazon, eBay, and many others. This saves developers a ton of time and hassle, letting them focus on using the data rather than figuring out how to extract it.

Efficient and Reliable

Whether you’re planning to crawl small or large volumes of data, reliability and speed are key factors in deciding which approach to use for your project. Crawlbase is known for having one of the most stable and reliable services in the market. A quick look at the Crawlbase status page shows almost 100% uptime for its API.

Fast Integration and Scalability

With a single API endpoint, you can access Crawlbase’s main product, the Crawling API, for scraping and data extraction. Any programming language that supports HTTP or HTTPS requests can work with this API, making it easy to use across different platforms. To simplify integration even further, Crawlbase also offers free libraries and SDKs for various languages. Using this API as the foundation for your scraper is a big reason why scaling your projects becomes much simpler.

Crawlbase API-Based Approach

You can spend time learning headless browsers, managing proxies, and parsing HTML, or you can skip all that complexity and use the Crawling API instead. Here’s how easy it is to get started:

Signup and Quickstart Guide

- Obtaining API Credentials

- Create a Crawlbase account and log in.

- After signing up, you will receive 1,000 free requests.

- Locate and copy your Crawling API Normal and JavaScript requests tokens.

Crawling API (Basic page)

    import requests

Note:

- Make sure to replace Normal_requests_token with your actual token.

- The "scraper": "google-serp" parameter is optional. Remove it if you wish to get the complete HTML response.
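A request like the one described in the note can be sketched as follows. Treat the details as assumptions: the https://api.crawlbase.com/ endpoint and the token and url parameter names are taken from Crawlbase’s public documentation rather than from this article, the Google search URL is only an example target, and build_crawling_api_url and crawl are hypothetical helper names.

```python
from urllib.parse import urlencode

# Assumption: the Crawling API endpoint as given in Crawlbase's public docs.
CRAWLING_API_ENDPOINT = "https://api.crawlbase.com/"


def build_crawling_api_url(token, target_url, **options):
    """Build a Crawling API request URL with the target URL percent-encoded."""
    params = {"token": token, "url": target_url, **options}
    return f"{CRAWLING_API_ENDPOINT}?{urlencode(params)}"


def crawl(session, token, target_url, **options):
    """Send the request through a requests.Session-like object; return the body."""
    api_url = build_crawling_api_url(token, target_url, **options)
    response = session.get(api_url, timeout=90)  # generous timeout for slow crawls
    response.raise_for_status()
    return response.text


api_url = build_crawling_api_url(
    "Normal_requests_token",  # placeholder; replace with your actual token
    "https://www.google.com/search?q=web+scraping",  # illustrative target
    scraper="google-serp",  # optional; omit it to get the complete HTML
)
print(api_url)
```

The target URL has to be percent-encoded because it travels as a query parameter of another URL; urlencode takes care of that here, and requests does the same when you pass a params dictionary.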

Save the script as basic_page_using_crawling_api.py, then run it from the command line using:

    python basic_page_using_crawling_api.py

Response

    {

Crawling API (JavaScript page)

    import json

As with the previous example, save this code to a file and run it from your terminal.

Once it runs successfully, you should see output similar to this:

When you open output.html, you’ll see that the page is no longer blank, as the Crawling API runs your request through a headless browser infrastructure.
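The JavaScript-token request described above can be sketched like this. It is a minimal sketch under a few assumptions: the endpoint URL and the token and url parameter names come from Crawlbase’s public documentation rather than from this article, crawl_js_page_to_file is a hypothetical helper name, and the session argument is expected to be a requests.Session().

```python
from pathlib import Path

# Assumption: the Crawling API endpoint as given in Crawlbase's public docs.
CRAWLING_API_ENDPOINT = "https://api.crawlbase.com/"


def crawl_js_page_to_file(session, js_token, target_url, output_path="output.html"):
    """Fetch a JavaScript-rendered page via the Crawling API and save the HTML.

    Returns the number of characters written.
    """
    response = session.get(
        CRAWLING_API_ENDPOINT,
        # requests percent-encodes these query parameters automatically.
        params={"token": js_token, "url": target_url},
        timeout=90,  # rendered pages can take a while to come back
    )
    response.raise_for_status()
    Path(output_path).write_text(response.text, encoding="utf-8")
    return len(response.text)


# Usage (requires `python -m pip install requests`):
#
#   import requests
#   crawl_js_page_to_file(
#       requests.Session(),
#       "JavaScript_requests_token",  # placeholder; use your JavaScript token
#       "https://www.instagram.com/<profile>/",  # placeholder target
#   )
```

The only change from a normal request is the token: the JavaScript token tells the service to render the page in a browser before returning it.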

If you want clean, structured JSON response data that’s ready to use, simply add the "scraper": "instagram-profile" parameter to your request. This tells Crawlbase to automatically parse the Instagram profile page and return only the relevant data, saving you the effort of manually parsing the whole HTML page.

    {

You can also visit Crawlbase’s GitHub repository to download the complete example code used in this guide.

Why is API-based Scraping Preferred Over Traditional Web Scraping?

As you can see in our demonstration above, using an API-based solution like Crawlbase’s Crawling API offers clear advantages over traditional scraping methods when it comes to collecting data from websites. Let’s take a closer look at why it’s a winning choice for both developers and businesses.

Reduced Dev Time and Costs

Instead of spending time developing a scraper that constantly needs updates whenever a website changes its HTML, handling JavaScript pages, or maintaining proxies to avoid getting blocked, you can simply use the Crawling API. Traditional scraping comes with too many time-consuming challenges. By letting Crawlbase take care of the heavy lifting, you’ll lower your overall project costs and reduce the need for extra manpower.

Scalable Infrastructure

Crawlbase products are built with scalability in mind. From simple HTTP/HTTPS requests to ready-to-use libraries and SDKs for various programming languages, integration is quick and easy.

The Crawling API is designed to scale with your needs. Crawlbase uses a pay-as-you-go payment model, giving you the flexibility to use as much or as little as you need each month. You’re not locked into a subscription, and you only pay for what you use, making it ideal for projects of any size.

Higher Success Rate

Crawlbase is built to maximize success rates with features like healthy IP pools, AI-powered logic to avoid CAPTCHAs, and a well-maintained proxy network. A higher success rate means faster data collection and lower operational costs. Even in the rare case of a failed request, Crawlbase doesn’t charge you, making it a highly cost-effective solution for web scraping.

Give Crawlbase a try today and see how much faster and more efficient web scraping can be. Sign up for a free account to receive your 1,000 free API requests!

Frequently Asked Questions (FAQs)

Q: Why should I switch to an API-based solution like Crawlbase?

A: Traditional scraping is slow, complex, and hard to scale. Crawlbase handles IP rotation, JavaScript rendering, and CAPTCHA avoidance, so you get reliable data faster with less code and maintenance. Even if there’s an upfront cost, the overall expense is usually lower than building and maintaining your own scrapers.

Q: What are the limitations of Crawlbase?

A: Crawlbase is designed for flexibility and scalability, but like any API-based platform, it has certain operational limits depending on the crawling method being used. Below is a breakdown of the default limits:

Crawling API (Synchronous)

- Bandwidth per Request: Unlimited

- Rate Limit:

- 20 requests per second for most websites

- 1 request per second for Google domains

- 5 requests per second for LinkedIn (Async Mode)

Note: Rate limits can be increased upon request. If you’re unsure which product suits your use case or want to request higher limits, the Crawlbase customer support is available to help tailor the setup for your project.
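If you want your own client to stay under these defaults, a simple throttle is one option. The sketch below covers only the 20 and 1 requests-per-second figures listed above (the LinkedIn async limit is left out). The Throttle class, the bucket names, and the way Google hosts are detected are illustrative assumptions.

```python
import time
from urllib.parse import urlparse

# Default limits quoted above, in requests per second.
LIMITS = {"google": 1, "default": 20}


def bucket_for(target_url):
    """Pick the rate-limit bucket for a target URL."""
    host = urlparse(target_url).hostname or ""
    # Simplification: any hostname with a "google" label counts as Google.
    return "google" if "google" in host.split(".") else "default"


class Throttle:
    """Block just long enough to keep each bucket at or below its limit."""

    def __init__(self, clock=time.monotonic, sleep=time.sleep):
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = {}  # bucket name -> earliest time of next request

    def wait(self, target_url):
        bucket = bucket_for(target_url)
        now = self._clock()
        ready_at = self._next_allowed.get(bucket, now)
        if ready_at > now:
            self._sleep(ready_at - now)
            now = ready_at
        self._next_allowed[bucket] = now + 1 / LIMITS[bucket]


# Usage: call throttle.wait(url) right before each Crawling API request.
throttle = Throttle()
```

The clock and sleep functions are injectable so the throttle can be tested without real delays. If Crawlbase raises your limits, only the numbers in LIMITS need to change.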

Q: What are the main differences between web scraping and API-based data collection?

A: API-based data collection uses a structured and authorized interface provided by the data source to get information in a clean, predictable format like JSON or XML.

Key differences:

- Structure: APIs return structured data, while scraping requires parsing raw HTML.

- Reliability: APIs are more stable and less likely to break due to design changes; scrapers can break whenever a site’s layout or code is updated.

- Access: APIs require authentication and have usage limits; scraping can access any publicly visible content (though it may raise ethical or legal issues).

- Speed and Efficiency: API calls are generally faster and more efficient, especially for large-scale data collection.

- Compliance: API usage is governed by clear terms of service; scraping may violate a site’s policies if not done correctly.

An API is usually the preferred method when available, but scraping is useful when APIs are limited, unavailable, or too restrictive.