Creating a web crawler is a smart way of retrieving useful information available online. With a web crawler, you can scan the Internet, browse through individual websites, and analyze and extract their content.

The Java programming language provides a simple way of building a web crawler and harvesting data from websites. You can use the extracted data for various use cases, such as for analytical purposes, providing a service that uses third-party data, or generating statistical data.

In this article, we’ll walk you through the process of building a web crawler using Java and Crawlbase.

What you’ll need

Typically, crawling web data involves creating a script that sends a request to the targeted web page, accesses its underlying HTML code, and scrapes the required information.

To accomplish that objective, you’ll need the following:

- Java 11 development environment

- Crawlbase

Before we develop the crawling logic, let’s look at why Crawlbase is useful for web crawling.

Why use Crawlbase for Crawling

Crawlbase is a powerful data crawling and scraping tool you can use to harvest information from websites fast and easily.

Here are some reasons why you should use it for crawling online data:

Easy to use: It comes with a simple API that you can set up quickly. With just a few lines of code, you can start using the API to crawl websites and retrieve their content.

Supports advanced crawling: Crawlbase allows you to perform advanced web crawling and scrape data from complicated websites. Since it supports JavaScript rendering, Crawlbase lets you extract data from dynamic websites. It offers a headless browser that allows you to extract what real users see in their web browsers, even if a site is built with modern frameworks like Angular or React.js.

Bypass crawling obstacles: Crawlbase can handle the restrictions often associated with crawling online data. It has an extensive network of proxies as well as more than 17 data centers around the world. You can use it to avoid access restrictions, bypass CAPTCHAs, and evade other anti-scraping measures implemented by web applications. What’s more, you can crawl websites while remaining anonymous, so you won’t have to worry about exposing your identity.

Free trial account: You can test how Crawlbase works without giving out your payment details. The free account comes with 1,000 credits for trying out the tool’s capabilities.

How Crawlbase Works

Crawlbase provides the Crawling API for crawling and scraping data from websites. You can easily integrate the API in your Java development project and retrieve information from web pages smoothly.

Each request made to the Crawling API starts with the following base part:

https://api.crawlbase.com

Also, you’ll need to add the following mandatory parameters to the API:

- Authentication token

- URL

The authentication token is a unique token that authorizes you to use the Crawling API. Once you sign up for an account, Crawlbase will give you two types of tokens:

- Normal token: This is for making generic crawling requests.

- JavaScript token: This is for crawling dynamic websites. It provides you with headless browser capabilities for crawling web pages rendered using JavaScript. As pointed out earlier, it’s a useful way of crawling advanced websites.

Here is how to add the authentication token to your API request:

https://api.crawlbase.com/?token=INSERT_TOKEN

The second mandatory parameter is the URL to crawl. It should start with http:// or https:// and be fully URL-encoded. Encoding replaces reserved characters such as ":", "/", "?", and "&" with percent escapes, so the target URL can be passed safely as a query parameter.

Here is how to insert the URL to your API request:

https://api.crawlbase.com/?token=INSERT_TOKEN&url=INSERT_URL

If you send this request, for example with cURL in your terminal or by pasting it into a browser’s address bar, the API returns the entire HTML source code of the targeted web page.

It’s that easy and simple!

If you want to perform advanced crawling, you may add other parameters to the API request. For example, when using the JavaScript token, you can add the page_wait parameter to instruct the browser to wait for the specified number of milliseconds before the resulting HTML code is captured.

Here is an example:

https://api.crawlbase.com/?token=INSERT_TOKEN&page_wait=1000&url=INSERT_URL
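The URL formats above can also be assembled in code. The following is a minimal sketch rather than an official Crawlbase client: the class and method names (CrawlbaseUrl, build) are made up for illustration, INSERT_TOKEN is a placeholder, and treating a wait of 0 as "no page_wait" is a convention of this sketch only.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper (not part of any Crawlbase SDK) that assembles a Crawling API URL.
final class CrawlbaseUrl {
    private static final String BASE = "https://api.crawlbase.com/";

    // pageWaitMillis <= 0 means "leave out page_wait" (a convention of this sketch only).
    static String build(String token, String targetUrl, int pageWaitMillis) {
        StringBuilder sb = new StringBuilder(BASE).append("?token=").append(token);
        if (pageWaitMillis > 0) {
            sb.append("&page_wait=").append(pageWaitMillis);
        }
        // Encode the target URL so its own "?" and "&" do not clash with the API's parameters.
        sb.append("&url=").append(URLEncoder.encode(targetUrl, StandardCharsets.UTF_8));
        return sb.toString();
    }

    public static void main(String[] args) {
        // example.com stands in for the page you actually want to crawl.
        System.out.println(build("INSERT_TOKEN", "https://example.com/search?q=java", 1000));
    }
}
```

Keeping the encoding in one place makes it harder to forget, which matters because an unencoded target URL with its own query string would be misread as extra API parameters.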

Building a Web Crawler in Java and Crawlbase

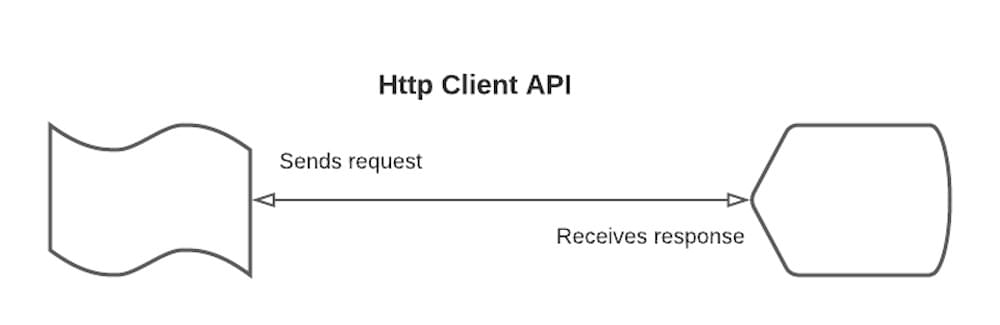

In this Java web crawling tutorial, we’ll use the HttpClient API to create the crawling logic. The API was introduced in Java 11, and it comes with lots of useful features for sending requests and retrieving their responses.

The HttpClient API supports both HTTP/1.1 and HTTP/2. By default, it uses the HTTP/2 protocol to send requests. If a request is sent to a server that does not support HTTP/2, it is automatically downgraded to HTTP/1.1.

Furthermore, requests can be sent synchronously or asynchronously, request and response bodies are handled as reactive streams, and the API follows the common builder pattern.

The API consists of three core classes:

- HttpRequest

- HttpClient

- HttpResponse

Let’s talk about each of them in more detail.

1. HttpRequest

The HttpRequest, as the name implies, is an object encapsulating the HTTP request to be sent. To create a new instance, call HttpRequest.newBuilder() to obtain a Builder, configure it, and then call build(). Once built, the request is immutable and can be sent multiple times.

The Builder class comes with different methods for configuring the request.

These are the most common methods:

- URI method

- Request method

- Protocol version method

- Timeout method

Let’s talk about each of them in more detail.

a) URI method

The first thing to do when configuring the request is to set the URL to crawl. We can do so by calling the uri() method on the Builder instance. We’ll also use the URI.create() method to create the URI by parsing the string of the URL we intend to crawl.

Here is the code:

String url =

Notice that we built the URL string using Crawlbase’s settings. The url parameter holds the web page whose contents we intend to scrape.

We also encoded the URL using the Java URLEncoder class. As mentioned earlier, Crawlbase requires URLs to be encoded.
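As a minimal sketch of this step, the snippet below encodes a target page, appends it to the Crawling API URL, and hands the result to uri(). The class name, the INSERT_TOKEN placeholder, and the example.com target are assumptions made for illustration.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

final class UriMethodSketch {
    // Placeholder inputs: pass a real token and the page you want to crawl.
    static HttpRequest.Builder builderFor(String token, String targetUrl) {
        String url = "https://api.crawlbase.com/?token=" + token
                + "&url=" + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8);
        // URI.create() parses the string; uri() stores the result on the builder.
        return HttpRequest.newBuilder().uri(URI.create(url));
    }

    public static void main(String[] args) {
        HttpRequest request = builderFor("INSERT_TOKEN", "https://example.com/").build();
        System.out.println(request.uri());
    }
}
```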

b) Request method

The next thing to do is to specify the HTTP method to be used for making the request. We can call any of the following methods on the Builder (POST() and PUT() also take a BodyPublisher argument that supplies the request body):

- GET()

- POST()

- PUT()

- DELETE()

In this case, since we want to request data from the target web page, we’ll use the GET() method.

Here is the code:

HttpRequest request = HttpRequest.newBuilder()
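A minimal sketch of a GET request built this way might look as follows. The class name and the sample API URL (placeholder token, example.com as the already-encoded target) are assumptions for illustration.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class RequestMethodSketch {
    static HttpRequest buildGet(String apiUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(apiUrl))
                .GET() // GET is also what the builder uses when no method is set
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildGet(
                "https://api.crawlbase.com/?token=INSERT_TOKEN&url=https%3A%2F%2Fexample.com%2F");
        System.out.println(request.method() + " " + request.uri());
    }
}
```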

So far, HttpRequest has all the parameters that should be passed to HttpClient. However, you may need to include other parameters, such as the HTTP protocol version and timeout.

Let’s see how you can add the additional parameters.

c) Protocol version method

As mentioned earlier, the HttpClient API uses the HTTP/2 protocol by default. Nonetheless, you can specify the HTTP version you prefer for an individual request.

Here is the code:

HttpRequest request = HttpRequest.newBuilder()
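Here is a minimal sketch of setting the version on a single request. Choosing HTTP_2 here is only an example, and the class name and sample URL are assumptions.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;

final class ProtocolVersionSketch {
    static HttpRequest build(String apiUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(apiUrl))
                .version(HttpClient.Version.HTTP_2) // preferred version for this request only
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build(
                "https://api.crawlbase.com/?token=INSERT_TOKEN&url=https%3A%2F%2Fexample.com%2F");
        // version() returns an Optional because a request is not required to set one.
        System.out.println(request.version());
    }
}
```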

d) Timeout method

You can set the maximum amount of time to wait for a response. If the response does not arrive within that period, an HttpTimeoutException is thrown. By default, no timeout is set, so the request waits indefinitely.

You can define the timeout by calling the timeout() method on the builder instance and passing a Duration object that specifies how long to wait.

Here is the code:

HttpRequest request = HttpRequest.newBuilder()
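As a minimal sketch, the request below gives up after 20 seconds. The 20-second value, the class name, and the sample URL are assumptions chosen for illustration.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

final class TimeoutSketch {
    static HttpRequest build(String apiUrl) {
        return HttpRequest.newBuilder()
                .uri(URI.create(apiUrl))
                .timeout(Duration.ofSeconds(20)) // illustrative value; must be positive
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build(
                "https://api.crawlbase.com/?token=INSERT_TOKEN&url=https%3A%2F%2Fexample.com%2F");
        // timeout() returns an Optional that is empty when no timeout was configured.
        System.out.println(request.timeout());
    }
}
```

Pages rendered with the JavaScript token and a page_wait delay take longer to return, so the timeout should be comfortably larger than the wait you request.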

2. HttpClient

The HttpClient class is the main entry point of the API—it acts as a container for the configuration details shared among multiple requests. It is the HTTP client used for sending requests and receiving responses.

You can call either the HttpClient.newBuilder() or the HttpClient.newHttpClient() method to instantiate it. After an instance of the HttpClient has been created, it’s immutable.

The HttpClient class offers several helpful and self-describing methods you can use when working with requests and responses.

These are some things you can do:

- Set protocol version

- Set redirect policy

- Send synchronous and asynchronous requests

Let’s talk about each of them in more detail.

a) Set protocol version

As mentioned earlier, the HttpClient class uses the HTTP/2 protocol by default. However, you can set your preferred protocol version, either HTTP/1.1 or HTTP/2.

Here is an example:

HttpClient client = HttpClient.newBuilder()
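A minimal sketch of a client that prefers HTTP/1.1 for every request it sends could look like this. Picking HTTP_1_1 is just an example, and the class name is an assumption.

```java
import java.net.http.HttpClient;

final class ClientVersionSketch {
    static HttpClient build() {
        return HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1) // applies to requests that do not set their own version
                .build();
    }

    public static void main(String[] args) {
        System.out.println(build().version());
    }
}
```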

b) Set redirect policy

If the targeted web page has moved to a different address, you’ll get a 3xx HTTP status code. The new address is usually provided in the response’s Location header, so with a suitable redirect policy HttpClient can forward the request to the new location automatically. By default, HttpClient does not follow redirects.

You can set it by using the followRedirects() method on the Builder instance.

Here is an example:

HttpClient client = HttpClient.newBuilder()
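As a minimal sketch, the client below uses Redirect.NORMAL, which follows redirects except those going from HTTPS to HTTP. Whether NORMAL or ALWAYS suits your crawler is a judgment call, and the class name is an assumption.

```java
import java.net.http.HttpClient;

final class RedirectPolicySketch {
    static HttpClient build() {
        return HttpClient.newBuilder()
                .followRedirects(HttpClient.Redirect.NORMAL) // follow 3xx, but never downgrade HTTPS to HTTP
                .build();
    }

    public static void main(String[] args) {
        System.out.println("Configured: " + build().followRedirects());
        System.out.println("Default:    " + HttpClient.newHttpClient().followRedirects());
    }
}
```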

c) Send synchronous and asynchronous requests

HttpClient supports two ways of sending requests:

- Synchronously by using the send() method. This blocks the calling thread until the response is received, and only then continues with the rest of the execution.

Here is an example:

HttpResponse<String> response = client.send(request,

Note that we used BodyHandlers and called the ofString() method to return the HTML response as a string.
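A minimal sketch of a blocking call might look like the following. The class and method names and the sample URL are assumptions, and running main() needs network access and a real token in place of INSERT_TOKEN.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class SyncSendSketch {
    static HttpRequest requestFor(String apiUrl) {
        return HttpRequest.newBuilder().uri(URI.create(apiUrl)).GET().build();
    }

    // send() blocks the calling thread until the whole body has been read into a String.
    static HttpResponse<String> fetch(HttpClient client, HttpRequest request)
            throws IOException, InterruptedException {
        return client.send(request, HttpResponse.BodyHandlers.ofString());
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpRequest request = requestFor(
                "https://api.crawlbase.com/?token=INSERT_TOKEN&url=https%3A%2F%2Fexample.com%2F");
        HttpResponse<String> response = fetch(HttpClient.newHttpClient(), request);
        System.out.println(response.statusCode());
    }
}
```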

- Asynchronously by using the sendAsync() method. This is non-blocking: it does not wait for the response to be received. Once the sendAsync() method is called, it returns immediately with a CompletableFuture<HttpResponse<T>>, which completes once the response is received. The returned CompletableFuture can be chained or combined with others to define dependencies among asynchronous tasks.

Here is an example:

CompletableFuture<HttpResponse<String>> response = HttpClient.newBuilder()
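As a minimal sketch of the non-blocking variant, the method below returns a future for the page body and chains thenApply() onto it. The class and method names and the sample URL are assumptions, and main() needs network access and a real token.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.concurrent.CompletableFuture;

final class AsyncSendSketch {
    static HttpRequest requestFor(String apiUrl) {
        return HttpRequest.newBuilder().uri(URI.create(apiUrl)).GET().build();
    }

    // sendAsync() returns at once; thenApply() runs when the response has arrived.
    static CompletableFuture<String> fetchBody(HttpClient client, HttpRequest request) {
        return client.sendAsync(request, HttpResponse.BodyHandlers.ofString())
                .thenApply(HttpResponse::body);
    }

    public static void main(String[] args) {
        HttpRequest request = requestFor(
                "https://api.crawlbase.com/?token=INSERT_TOKEN&url=https%3A%2F%2Fexample.com%2F");
        CompletableFuture<String> body = fetchBody(HttpClient.newHttpClient(), request);
        // join() is used here only so the JVM does not exit before the response arrives.
        System.out.println(body.join().length() + " characters received");
    }
}
```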

3. HttpResponse

The HttpResponse, as the name implies, represents the response received after sending an HttpRequest. HttpResponse offers different helpful methods for handling the received response.

These are the most important methods:

- statusCode(): This method returns the status code of the response as an int.

- body(): This method returns the body of the response. The return type depends on the BodyHandler passed to the send() method.

Here is an example:

// Handling the response body as a String

// Handling the response body as a file
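The two cases can be sketched as follows, assuming the client and request are built as in the earlier sections. The class and method names, the isSuccess() helper, and the choice to return an empty string for non-2xx responses are assumptions of this sketch, not something the API prescribes.

```java
import java.io.IOException;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Path;

final class ResponseBodySketch {
    // Hypothetical helper: treats any 2xx status code as success.
    static boolean isSuccess(int statusCode) {
        return statusCode >= 200 && statusCode < 300;
    }

    // BodyHandlers.ofString() makes body() return the HTML as a String.
    static String bodyAsString(HttpClient client, HttpRequest request)
            throws IOException, InterruptedException {
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return isSuccess(response.statusCode()) ? response.body() : "";
    }

    // BodyHandlers.ofFile() streams the body to disk; body() then returns the file's Path.
    static Path bodyAsFile(HttpClient client, HttpRequest request, Path target)
            throws IOException, InterruptedException {
        HttpResponse<Path> response = client.send(request, HttpResponse.BodyHandlers.ofFile(target));
        return response.body();
    }
}
```

Writing to a file is the better fit for large pages, since the body never has to be held in memory as a single String.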

Synchronous Example

Here is an example that uses the HttpClient synchronous method to crawl a web page and output its content:

package javaHttpClient;
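A complete synchronous crawler along these lines could be sketched as below. It is an illustration under stated assumptions, not the exact program from this tutorial: the class name, the placeholder token, the example.com target, the 30-second timeout, and the preview() helper that shortens the printed HTML are all choices made for this sketch. The package declaration is left out so the file can be compiled on its own.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

final class SyncCrawler {
    static final String TOKEN = "INSERT_TOKEN";          // placeholder: use your own token
    static final String TARGET = "https://example.com/"; // placeholder target page

    static HttpRequest buildRequest(String token, String targetUrl) {
        String url = "https://api.crawlbase.com/?token=" + token
                + "&url=" + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .timeout(Duration.ofSeconds(30)) // illustrative value
                .GET()
                .build();
    }

    // Hypothetical helper: keeps console output short by cutting the HTML after maxChars.
    static String preview(String body, int maxChars) {
        return body.length() <= maxChars ? body : body.substring(0, maxChars) + "...";
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .followRedirects(HttpClient.Redirect.NORMAL)
                .build();
        // Blocks until the Crawling API has returned the page.
        HttpResponse<String> response =
                client.send(buildRequest(TOKEN, TARGET), HttpResponse.BodyHandlers.ofString());
        System.out.println("Status: " + response.statusCode());
        System.out.println(preview(response.body(), 500));
    }
}
```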

Here is the output (for brevity, it’s truncated):

Asynchronous Example

When using the HttpClient asynchronous method to crawl a web page, the sendAsync() method is called, instead of send().

Here is an example:

package javaHttpClient;
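An asynchronous crawler could be sketched in the same spirit. Again this is an illustration rather than the tutorial's exact program: the class name, the placeholder token, the example.com target, the 30-second timeout, and the decision to turn failures into a message with exceptionally() are assumptions of this sketch. The package declaration is omitted so the file compiles on its own.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.concurrent.CompletableFuture;

final class AsyncCrawler {
    static HttpRequest buildRequest(String token, String targetUrl) {
        String url = "https://api.crawlbase.com/?token=" + token
                + "&url=" + URLEncoder.encode(targetUrl, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder()
                .uri(URI.create(url))
                .timeout(Duration.ofSeconds(30)) // illustrative value
                .GET()
                .build();
    }

    // Returns immediately; the future completes with a short report once the response is in.
    static CompletableFuture<String> crawl(HttpClient client, HttpRequest request) {
        return client.sendAsync(request, HttpResponse.BodyHandlers.ofString())
                .thenApply(response -> "Status " + response.statusCode() + "\n" + response.body())
                .exceptionally(error -> "Request failed: " + error);
    }

    public static void main(String[] args) {
        HttpClient client = HttpClient.newHttpClient();
        // INSERT_TOKEN and example.com are placeholders.
        CompletableFuture<Void> done =
                crawl(client, buildRequest("INSERT_TOKEN", "https://example.com/"))
                        .thenAccept(System.out::println);
        // Other work could run here while the request is in flight.
        done.join(); // wait at the very end so the program does not exit early
    }
}
```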

Conclusion

That’s how to build a web crawler in Java. The HttpClient API, which was introduced in Java 11, makes it easy to send requests and handle responses from a server.

And if the API is combined with a versatile tool like Crawlbase, it can make web crawling tasks smooth and rewarding.

With Crawlbase, you can create a scraper that can help you to retrieve information from websites anonymously and without worrying about being blocked.

It’s the tool you need to take your crawling efforts to the next level.


Happy scraping!