In the past, data researchers had to struggle to find the information they needed for a particular purpose. The internet holds dynamic data that may be structured, unstructured, or semi-structured, and this information is spread across webpages, blog posts, research articles, HTML tables, images, videos, and more. Collecting it manually was therefore a time-consuming task.

Web scraping was introduced to ease the manual search for data and has proved a blessing for social scientists across the globe. Web scraping is the process of automatically fetching data available online, either with a robotic process automation (RPA) program or with web scraping APIs, which can also route your requests through proxies.

Web Scraping by Robotic Process Automation (RPA)

Robotic Process Automation (RPA) is a technique for performing repetitive tasks faster and more effectively. You can also scrape the web by writing an RPA program that searches an online source for specific information and compiles the scraped data into a document for further use. RPA can be very effective, but it is not recommended for the following reasons:

- It repeats the same fixed sequence of steps every time it runs.

- A website can block the RPA scraper because it opens the same pages again and again.

- It is only feasible for web interfaces whose UI (user interface) elements stay the same.

- It increases the load on the system.

Web Scraping by Web Scraping APIs

Application Programming Interfaces (APIs) developed specifically for scraping data from online sources are the most reliable way to scrape data.

A web scraping API acts as an intermediary between your application and the target website, letting you scrape reliably and smoothly. It retrieves data automatically, even from dynamic web sources.
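To make the "intermediary" idea concrete: instead of requesting the target page directly, your code requests the scraping API and passes the target URL as a parameter; the API fetches the page on your behalf and returns its content. The sketch below only builds that request URL. The endpoint `https://api.scraper.example/v1/`, the `token` and `url` parameter names, and the helper `scraping_api_url` are hypothetical; every provider defines its own endpoint and parameters.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; real scraping APIs each define their own.
API_ENDPOINT = "https://api.scraper.example/v1/"


def scraping_api_url(target_url, token, **options):
    """Build the URL to request from the scraping API instead of the target site.

    The target URL is percent-encoded into the query string so the API
    can fetch it for you. Extra keyword options (hypothetical, e.g. render="true")
    are passed through as additional query parameters.
    """
    params = {"token": token, "url": target_url, **options}
    return API_ENDPOINT + "?" + urlencode(params)
```

For example, `scraping_api_url("https://example.com/page?id=1", "TOKEN")` yields a URL whose `url` parameter carries the fully encoded target address, so characters such as `?` and `=` in the target do not clash with the API's own query string.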

Ways to Avoid Getting Blocked

Web scraping APIs are web services that automate fetching data from online sources. They are also the most cost-effective and time-effective method.

However, any technology built on automation needs careful handling if you want to avoid being blocked by websites. Scraping is easy to detect if you ignore the following practices.

1. Implementing a Scraping API to Avoid CAPTCHA Blocks

CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. It aims to detect whether an incoming visitor to a site is a bot, possibly used for phishing or other harmful purposes, or an ordinary user accessing the data available on that web page.

Many websites have integrated algorithms that distinguish human visitors from bots. Web scraping APIs have built-in methods for dealing with the dynamic techniques that may block scraping. They integrate easily into your applications, route requests through various proxies on a dynamic infrastructure, and also handle the CAPTCHAs that could otherwise interrupt your scraping.

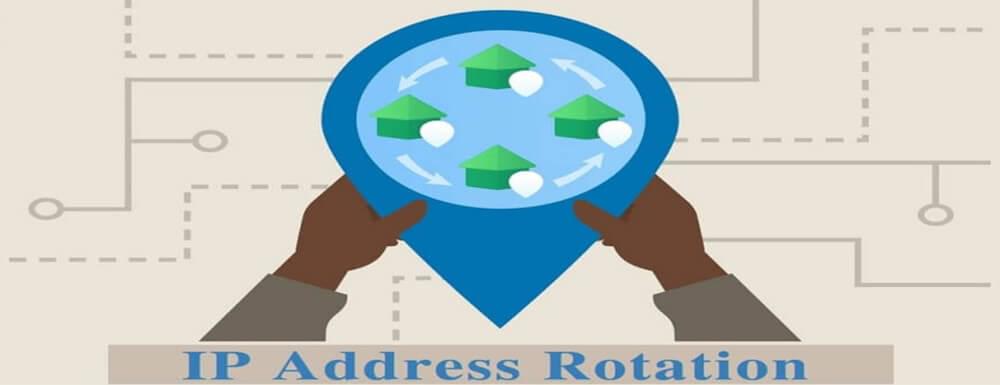
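One common way to combine direct requests with a scraping API is to use the API as a fallback: try the page directly, and if the response looks like a CAPTCHA or block page, retry through the API. The sketch below shows that control flow. The marker strings, the status codes treated as "blocked", and the names `looks_like_challenge` and `fetch_with_fallback` are hypothetical; real CAPTCHA pages vary by vendor and site, so the check is only a rough heuristic. The two fetch functions are supplied by the caller and stand in for your own HTTP code.

```python
# Hypothetical markers; real challenge pages differ from site to site.
CHALLENGE_MARKERS = ("captcha", "are you a robot", "unusual traffic")
# 403 Forbidden and 429 Too Many Requests often accompany bot blocking.
BLOCK_STATUS_CODES = (403, 429)


def looks_like_challenge(status_code, body):
    """Heuristically guess whether a response is a bot challenge instead of data."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    text = body.lower()
    return any(marker in text for marker in CHALLENGE_MARKERS)


def fetch_with_fallback(url, direct_fetch, api_fetch):
    """Try a direct request first; if it looks challenged, retry via the scraping API.

    direct_fetch and api_fetch are caller-supplied functions that take a URL
    and return a (status_code, body) tuple.
    """
    status, body = direct_fetch(url)
    if looks_like_challenge(status, body):
        status, body = api_fetch(url)
    return status, body
```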

2. Utilizing Rotating IP Addresses

An IP address identifies and locates each device connected to the internet. There are two versions of IP addresses, IPv4 and IPv6. IP rotation is the process of assigning a device different IP addresses at scheduled or unscheduled intervals. Using periodically rotated IP addresses is a proven way to avoid being blocked while scraping data from web sources.

An ISP (Internet Service Provider) assigns each active connection an address from its pool of IPs. When you disconnect and reconnect, the ISP automatically assigns another available IP address. Internet Service Providers rotate IP addresses in the following ways:

- Pre-configured IP Rotation: Rotation is set up in advance to occur at fixed intervals; each time the interval elapses, the user is assigned a new IP address.

- Specified IP Rotation: The user chooses the IP address for a given connection.

- Random IP Rotation: Each outgoing connection is assigned a random IP address, and the user has no control over which one.

- Burst IP Rotation: A new IP address is assigned after a specified number of connections, usually 10, so the eleventh connection gets a new IP address.

Rotating IP addresses is an efficient and proven way to keep your requests from being blocked.
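The rotation schemes listed above can be sketched in Python. This is a minimal sketch under a stated assumption: ISPs perform rotation on their side, whereas here a scraper applies the same schemes to a pool of addresses it controls (for example, proxy endpoints). The class name `IPRotator`, its parameters, and the example addresses (taken from the 203.0.113.0/24 documentation range) are hypothetical. The clock and random generator are injectable so the behavior can be tested without waiting.

```python
import random
import time


class IPRotator:
    """Choose an address from a pool using one of the rotation schemes above."""

    def __init__(self, pool, mode="burst", burst_size=10, interval_s=60.0,
                 clock=time.monotonic, rng=None):
        if not pool:
            raise ValueError("pool must not be empty")
        self.pool = list(pool)
        self.mode = mode              # "interval", "random", or "burst"
        self.burst_size = burst_size
        self.interval_s = interval_s
        self.clock = clock
        self.rng = rng or random.Random()
        self.index = 0                # position of the current address in the pool
        self.uses = 0                 # connections served so far (burst mode)
        self.last_switch = clock()    # time of the last switch (interval mode)

    def next_address(self, specified=None):
        # Specified rotation: the caller picks the address for this connection.
        if specified is not None:
            return specified
        # Random rotation: an independent random pick for every connection.
        if self.mode == "random":
            return self.rng.choice(self.pool)
        # Pre-configured rotation: move to the next address once interval_s elapses.
        if self.mode == "interval":
            now = self.clock()
            if now - self.last_switch >= self.interval_s:
                self.index = (self.index + 1) % len(self.pool)
                self.last_switch = now
            return self.pool[self.index]
        # Burst rotation: move to the next address after burst_size connections.
        if self.mode == "burst":
            if self.uses and self.uses % self.burst_size == 0:
                self.index = (self.index + 1) % len(self.pool)
            self.uses += 1
            return self.pool[self.index]
        raise ValueError(f"unknown mode: {self.mode}")
```

With `mode="burst"` and `burst_size=10`, the first ten calls return the first address and the eleventh returns the next one, matching the burst description above.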

3. Setting Up Subsidiary Request Headers

HTTP (Hypertext Transfer Protocol) request and response messages each include a header section, and these headers define the operating parameters of an HTTP transaction. By creating and configuring suitable request headers, you can control how the server delivers content to your scraper and avoid being blocked during web scraping.
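As an illustration, the sketch below attaches browser-like request headers using Python's standard `urllib.request`. The specific `User-Agent`, `Accept`, and `Accept-Language` values are illustrative assumptions, not values any particular site requires, and `build_request` is a hypothetical helper. The request is only constructed, not sent, so the sketch runs offline; you would pass it to `urllib.request.urlopen` to actually fetch the page.

```python
import urllib.request

# Illustrative header values; adjust them to what your scraper should present.
DEFAULT_HEADERS = {
    "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                   "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, extra_headers=None):
    """Return a GET Request carrying the default headers plus any extras.

    Extra headers override defaults with the same name.
    """
    headers = dict(DEFAULT_HEADERS)
    if extra_headers:
        headers.update(extra_headers)
    return urllib.request.Request(url, headers=headers)
```

Note that `urllib.request.Request` normalizes header names with `str.capitalize()`, so `User-Agent` is stored and looked up as `User-agent`.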

4. Stay Alert to Honeypot Traps

A honeypot trap is a link or page element that is hidden from human visitors but present in the page's HTML, so only bots follow it; a scraper that does so reveals itself. The most important rule of web scraping is to make your activity as inconspicuous as possible, so that your target websites have no reason to suspect you. For this purpose, you need a well-organized scraping program that operates effectively and flexibly.
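Because honeypot links are typically hidden from human visitors, one defensive step is to skip links a person could not see. The sketch below uses Python's standard `html.parser` to split links into visible ones and ones hidden with inline `display:none` / `visibility:hidden` styles or the `hidden` attribute. It is a simplified heuristic: it assumes the hiding happens on the `<a>` tag itself and does not evaluate external stylesheets, CSS classes, or hidden parent elements. The class name `LinkClassifier` is hypothetical.

```python
from html.parser import HTMLParser

# Inline style fragments (spaces removed, lowercased) that hide an element.
HIDDEN_STYLES = ("display:none", "visibility:hidden")


class LinkClassifier(HTMLParser):
    """Collect hrefs of <a> tags, separating inline-hidden links from visible ones."""

    def __init__(self):
        super().__init__()
        self.visible = []   # links a human visitor could see and click
        self.skipped = []   # likely honeypots: hidden on the tag itself

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)  # attribute names arrive lowercased from HTMLParser
        href = attr.get("href")
        if href is None:
            return
        style = (attr.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attr or any(s in style for s in HIDDEN_STYLES)
        (self.skipped if hidden else self.visible).append(href)
```

Usage: create a `LinkClassifier`, call `feed(html_text)`, and only follow the URLs in `visible`.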

Measures to Stay Safe from Honeypot Traps:

Here are some essential measures for avoiding blocked requests and staying safe from honeypot traps while scraping:

i. Check Terms & Conditions:

First, read the terms and conditions of the website you want to scrape to check whether they restrict or prohibit scraping. If they do, stop scraping that website; that is the only safe way forward.

ii. Load Minimization:

Keep the load you put on every website you scrape as low as possible, and minimize it carefully for every website or page you intend to scrape. A continuous or high load can alert site owners that you are scraping their site and make them cautious towards you, which can cause problems for you. Several techniques can reduce the load on a given website:

a. Cache the URLs of pages you have already crawled so you never need to load them again, which minimizes the website's load.

b. Work slowly and avoid sending multiple requests simultaneously, since concurrent requests put pressure on the site's resources.

c. Scrape only the content you actually need.
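Techniques (a) and (b) above can be combined in a small wrapper: remember what has already been fetched, and pause between the requests that do go out, one at a time. The sketch below is a minimal version under stated assumptions: `PoliteCrawler` is a hypothetical class, the caller supplies the `fetch` function that performs the actual HTTP request, and the 2-second default delay is an arbitrary example rather than a recommended value. The cache here stores page bodies keyed by URL, which also covers the "do not load them again" goal.

```python
import time


class PoliteCrawler:
    """Fetch pages sequentially, never re-fetching a URL, with a pause between requests."""

    def __init__(self, fetch, delay_seconds=2.0, sleep=time.sleep):
        self.fetch = fetch            # caller-supplied: url -> page body
        self.delay = delay_seconds    # pause before every request after the first
        self.sleep = sleep            # injectable so tests need not really wait
        self.cache = {}               # url -> body of already-crawled pages
        self.request_count = 0

    def get(self, url):
        # Serve previously crawled pages from the cache: no load on the site.
        if url in self.cache:
            return self.cache[url]
        # Space out real requests instead of sending them in parallel.
        if self.request_count:
            self.sleep(self.delay)
        body = self.fetch(url)
        self.request_count += 1
        self.cache[url] = body
        return body
```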

iii. Suitable Web Scraping Tool Utilization:

The scraping tool you use should vary its measures, change its scraping pattern, and present a benign front to websites. That way, nothing about your activity will alarm the sites and make them defensive or over-sensitive.

iv. Use of Proxy APIs:

Use multiple IP addresses when scraping. Proxy servers, VPN services, and scraping APIs such as Crawlbase are all effective for this purpose. Proxies are an efficient way to reduce the risk of being blocked while scraping data from a web source.
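With Python's standard library, routing requests through a proxy means installing a `ProxyHandler` in a URL opener. The sketch below builds such an opener without contacting the network. The proxy address `http://proxy1.example:8080` is a hypothetical placeholder for one supplied by your provider, and `opener_with_proxy` is a hypothetical helper name; you could call it with a different address from your pool for each batch of requests.

```python
import urllib.request


def opener_with_proxy(proxy_url):
    """Return an opener that sends both HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    # build_opener replaces its default ProxyHandler with the one passed in.
    return urllib.request.build_opener(handler)


# Usage (performs a real request, so it is left as a comment):
# opener = opener_with_proxy("http://proxy1.example:8080")
# with opener.open("https://example.com/", timeout=10) as resp:
#     html = resp.read()
```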

v. Avoid Honeypot Traps by Visiting ‘robots.txt’:

Always check the site's “robots.txt” file. It gives you insight into the website's policies for automated clients: which pages you are allowed to crawl and, if the site sets a Crawl-delay, how long to wait between requests.

By understanding and following these instructions, you reduce the chance of having your requests blocked or running into trouble with the owners of the websites you want to scrape.
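Python's standard library can interpret robots.txt rules through `urllib.robotparser.RobotFileParser`. The sketch below parses an example file from a string so it runs offline; the rules themselves, the agent name `MyScraper`, and the `example.com` URLs are made up for illustration. Against a real site you would instead call `set_url("https://…/robots.txt")` followed by `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (hypothetical rules for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def make_robots_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    rp = RobotFileParser()
    rp.parse(robots_text.splitlines())
    return rp


rp = make_robots_parser(ROBOTS_TXT)
# Before each request, check the path is allowed for your user agent:
#   rp.can_fetch("MyScraper", url)
# and honor the requested delay, if any:
#   rp.crawl_delay("MyScraper")
```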

5. Dynamic Scraping Pattern with Irregular Timing

To scrape without being blocked, use a dynamic scraping pattern with irregular timings and intervals.

Bots such as RPA scrapers generally follow exactly the patterns they were programmed for. A typical human visitor, by contrast, visits at varying times and searches in different ways to find the desired data. The same logic can be applied to web scraping, for example through scraping APIs, to keep your requests from being blocked.
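One simple way to break a fixed pattern is to randomize both the order in which pages are visited and the wait before each request. The sketch below produces such a schedule. The function names and the default timing (3 ± 2 seconds, drawn uniformly) are hypothetical choices for illustration, not recommended values; the random generator is injectable so a schedule can be reproduced in tests.

```python
import random


def jittered_delays(n, base_s=3.0, jitter_s=2.0, rng=None):
    """Return n wait times drawn uniformly from [base_s - jitter_s, base_s + jitter_s].

    The lower bound is clamped at zero so a wait is never negative.
    """
    rng = rng or random.Random()
    low = max(0.0, base_s - jitter_s)
    return [rng.uniform(low, base_s + jitter_s) for _ in range(n)]


def irregular_schedule(urls, base_s=3.0, jitter_s=2.0, rng=None):
    """Shuffle the visiting order and pair each URL with a random pre-request wait."""
    rng = rng or random.Random()
    order = list(urls)
    rng.shuffle(order)
    delays = jittered_delays(len(order), base_s, jitter_s, rng)
    return list(zip(order, delays))


# Usage: sleep for the paired delay, then fetch the URL.
# for url, delay in irregular_schedule(urls):
#     time.sleep(delay)
#     fetch(url)
```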

Concluding Statement

This article has covered the traps and roadblocks you might face while web scraping. The methods discussed above offer practical, logical ways to overcome these difficulties and keep your requests from being blocked.

By understanding and following the rules of web scraping, you can easily scrape the required information from any available online source. This information can be useful in many ways and for a wide range of purposes.