Scraping data from Foursquare is useful for developers and businesses that need data on venues, user reviews, or location-based insights. Foursquare is one of the most popular location-based services, with over 50 million monthly active users and over 95 million locations worldwide. By scraping Foursquare, you can gather information for market research, business development, or building location-based applications.

This article shows how to scrape Foursquare data using Python and the Crawlbase Crawling API, which is well suited to sites like Foursquare that rely heavily on JavaScript rendering. Whether you want search listings or detailed venue information, we will walk through the process step by step.

Let’s dive into the process, from setting up your Python environment to extracting and storing Foursquare data in a structured way.

Table of Contents

- Why Extract Data from Foursquare?

- Key Data Points to Extract from Foursquare

- Crawlbase Crawling API for Foursquare Scraping

- Crawlbase Python Library

- Installing Python and Required Libraries

- Choosing an IDE

- Inspecting the HTML for Selectors

- Writing the Foursquare Search Listings Scraper

- Handling Pagination

- Storing Data in a JSON File

- Complete Code Example

- Inspecting the HTML for Selectors

- Writing the Foursquare Venue Details Scraper

- Storing Data in a JSON File

- Complete Code Example

Why Extract Data from Foursquare?

Foursquare is a large platform with location data on millions of places such as restaurants, cafes, and parks. Whether you’re building a location-based app, doing market research, or analyzing venue reviews, its data is likely to be relevant. Extracting this data gives you insights to inform your decisions and planning.

Businesses can use Foursquare data to learn more about customer preferences, popular venues, and regional trends. Developers can use this data to build custom apps like travel guides or recommendation engines. Foursquare lists venue names, addresses, ratings, and reviews, so data extracted from the platform can add real value to your project.

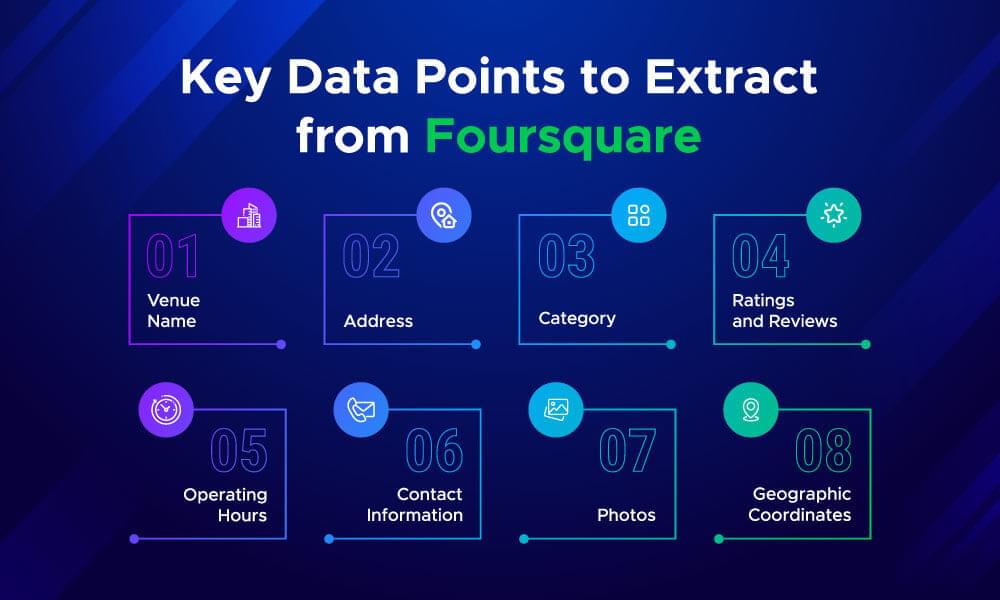

Key Data Points to Extract from Foursquare

When scraping Foursquare, you need to know what data you can collect. Here’s what you can get from Foursquare:

- Venue Name: The business or location name, e.g., restaurant, cafe, park.

- Address: Full address of the venue, including street, city, and postal code.

- Category: Type of venue (e.g., restaurant, bar, museum) to categorize the data.

- Ratings and Reviews: User-generated ratings and reviews that indicate customer satisfaction.

- Operating Hours: Business hours of the venue, useful for time-sensitive applications.

- Contact Information: Phone numbers, email addresses, or websites to contact the venue.

- Photos: User-uploaded photos that give a visual impression of the venue.

- Geo-coordinates: Latitude and longitude of the venue for mapping and location-based apps.

Crawlbase Crawling API for Foursquare Scraping

Foursquare uses JavaScript to load its content dynamically, which makes it hard to scrape with plain HTTP requests that only return the initial HTML. This is where the Crawlbase Crawling API comes in. It’s designed to handle websites with heavy JavaScript rendering by mimicking real user interactions and rendering the full page.

Here’s why using the Crawlbase Crawling API for scraping Foursquare is a great choice:

- JavaScript Rendering: It takes care of loading all the dynamic content on Foursquare pages, so you get complete data without missing important information.

- IP Rotation and Proxies: Crawlbase automatically rotates IP addresses and uses smart proxies to avoid getting blocked by the site.

- Easy Integration: The Crawlbase API is simple to integrate with Python and offers flexible options to control scraping, such as scroll intervals, wait times, and pagination handling.

Crawlbase Python Library

Crawlbase also provides a Python library that makes it easy to use Crawlbase products in your projects. You’ll need an access token, which you can get by signing up with Crawlbase.

Here’s an example of sending a request to the Crawlbase Crawling API:

from crawlbase import CrawlingAPI

Note: Crawlbase provides two types of tokens: a Normal Token for static sites and a JavaScript (JS) Token for dynamic or browser-rendered content, which is necessary for scraping Foursquare. Crawlbase also offers 1,000 free requests to help you get started, and you can sign up without a credit card. For more details, check the Crawlbase Crawling API documentation.
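As a minimal sketch of that request flow, a fetch helper might look like the following. It assumes the response is a dictionary with `headers` and `body` keys and that a successful request reports `pc_status` as `'200'`, as in Crawlbase's published examples. The helper name `fetch_html` and the token placeholder are illustrative, not part of the library.

```python
def fetch_html(api, url, options=None):
    """Fetch a page through a Crawlbase-style client and return its HTML, or None.

    `api` is any object exposing get(url, options), such as crawlbase.CrawlingAPI.
    The response shape used here is an assumption based on Crawlbase's examples.
    """
    response = api.get(url, options or {})
    status = str(response.get("headers", {}).get("pc_status", ""))
    if status != "200":
        print(f"Request failed, Crawlbase status: {status or 'unknown'}")
        return None
    body = response.get("body", b"")
    # The body may arrive as bytes; decode it so it can be parsed as text.
    return body.decode("utf-8") if isinstance(body, bytes) else body


# Usage (requires `pip install crawlbase` and a JS token):
#   from crawlbase import CrawlingAPI
#   api = CrawlingAPI({"token": "YOUR_CRAWLBASE_JS_TOKEN"})
#   html = fetch_html(api, page_url)  # page_url: the Foursquare page to fetch
```

Passing the client in as an argument keeps the helper easy to test with a stand-in object before spending real API requests.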

In the next section, we’ll go through how to set up your Python environment to start scraping.

Setting Up Your Python Environment

Before we can start scraping Foursquare data, we need to set up the right Python environment. This includes installing Python and the necessary libraries, and choosing an IDE (Integrated Development Environment) to write and run our code.

Installing Python and Required Libraries

First, make sure that you have Python installed on your computer. You can download the latest version of Python from python.org. Once installed, you can check if Python is working by running this command in your terminal or command prompt:

python --version

Next, you’ll need to install the required libraries. For this tutorial, we’ll use the crawlbase library to call the Crawling API and BeautifulSoup to parse HTML. You can install both by running the following command:

pip install crawlbase beautifulsoup4

These libraries will help you interact with the Crawlbase Crawling API, extract useful information from the HTML, and organize the data.

Choosing an IDE

To write and run your Python scripts, you need an IDE. Here are some options:

- VS Code: A lightweight code editor with great Python support.

- PyCharm: A more advanced Python IDE with lots of features.

- Jupyter Notebook: An interactive, browser-based notebook environment that is well suited to experimenting with code and inspecting data.

Choose whichever you prefer. Once your environment is set up, you can start writing the code for Foursquare search listings.

Scraping Foursquare Search Listings

In this section, we will scrape search listings from Foursquare. Foursquare search listings have various details about places, such as name, address, category, and more. We’ll break this down into the following steps:

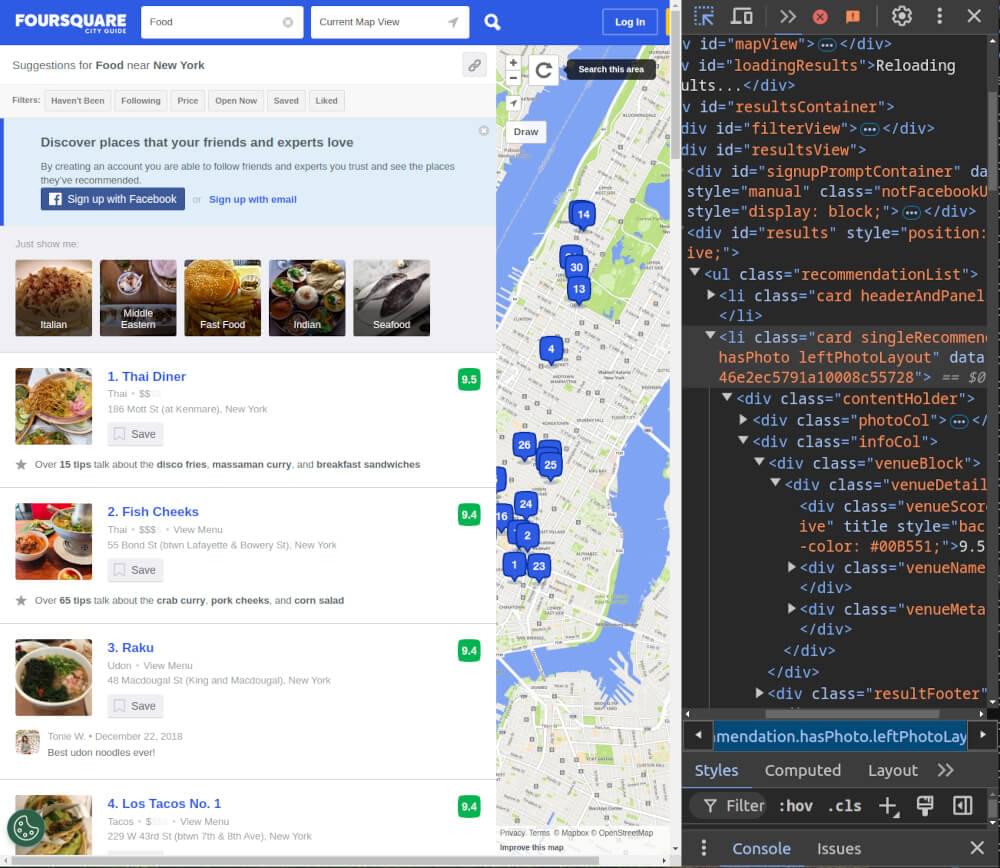

Inspecting the HTML for Selectors

Before writing the scraper, we need to inspect the Foursquare search page to identify the HTML structure and CSS selectors that contain the data we want to extract. Here’s how you can do that:

- Open the Search Listings Page: Go to a Foursquare search results page (e.g. search for “restaurants” in a specific location).

- Inspect the Page: Right-click on the page and select “Inspect” or press Ctrl + Shift + I to open Developer Tools.

- Find the Relevant Elements:

  - Place Name: The place name is inside a <div> tag with the class .venueName, and the actual name is inside an <a> tag inside this div.

  - Address: The address is inside a <div> tag with the class .venueAddress.

  - Category: The category of the place can be extracted from a <span> tag with the class .categoryName.

  - Link: The link to the place details is inside the same <a> tag within the .venueName div.

Inspect the page for any additional data you want to extract, such as the rating or number of reviews.
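To illustrate how these selectors could be applied, here is a small BeautifulSoup sketch. It assumes that each result's name, address, and category elements share a common parent element, which should be confirmed in Developer Tools. The function name `parse_listings` and the output keys are illustrative.

```python
from bs4 import BeautifulSoup


def text_or_none(node):
    """Return the stripped text of a tag, or None when the tag is missing."""
    return node.get_text(strip=True) if node else None


def parse_listings(html):
    """Extract name, address, category, and link from search-result HTML."""
    soup = BeautifulSoup(html, "html.parser")
    listings = []
    for name_div in soup.select(".venueName"):
        # Assumption: the other fields for this result live under the same parent.
        card = name_div.parent
        link = name_div.select_one("a")
        listings.append({
            "name": text_or_none(link),
            "address": text_or_none(card.select_one(".venueAddress")),
            "category": text_or_none(card.select_one(".categoryName")),
            "link": link.get("href") if link else None,
        })
    return listings
```

Returning `None` for missing fields keeps one incomplete listing from stopping the whole run, which matters because selectors on a live site can change.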

Writing the Foursquare Search Listings Scraper

Now that we have our CSS selectors for the data points, we can start writing the scraper. We will use the Crawlbase Crawling API to handle JavaScript rendering and AJAX requests, utilizing its ajax_wait and page_wait parameters.

Here’s the code:

from crawlbase import CrawlingAPI

In the above code, we make a request to the Foursquare search page using the Crawlbase Crawling API. We use BeautifulSoup to parse the HTML and extract the data points using the CSS selectors. Then we store the data in a list of dictionaries.
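The ajax_wait and page_wait parameters mentioned above are passed to the API as request options. The sketch below builds such an options dictionary. The helper name `build_render_options` and the default of 5000 milliseconds are assumptions chosen for illustration, so tune the wait to how long the page actually takes to load.

```python
def build_render_options(page_wait_ms=5000, ajax_wait=True):
    """Build Crawling API options that give JavaScript content time to load."""
    if page_wait_ms < 0:
        raise ValueError("page_wait_ms must be non-negative")
    return {
        # Ask the API to wait for asynchronous (AJAX) requests to finish.
        "ajax_wait": "true" if ajax_wait else "false",
        # Extra time, in milliseconds, to wait after the page loads.
        "page_wait": str(page_wait_ms),
    }


# Usage with a CrawlingAPI client (not executed here):
#   response = crawling_api.get(search_url, build_render_options())
```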

Handling Pagination

Foursquare search listings use button-based pagination. To handle pagination, we will use the css_click_selector parameter provided by the Crawlbase Crawling API. This allows us to simulate a button click to load the next set of results.

We will set the css_click_selector to the button class or ID responsible for pagination (usually a “See more results” button).

def make_crawlbase_request_with_pagination(url):
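A hedged sketch of a click-based pagination request follows. The selector for the “See more results” button is not given in this article, so `click_selector` is a parameter you must fill in after inspecting the page. The function name `fetch_with_click` and the response shape (a dictionary with `headers` and `body`) are assumptions based on Crawlbase's published examples.

```python
def fetch_with_click(api, url, click_selector, page_wait_ms=5000):
    """Request a page and ask the API to click one element before returning HTML.

    `api` is any object exposing get(url, options), such as crawlbase.CrawlingAPI.
    `click_selector` is the CSS selector of the pagination button (site-specific).
    """
    options = {
        "ajax_wait": "true",
        "page_wait": str(page_wait_ms),
        # Element to click so that the next batch of results is loaded.
        "css_click_selector": click_selector,
    }
    response = api.get(url, options)
    if str(response.get("headers", {}).get("pc_status", "")) != "200":
        return None
    body = response.get("body", b"")
    return body.decode("utf-8") if isinstance(body, bytes) else body


# Usage (the selector below is hypothetical; confirm it in Developer Tools):
#   html = fetch_with_click(crawling_api, search_url, "button.see-more-results")
```

Because the click happens before the HTML is returned, the page wait should be long enough for the extra results to render.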

Storing Data in a JSON File

Once we have scraped the data we can store it in a JSON file for later use. JSON is a popular format for storing and exchanging data.

def save_data_to_json(data, filename='foursquare_data.json'):
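One plausible body for this function, using only the standard json module, is sketched below. The signature comes from the article; the UTF-8 encoding, `ensure_ascii=False`, and the indentation width are assumptions made so that venue names with accents stay readable in the file.

```python
import json


def save_data_to_json(data, filename="foursquare_data.json"):
    """Write scraped records to a JSON file, keeping non-ASCII text readable."""
    with open(filename, "w", encoding="utf-8") as json_file:
        json.dump(data, json_file, ensure_ascii=False, indent=4)
    print(f"Data saved to {filename}")
```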

Complete Code Example

Below is the complete code that combines all the steps:

from crawlbase import CrawlingAPI

Example Output:

[

Scraping Foursquare Venue Details

In this section, we will learn how to scrape individual Foursquare venue details. After scraping the listings, we can then dig deeper and collect specific data from each venue’s page.

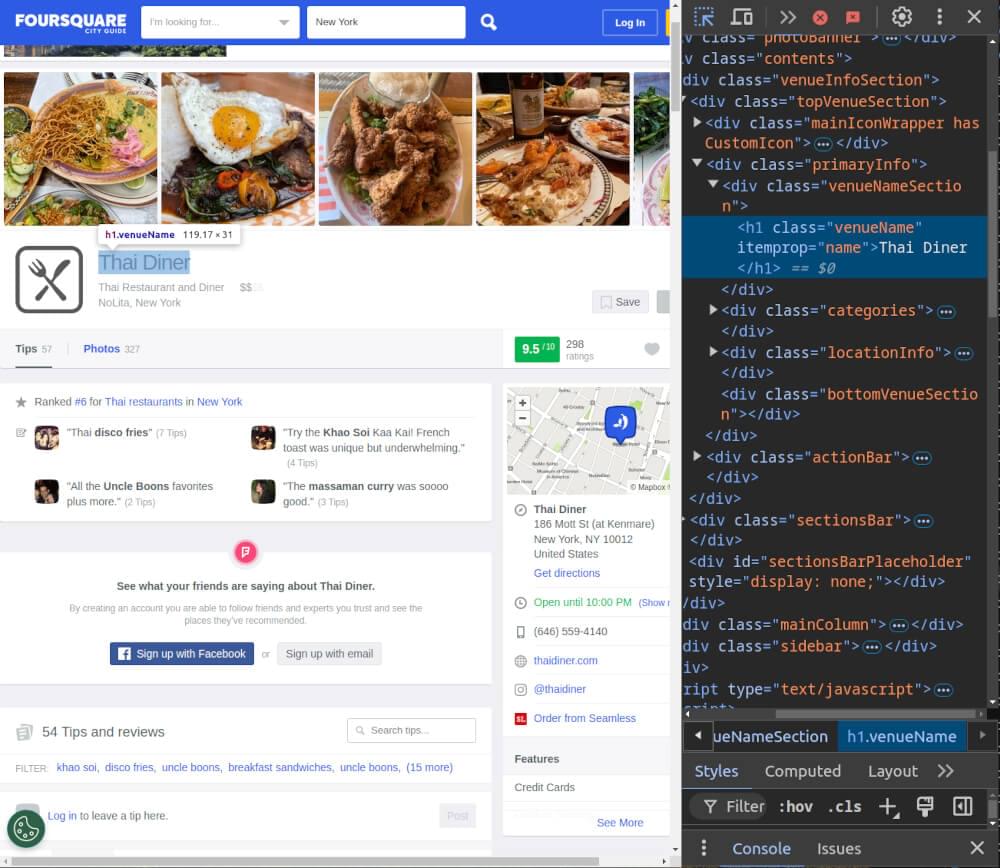
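Links collected from search listings may be relative paths rather than full URLs. The sketch below turns them into absolute venue URLs and drops duplicates. It assumes each listing is a dictionary with a `link` key (a hypothetical field name) and that venue pages live under `https://foursquare.com`.

```python
from urllib.parse import urljoin

BASE_URL = "https://foursquare.com"  # assumption: venue links resolve against this host


def venue_urls(listings, base_url=BASE_URL):
    """Return unique absolute venue URLs, preserving the listing order."""
    urls = []
    seen = set()
    for item in listings:
        href = item.get("link")
        if not href:
            continue  # skip listings where no link was found
        absolute = urljoin(base_url, href)
        if absolute not in seen:
            seen.add(absolute)
            urls.append(absolute)
    return urls
```

`urljoin` leaves already absolute links unchanged, so the helper works whether the site emits relative or full URLs.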

Inspecting the HTML for Selectors

Before we write our scraper, we first need to inspect the Foursquare venue details page to figure out which HTML elements contain the data we want. Here’s what you should do:

- Visit a Venue Page: Open a Foursquare venue page in the browser.

- Use Developer Tools: Right-click on the page and select “Inspect” (or press Ctrl + Shift + I) to open Developer Tools.

- Identify CSS Selectors: Look for the HTML elements that contain the information you want. Here are some common details and their possible selectors:

  - Venue Name: Found in an <h1> tag with the class .venueName.

  - Address: Found in a <div> tag with the class .venueAddress.

  - Phone Number: Found in a <span> tag with the attribute itemprop="telephone".

  - Rating: Found in a <span> tag with the attribute itemprop="ratingValue".

  - Reviews Count: Found in a <div> tag with the class .numRatings.

Writing the Foursquare Venue Details Scraper

Now that we have the CSS selectors for the venue details, let’s write the scraper. Just like we did in the previous section, we’ll use Crawlbase to handle the JavaScript rendering and Ajax requests.

Here’s a sample Python script to scrape venue details using Crawlbase and BeautifulSoup:

from crawlbase import CrawlingAPI

We use Crawlbase’s get() method to get the venue page. The ajax_wait and page_wait options make sure the page loads completely before we start scraping. We use BeautifulSoup to read the HTML and find the venue’s name, address, phone number, rating, and reviews. If Crawlbase can’t get the page, it will show an error message.
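The parsing step described above can be sketched with a selector table, assuming the venue page uses the selectors identified during inspection. The function name `parse_venue` and the output keys are illustrative, and the selectors should be re-checked against the live page because site markup changes over time.

```python
from bs4 import BeautifulSoup

# Selectors taken from the inspection step; verify them against the live page.
VENUE_SELECTORS = {
    "name": "h1.venueName",
    "address": "div.venueAddress",
    "phone": 'span[itemprop="telephone"]',
    "rating": 'span[itemprop="ratingValue"]',
    "reviews_count": "div.numRatings",
}


def parse_venue(html):
    """Extract venue details from page HTML; missing fields become None."""
    soup = BeautifulSoup(html, "html.parser")
    details = {}
    for field, selector in VENUE_SELECTORS.items():
        node = soup.select_one(selector)
        details[field] = node.get_text(strip=True) if node else None
    return details
```

Keeping the selectors in one dictionary means a markup change on the site only requires editing that table, not the parsing logic.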

Storing Data in a JSON File

After you’ve scraped the venue details, you need to store the data for later use. We’ll save the extracted data to a JSON file.

Here’s the function to save the data:

def save_venue_data(data, filename='foursquare_venue_details.json'):

You can now call this function after scraping the venue details, passing the data as an argument.

Complete Code Example

Here is the complete code example that puts everything together.

from crawlbase import CrawlingAPI

Example Output:

{

Final Thoughts

In this blog, we learned how to scrape data from Foursquare using the Crawlbase Crawling API and BeautifulSoup. We covered the key steps: inspecting the HTML for selectors, writing scrapers for search listings and venue details, and handling pagination. Scraping Foursquare data can be very useful, but you have to do it responsibly and respect the website’s terms of service.

Using the methods outlined in this blog, you can collect venue information such as names, addresses, phone numbers, ratings, and reviews from Foursquare. This data can be useful for research, analysis, or building your own apps.

If you want to do more web scraping, check out our guides on scraping other key websites.

📜 Scrape Costco Product Data Easily

📜 How to Scrape Houzz Data

📜 How to Scrape Tokopedia

📜 Scrape OpenSea Data with Python

📜 How to Scrape Gumtree Data in Easy Steps

If you have any questions or want to give feedback, our support team can help you with web scraping. Happy scraping!

Frequently Asked Questions

Q. Is it legal to scrape data from websites?

The legality of web scraping depends on the website’s terms of service. Many websites allow personal use, but some don’t. Always check the website’s policies before scraping data. Be respectful of these rules, and don’t overload the website’s servers. If in doubt, reach out to the website owner for permission.

Q. How can I scrape venue details from Foursquare?

To scrape venue details from Foursquare, you need to use a web scraping tool or library like BeautifulSoup along with a service like Crawlbase. First, inspect the HTML of the venue page to find the CSS selectors for the details you want, such as the venue’s name, address, and ratings. Then, write a script that fetches the page content and extracts the data using the identified selectors.

Q. How do I handle pagination while scraping Foursquare?

Foursquare has a “See more results” button to show more venues. One way to handle this in your scraper is the Crawlbase Crawling API. By setting the API's css_click_selector parameter to the button's CSS selector, your scraper can click the “See more results” button and fetch the additional results that the first page load does not include.