Have you ever wanted to scrape JavaScript websites? By JavaScript websites we mean sites built with React, Angular, Vue, Meteor, or any other framework that builds pages dynamically or uses Ajax to load content.

Web crawling refers to how search engines like Google explore the web to index information, while scraping involves extracting specific data from websites.

Over time, these techniques have evolved significantly. What started as simple indexing methods has now become more sophisticated, especially with the emergence of JavaScript-based websites. These sites use dynamic content, powered by JavaScript, making the crawling and scraping process more intricate and challenging.

JavaScript-driven websites offer a dynamic, interactive user experience, with content that loads and changes after the initial page load. This shift has transformed how information is presented online, and it complicates the traditional techniques used to crawl and scrape websites.

So if you are ever stuck on how to scrape data from a JavaScript website, or one that loads its content with Ajax, this article will help you.

This is a hands-on article, so if you want to follow along, make sure that you have a Crawlbase account. It’s straightforward to get one, and it’s free. So go ahead and create one here.

Traditional vs. JavaScript Scraping Methods

When it comes to scraping data from websites, there are two primary methods: the traditional approach and the JavaScript-enabled solutions. Let’s explore the differences between these methods and understand their strengths and limitations.

Quick Overview of Traditional Web Scraping Techniques

Traditional web scraping techniques have been around for a while. They involve parsing the HTML structure of web pages to extract desired information. These methods usually work well with static websites where the content is readily available in the page source. However, they run into limitations when they have to scrape data from websites that rely heavily on JavaScript.

Limitations of Traditional Web Scraping Techniques

JavaScript has transformed web development, enabling dynamic and interactive content. But for scrapers relying on traditional methods, this can be a roadblock. When a website uses JavaScript to load or modify content, traditional scrapers might struggle to access or extract this data. They’re unable to interpret the dynamic content generated by JavaScript, leading to incomplete or inaccurate data retrieval.

Advantages of Using JavaScript-enabled Scraping Solutions

As more websites become interactive and dynamic, JavaScript website crawlers, or JS crawlers, bridge this gap. They render the page’s JavaScript the way a browser would, which gives them access to dynamically loaded content.

These solutions offer a broader reach, providing access to websites heavily reliant on JavaScript. They ensure a more comprehensive extraction of data, enabling accurate information retrieval from the most dynamic web pages.

Getting the Proper JavaScript URL to Crawl

Upon registering with Crawlbase, you will see that we don’t have any complex interface where you add the URLs that you want to crawl. We created a simple, easy-to-use API that you can call at any time. Learn more about the Crawling API here.

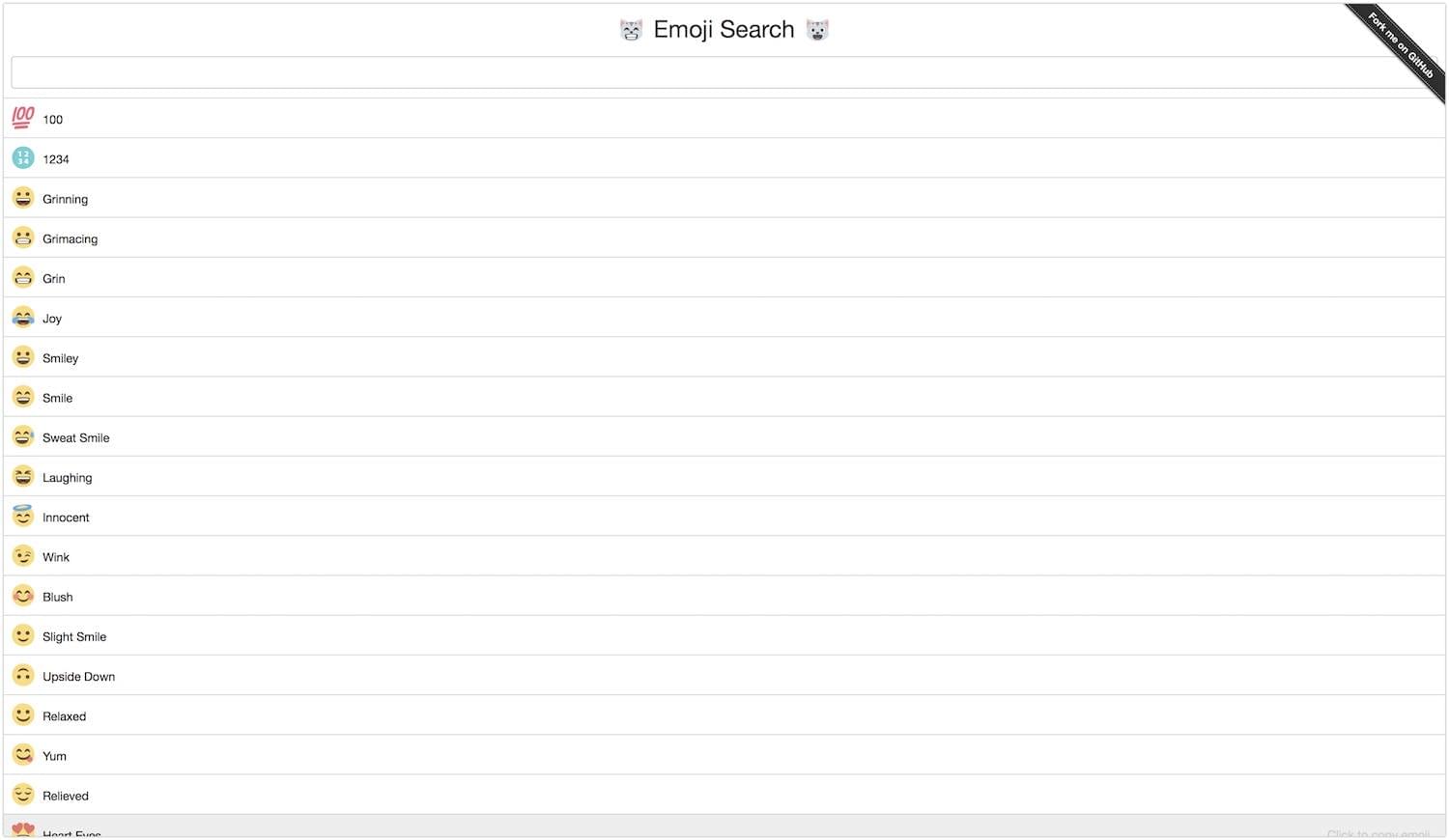

So let’s say we want to crawl and scrape the following page, which is built entirely in React. This is the URL that we will use for demo purposes: https://ahfarmer.github.io/emoji-search/

If you try to load that URL from your console or terminal, you will see that you don’t get all the HTML code from the page. That is because the code is rendered on the client side by React, so with a regular curl command, where there is no browser, that code is not being executed.

You can do the test with the following command in your terminal:

    curl https://ahfarmer.github.io/emoji-search/
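The same check can be sketched in Ruby. This is only an illustration: `fetch_raw_html` does a plain HTTP GET, so no JavaScript runs, and `empty_mount_point?` is a hypothetical helper that assumes the app mounts into an empty `<div id="root">`. That id is a common React convention, not something confirmed for this page.

```ruby
require 'net/http'
require 'uri'

# Plain HTTP GET, equivalent to the curl command: no JavaScript is executed.
def fetch_raw_html(url)
  Net::HTTP.get(URI(url))
end

# Hypothetical helper: true when the HTML contains an empty mount node
# such as <div id="root"></div>. The id "root" is an assumption.
def empty_mount_point?(html, id = 'root')
  pattern = /<div[^>]*\bid=["']#{Regexp.escape(id)}["'][^>]*>\s*<\/div>/i
  html.match?(pattern)
end
```

If `empty_mount_point?(fetch_raw_html('https://ahfarmer.github.io/emoji-search/'))` returns true, that would suggest the visible content is produced on the client side.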

So how can we scrape JavaScript websites easily with Crawlbase?

First, go to the My Account page, where you will find two tokens: the regular token and the JavaScript token.

As we are dealing with a JavaScript rendered website, we will be using the JavaScript token.

For this tutorial, we will use the following demo token: 5aA5rambtJS2. If you are following along, make sure to get your own from the My Account page.

Before making the request, we need to escape the URL so that any special characters won’t collide with the rest of the API call.

For example, if we are using Ruby, we could do the following:

    require 'cgi'
    CGI.escape("https://ahfarmer.github.io/emoji-search/")

This will bring back the following:

    https%3A%2F%2Fahfarmer.github.io%2Femoji-search%2F

Great! We have our JavaScript website ready to be scraped with Crawlbase.

Scraping the JavaScript content

The next thing that we have to do is to make the actual request to get the JavaScript rendered content.

The Crawlbase API will do that for us. We just have to make a request to the following URL: https://api.crawlbase.com/?token=YOUR_TOKEN&url=THE_URL

So you will need to replace YOUR_TOKEN with your token (remember, for this tutorial, we will use the following: 5aA5rambtJS2) and THE_URL will have to be replaced by the URL we just encoded.
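To make the substitution concrete, here is a small sketch that assembles the request URL from its two parts. The constant `API_ENDPOINT` and the method `crawlbase_request_url` are names chosen for this example, not part of any Crawlbase library.

```ruby
require 'cgi'

API_ENDPOINT = 'https://api.crawlbase.com/'

# Builds the request URL from a token and the page to crawl.
# CGI.escape percent-encodes the target so that its ":" and "/"
# do not collide with the API's own query string.
def crawlbase_request_url(token, target_url)
  "#{API_ENDPOINT}?token=#{CGI.escape(token)}&url=#{CGI.escape(target_url)}"
end

puts crawlbase_request_url('5aA5rambtJS2', 'https://ahfarmer.github.io/emoji-search/')
# prints https://api.crawlbase.com/?token=5aA5rambtJS2&url=https%3A%2F%2Fahfarmer.github.io%2Femoji-search%2F
```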

Let’s do it in Ruby!

    require 'net/http'
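A minimal sketch of such a request, using only Ruby’s standard library, might look like this. The method names `crawlbase_uri` and `fetch_rendered_html` are made up for this example, and error handling is reduced to a simple status check.

```ruby
require 'net/http'
require 'uri'

# Builds the API URI; URI.encode_www_form percent-encodes the target URL.
def crawlbase_uri(token, target_url)
  uri = URI('https://api.crawlbase.com/')
  uri.query = URI.encode_www_form(token: token, url: target_url)
  uri
end

# Performs the GET and returns the response body (the rendered HTML).
# Raises if the API does not answer with a 2xx status.
def fetch_rendered_html(token, target_url)
  response = Net::HTTP.get_response(crawlbase_uri(token, target_url))
  unless response.is_a?(Net::HTTPSuccess)
    raise "Request failed with status #{response.code}"
  end
  response.body
end
```

Calling `fetch_rendered_html('5aA5rambtJS2', 'https://ahfarmer.github.io/emoji-search/')` would then return the page’s HTML as a string, provided the token is valid.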

Done. We made our first request to a JavaScript website via Crawlbase. Secure, anonymous and without getting blocked!

Now we should have the HTML from the website back, including the content generated by React, which should look something like this:

    <html lang="en" class="gr__ahfarmer_github_io">

Scraping JavaScript website content

There is now only one part missing: extracting the actual content from the HTML.

This can be done in many different ways, and it depends on the language you are using to code your application. We always suggest using one of the many available libraries that are out there.

Here are some open source libraries that can help you scrape the returned HTML:

JavaScript scraping with Ruby

JavaScript scraping with Node

JavaScript scraping with Python
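As a dependency-free illustration of this last step, the sketch below pulls text out of an HTML fragment with `String#scan`. The sample markup and the `title` class are invented for the example and are not taken from the real page. For real pages, a proper HTML parser is a sturdier choice than a regular expression.

```ruby
# Hypothetical fragment standing in for the HTML returned by the API.
SAMPLE_HTML = <<~HTML
  <div class="row"><span class="title">Grinning</span></div>
  <div class="row"><span class="title">Joy</span></div>
HTML

# Returns the text of every <span class="title"> element.
# The class name is an assumption made for this example.
def extract_titles(html)
  html.scan(/<span class="title">([^<]*)<\/span>/).flatten.map(&:strip)
end

p extract_titles(SAMPLE_HTML)
# prints ["Grinning", "Joy"]
```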

Tools and Techniques to Scrape Data from JavaScript Website

There’s a range of web scraping tools available, each with its specialties and capabilities. They offer functionalities to handle JavaScript execution, DOM manipulation, and data extraction from dynamic elements. Headless browsers simulate full web browser behavior without a graphical interface, making them ideal for automated browsing and scraping tasks, and services such as Crawlbase expose this kind of rendering through an API. These tools are essential for scraping JavaScript websites because they render JavaScript content and let you extract data from dynamically loaded elements.

Role of Headless Browsers in JavaScript Rendering

Headless browsers play a crucial role when you have to scrape data from a JavaScript website. They load web pages, execute JavaScript, and generate a rendered DOM, just as a regular browser does. This makes content generated by JavaScript available for extraction, so the data you retrieve is complete.

Best Practices to Scrape JavaScript Websites

- Understanding Site Structure: Analyze the website’s structure and the way JavaScript interacts with its content to identify elements crucial for data extraction.

- Copy Human Behavior: Mimic human browsing behavior by incorporating delays between requests and interactions to avoid being flagged as a bot.

- Handle Asynchronous Requests: Be proficient in handling AJAX requests and content loaded post-page load, ensuring no data is missed when you scrape JavaScript websites.

- Respect Robots.txt: When you crawl JavaScript websites, always adhere to a website’s robots.txt guidelines and avoid overloading the server with excessive requests.

- Regular Maintenance: Websites frequently update, so ensure the scripts of your JavaScript website scraper adapt to any structural changes for consistent data extraction.
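The advice about spacing out requests can be sketched as a small helper. `each_with_delay` is a hypothetical name, and the default one to three second range is an arbitrary choice for illustration, not a recommended value for any particular site.

```ruby
# Yields each URL, pausing a random interval between consecutive requests.
# `sleeper` is injectable so the pacing can be exercised without waiting.
def each_with_delay(urls, min_delay: 1.0, max_delay: 3.0, sleeper: method(:sleep))
  urls.each_with_index do |url, index|
    # No pause before the first URL; a random pause before every later one.
    sleeper.call(rand(min_delay..max_delay)) if index.positive?
    yield url
  end
end
```

A caller would wrap its fetch logic in the block, for example `each_with_delay(urls) { |url| puts url }`.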

Navigating Challenges in JavaScript Scraping

When you begin to scrape data from JavaScript websites, you will face a unique set of hurdles that call for specific strategies. But don’t worry: you can overcome them with techniques for handling dynamic elements, countering anti-scraping measures, and managing complex rendering.

Dealing with Dynamic Elements and Asynchronous Loading

JavaScript-driven websites often load content asynchronously, meaning some elements may load after the initial page load. This poses a challenge for traditional scraping as the content isn’t immediately available. You can use a JavaScript website scraper with a headless browser that allows you to wait for elements to load dynamically before extracting data.
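The idea of waiting for content boils down to polling a condition until it holds or a timeout expires. The helper below is a generic sketch of that idea, not the API of any particular headless browser library. `wait_until` and its defaults are assumptions for this example.

```ruby
# Calls the block repeatedly until it returns a truthy value or the timeout
# elapses. Returns the block's value, or nil on timeout.
def wait_until(timeout: 10, interval: 0.5)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    result = yield
    return result if result
    return nil if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
    sleep interval
  end
end
```

In a scraper, the block would typically check whether a given element is present in the current DOM and return it once it appears.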

Overcoming Anti-Scraping Measures

Websites implement measures to deter scraping, including CAPTCHAs, IP blocking, or user-agent detection. To bypass these, rotate IP addresses, mimic human behavior, and use proxy servers to prevent getting blocked. Implementing delays and limiting request frequencies also help avoid detection.
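Rotation itself is simple to express. The sketch below hands out a proxy and a user agent in round-robin order for each request. The class name, proxy addresses, and agent strings are all placeholders invented for this example.

```ruby
# Hands out proxies and user agents in round-robin order.
class RequestIdentityRotator
  def initialize(proxies, user_agents)
    # Array#cycle without a block returns an endless enumerator.
    @proxies = proxies.cycle
    @user_agents = user_agents.cycle
  end

  # Returns the proxy and user agent to use for the next request.
  def next_identity
    { proxy: @proxies.next, user_agent: @user_agents.next }
  end
end

# Placeholder values: these proxies and agents are invented for the example.
rotator = RequestIdentityRotator.new(
  ['10.0.0.1:8080', '10.0.0.2:8080'],
  ['ExampleAgent/1.0', 'ExampleAgent/2.0', 'ExampleAgent/3.0']
)
3.times { p rotator.next_identity }
```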

Strategies for Handling Heavy Client-Side Rendering

Client-side rendering, typical in modern web applications, can make scraping complex due to the reliance on JavaScript to load and display content. Using headless browsers can simulate real browsing experiences and extract data from the fully rendered page, bypassing this challenge.

How JavaScript Web Crawlers Influence Various Industries

JavaScript website scrapers have played a significant role in redefining how businesses gather information and insights from the web. Let’s explore the impact this technique has had on different sectors:

1. E-commerce

In the e-commerce sector, scraping data from JavaScript websites opens up valuable opportunities. Retailers crawl JavaScript websites to track pricing trends, monitor competitors, and optimize their product offerings. By scraping dynamic data, businesses can adjust their pricing strategies and inventory, leading to enhanced competitiveness and improved market positioning.

2. Financial Sector

In the financial domain, a JavaScript website crawler gives institutions real-time market data. This scraped data helps in making informed investment decisions, analyzing trends, and monitoring financial news and fluctuations. You can scrape JavaScript websites to access and analyze complex financial information quickly and build strategies suited to fast-moving markets.

3. Research and Analytics

A JavaScript website scraper makes difficult research and analysis tasks easier. From gathering data for academic purposes to extracting valuable information for market analysis, you can crawl JavaScript websites to streamline the information collection process. Researchers use this approach to track trends, conduct sentiment analysis, and derive actionable insights from vast online sources.

4. Marketing and SEO

Scrape JavaScript websites to understand consumer behavior, market trends, and SEO. Marketers can scrape data from competitors’ JavaScript websites, social media platforms, and search engine results. This information helps in designing solid marketing strategies and improving website visibility.

5. Healthcare and Biotechnology

In healthcare and biotech, a JavaScript website scraper facilitates gathering crucial medical data, tracking pharmaceutical trends, and monitoring regulatory changes. This tool assists in the research process, drug discovery, and development of personalized healthcare solutions.

Legal and Ethical Considerations

Before you begin to scrape JavaScript websites, it’s important to comprehend the legal framework surrounding this practice. This includes knowing the regulations related to data collection, copyright laws, and terms of use stipulations on websites you’re scraping.

Ethics matter just as much: scraping should respect privacy and avoid data misuse or infringement. Abiding by website terms of service is a non-negotiable aspect of web scraping. Complying with these terms helps you stay within both ethical standards and the law when you scrape data from JavaScript websites.

Bottom Line!

In this blog about how to crawl JavaScript websites, we’ve discussed the challenges, explored effective solutions, addressed legal and ethical considerations, and assessed the impact on diverse industries. A good JavaScript scraper handles these complexities while staying compliant and ethical.

The future belongs to AI-powered tools like Crawlbase, enabling more efficient scraping, better handling of dynamic elements, and enhanced compliance with legal norms.

Following best practices remains a prerequisite. Leveraging sophisticated tools such as Crawlbase, staying updated on legal boundaries, and maintaining ethical conduct will ensure successful scraping. Adapting to technological advancements and evolving ethical standards is the fundamental principle here.

In conclusion, as JavaScript-based websites expand, navigating the nuances of scraping them proficiently, responsibly, and ethically is essential for businesses and industries. Crawlbase emerges as an exemplary JavaScript website scraper, empowering users to scrape with efficiency and compliance.