Are you planning to build a simple web scraper? Do you already know how to start, or are you still looking for the right tools for the job? Then there is no need to search any further.

In this article, we guide you through building a reliable web scraper from scratch with Node.js, one of the best tools for the job.

What is Node.js used for?

So first, why do we recommend Node.js for web scraping? To answer that, let us look at what exactly Node.js is and what advantages it has over other programming languages.

Node.js, in a nutshell, is an open-source JavaScript runtime environment that runs outside a web browser. Basically, the creators of Node.js took JavaScript, which was mostly confined to the browser, and made it run directly on your computer or server. With the help of Google Chrome’s V8 engine, we can now run JavaScript on our local machine, which lets us access files, listen to network traffic, and even receive HTTP requests and send back a file in response. You can also access databases directly, just as you would with PHP or Ruby on Rails.

If you write code, you have almost certainly come across JavaScript. It is the dominant client-side programming language, used on almost 95 percent of existing websites. With the launch of Node.js, JavaScript has also become a versatile full-stack development language.

Benefits of Using Node.js for Web Scraping

There are many reasons why Node.js has become an industry standard. Companies like Netflix, eBay, and PayPal, to name a few, use Node.js in their core products. To give you a broader sense of why you may want to use Node.js, here are some of its advantages:

Processing Speed: Node.js is fast, mostly thanks to Chrome’s V8 engine, which compiles JavaScript into machine code at run time (just-in-time compilation) instead of relying only on an interpreter. Performance is further helped by the event loop, which handles concurrent requests on a single thread. Because Node.js is built around non-blocking input/output, it uses less CPU when processing many requests simultaneously: while one request waits on the network, the thread moves on to the next.
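To see the non-blocking model at work, here is a small sketch that simulates three slow network requests with timers. The hypothetical fakeRequest function is only a stand-in for a real HTTP call; because none of the waits blocks the single thread, all three overlap.

```javascript
// Stand-in for a network request: resolves with `name` after `ms` milliseconds.
// setTimeout hands the wait to the event loop, so the thread stays free meanwhile.
function fakeRequest(name, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(name), ms));
}

async function fetchAllConcurrently() {
  const start = Date.now();
  // All three "requests" are started before any of them finishes.
  const pages = await Promise.all([
    fakeRequest('page-1', 100),
    fakeRequest('page-2', 100),
    fakeRequest('page-3', 100),
  ]);
  return { pages, elapsedMs: Date.now() - start };
}

fetchAllConcurrently().then(({ pages, elapsedMs }) => {
  console.log(pages, `took about ${elapsedMs} ms`);
});
```

The reported time is roughly 100 ms rather than 300 ms, because the three waits run at the same time on one thread instead of one after another.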

Lightweight and Highly Scalable: Node.js copes well with a growing workload, which makes it a favorite among developers. Because an application can be split into loosely coupled parts, you can add new features or fix existing ones without changing other parts of your project. Code can also be reused and shared through modules, which work as individual building blocks.

Packages/Libraries: You will not be disappointed by the abundance of packages available for Node.js. Very few programming languages enjoy such a rich ecosystem. Thousands of tools and libraries for JavaScript development are ready at your disposal via npm, the online registry for publishing open-source packages. With steady support from an ever-growing community, you are very likely to find packages that fit your specific needs.

Community Support: Naturally, an open-source project like Node.js has a massive community of developers providing solutions and guidance all over the internet. Whether you search GitHub for repositories or seek answers on a community site like Stack Overflow, you will usually find a clear route to resolve any issues you run into along the way.

What is a Node.js Scraping Framework?

A Node.js scraping framework is a set of tools, libraries, and conventions designed to simplify the process of scraping data with Node.js. These frameworks provide developers with pre-built functionalities and abstractions that streamline common scraping tasks, such as making HTTP requests, parsing HTML, handling concurrency, and managing data extraction.

Node.js scraping frameworks typically offer features like:

- HTTP Request Handling: Simplified methods for making HTTP requests to fetch web pages.

- HTML Parsing: Tools for parsing and navigating HTML documents to extract relevant data.

- Concurrency Management: Support for handling multiple scraping tasks concurrently to improve efficiency.

- Data Extraction: Utilities for extracting structured data from HTML documents using CSS selectors, XPath, or other methods.

- Error Handling: Mechanisms for handling errors that may occur while scraping, such as connection timeouts or invalid HTML structures.

- Customization and Extensibility: Options for customizing scraping behavior and extending the framework’s functionality to suit specific project requirements.

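Popular Node.js scraping tools include Puppeteer (headless browser automation), Cheerio (HTML parsing), and Axios (HTTP requests), among others. Strictly speaking, these are libraries rather than full frameworks, and they are often combined; together they abstract away many of the complexities of web scraping, allowing developers to focus on building robust scraping applications efficiently.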
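Of the features listed above, concurrency management is the one most easily underestimated, and the core idea is small. The following dependency-free sketch (mapWithConcurrency is our own hypothetical helper, not part of any library) runs an async task for every item while keeping at most `limit` tasks in flight, which is how you would cap simultaneous page fetches.

```javascript
// Run `task(item, index)` for every item, with at most `limit` tasks running at once.
// Results keep the same order as `items`.
async function mapWithConcurrency(items, limit, task) {
  const results = new Array(items.length);
  let nextIndex = 0;

  // Each worker keeps claiming the next unprocessed index until none are left.
  async function worker() {
    while (nextIndex < items.length) {
      const index = nextIndex++; // claimed synchronously, so no two workers share an index
      results[index] = await task(items[index], index);
    }
  }

  const workerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```

For example, with Node 18+'s built-in fetch, `mapWithConcurrency(urls, 3, (url) => fetch(url).then((res) => res.text()))` would download a list of pages three at a time. If any task rejects, the returned promise rejects, but workers that are already running are not cancelled.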

What is the Best Scraper for Node.js?

The best web scraper for Node.js depends on your target content and project complexity. For static websites, Axios + Cheerio is a quick, simple option that gives you speed and ease of use. When you need to handle dynamic, JavaScript-rendered content, Puppeteer or Playwright stands out. These tools drive a real (usually headless) browser, so you can interact with web pages just like a user would.

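If you’re working on bigger, more complex scraping projects or need to test across different browsers, Selenium (through its selenium-webdriver package for Node.js) is a strong, scalable option. Scrapy is also popular for large crawls, but it is a Python framework (ScrapyJS is a Scrapy plugin, not a Node.js library), so it only fits if you are willing to step outside Node.js. In the end, your choice comes down to what you need most: speed, simplicity, or advanced features.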

Why Use Crawlbase for Your Web Scraper?

You can write the best code in town, but your scraper will only be as good as your proxies. If you are into web scraping, you probably know by now that a large proxy pool should be an integral part of a crawler. A pool of proxies significantly widens your geolocation options, increases the number of concurrent requests you can make, and, most importantly, improves your crawling reliability. However, building one can be difficult on a limited budget. Luckily, Crawlbase is an affordable and reliable option. The Crawling API gives you instant access to thousands of residential and data center proxies. Combine this with artificial intelligence, and you have the best proxy solution for your project.
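To make the idea of a proxy pool concrete, here is a minimal sketch of the simplest rotation strategy, round-robin, where each request goes out through the next proxy in the list. The proxy addresses are hypothetical placeholders, and plugging a proxy into an HTTP client is left out because it depends on the client you choose. A managed service such as the Crawling API handles this rotation for you.

```javascript
// Minimal round-robin proxy rotation.
class ProxyPool {
  constructor(proxies) {
    if (proxies.length === 0) throw new Error('ProxyPool needs at least one proxy');
    this.proxies = [...proxies];
    this.index = 0;
  }

  // Return the next proxy, wrapping around to the start of the list.
  next() {
    const proxy = this.proxies[this.index];
    this.index = (this.index + 1) % this.proxies.length;
    return proxy;
  }
}

// Hypothetical proxies; 203.0.113.0/24 is an IP range reserved for documentation.
const pool = new ProxyPool([
  'http://203.0.113.10:8080',
  'http://203.0.113.11:8080',
  'http://203.0.113.12:8080',
]);
```

Round-robin spreads requests evenly, but it does not skip proxies that have been blocked; production pools usually also track failures and retire bad proxies.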

How to Build a Web Scraper Using Node.js and Crawlbase

Now for the best part. You can build a web scraper with Node.js in a few simple steps; we only need to prepare a few things before diving into the code. So, without further ado, let us go through the steps:

- Create a free Crawlbase account to use the Crawling API service.

- Create a new project folder and initialize it as a Node.js project (for example, by running `npm init -y` inside it).

- Install the Crawlbase module through the terminal by executing the following command:

```shell
npm i crawlbase
```

- Create a new .js file where we will write our code.

- Open the .js file and make good use of the Crawlbase Node library.

In the first two lines, import the Crawling API class from the library and initialize it with your Crawlbase request token, as shown below:

```javascript
const { CrawlingAPI } = require('crawlbase');
const api = new CrawlingAPI({ token: 'YOUR_CRAWLBASE_TOKEN' });
```

Perform a GET request to pass the URL you wish to scrape and add any options from the available parameters in the Crawling API documentation.

The code should now look like this:

```javascript
const { CrawlingAPI } = require('crawlbase');
```
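For comparison, a GET request like this can also be made without the crawlbase package by calling the Crawling API's HTTP endpoint directly. The sketch below assumes Node 18+ (for the built-in fetch) and the endpoint `https://api.crawlbase.com/` with `token` and `url` query parameters as described in the Crawling API documentation; buildCrawlUrl and crawl are hypothetical helper names, and extra options such as `format: 'json'` are simply passed through as query parameters.

```javascript
// Assumed Crawling API endpoint; `token` and `url` are sent as query parameters.
const CRAWLING_API_ENDPOINT = 'https://api.crawlbase.com/';

function buildCrawlUrl(token, targetUrl, options = {}) {
  // URLSearchParams percent-encodes the target URL so it travels as a single parameter.
  const params = new URLSearchParams({ token, url: targetUrl, ...options });
  return `${CRAWLING_API_ENDPOINT}?${params.toString()}`;
}

async function crawl(token, targetUrl, options = {}) {
  const response = await fetch(buildCrawlUrl(token, targetUrl, options));
  // Treat any non-2xx HTTP status as a failed crawl.
  if (!response.ok) {
    throw new Error(`Crawling API returned HTTP ${response.status}`);
  }
  return response.text();
}

// Only runs when a token is configured, e.g. CRAWLBASE_TOKEN=... node crawl.js
if (process.env.CRAWLBASE_TOKEN) {
  crawl(process.env.CRAWLBASE_TOKEN, 'https://www.example.com/')
    .then((html) => console.log(html.slice(0, 200)))
    .catch((err) => console.error(err.message));
}
```

Seeing the raw request makes it clearer what the library does for you: it builds this URL, sends the request, and hands back the response.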

You can also use any of the available data scrapers from Crawlbase so you can get back the scraped content of the page:

```javascript
const { CrawlingAPI } = require('crawlbase');
```
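Scraper output comes back as JSON rather than raw HTML. Assuming the response envelope described in the Crawling API documentation (fields `original_status`, `pc_status`, `url`, and `body`, where `pc_status` is Crawlbase's own status and `original_status` is the target site's), a small reader might look like the sketch below. The function name readCrawlResult is hypothetical, and the field names should be checked against the current docs before you rely on them.

```javascript
// Sketch: read a JSON-format Crawling API response, e.g. one requested with a data scraper.
// Assumed envelope: { original_status, pc_status, url, body }.
function readCrawlResult(jsonText) {
  const result = JSON.parse(jsonText);
  // Number() accepts the status whether it arrives as a number or a string.
  if (Number(result.pc_status) !== 200) {
    throw new Error(
      `Crawl of ${result.url} failed (pc_status ${result.pc_status}, original_status ${result.original_status})`
    );
  }
  // Assumed: with a scraper, `body` holds the parsed data instead of HTML.
  return result.body;
}
```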

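The code is complete. Run it from the terminal with `node` followed by your file name (or press F5 if your editor, such as Visual Studio Code, is set up to run Node.js).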

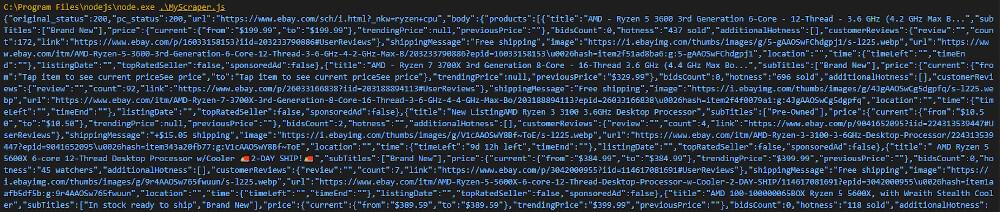

This simple code crawls any URL through the Crawling API, which is built on a large pool of proxies, and returns the results in JSON format. However, this guide would not be complete without showing you how to extract specific pieces of information with other packages available for Node.js.

Build a Web Scraper With Cheerio

So, let us build another version of the scraper, this time integrating Cheerio, a fast HTML parsing library for Node that is widely used for web scraping. Because Cheerio implements a subset of core jQuery, we can select specific elements from a page with familiar jQuery-style syntax.

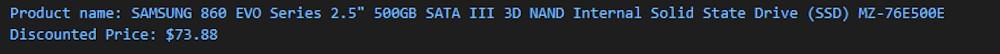

In this example, we will try to get the product name and current price of a product on Newegg.

Let us start by installing the Cheerio package:

```shell
npm i cheerio
```

At this point, you may choose to overwrite your previous code or create a new .js file and declare the constants again.

```javascript
const { CrawlingAPI } = require('crawlbase');
const cheerio = require('cheerio');
```

Pass your target URL to the API by making a GET request again, and use an if/else statement to check whether the request succeeded before parsing the response.

```javascript
api
```

Lastly, create a function that uses Cheerio to parse the HTML and select the product name and price with their CSS selectors.

```javascript
function parseHtml(html) {
```
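Whatever selectors you end up using, Cheerio's `.text()` returns raw strings, often with stray whitespace and currency formatting. A small pair of hypothetical helpers (not part of Cheerio or Crawlbase) can normalize them before you store the data. The price parser assumes US-style formatting such as `$1,299.99` and a selector that isolates a single price.

```javascript
// Collapse runs of whitespace (including newlines from nested tags) into single spaces.
function cleanText(rawText) {
  return rawText.replace(/\s+/g, ' ').trim();
}

// Turn text like " $1,299.99 " into 1299.99; returns null when no number is found.
// Assumes commas are thousands separators and a dot marks the decimals.
function parsePrice(rawText) {
  const digits = rawText.replace(/[^0-9.]/g, '');
  const value = Number.parseFloat(digits);
  return Number.isNaN(value) ? null : value;
}
```

Inside a parsing function, you might call them as `parsePrice($(priceSelector).first().text())`, where priceSelector is whatever selector matches the price on your target page.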

The complete scraper should now look like this:

```javascript
const { CrawlingAPI } = require('crawlbase');
```

Execute the code with `node` to see the scraped product name and price.

Tips and Tricks for Efficient Web Scraping with Node.js

When you build a web scraper with Node.js, the following tips will make your scraping process smoother and more efficient:

- Review Website Terms: Before scraping data with Node.js, take a moment to review the website’s terms of use. Make sure scraping isn’t prohibited, and be mindful of any restrictions on request frequency.

- Manage HTTP Requests: To avoid overwhelming the website, limit the rate of your HTTP requests. Spacing requests out prevents overloading the server and reduces the chance of running into problems such as blocks while scraping.

- Set Headers Appropriately: Mimic regular user behavior by setting appropriate headers, such as User-Agent, in your HTTP requests. This helps you blend in and reduces the chances of being detected as a bot.

- Implement Caching: Cache the pages you have already downloaded and extract data from the cached copies instead of requesting them again. This lightens the load on the website and speeds up your scraping process.

- Handle Errors: Web scraping can be tricky because websites vary so much. Expect errors such as timeouts or unexpected page structures, and handle them gracefully, for example by retrying failed requests.

- Monitor and Adjust: Keep a close eye on your scraping activity. Monitor performance metrics and adjust your scraping settings, such as rate limiting and headers, as needed to ensure smooth operation.
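Several of these tips (rate limiting, headers, caching, and error handling) can live in one small wrapper around your HTTP client. The sketch below is hypothetical: createPoliteFetcher and its option names are our own, it defaults to Node 18+'s built-in fetch, and the delay and retry values are illustrative rather than recommendations for any particular site.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Wraps an HTTP client with rate limiting, custom headers, an in-memory cache
// of successful responses, and retries with exponential backoff.
function createPoliteFetcher({
  fetchFn = globalThis.fetch, // Node 18+ global fetch by default
  headers = {},               // e.g. { 'User-Agent': '...' }
  minDelayMs = 1000,          // minimum gap between request starts
  maxRetries = 3,             // extra attempts after the first failure
  baseBackoffMs = 500,        // backoff doubles on each retry
} = {}) {
  const cache = new Map(); // url -> response body text
  let nextSlot = 0;        // earliest time the next request may start

  // Reserve the next start slot synchronously, then wait until it arrives.
  async function waitForSlot() {
    const now = Date.now();
    const startAt = Math.max(now, nextSlot);
    nextSlot = startAt + minDelayMs;
    await sleep(startAt - now);
  }

  return async function politeGet(url) {
    if (cache.has(url)) return cache.get(url);

    for (let attempt = 0; ; attempt++) {
      await waitForSlot();
      try {
        const response = await fetchFn(url, { headers });
        // Treat non-2xx statuses as failures so they are retried too.
        if (!response.ok) throw new Error(`HTTP ${response.status} for ${url}`);
        const body = await response.text();
        cache.set(url, body);
        return body;
      } catch (err) {
        if (attempt >= maxRetries) throw err;
        await sleep(baseBackoffMs * 2 ** attempt);
      }
    }
  };
}
```

Usage might look like `const get = createPoliteFetcher({ headers: { 'User-Agent': 'my-scraper/1.0' } });` followed by `await get(url)` for each page. Because slots are reserved before waiting, even concurrent calls stay at least minDelayMs apart.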

With these small, tried-and-tested tips, scraping data with Node.js should be a smooth process!

Conclusion

Hopefully, this article made it clear that Node.js is a great fit for web scraping. We showed you how to build a web scraper with Node.js, and even this simple scraper shows how little code it takes to make an HTTP request and process the result. Because Node.js handles I/O without blocking, it can run many crawls concurrently, which saves you precious time when scraping for content. The runtime is lightweight and runs comfortably on most modern machines. It also suits projects of any size, from a single scraping task like ours to the huge projects and infrastructures that enterprises run.

Cheerio is just one of the thousands of packages and libraries available for Node, so you will almost always find the right tool for your project. You can use the example here to build a simple web scraper and collect the content you need from the websites you target. The Node ecosystem gives you freedom and nearly boundless possibilities; perhaps the only limits are your creativity and willingness to learn.

Lastly, if you want an effective and efficient web scraper, it is best to use proxies to avoid blocks, CAPTCHAs, and any connection issues you may encounter when crawling different websites. Using the crawling tools and scraping tools from Crawlbase will save you countless hours finding solutions to bypass blocked requests so you can focus on your main goal. With the help of Crawlbase’s artificial intelligence, you are assured that each of your requests sent to the API will provide the best data outcome possible.