Large Language Models (LLMs) like Claude and ChatGPT are great at generating text, answering questions, and simulating intelligent behavior. But when it comes to real-time data from the web, they fall short, leaving developers stuck with:

- Manually pasting crawled/scraped results into prompts

- Hallucinations from missing or outdated context

- Code editors suggesting fixes without runtime awareness

- Agents that break the moment your data updates

Why? Because LLMs are fundamentally disconnected from the live web.

The Crawlbase Web MCP Server is the missing link between artificial intelligence and real-world data. It empowers your AI tools and autonomous agents to securely and reliably fetch, parse, and act on live web information.

What you’ll learn in this AI web scraping guide

- How to integrate the Crawlbase Web MCP Server with tools like Claude Desktop, Cursor, and Windsurf.

The LLM Bottleneck: Why AI Agents Struggle with Live Web Data

At the heart of every LLM, from Claude to ChatGPT, is a massive static training dataset. That means while these models can reason, respond, and predict, they can’t observe. They don’t have live access to the changing world around them.

That’s because:

- LLMs are not browsers

- They operate in secure, sandboxed environments that restrict outbound web access.

- Their knowledge is frozen in time, and updates occur only during occasional retraining.

Why Model Context Protocol (MCP) Matters

To address this disconnect, you can use the Model Context Protocol (MCP), a standardized way for AI models and external tools to communicate.

Think of it like USB for AI.

Just as USB made it easy to plug any device into any computer, MCP makes it easy for AI agents to integrate with any tool or data source, including live web sources.

MCP defines a consistent interface for LLMs to request and retrieve context from external systems, and that’s where the Crawlbase Web MCP Server comes in.

How MCP Unlocks Real-Time Web Access

By speaking MCP, the Crawlbase Web MCP Server becomes a plug-and-play bridge between AI models and live web content. AI tools like Claude Desktop, Cursor, and Windsurf can now:

- Request URLs or search queries

- Get real-time, structured web data in return

- Inject that data back into the model’s context window for reasoning and response
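To make those three steps concrete, here is a minimal Python sketch of the round trip. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method; everything else here is an assumption for illustration: the `url` argument name, the sample result, and the helper names `build_tool_call`, `extract_text`, and `inject_into_prompt` are hypothetical and not taken from the Crawlbase documentation. In practice, your MCP client (Claude Desktop, Cursor, Windsurf) does all of this for you.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Step 1: build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def extract_text(result):
    """Step 2: join the text parts of a tool result's `content` list."""
    return "\n".join(
        part["text"]
        for part in result.get("content", [])
        if part.get("type") == "text"
    )


def inject_into_prompt(question, page_text):
    """Step 3: place the fetched content in front of the user's question."""
    return (
        "Use the following live web content to answer.\n\n"
        f"{page_text}\n\n"
        f"Question: {question}"
    )


# Assumption: the tool accepts a single `url` argument.
request = build_tool_call(1, "crawl_markdown", {"url": "https://example.com"})

# Hypothetical tool result, hard-coded so the sketch runs offline.
sample_result = {
    "content": [{"type": "text", "text": "# Example Domain"}],
    "isError": False,
}

prompt = inject_into_prompt("What is this page about?", extract_text(sample_result))
print(json.dumps(request, indent=2))
print(prompt)
```

The point of the sketch is the shape of the exchange: a small structured request goes out, structured content comes back, and only then does the model reason over it.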

What is the Crawlbase Web MCP Server?

The Crawlbase Web Model Context Protocol (MCP) Server is the connective tissue between LLM agents and the real-time web.

Built on top of Crawlbase’s proven scraping infrastructure (used by over 70,000 developers), it enables AI tools like Claude Desktop, Cursor, and Windsurf to tap directly into fresh, structured web data without running into blocks or rate limits, which helps reduce hallucinations caused by missing or outdated context. It plugs directly into tools that support the Model Context Protocol (MCP) and handles:

- Real-time web scraping

- JavaScript rendering

- Proxy rotation and anti-bot evasion

- Structured output for seamless LLM integration

How to Get Started with Crawlbase Web MCP Server

The Crawlbase Web MCP Server serves as your launchpad for real-time intelligence, enabling the development of AI agents, streamlining research, and enhancing productivity. Here’s how to get started:

Step 1: Get your Crawlbase Tokens

First, create a Crawlbase account. Your first 1,000 requests are free, and you get an extra 9,000 when you add your credit card. After signing up, go to your account documentation and save a copy of your Crawling API Normal and JavaScript tokens.

Step 2: Integrate Crawlbase Web MCP Server for AI Web Scraping

Visit the Crawlbase repository page on GitHub, where you can find the code and documentation for the Crawlbase Web MCP Server.

Run Context Commands

Once the MCP server is installed, you can use these Crawlbase commands to fetch web content in different formats for use in LLM contexts.

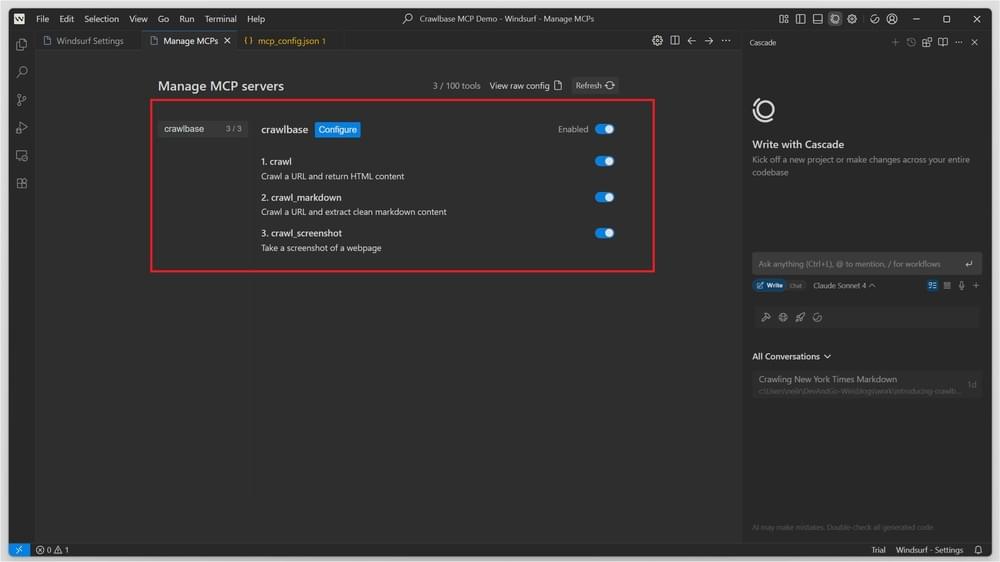

- crawl: Crawl a URL and return HTML

- crawl_markdown: Extract clean markdown from a URL

- crawl_screenshot: Take a screenshot of a webpage
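As a rough illustration of how the three commands relate, the Python sketch below maps a desired output format to the matching command and checks the URL before building the call parameters. The command names come from the list above; the `url` argument name, the format labels, and the `tool_params` helper are assumptions made for this example, not part of the Crawlbase API.

```python
from urllib.parse import urlparse

# Output format -> command name (command names as listed above).
TOOL_FOR_FORMAT = {
    "html": "crawl",
    "markdown": "crawl_markdown",
    "screenshot": "crawl_screenshot",
}


def tool_params(output_format, url):
    """Return hypothetical call parameters for the command matching a format."""
    if output_format not in TOOL_FOR_FORMAT:
        raise ValueError(
            f"unsupported format {output_format!r}; "
            f"expected one of {sorted(TOOL_FOR_FORMAT)}"
        )
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an absolute http(s) URL: {url!r}")
    # Assumption: each command takes the target page as a `url` argument.
    return {"name": TOOL_FOR_FORMAT[output_format], "arguments": {"url": url}}


print(tool_params("markdown", "https://example.com"))
```

When you work through Claude Desktop, Cursor, or Windsurf, the model picks the command for you based on your prompt, so a sketch like this is mainly useful for understanding what happens behind a request such as "return markdown".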

Crawlbase Web MCP Setup in Claude Desktop

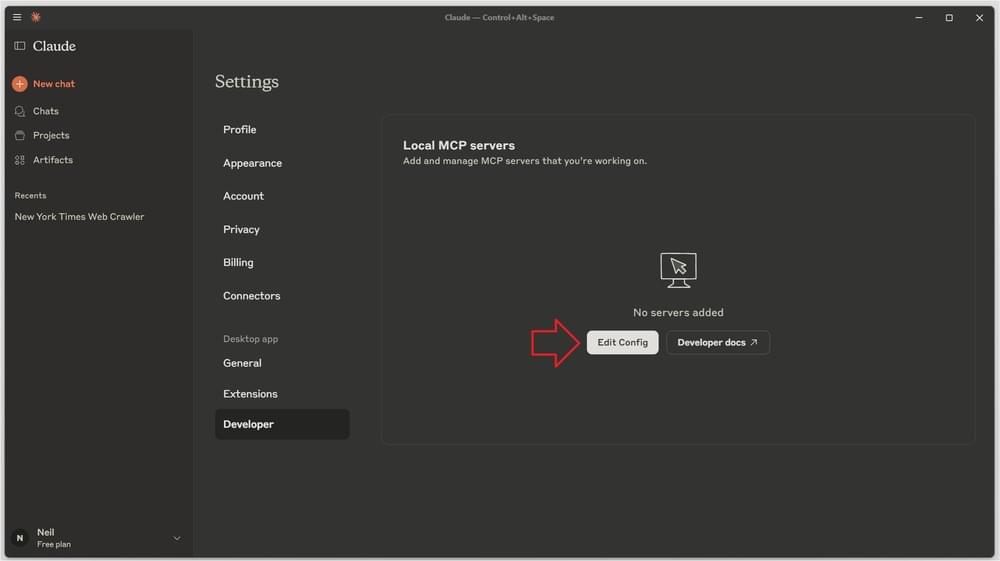

Step 1: Open Claude Desktop → File → Settings → Developer → Edit Config

Step 2: Copy the Crawlbase Web MCP configuration, then paste it into the claude_desktop_config.json file

1 | { |

Be sure to replace your_token_here and your_js_token_here with your actual Crawlbase tokens in the configuration file.
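A forgotten placeholder is an easy mistake to make, so a quick check before restarting can save a debugging round. The Python sketch below walks any parsed JSON config and reports where your_token_here or your_js_token_here still appears. It does not depend on a particular config layout; the sample config and its key names (`servers`, `demo`, `TOKEN_A`, `TOKEN_B`) are hypothetical and only there to make the example runnable.

```python
import json

# Placeholder strings that must be replaced with real Crawlbase tokens.
PLACEHOLDERS = ("your_token_here", "your_js_token_here")


def find_placeholders(node, path=""):
    """Return the paths of all string values that still contain a placeholder."""
    hits = []
    if isinstance(node, dict):
        for key, value in node.items():
            hits += find_placeholders(value, f"{path}/{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            hits += find_placeholders(value, f"{path}/{index}")
    elif isinstance(node, str) and any(p in node for p in PLACEHOLDERS):
        hits.append(path)
    return hits


# Hypothetical config used only for the demo; not the real Crawlbase layout.
sample_config = json.loads(
    '{"servers": {"demo": {"env": '
    '{"TOKEN_A": "your_token_here", "TOKEN_B": "abc123"}}}}'
)
leftovers = find_placeholders(sample_config)
print(leftovers or "No placeholders left")
```

To check a real file, load it with `json.load` and pass the result to `find_placeholders`; an empty list means both placeholders have been replaced.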

Step 3: Save the config file and restart Claude Desktop.

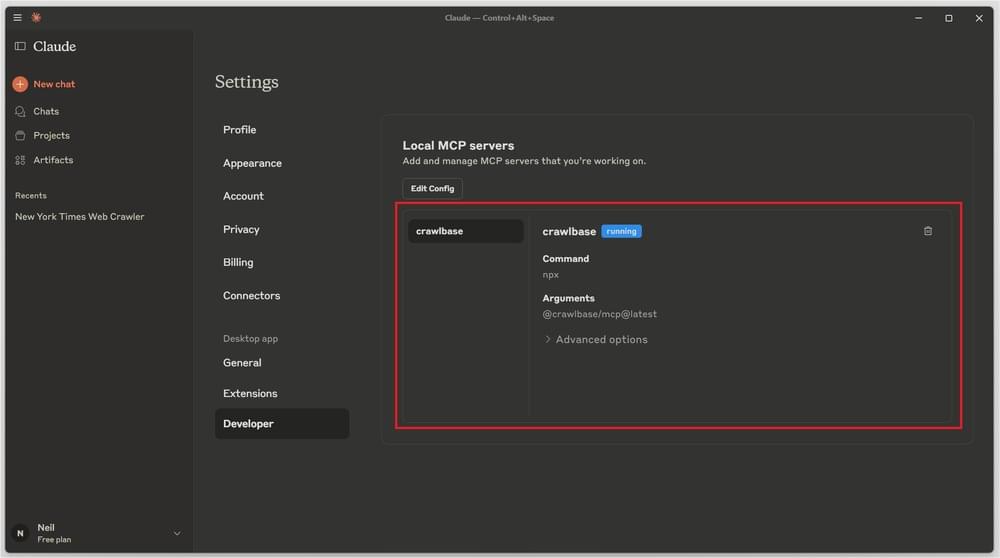

If you return to the settings, Crawlbase Web MCP will appear under Local MCP servers.

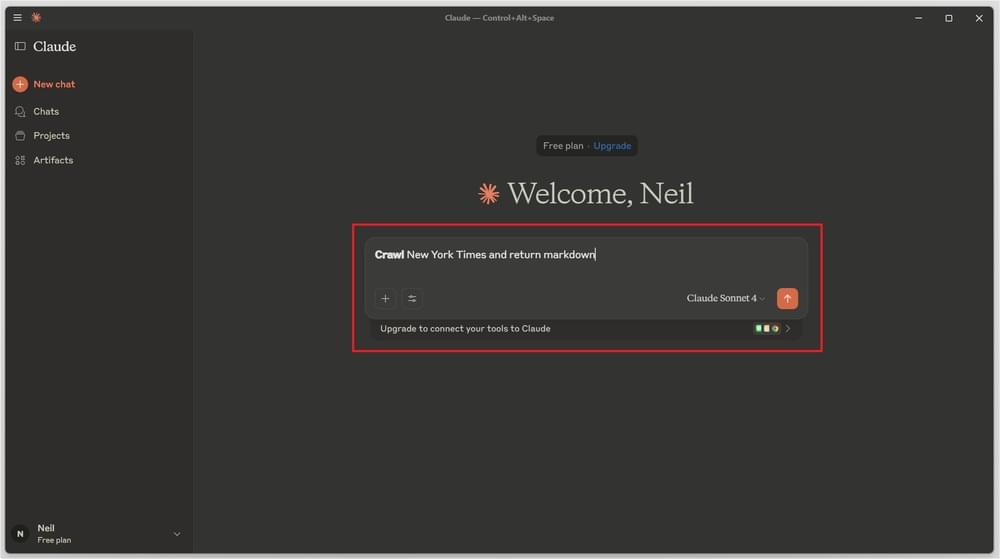

Step 4: Use the MCP

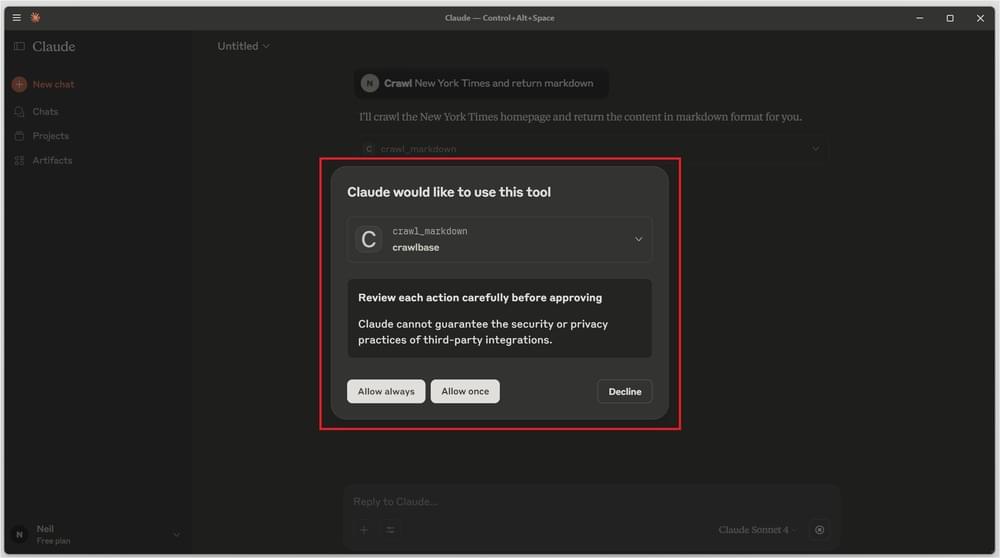

You’re now ready to use Crawlbase Web MCP. To begin, simply enter a prompt like:

“Crawl New York Times and return markdown”.

If a confirmation dialog for using Crawlbase Web MCP appears, be sure to grant permission when prompted.

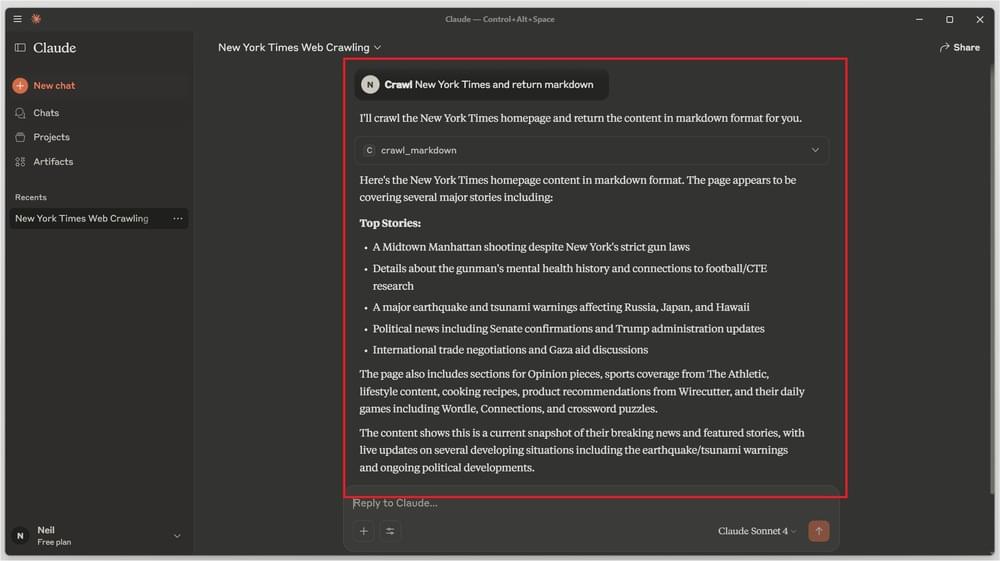

Claude replies with the output, formatted in markdown.

Crawlbase Web MCP Setup in Cursor IDE

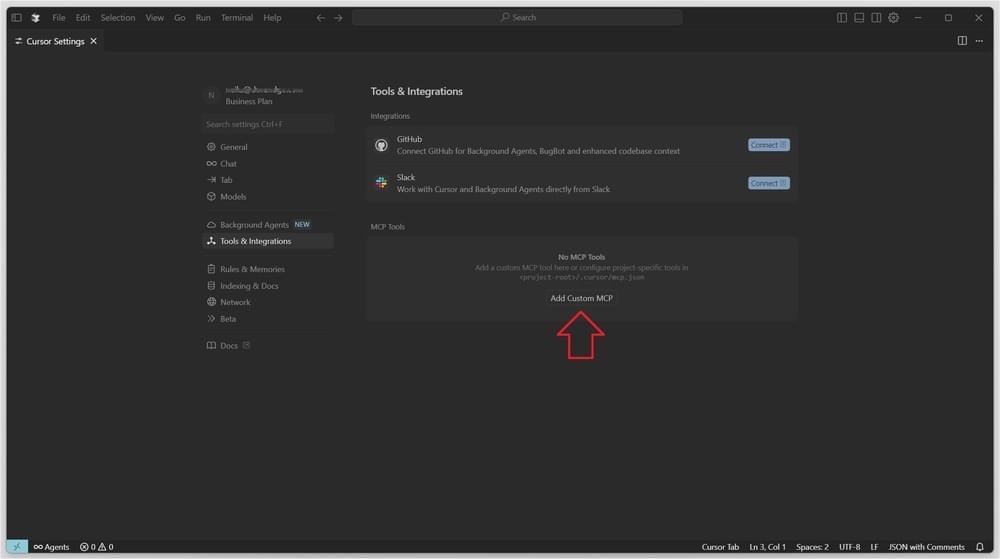

Step 1: Open Cursor IDE → File → Preferences → Cursor Settings → Tools and Integrations → Add Custom MCP

Step 2: Copy the Crawlbase Web MCP configuration, then paste it into the mcp.json file

1 | { |

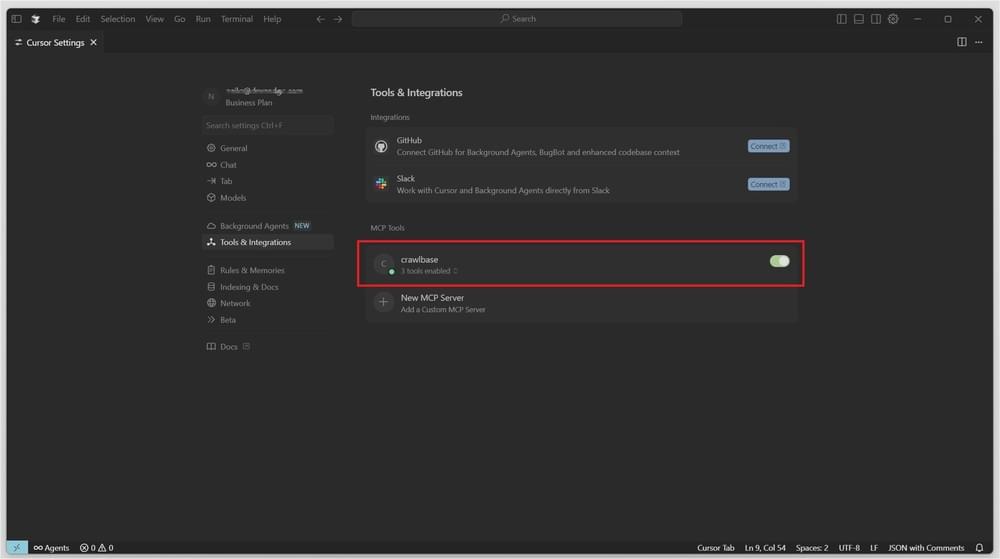

Step 3: Once the config file is saved, an indicator will confirm that the Crawlbase Web MCP is active.

Note: Restart Cursor if you don’t see this indicator after saving the file.

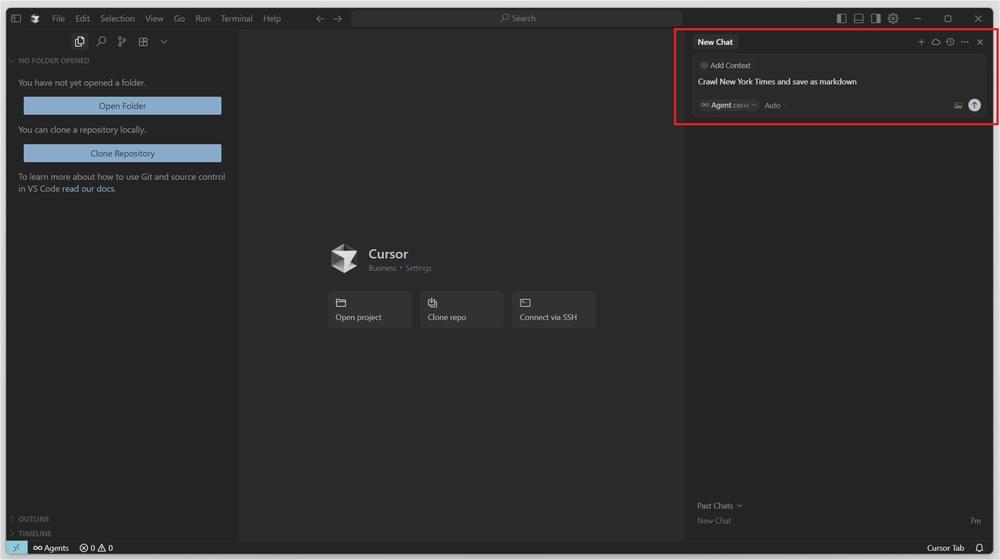

Step 4: Use the Chat window to send commands to the Crawlbase Web MCP.

You’re all set to start using Crawlbase Web MCP. Try entering something like:

“Crawl New York Times and save as markdown”

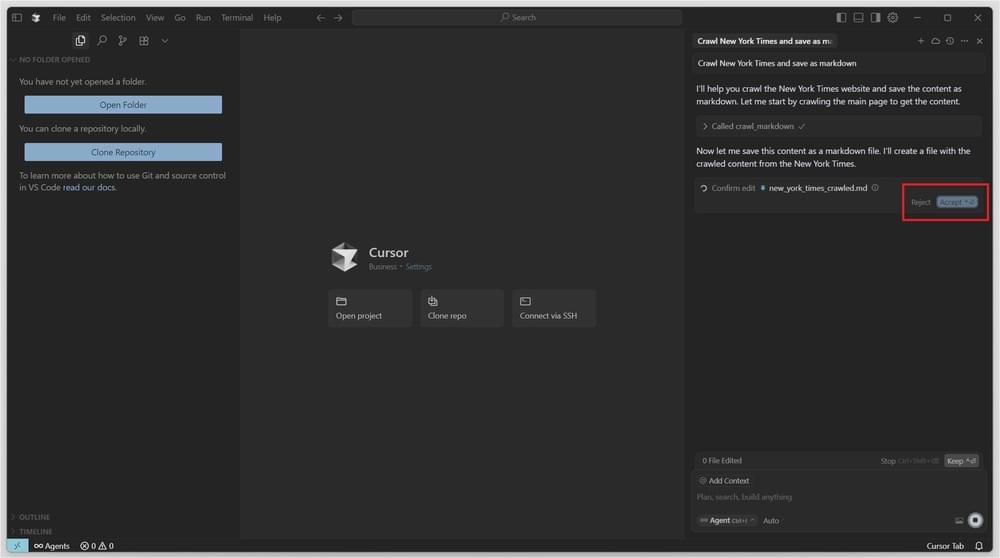

You might see a confirmation button—just click it to proceed.

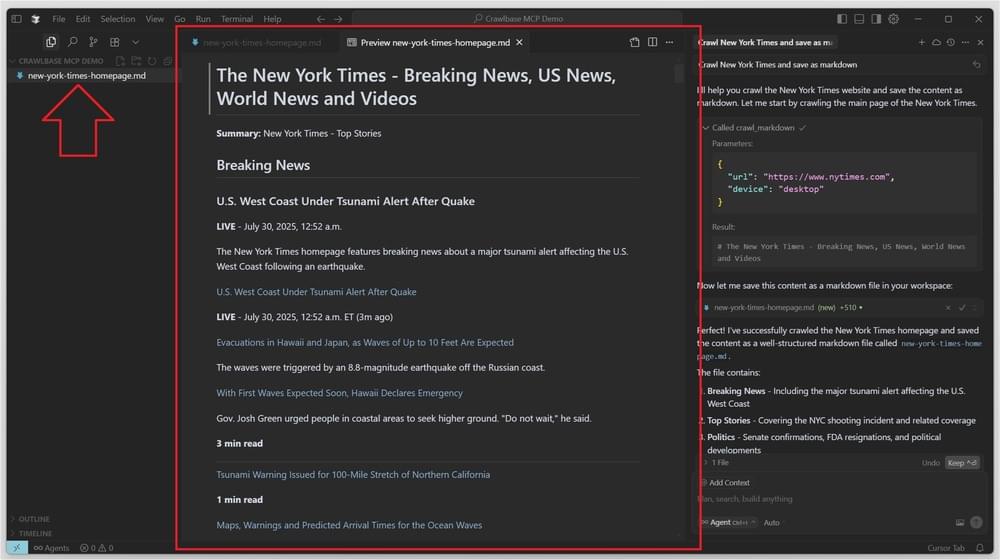

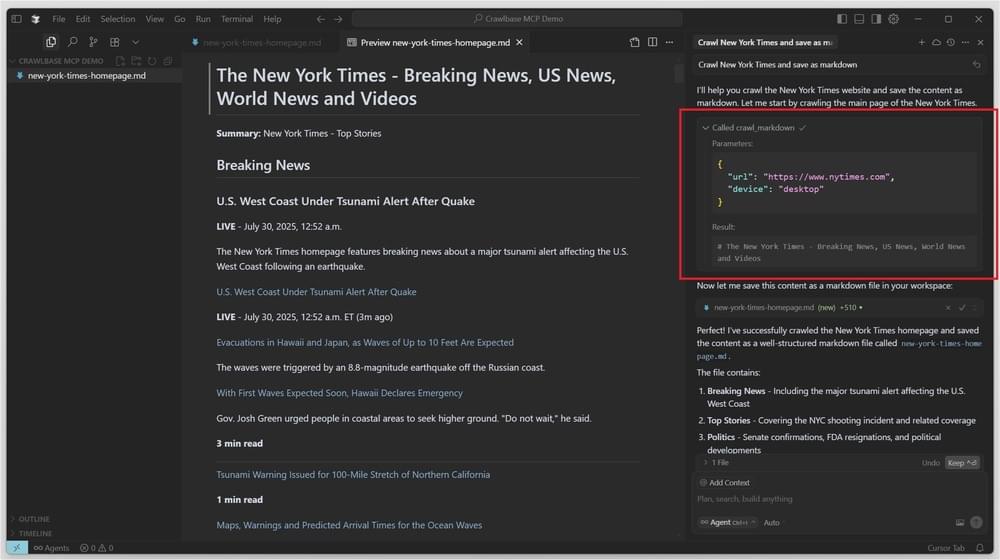

Below is the result generated from the prompt. As shown, Cursor created a markdown file and saved the output to it.

As you can see, Cursor delegates the live crawling task to the Crawlbase Web MCP server.

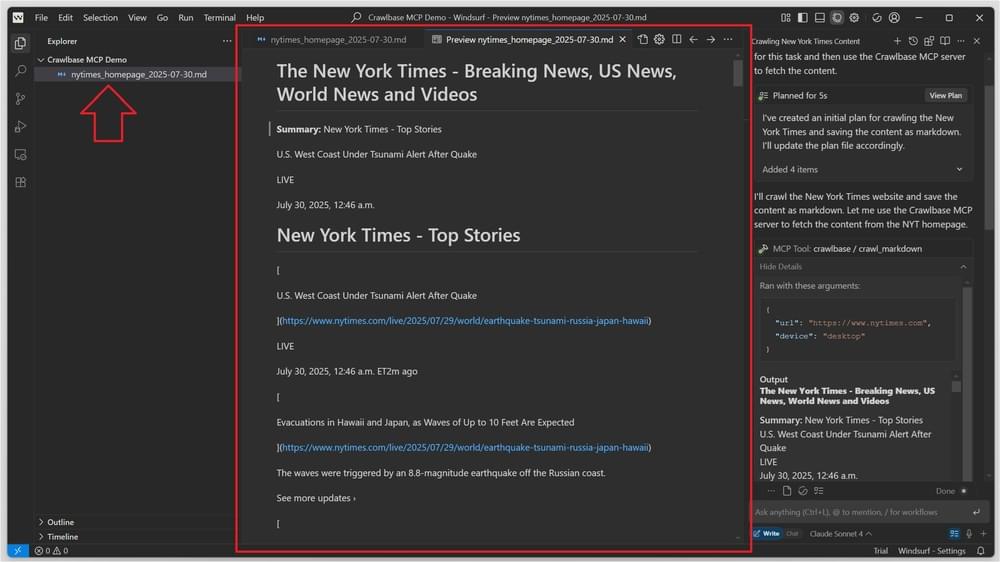

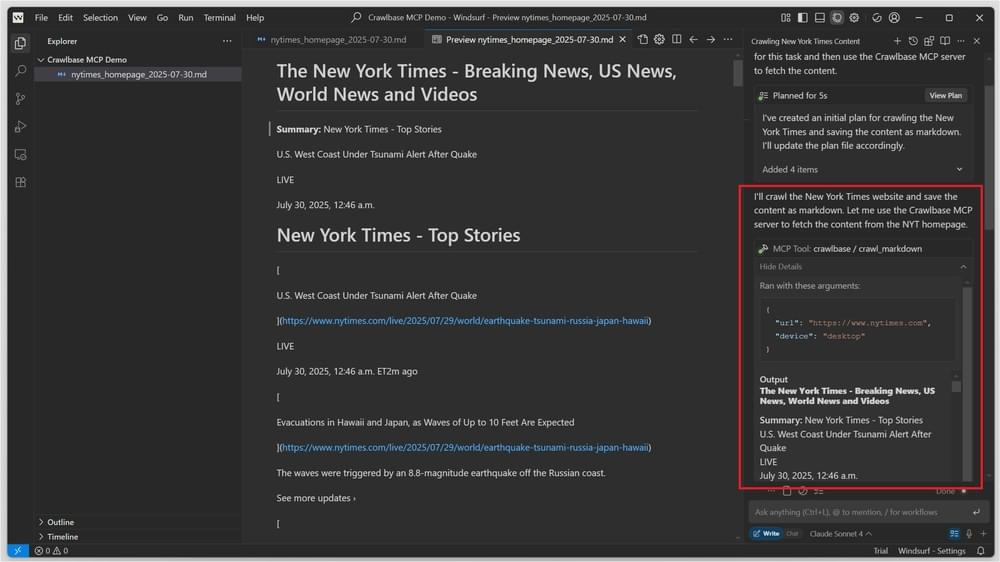

Crawlbase Web MCP Setup in Windsurf

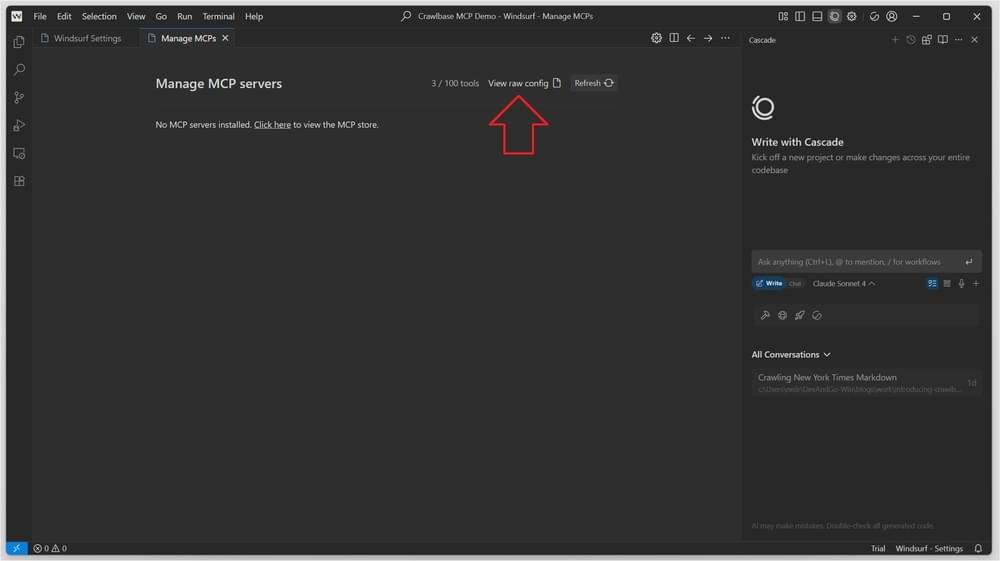

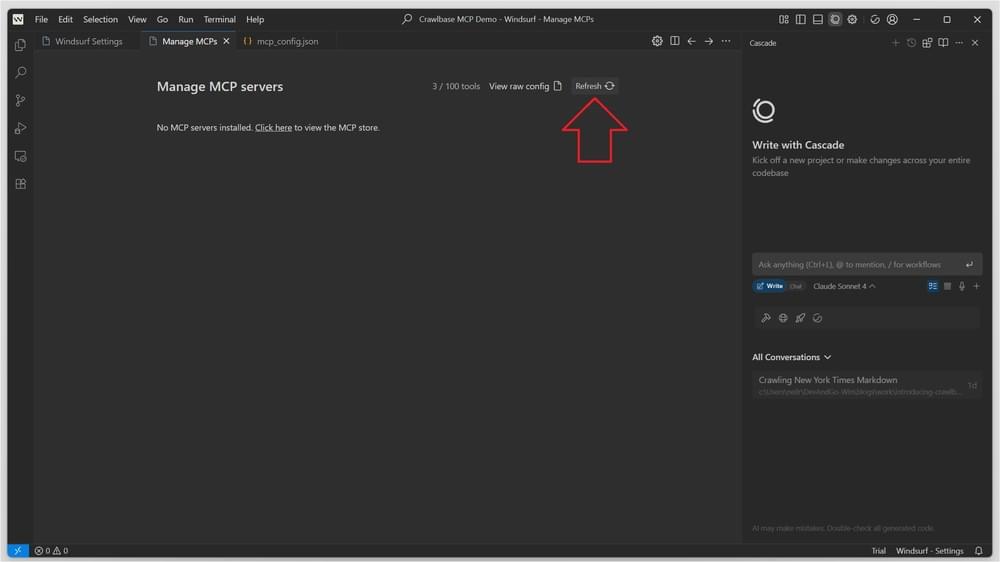

Step 1: Open Windsurf IDE → File → Preferences → Windsurf Settings → General → MCP Servers → Manage MCPs → View raw config

Step 2: Copy the Crawlbase Web MCP configuration, then paste it into the mcp_config.json file

1 | { |

Be sure to replace your_token_here and your_js_token_here with your actual Crawlbase tokens in the configuration file.

Step 3: Save the config file and hit Refresh

The Crawlbase Web MCP should appear in the list of MCP servers.

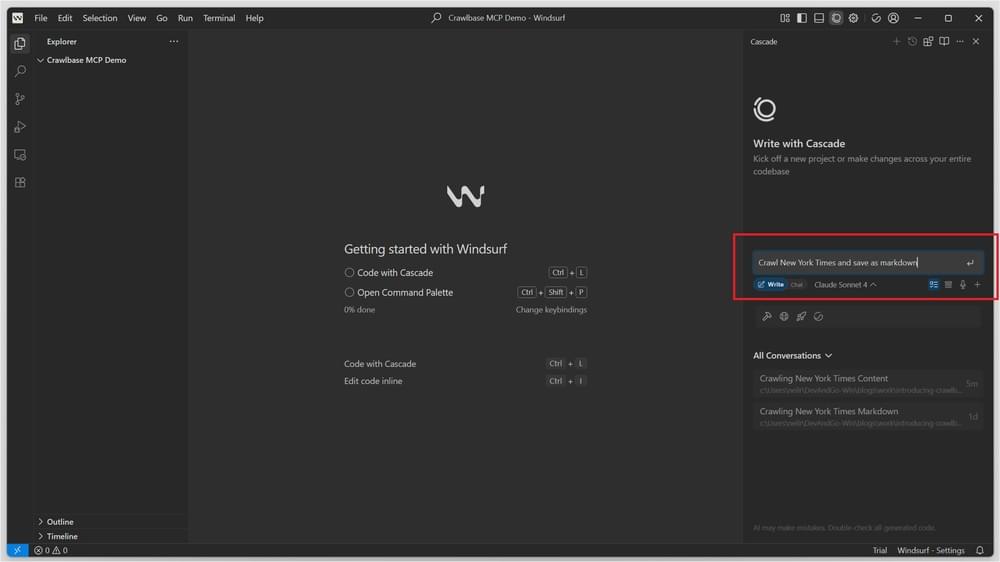

Step 4: Use the Chat window to send commands to the Crawlbase Web MCP.

Now that everything’s set up, we’ll use the same prompt as before:

“Crawl New York Times and save as markdown”

Here’s what the prompt produced—Windsurf generated a markdown file and saved the results.

As shown once more, Windsurf hands off live crawling to the Crawlbase Web MCP server.

That’s it. Your LLMs can now navigate and search the internet without getting blocked.

Don’t let your agents work blind. Give them the power to see, learn, and respond with live data. Sign up on Crawlbase today and start building AI that’s truly connected to the world.