In today’s data-driven world, extracting data from Facebook has become a key way to gain insights from social media. As one of the largest social networks, Facebook holds a vast repository of dynamic content such as posts, comments, and user profiles, and extracting the right information from it can support data-informed decisions. However, extracting this content efficiently and accurately is challenging, especially given Facebook’s frequent changes to its layout and API.

In this technical blog, we will unlock the secrets of dynamic content scraping from Facebook and show how to extract data using the Crawlbase Crawling API, a web scraping tool that lets developers crawl and scrape websites efficiently, including dynamic platforms like Facebook.

Understanding Dynamic Content Scraping

On many websites, including Facebook, important content is generated dynamically on the client side using JavaScript and AJAX calls. As a result, traditional web scraping methods, which fetch only the initial HTML without executing JavaScript, may fall short of capturing this dynamic content.

However, the Crawlbase Crawling API is equipped to handle dynamic content effectively. First, let’s look at the challenges of scraping dynamic content from Facebook and how the Crawling API overcomes them.

Challenges in Scraping Dynamic Content from Facebook

Extracting dynamic content from Facebook comes with a set of tricky issues, which stem from Facebook’s frequent updates and complex page structure. Traditional data-copying methods struggle with them, so capturing all the needed information accurately and completely calls for more creative solutions.

- Infinite Scrolling

Facebook’s user interface uses infinite scrolling, loading new content dynamically as users scroll down the page. This is a hurdle for conventional web scrapers: a single request returns only the posts loaded so far, not the ones that appear later. Extracting these continuously loading posts requires a mechanism that replicates the scrolling action and captures content as it loads.

- AJAX Requests

Various elements on Facebook, such as comments and reactions, are loaded via AJAX requests after the initial page load. Traditional scraping techniques often overlook these dynamically loaded components, resulting in incomplete data extraction. Addressing this challenge requires a scraping solution that can handle and capture data loaded via AJAX.

- Rate Limiting

Facebook enforces strict rate limits on data requests to prevent excessive scraping and protect the user experience. Breaching these limits can lead to temporary or permanent bans. Adhering to these limits while still efficiently gathering data requires a balance between scraping speed and compliance with Facebook’s regulations.

- Anti-Scraping Mechanisms

Facebook employs various anti-scraping measures to prevent data extraction. These measures include monitoring IP addresses, detecting unusual user behavior, and identifying scraping patterns. Overcoming these mechanisms requires techniques such as IP rotation and intelligent request timing to avoid being flagged as a scraper.

How the Crawlbase Crawling API Addresses These Challenges

The Crawlbase Crawling API serves as a comprehensive solution for effectively addressing the challenges associated with scraping dynamic content from platforms like Facebook. Its advanced capabilities are tailored to overcome the specific hurdles posed by client-side rendering, infinite scrolling, AJAX requests, rate limiting, and anti-scraping mechanisms.

- Handling Client-Side Rendering and Infinite Scrolling

The API handles dynamic content by mimicking user behavior. With the “scroll” parameter, you can instruct the API to simulate scrolling, emulating how users interact with the platform. With the “scroll_interval” parameter, you can fine-tune how long the API scrolls, so that it captures the content that loads dynamically during that time. This is particularly valuable for platforms like Facebook, where posts and updates keep loading as users scroll down.

```shell
# CURL Example
```
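For readers working in Node.js, the same kind of request can be expressed by building the Crawling API URL directly. This is a minimal sketch that only constructs the URL; it assumes the Crawling API's HTTP endpoint is `https://api.crawlbase.com/` with `token` and `url` query parameters, and the token and Facebook page URL are hypothetical placeholders.

```javascript
// Sketch: build a Crawling API request URL that asks the API to scroll the page.
// Assumes the endpoint https://api.crawlbase.com/ with `token` and `url` query parameters.
const CRAWLING_API_ENDPOINT = "https://api.crawlbase.com/";

function buildCrawlUrl(token, pageUrl, params = {}) {
  // URLSearchParams percent-encodes the target page URL for us.
  const query = new URLSearchParams({ token, url: pageUrl });
  for (const [key, value] of Object.entries(params)) {
    query.set(key, String(value));
  }
  return `${CRAWLING_API_ENDPOINT}?${query.toString()}`;
}

// Hypothetical placeholders: replace with your JavaScript token and target page.
const requestUrl = buildCrawlUrl("YOUR_JS_TOKEN", "https://www.facebook.com/example-page", {
  scroll: true,        // simulate scrolling before capturing the HTML
  scroll_interval: 20, // scroll for 20 seconds (the maximum is 60)
});

console.log(requestUrl);
```

Encoding the page URL matters here: it contains its own `:` and `/` characters, which would otherwise be confused with the API's query string.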

- Managing AJAX Requests

Many dynamic elements on platforms like Facebook, such as comments and reactions, are loaded via AJAX requests. Traditional scraping methods often miss these elements, leading to incomplete data extraction. The Crawlbase Crawling API, however, is designed to handle AJAX requests. When the “ajax_wait” parameter is set to true, the Crawling API captures the content only after the AJAX calls on the page are resolved, so the extracted HTML contains the information we are looking for.

```shell
# CURL Example
```
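A Node.js counterpart can be sketched with the built-in `fetch` (Node.js 18+). It assumes the API returns the rendered HTML as the response body and reports its own status in a `pc_status` response header, the same status field this article mentions for failed selectors. The network call only runs when a hypothetical `CRAWLBASE_JS_TOKEN` environment variable is set, and the page URL is a placeholder.

```javascript
// Sketch: request a page with ajax_wait=true and check the Crawling API status.
// Assumes the endpoint https://api.crawlbase.com/ and a `pc_status` response header.
function ajaxWaitUrl(token, pageUrl) {
  const query = new URLSearchParams({ token, url: pageUrl, ajax_wait: "true" });
  return `https://api.crawlbase.com/?${query}`;
}

// Treat the crawl as successful only if both HTTP status and pc_status are 200.
function isCrawlSuccessful(httpStatus, pcStatusHeader) {
  return httpStatus === 200 && Number(pcStatusHeader) === 200;
}

async function fetchRenderedHtml(token, pageUrl) {
  const response = await fetch(ajaxWaitUrl(token, pageUrl));
  const pcStatus = response.headers.get("pc_status");
  if (!isCrawlSuccessful(response.status, pcStatus)) {
    throw new Error(`Crawl failed: HTTP ${response.status}, pc_status ${pcStatus}`);
  }
  return response.text(); // HTML captured after the page's AJAX calls resolved
}

// Only hit the network when a token is provided (hypothetical env var name).
const token = process.env.CRAWLBASE_JS_TOKEN;
if (token) {
  fetchRenderedHtml(token, "https://www.facebook.com/example-page")
    .then((html) => console.log(`Received ${html.length} characters of HTML`))
    .catch((error) => console.error(error.message));
}
```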

- Intelligent Rate Limiting

Facebook enforces strict rate limits to prevent excessive scraping, and violating these limits can lead to temporary or permanent bans. The Crawlbase Crawling API incorporates rate-limiting mechanisms that help users navigate these restrictions. By adjusting the request rate dynamically to stay within Facebook’s limits, the API reduces the risk of triggering rate-limiting measures. This helps keep data extraction running and supports a more responsible scraping process.
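The API's throttling happens on the Crawlbase side, but when a script sends many requests it can still help to pace them on the client. The sketch below is a generic client-side technique, not a Crawlbase feature: it runs async tasks one at a time and starts each at least `minGapMs` after the previous one. The task functions stand in for Crawling API calls, and the gap value is an assumption you would tune.

```javascript
// Generic client-side pacing (not a Crawlbase feature): run async tasks
// sequentially, starting each one at least `minGapMs` after the previous start.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function runPaced(tasks, minGapMs) {
  const results = [];
  let lastStart = -Infinity;
  for (const task of tasks) {
    const wait = lastStart + minGapMs - Date.now();
    if (wait > 0) await sleep(wait);
    lastStart = Date.now();
    results.push(await task()); // tasks run one at a time, in order
  }
  return results;
}

// Example: three dummy tasks standing in for Crawling API requests.
const demoTasks = [1, 2, 3].map((n) => async () => n * 10);
runPaced(demoTasks, 50).then((results) => console.log(results)); // [ 10, 20, 30 ]
```

Running tasks sequentially keeps the logic simple. A script that needs more throughput could allow a small fixed number of concurrent requests instead.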

- Overcoming Anti-Scraping Mechanisms

Facebook employs various anti-scraping mechanisms to deter unauthorized data extraction, including identifying and blocking suspicious IP addresses and user agents. The Crawlbase Crawling API mitigates this challenge with IP rotation: scraping requests originate from a pool of different IP addresses, which reduces the likelihood of detection and blocking. By managing IP addresses this way, the API lets scraping proceed with fewer disruptions from anti-scraping measures.

Configuring Crawlbase Crawling API for Facebook Data Extraction

Let’s dive into a step-by-step guide on configuring the Crawlbase Crawling API for Facebook data extraction using Node.js.

Step 1: Install the Crawlbase Node.js Library

First, ensure you have Node.js installed on your machine. Then, install the Crawlbase Node.js library using npm:

```shell
npm install crawlbase
```

Step 2: Obtain a Crawlbase JavaScript Token

To use the Crawlbase Crawling API, you’ll need an API token. You can obtain one by signing up for a Crawlbase account and navigating to the documentation section.

Crawlbase offers two types of tokens: a normal (TCP) token and a JavaScript (JS) token. Use the normal token for websites whose content does not depend on JavaScript, such as static websites. Use the JavaScript token when a site only works in a JavaScript-enabled browser, or when the content you need is generated by JavaScript on the client side. Facebook falls into this second category, so you need the JavaScript token.
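One way to keep both tokens at hand is to read them from environment variables and pick one per target. This is a hypothetical helper: the variable names `CRAWLBASE_TOKEN` and `CRAWLBASE_JS_TOKEN` are placeholders, not Crawlbase conventions. Only the selection rule itself comes from the paragraph above: use the JavaScript token for pages that render their content client-side.

```javascript
// Hypothetical helper: choose between the normal (TCP) and JavaScript (JS) tokens.
// The environment variable names are placeholders, not Crawlbase conventions.
function pickToken({ needsJavaScript }, env = process.env) {
  const name = needsJavaScript ? "CRAWLBASE_JS_TOKEN" : "CRAWLBASE_TOKEN";
  const token = env[name];
  if (!token) {
    const kind = needsJavaScript ? "JavaScript" : "normal";
    throw new Error(`Set ${name} to your Crawlbase ${kind} token`);
  }
  return token;
}

// Facebook renders its content with JavaScript, so it needs the JS token.
const fakeEnv = { CRAWLBASE_TOKEN: "tcp-token", CRAWLBASE_JS_TOKEN: "js-token" };
console.log(pickToken({ needsJavaScript: true }, fakeEnv)); // js-token
```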

Step 3: Set Up the Crawlbase Crawling API

Now, let’s write a simple Node.js script to extract dynamic Facebook posts:

```javascript
// Import the Crawling API
```
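Here is a minimal sketch of such a script. It assumes the `crawlbase` package exports `CrawlingAPI` with a promise-returning `get(url, options)` method whose response exposes `statusCode` and `body`, and that option keys are forwarded to the Crawling API as query parameters. The Facebook page URL and the `CRAWLBASE_JS_TOKEN` environment variable are hypothetical placeholders.

```javascript
// Sketch of Step 3: crawl a Facebook page with the JavaScript token and save the HTML.
// Assumes the `crawlbase` package's CrawlingAPI#get(url, options) promise interface.
const fs = require("fs");

// Hypothetical placeholder: substitute the Facebook page you want to crawl.
const facebookPageURL = "https://www.facebook.com/example-page";

// Crawling API options, explained one by one in the sections that follow.
const options = {
  format: "html",      // return the raw HTML
  ajax_wait: true,     // wait for AJAX calls to finish before capturing
  scroll: true,        // simulate scrolling to load more posts
  scroll_interval: 20, // scroll for 20 seconds (maximum 60)
};

function crawlToFile(token, outputPath = "output.html") {
  // Required lazily so the rest of the file loads even without the package installed.
  const { CrawlingAPI } = require("crawlbase");
  const api = new CrawlingAPI({ token });
  return api.get(facebookPageURL, options).then((response) => {
    if (response.statusCode === 200) {
      fs.writeFileSync(outputPath, response.body);
      console.log(`Saved ${outputPath}`);
    } else {
      console.error(`Request failed with status ${response.statusCode}`);
    }
  });
}

const token = process.env.CRAWLBASE_JS_TOKEN; // your JavaScript (JS) token
if (token) {
  crawlToFile(token).catch((error) => console.error(error));
}
```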

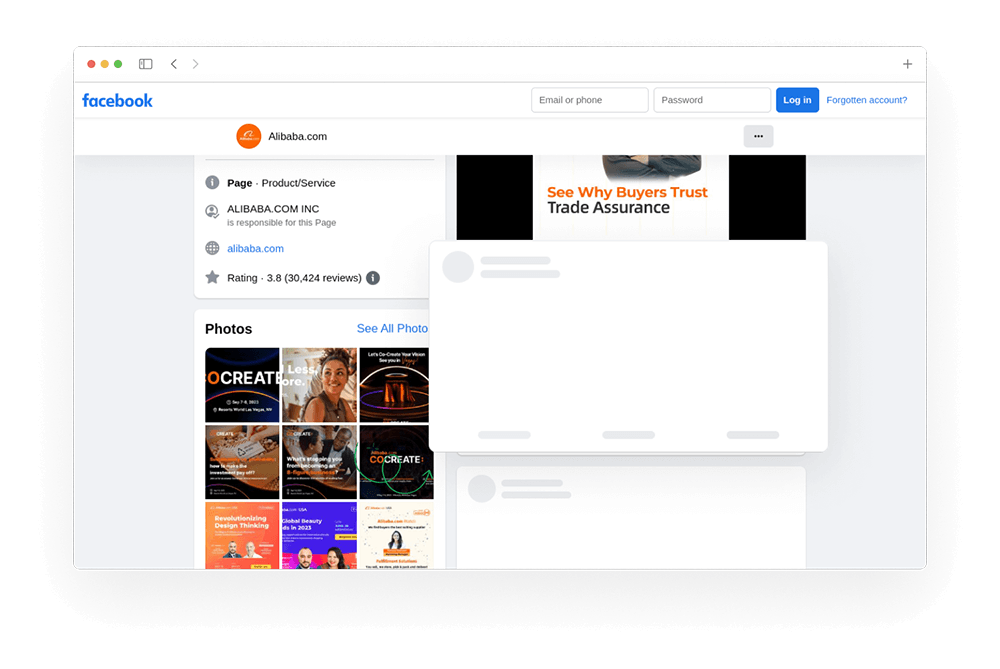

This code snippet uses Crawlbase’s Crawling API to crawl the HTML of Alibaba’s Facebook page and save it to an “output.html” file. It configures the API with the necessary parameters, sends the request with the specified options, and, if the response is successful, saves and logs the extracted content. The options deserve a closer look, since each of these parameters plays a significant role. Let’s discuss them one by one.

- format parameter

The “format” parameter specifies the response format you can expect: HTML or JSON. By default, the Crawling API returns responses in HTML. For more details, see the documentation for the Crawling API format parameter.

- ajax_wait parameter

Facebook pages load their content through AJAX calls, so if we capture the HTML before those calls complete, we get only the loader markup without any actual content. To avoid this, we must wait for the AJAX calls to complete by setting the “ajax_wait” parameter to true. This ensures the Crawling API captures the page after the content from the AJAX calls has been rendered.

- scroll parameter

When browsing Facebook, you’ve likely noticed that new posts appear as you scroll down. The Crawling API offers a “scroll” parameter to accommodate this behavior: it makes the API simulate page scrolling for a specified duration before retrieving the page’s HTML. By default, it scrolls for 10 seconds, and you can adjust this duration with the “scroll_interval” parameter. See the documentation for the Crawling API’s scroll parameter to learn more.

- scroll_interval parameter

This parameter sets how long, in seconds, the “scroll” option scrolls the page. The maximum is 60 seconds.
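Because the API caps `scroll_interval` at 60 seconds, a small helper can normalize the value before it is sent. This is a hypothetical convenience function. The 60-second cap and 10-second default come from the text above. Rounding to whole seconds and rejecting non-positive values are assumptions.

```javascript
// Hypothetical helper: normalize scroll_interval before sending it to the Crawling API.
const DEFAULT_SCROLL_INTERVAL = 10; // seconds, the API's default scroll duration
const MAX_SCROLL_INTERVAL = 60;     // seconds, the API's maximum

function scrollOptions(seconds = DEFAULT_SCROLL_INTERVAL) {
  const value = Math.round(Number(seconds));
  if (!Number.isFinite(value) || value <= 0) {
    throw new RangeError(`scroll_interval must be a positive number, got ${seconds}`);
  }
  // Clamp to the maximum instead of sending an out-of-range value.
  return { scroll: true, scroll_interval: Math.min(value, MAX_SCROLL_INTERVAL) };
}

console.log(scrollOptions(90)); // { scroll: true, scroll_interval: 60 }
```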

Beyond these options, the Crawling API supports many other parameters; see the Crawling API parameters documentation to explore them.

To run the script, you can use the following command.

```shell
node script.js
```

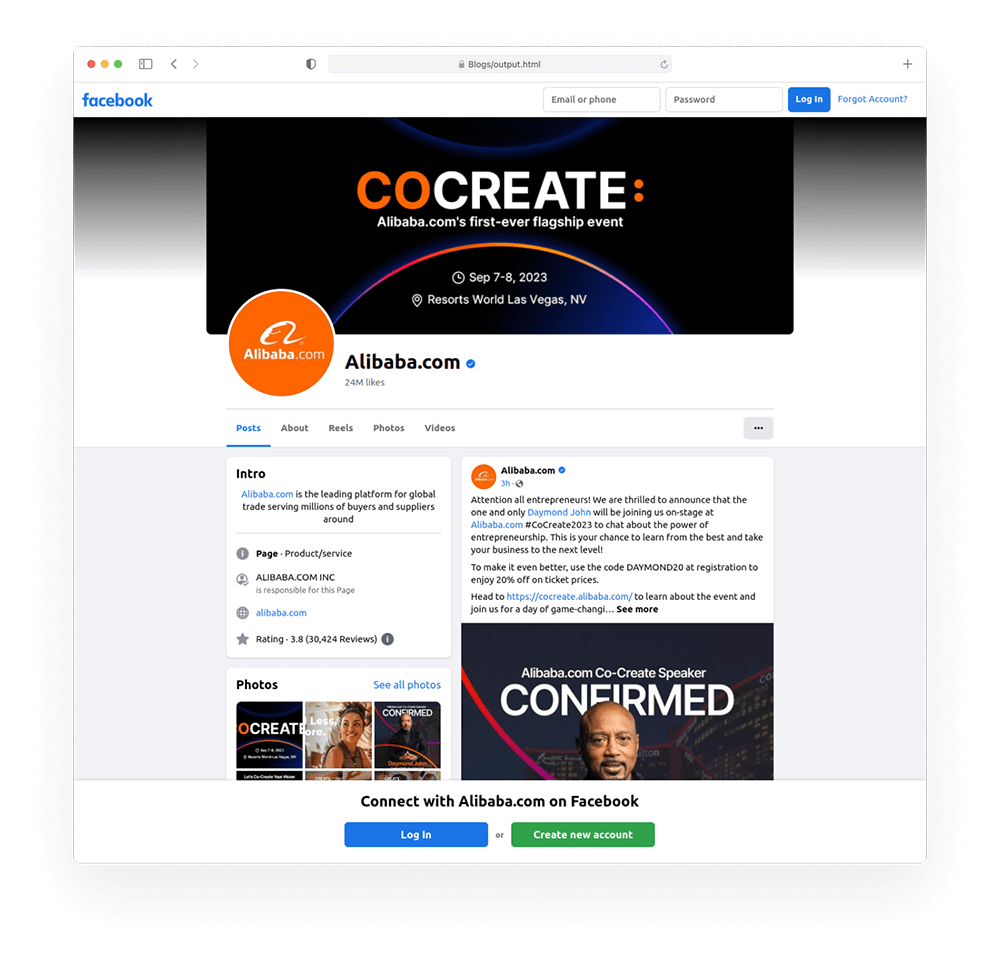

Output HTML file preview:

As you can see, the output HTML file contains the content we are looking for, including the Introduction section and the posts that are rendered dynamically on the Facebook page. Without these parameters, we would only get the HTML of the loader icons Facebook displays, without any meaningful content.

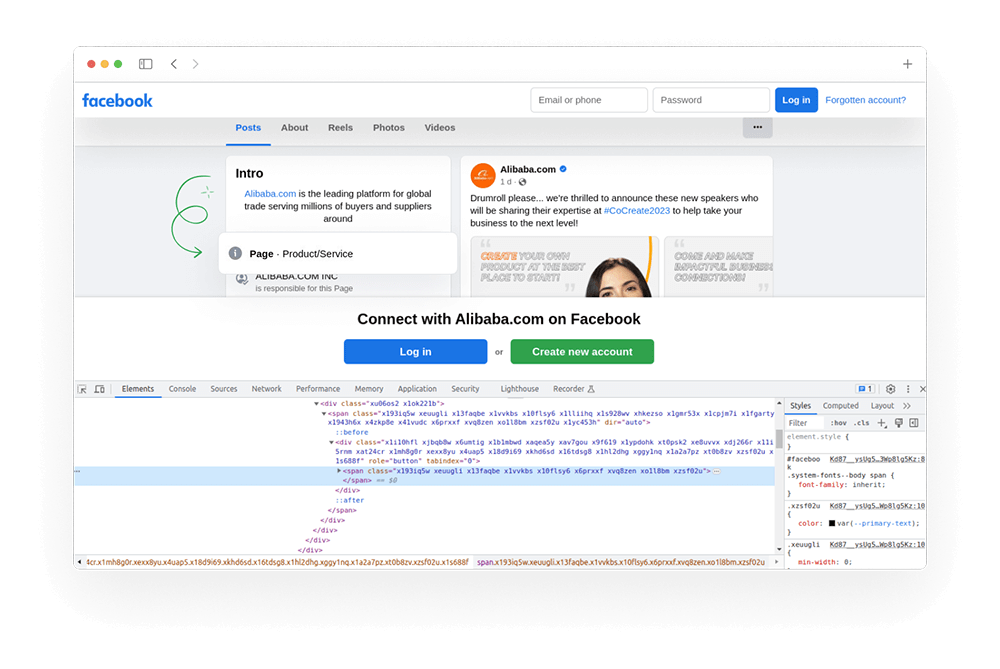

Clicking elements by CSS selector before capturing HTML

In some cases, you may also want to extract the HTML of an alert box or modal along with the main page HTML. If that element is generated by JavaScript when a specific element on the page is clicked, you can use the “css_click_selector” parameter. It requires a fully specified, valid CSS selector: for instance, an ID selector like “#some-button”, a class selector such as “.some-other-button”, or an attribute selector like “[data-tab-item="tab1"]”.

Note that the request will fail with “pc_status: 595” if the selector is not found on the page. If you still want a response when the selector is missing, append a selector that is always found, such as “body”. For example: “#some-button, body”.
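The fallback trick can be wrapped in a small helper. This is a hypothetical function, not part of the Crawlbase library. It appends `body` to a selector list so the request does not fail with `pc_status: 595` when the target element is missing, then returns the result as the `css_click_selector` option.

```javascript
// Hypothetical helper: build a css_click_selector option with a `body` fallback,
// so the request still succeeds if the clickable element is not on the page.
function clickSelectorOption(selector, { fallbackToBody = true } = {}) {
  const trimmed = String(selector).trim();
  if (!trimmed) throw new Error("css_click_selector needs a non-empty selector");
  // Selector lists are comma-separated; skip the fallback if body is already listed.
  const parts = trimmed.split(",").map((part) => part.trim());
  if (fallbackToBody && !parts.includes("body")) parts.push("body");
  return { css_click_selector: parts.join(", ") };
}

console.log(clickSelectorOption("#some-button")); // { css_click_selector: '#some-button, body' }
```

The naive comma split assumes the individual selectors contain no commas of their own, for example inside an attribute value.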

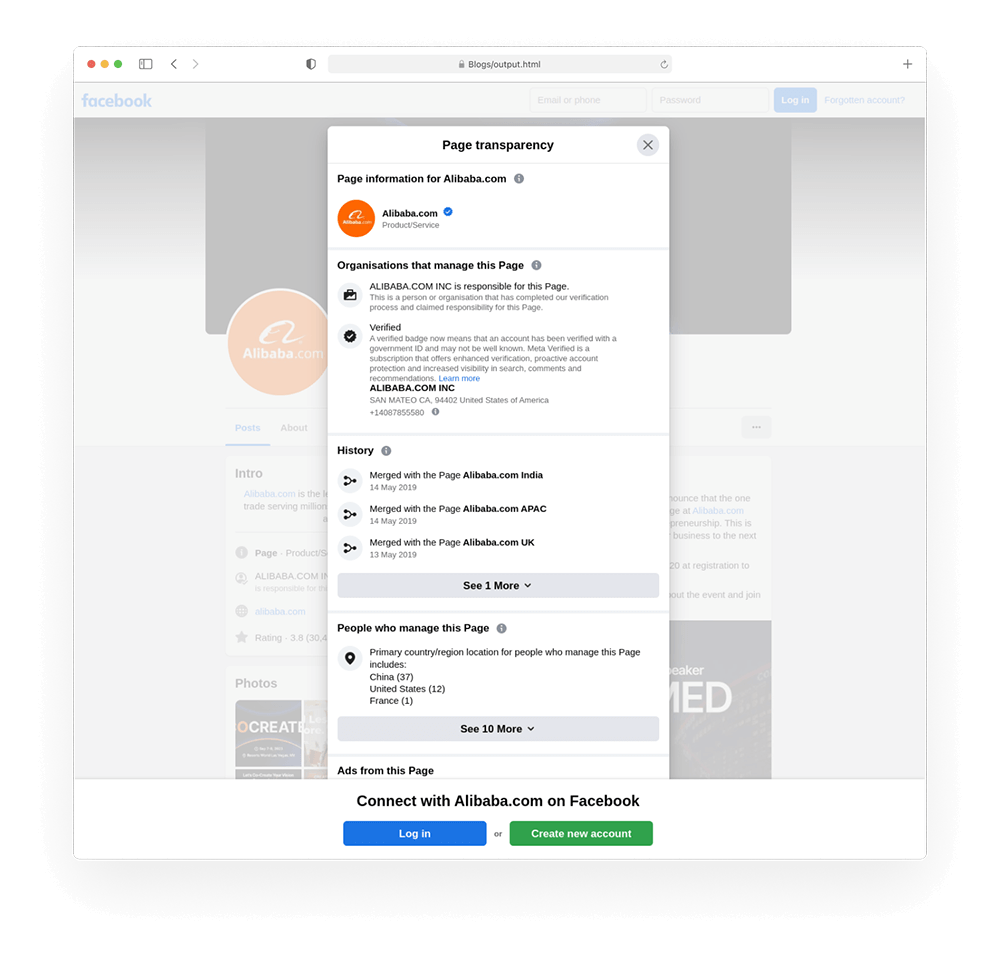

In the Alibaba Facebook page example above, to include the HTML of the Page Transparency Modal, we need to click the “Page · Product/Service” link.

We can get the CSS selector for this element by inspecting it. As the image above shows, several classes are associated with the element we want to click, so a single class is not enough to identify it. To build a unique selector, chain the element’s parent elements together with the element’s own tag, ID, class, or attribute. Test the selector in the browser’s DevTools console before using it.
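A quick way to test a candidate selector in the browser's DevTools console is to count its matches with `document.querySelectorAll`. A selector intended for `css_click_selector` should match exactly one element. The function below takes the root as a parameter, so it can also be exercised outside a browser. The selector in the comment is a hypothetical placeholder.

```javascript
// Check how many elements a CSS selector matches; paste into the DevTools console.
// In the browser, call e.g. checkSelector(".parent > a.some-link") to use `document`.
function checkSelector(selector, root = globalThis.document) {
  if (!root) throw new Error("No document available; pass a root element explicitly");
  const count = root.querySelectorAll(selector).length;
  if (count === 1) return "unique";
  return count === 0 ? "not found" : `ambiguous (${count} matches)`;
}
```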

```javascript
// Import the Crawling API
```

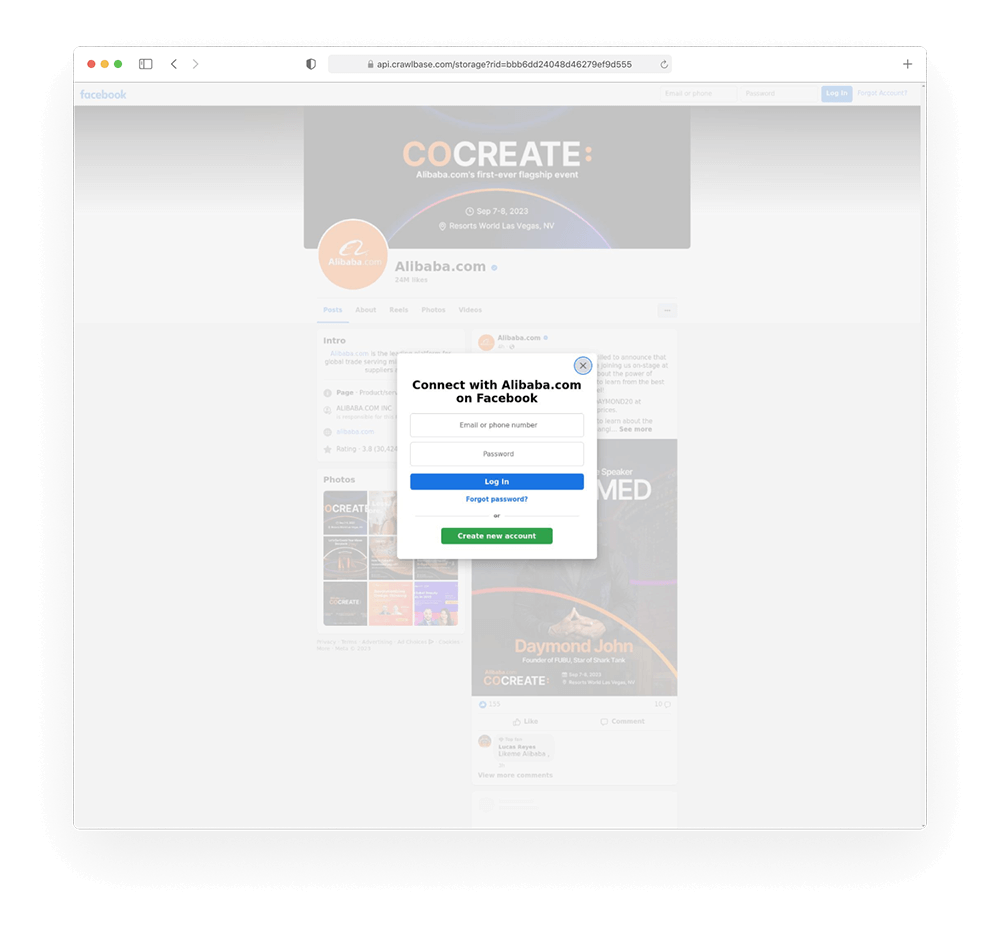

Output HTML file preview:

In the above output HTML file preview, we can clearly see that the Page Transparency Modal is present within the HTML.

Scraping the meaningful content from the HTML

In the previous sections, we only crawled the HTML of the Alibaba Facebook page. What if we don’t need the raw HTML and want to scrape the meaningful content from the page instead? The Crawlbase Crawling API also provides a built-in Facebook scraper, which we can enable with the Crawling API’s “scraper” parameter. With it, we get the page’s meaningful content in JSON format. Let’s look at the example below:

```javascript
// Import the Crawling API
```

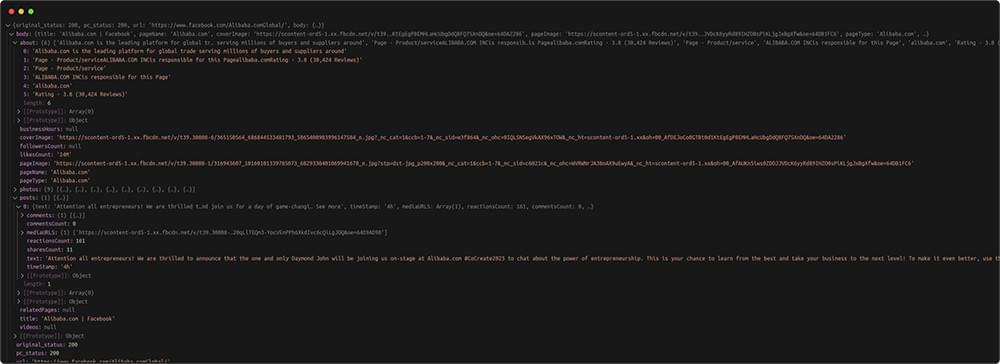

Output:

As we can see in the image above, the response body contains useful information such as the title, pageName, coverImage, and about-section details. The JSON also includes information for every post on the page, such as comments, mediaURLS, reactionCounts, and sharesCounts. We can easily access these JSON fields and use the scraped data as needed.
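When the response is JSON, the scraped data sits inside a response envelope. The sketch below makes three assumptions: the envelope has `pc_status` and `body` fields, `body` holds the scraper's output, and the scraper is named `facebook-page`. Check all of these against the Crawling API scrapers documentation. The sample response is hypothetical and only reuses field names mentioned above.

```javascript
// Sketch: pull the scraped data out of a Crawling API JSON response.
// Assumes an envelope like { pc_status, original_status, url, body }, where
// `body` holds the output of the scraper named in the `scraper` option.
const scraperOptions = { scraper: "facebook-page", format: "json" }; // scraper name is an assumption

function extractScrapedBody(rawJson) {
  const envelope = typeof rawJson === "string" ? JSON.parse(rawJson) : rawJson;
  if (Number(envelope.pc_status) !== 200) {
    throw new Error(`Scrape failed with pc_status ${envelope.pc_status}`);
  }
  return envelope.body;
}

// Hypothetical sample response using field names mentioned in the article.
const sample = JSON.stringify({
  pc_status: 200,
  original_status: 200,
  url: "https://www.facebook.com/example-page",
  body: { title: "Example", pageName: "Example Page", coverImage: "https://example.com/cover.jpg" },
});

const page = extractScrapedBody(sample);
console.log(page.pageName); // Example Page
```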

Several scrapers are available with the Crawling API; you can find them in the Crawling API scrapers documentation.

Getting a page screenshot along with the HTML

What if we also want to capture a screenshot of the page whose HTML we have crawled or scraped? The Crawlbase Crawling API covers this too. With the “screenshot” parameter, we get a JPEG screenshot of the crawled page. The Crawling API returns the “screenshot_url” in the response headers (or in the JSON response if you use format: JSON). Let’s add this parameter to the previous code example and see what happens.

```javascript
// Import the Crawling API
```

Output:

As you can see above, the “screenshot_url” field is also included in the response body. This URL expires automatically after 1 hour.
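Since the URL can arrive either in a response header or in the JSON body, a small helper can check both and record when the link stops working. This sketch assumes both the header and the JSON field are named `screenshot_url`, as described above, and uses the one-hour lifetime stated there. The plain-object response shape it accepts is an assumption.

```javascript
// Sketch: locate screenshot_url in a Crawling API response and compute its expiry.
// Assumes `headers` is a plain object with lowercase keys and `body` may be a JSON string.
const SCREENSHOT_TTL_MS = 60 * 60 * 1000; // the URL expires after 1 hour

function getScreenshotInfo({ headers = {}, body } = {}, receivedAt = Date.now()) {
  let url = headers["screenshot_url"];
  if (!url && body) {
    try {
      const parsed = typeof body === "string" ? JSON.parse(body) : body;
      url = parsed.screenshot_url;
    } catch {
      // HTML bodies are not JSON; continue without a URL.
    }
  }
  if (!url) return null;
  return { url, expiresAt: new Date(receivedAt + SCREENSHOT_TTL_MS) };
}
```

If you call the API with `fetch`, `Object.fromEntries(response.headers)` turns its headers into the plain object this helper expects.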

Link preview (Before Expiry):

Conclusion

Crawlbase Crawling API offers a powerful solution for overcoming the challenges of extracting dynamic content from platforms like Facebook. Its advanced features, including JavaScript handling and intelligent rate limits, provide an efficient and effective way to gather valuable insights. By following the step-by-step guide and utilizing the API’s built-in scrapers, users can navigate the complexities of data extraction and harness the full potential of dynamic content for informed decision-making. In the dynamic landscape of data-driven decisions, the Crawlbase Crawling API emerges as an essential tool for unlocking the depths of information hidden within platforms like Facebook.

Frequently Asked Questions

Q: How does the Crawlbase Crawling API handle Facebook’s infinite scrolling feature?

The Crawlbase Crawling API handles infinite scrolling on Facebook through its “scroll” parameter. When it is enabled, the API simulates scrolling on the page so that additional content loads and is captured, just as it would for a user scrolling down. The “scroll_interval” parameter controls how long the API scrolls (up to 60 seconds), letting you capture more content from pages with infinite scrolling.

Q: Can the Crawlbase Crawling API bypass Facebook’s anti-scraping mechanisms?

Yes, the Crawlbase Crawling API includes tools to address the anti-scraping measures Facebook employs. By rotating IP addresses and applying intelligent rate limits, the API keeps scraping activity smooth and reduces the chance of triggering anti-scraping mechanisms. This lets users extract data while minimizing the risk of being detected as a scraper by the platform.

Q: What advantages does the Crawlbase Crawling API’s built-in Facebook scraper offer?

The Crawlbase Crawling API’s built-in Facebook scraper simplifies the extraction process by directly providing meaningful content in JSON format. This eliminates the need for users to manually parse and filter raw HTML, making data extraction more efficient. The scraper is optimized for capturing relevant information from Facebook pages, ensuring users can quickly access the specific content they need for analysis and decision-making.